denoising-diffusion-pytorch


RuntimeError: Trying to resize storage that is not resizable

I want to use the Multi-Task Facial Landmark (MTFL) dataset to train a DDPM. I use the code below:

```python
from denoising_diffusion_pytorch import Unet, GaussianDiffusion, Trainer

model = Unet(
    dim = 64,
    dim_mults = (1, 2, 4, 8),
    flash_attn = True
)

diffusion = GaussianDiffusion(
    model,
    image_size = 128,
    timesteps = 1000,           # number of steps
    sampling_timesteps = 250    # number of sampling timesteps (using ddim for faster inference [see citation for ddim paper])
)

trainer = Trainer(
    diffusion,
    'images/AFLW',
    train_batch_size = 32,
    train_lr = 8e-5,
    train_num_steps = 700000,         # total training steps
    gradient_accumulate_every = 2,    # gradient accumulation steps
    ema_decay = 0.995,                # exponential moving average decay
    amp = True,                       # turn on mixed precision
    calculate_fid = True              # whether to calculate fid during training
)

trainer.train()
```

But it fails with the following error:

```
Traceback (most recent call last):
  File "train.py", line 29, in <module>
    trainer.train()
  File "/mnt/petrelfs/songwensong/denoising-diffusion-pytorch/denoising_diffusion_pytorch/denoising_diffusion_pytorch.py", line 1013, in train
    data = next(self.dl).to(device)
  File "/mnt/petrelfs/songwensong/denoising-diffusion-pytorch/denoising_diffusion_pytorch/denoising_diffusion_pytorch.py", line 60, in cycle
    for data in dl:
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/accelerate/data_loader.py", line 384, in __iter__
    current_batch = next(dataloader_iter)
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 633, in __next__
    data = self._next_data()
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 1345, in _next_data
    return self._process_data(data)
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/torch/utils/data/dataloader.py", line 1371, in _process_data
    data.reraise()
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/torch/_utils.py", line 644, in reraise
    raise exception
RuntimeError: Caught RuntimeError in DataLoader worker process 0.
Original Traceback (most recent call last):
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/torch/utils/data/_utils/worker.py", line 308, in _worker_loop
    data = fetcher.fetch(index)
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/torch/utils/data/_utils/fetch.py", line 54, in fetch
    return self.collate_fn(data)
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/torch/utils/data/_utils/collate.py", line 265, in default_collate
    return collate(batch, collate_fn_map=default_collate_fn_map)
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/torch/utils/data/_utils/collate.py", line 119, in collate
    return collate_fn_map[elem_type](batch, collate_fn_map=collate_fn_map)
  File "/mnt/petrelfs/songwensong/miniconda3/envs/ddpm/lib/python3.8/site-packages/torch/utils/data/_utils/collate.py", line 161, in collate_tensor_fn
    out = elem.new(storage).resize_(len(batch), *list(elem.size()))
RuntimeError: Trying to resize storage that is not resizable
```

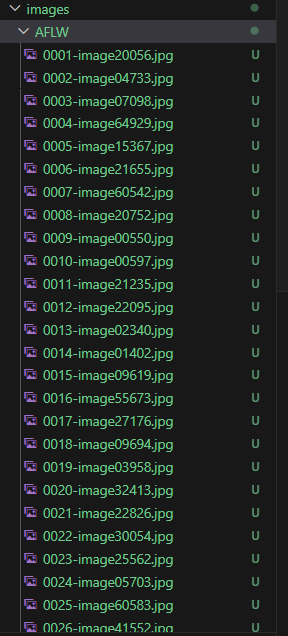

I don't know why, the project directory is as follow:


I had the same problem before; resizing all the images to the target size solved it. Hope it works for you too.
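A minimal sketch of that preprocessing step, assuming Pillow is installed and the images sit directly in one folder. The helper name `resize_folder`, the extension list and the output folder are hypothetical; `size=128` matches `image_size` in the question. It also converts every image to RGB so all outputs have three channels.

```python
from pathlib import Path

from PIL import Image

# Extensions to pick up (assumption: adjust to match your dataset).
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".tiff"}


def resize_folder(src_dir, dst_dir, size=128):
    """Hypothetical helper: save a size x size RGB copy of every image in src_dir into dst_dir.

    Returns the number of images written.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    count = 0
    for path in sorted(src.iterdir()):
        if path.suffix.lower() not in IMAGE_EXTS:
            continue  # skip directories and non-image files such as annotation text files
        with Image.open(path) as img:
            # Force three channels: grayscale ("L"), palette ("P") and RGBA all become RGB.
            # Plain square resize; it ignores aspect ratio, so crop first if that matters.
            resized = img.convert("RGB").resize((size, size))
        # Keep the original file name (and therefore the original file format).
        resized.save(dst / path.name)
        count += 1
    return count
```

Usage would be something like `resize_folder("images/AFLW", "images/AFLW_128")`, then passing `'images/AFLW_128'` to `Trainer` instead of the original folder.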

Try setting `pin_memory=False` and `num_workers=0` in the DataLoader.
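Why this helps, judging from the `collate_tensor_fn` frame in the traceback above: inside a worker process, `default_collate` allocates shared memory for the total number of elements in the batch and then resizes it to `len(batch)` times the shape of the first sample. If the first sample is larger than the others (for example RGB followed by grayscale), that resize does not fit and the shared storage cannot grow, which produces the opaque message. In the main process (`num_workers=0`) collation goes straight to `torch.stack`, which reports the mismatched shapes; `pin_memory` does not affect the collate step. A minimal sketch, assuming a recent PyTorch; `MixedChannelDataset` and `first_batch_error` are hypothetical names standing in for a folder with one RGB and one grayscale image.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class MixedChannelDataset(Dataset):
    """Hypothetical dataset: one 3-channel (RGB-like) sample, then one 1-channel (grayscale-like) sample."""

    def __init__(self, size=8):
        self.samples = [torch.zeros(3, size, size), torch.zeros(1, size, size)]

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, idx):
        return self.samples[idx]


def first_batch_error(num_workers):
    """Return the message of the RuntimeError raised while collating the first batch, or None."""
    loader = DataLoader(MixedChannelDataset(), batch_size=2,
                        num_workers=num_workers, pin_memory=False)
    try:
        next(iter(loader))
    except RuntimeError as err:
        return str(err)
    return None


if __name__ == "__main__":
    # Main process: torch.stack reports the actual shape mismatch.
    print(first_batch_error(num_workers=0))
```

With `num_workers=0` the printed message should say that `stack` expects each tensor to be of equal size and list the two shapes, which points directly at the offending images.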

In my case, this made the underlying error message appear. My dataset contained images with different numbers of channels (some were grayscale), so the problem was solved by converting every image to RGB in `__getitem__`: `img = Image.open(path).convert("RGB")`. Hope this helps.
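A minimal sketch of such a dataset, assuming Pillow, NumPy and PyTorch. The class name `RGBImageFolder` and its arguments are hypothetical; this is not the dataset class shipped with denoising-diffusion-pytorch. The key line is `.convert("RGB")` inside `__getitem__`; resizing to a fixed square additionally keeps the spatial size identical, so `default_collate` always receives tensors of shape `(3, image_size, image_size)`.

```python
from pathlib import Path

import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset


class RGBImageFolder(Dataset):
    """Hypothetical folder dataset that always yields (3, image_size, image_size) float tensors."""

    def __init__(self, folder, image_size, exts=(".jpg", ".jpeg", ".png", ".tiff")):
        self.paths = sorted(p for p in Path(folder).iterdir() if p.suffix.lower() in exts)
        self.image_size = image_size

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, index):
        with Image.open(self.paths[index]) as img:
            # Grayscale ("L"), palette ("P") and RGBA files all become 3-channel RGB here.
            rgb = img.convert("RGB").resize((self.image_size, self.image_size))
        # HWC uint8 pixels -> CHW float tensor scaled to [0, 1].
        array = np.array(rgb, dtype=np.float32) / 255.0
        return torch.from_numpy(array).permute(2, 0, 1)
```

Because every sample now has the same shape, batching works regardless of `num_workers`.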

@allglc Thanks to your suggestion, I was able to resolve this error in another project! Confusing error message, though.