enet

Packet loss when over VPN, not local

Hi, I'm facing an issue when ENet is flooding UDP packets on mobile: over a VPN, it hangs the app. So I'm revisiting the game loop and have made a simple PC repro.

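The game loop looks like this:

```cpp
while (true)
{
    bool polled = false;

    while (! polled)
    {
        // First drain events that are already queued on the host.
        if (0 >= enet_host_check_events (EnetClient_, & event))
        {
            // Nothing queued: send/receive on the socket without blocking (timeout 0).
            if (0 >= enet_host_service (EnetClient_, & event, 0))
                break;

            polled = true;
        }

        // handle event
    }
}
```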

When running locally, there is no problem (except for an occasional spike), but I discovered that when running over a VPN, there is packet loss that keeps increasing.

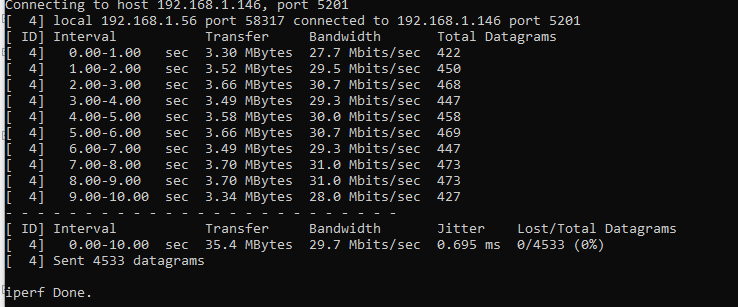

To make sure it is not the network, I tested with iperf3: it could run at up to 30 Mbit/s with zero loss.

At 40 Mbit/s, iperf3 shows 0.85% packet loss. My sample app does not even send more than 10 Mbit/s.

Repro

- Run server

- Run client

- Hold 1 for about a second on the client to send packets. It logs to memory to avoid allocation.

- Press L on the client to see the log. I keep track of the number of packets sent and received and their timing.

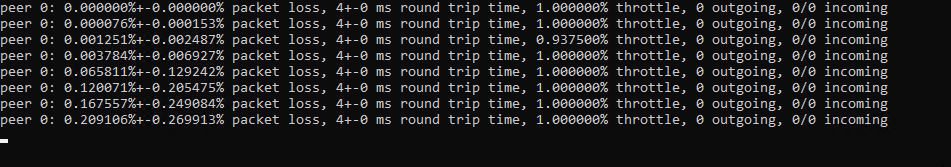

- Initially there is no packet loss. Repeat steps 3 and 4, and you will see it increase.

If you can build enet with the ENET_DEBUG define and then get me a log of the console output so I can have a general idea of what the throttle is doing, that would help me out before I dig into this further.

Server

Client

Have you tried adding an enet_host_flush after your service loop? The throttle looks fine, but it looks like you may somehow be falling behind on sending out packets and acknowledging them quickly enough. Also, try experimenting with a non-zero timeout on the enet_host_service call on the server side. Those seem to be the most obvious differences I see from Sauerbraten's ENet plumbing...

Yes, I have also tried flushing after sending packets; you can see that code commented out.

I tried enet_host_service (enetServer_, & event, 3), with timeouts up to 30, on the server side; no difference.

@imtrobin Can you give this fork a try and check whether the issue also occurs there? I was fighting a similar problem a while ago for a multiplayer game, so I'm very curious whether it works in your case.

@nxrighthere, I cannot compile cleanly with your ENet fork. Things like ENET_PACKET_FLAG_UNRELIABLE_FRAGMENT are not defined.

I see your ENet version is 2.4; is there a newer version somewhere?

We share a lot with the original ENet, but our fork was modified significantly. API there is slightly different, see the header. The application for testing is available here.

Compiled it, and I'm having the same issue.

We just tested it by transmitting data from the Netherlands to Germany and vice versa over a 1 Gbps pipe with RTT <30 ms, and in our case the drop rate is white noise; the connection is stable.

What VPN do you use? Where are the servers located? What's the quality of the network itself?

I would like to see a full traceroute; iperf3 is not really that helpful here.

It's a VPN from my home to my office, both within 10 km of each other, and traceroute shows only 2 hops. iperf3 shows no packet loss at 30 Mbit/s over 10 seconds; I ran it about 6 times to be sure.

0.85% packet loss with iperf3 within 10 km? Are you using a virtual machine or a bare-metal one?

It's a normal desktop PC. I'm using RDP to connect to it.

I think the traceroute is not accurate, because the traffic is probably tunneled.

I have noticed the same or a similar issue: ENet refuses to send reliable packets if the mobile device is connected via a WireGuard VPN (tested with Surfshark), but only on mobile devices (iOS and Android show the same behavior). It happens with larger packets (approaching DEFAULT_MTU or bigger; in my case I tested 1142 bytes of useful data with nxrighthere's fork, and anything smaller works fine).

I cannot seem to reproduce it on desktop devices (I tried both Windows and macOS, with the server running on Linux x64). Switching to OpenVPN UDP magically solves all the problems. This is seen both in the original ENet and in nxrighthere's version. While the original ENet disconnects the client with a timeout, nxrighthere's version just keeps the peer connected and sends packets on other channels normally.

host_service is run on a separate thread with a timeout of 1 ms on both client and server, everything is normal without using WireGuard VPN protocol.

I also have reports that the same behavior is seen on some UAE wifi networks.

Is there any specific useful information I can attach?

ENET_HOST_DEFAULT_MTU is set to 1400. This "MTU" value is used to fragment ENet packets, and the ENet packet fragments are the payload when sent over the network. Add the UDP and IPv4 headers and your packet becomes 1428 bytes. This is above the threshold of some VPN solutions, meaning the underlying stack has to further fragment the UDP packet, which might or might not work for reliable packets.
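A quick way to check that arithmetic: ENet's mtu bounds the UDP payload of each datagram, so the IPv4 packet handed to a tunnel is that value plus 8 bytes of UDP header and 20 bytes of IPv4 header (assuming no IPv4 options). This minimal sketch compares the result with a tunnel interface MTU such as WireGuard's 1420; the helper names are hypothetical, not part of ENet.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Header sizes in bytes; assumes an IPv4 header without options. */
#define IPV4_HEADER_BYTES 20u
#define UDP_HEADER_BYTES   8u

/* Hypothetical helper: size of the IPv4 packet produced when ENet sends a
 * full datagram of `enet_mtu` bytes (ENet's mtu caps the UDP payload). */
static uint32_t ipv4_packet_bytes(uint32_t enet_mtu)
{
    return enet_mtu + UDP_HEADER_BYTES + IPV4_HEADER_BYTES;
}

/* Hypothetical helper: true if a full-size ENet datagram fits through a
 * link (e.g. a VPN interface) without IP-level fragmentation. */
static bool fits_without_fragmentation(uint32_t enet_mtu, uint32_t link_mtu)
{
    assert(link_mtu >= UDP_HEADER_BYTES + IPV4_HEADER_BYTES);
    return ipv4_packet_bytes(enet_mtu) <= link_mtu;
}
```

With these helpers, an mtu of 1400 yields a 1428-byte packet that does not fit a 1420-byte interface, while 1392 yields exactly 1420 and fits.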

@seragh The IPv6 minimum MTU, guaranteed to pass everywhere, is 1280, which is what I have used quite successfully for many years now.

@mman If you further deduct the IPv6 and UDP header overhead, you certainly have a safe and future-proof default for ENET_HOST_DEFAULT_MTU. I wouldn't mind such a low default, but I suggest pairing it with a convenient helper function to adjust the value.

For background on how we discovered the root cause of the problem of packet loss over Wireguard VPN links with ENet, see here. Also note page two of that forum topic, where I demonstrate that adjusting the MTU used by ENet solves the problem.

lsalzman, thanks for merging seragh's pull request #222. It's part of the solution to this problem.

The other part of the solution is either sensing a correct MTU for the link or using a better default MTU. My Wireguard VPN has an MTU configured at the OS level of 1420 bytes.

1420 is picked specifically so that it fits into a 1500 byte packet with IPv6. If you run WG exclusively over IPv4, you can use up to 1440 https://www.mail-archive.com/[email protected]/msg07105.html

Note that the statement is referring to the MTU of the Wireguard interface at the OS level, not the MTU that ENet should use.

Wireguard header sizes https://www.mail-archive.com/[email protected]/msg01856.html
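The 1420 and 1440 figures follow from WireGuard's per-packet overhead: a 32-byte data-message overhead (4-byte type/reserved field, 4-byte receiver index, 8-byte counter, 16-byte Poly1305 tag) plus the outer UDP and IP headers, subtracted from the underlying link MTU. This sketch assumes a 1500-byte underlying link with no IPv4 options or IPv6 extension headers; the function name is illustrative.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define UDP_HEADER_BYTES    8u
#define IPV4_HEADER_BYTES  20u
#define IPV6_HEADER_BYTES  40u
/* WireGuard data message: type+reserved (4) + receiver index (4)
 * + counter (8) + Poly1305 authentication tag (16). */
#define WG_DATA_OVERHEAD   32u

/* Largest inner packet a WireGuard interface can carry when its outer
 * (encrypted) packets must fit in `outer_link_mtu` bytes. */
static uint32_t wireguard_interface_mtu(uint32_t outer_link_mtu, bool outer_is_ipv6)
{
    uint32_t outer_ip = outer_is_ipv6 ? IPV6_HEADER_BYTES : IPV4_HEADER_BYTES;
    uint32_t overhead = outer_ip + UDP_HEADER_BYTES + WG_DATA_OVERHEAD;
    assert(outer_link_mtu > overhead);
    return outer_link_mtu - overhead;
}
```

Over a 1500-byte link this gives 1420 when the outer transport is IPv6 and 1440 when it is IPv4, matching the figures quoted above.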

But it looks like that calculation ignores the case of IPv6 extension headers. I did some quick searching for documentation on extension headers.

fixed header of IPv6 packet: 40 bytes

Extension headers:

- Hop-by-hop options and destination options: 24 bytes
- Routing: 16 bytes (actually 24?)
- Fragment: 8 bytes
- Authentication header and encapsulating security payload (IPsec): potentially more than 16, or 14-269 bytes

Sources: https://en.wikipedia.org/wiki/IPv6_packet https://www.cisco.com/en/US/technologies/tk648/tk872/technologies_white_paper0900aecd8054d37d.html

Each extension header should appear at most once, except the Destination Options header, which may appear twice (once before the Routing header and once before the upper-layer header). https://www.geeksforgeeks.org/internet-protocol-version-6-ipv6-header/

IPv6 options are always an integer multiple of 8 octets long. https://docs.oracle.com/cd/E18752_01/html/816-4554/ipv6-ref-2.html

- Mobility header: doesn't currently allow data to be piggybacked on it, so I think it's basically just a protocol packet and therefore won't affect ENet's choice of default MTU.
- Host Identity Protocol: unknown size so far (see RFC 7401)
- Shim6 Protocol: unknown size so far (see RFC 5533)

Source: https://www.iana.org/assignments/ipv6-parameters/ipv6-parameters.xhtml

Path MTU discovery is recommended in the RFC for IPv6 for determining an appropriate MTU. But, simplified implementations "like a boot ROM" can use 1280 bytes, since it's the minimum MTU for IPv6. https://www.rfc-editor.org/rfc/rfc8200.html#section-5

UDP header: 8 bytes
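Putting those numbers together: the UDP payload left for ENet over IPv6 is the path MTU minus the 40-byte fixed header, minus each extension header actually present (each a multiple of 8 octets), minus the 8-byte UDP header. This sketch takes the extension header lengths as input rather than guessing them; the helper name is hypothetical, and the example sizes in the usage note are taken from the list above for illustration only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define IPV6_HEADER_BYTES 40u
#define UDP_HEADER_BYTES   8u

/* Hypothetical helper: UDP payload bytes (ENet's mtu) available on an IPv6
 * path of `path_mtu` bytes carrying the listed extension headers.
 * Returns 0 if the headers alone do not fit. */
static uint32_t ipv6_udp_payload_budget(uint32_t path_mtu,
                                        const uint32_t *ext_header_bytes,
                                        size_t ext_count)
{
    uint32_t used = IPV6_HEADER_BYTES + UDP_HEADER_BYTES;
    for (size_t i = 0; i < ext_count; ++i) {
        /* Extension headers are always a multiple of 8 octets long. */
        assert(ext_header_bytes[i] % 8u == 0);
        used += ext_header_bytes[i];
    }
    return path_mtu > used ? path_mtu - used : 0u;
}
```

At the 1280-byte IPv6 minimum, no extension headers leaves 1232 bytes; a single 8-byte fragment header leaves 1224; a 24-byte hop-by-hop header plus a fragment header leaves 1200.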

I don't know whether Wireguard will pass IPv6 packets that include extension headers. Can someone test it?

This information should be helpful in choosing a maximum MTU for ENet.

Another problem: the mtu variable in ENet seems to be misnamed or misused. It currently controls the maximum data size per packet (the UDP payload), but the standard RFC definition of MTU is the maximum total size of a packet, including headers. Should the variable be renamed, for example to mdu or data_mtu? Or should the variable keep the name mtu, with calculations done to estimate or sense packet header lengths, which are then used to compute how much data can be sent per packet?

The current minimum and maximum MTU values used by ENet are apparently based on the RFC standard definition of MTU for IPv4. "IPv4 mandates a path MTU of at least 576 bytes, IPv6 of at least 1280 bytes." https://stackoverflow.com/a/4218766

So, should ENet add a define for the minimum value MTU for IPv6?

I'm assuming that you intend to continue to support only IPv4 at this time. There are three significant issues that remain, and one minor issue:

- The default MTU is not suitable for avoiding fragmentation with Wireguard VPN use.

- There is no means for the application to choose the MTU at connection creation based on link or path conditions

- There is no means for the application to adjust the MTU after the connection has been established

- Minor issue: ENet's definition of MTU is not the same as the standard RFC definition

Fixing issue 1 is easy. I can write a pull request for it. Fixing issue 2 requires a decision. Do you want a PR that adds a function enet_host_create_ex which takes all user-configurable parameters? Note that if more user-configurable settings are added to the ENetHost structure, the function's signature will have to change. Example:

```c
ENET_API ENetHost * enet_host_create_ex (const ENetAddress *address, size_t peerCount, size_t channelLimit,
                                         enet_uint32 incomingBandwidth, enet_uint32 outgoingBandwidth,
                                         enet_uint32 mtu, size_t duplicatePeers,
                                         size_t maximumPacketSize, size_t maximumWaitingData);
```

Or, do you want a PR that adds a function enet_host_create_templated which takes a single parameter, an ENetHost structure with values configured by the user to override defaults. Only those elements that make sense to allow the user to configure would actually have an effect. This would prevent changes to the API even if more user-configurable settings are added to the ENetHost structure.

Fixing issue 3 would involve adding an API function to allow the application to adjust the MTU, and propagating that adjustment to the other peer of the connection. I would propose designing it similarly to the connection verification sequence, where the MTU is sent over the network and the minimum MTU of the two peers is ultimately chosen.

Fixing issue 4 involves a decision. Here are the options that I suggest: (a) ignore it; (b) change the names of the mtu element in ENetHost and the DEFAULT_MTU defines to something like mtu_data, data_mtu, or mdu; (c) change how the mtu variable is used to determine the maximum data size per packet, i.e., subtract the expected maximum header size from the mtu.

@lsalzman which options for solving each issue would you like to see in a pull request that I make?

You can already change the mtu by just altering the value of it in your host after enet_host_create. So, I opt for (a) ignore it.

lsalzman, you didn't read the whole comment. There are 4 issues. Option (a) only applies to the fourth issue that I listed.

I did. You can override the mtu by changing it on the host, which is used for both sides of the connection.

That would only address issues 2 and 4, provided that the documentation was updated to specify that users are allowed to overwrite that variable.

What about issue 3, adjusting the MTU after the connection has already been established? If one overrides the mtu element after the connection is established then it only has an effect on the local side of the connection.

If you want to do something like that, just tell the other side the mtu and have them fill it in. This is completely achievable at the user level since the field is wide-open to user modification.
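A minimal sketch of doing this at the user level, under assumptions: each side sends its preferred mtu as a 4-byte big-endian value in an ordinary application message, and both sides keep the smaller of the two, clamped to ENet's protocol range, similar to how ENet settles the mtu during the connect handshake. The message layout and helper names are hypothetical; the 576 and 4096 bounds mirror ENET_PROTOCOL_MINIMUM_MTU and ENET_PROTOCOL_MAXIMUM_MTU. Writing the agreed value into the peer is left as a comment, since that step depends on the application and ENet version.

```c
#include <assert.h>
#include <stdint.h>

/* Mirrors ENET_PROTOCOL_MINIMUM_MTU / ENET_PROTOCOL_MAXIMUM_MTU. */
#define SKETCH_MIN_MTU  576u
#define SKETCH_MAX_MTU 4096u

/* Hypothetical wire format: the sender's preferred mtu, 4 bytes big-endian. */
static void encode_mtu(uint32_t mtu, uint8_t out[4])
{
    out[0] = (uint8_t)(mtu >> 24);
    out[1] = (uint8_t)(mtu >> 16);
    out[2] = (uint8_t)(mtu >> 8);
    out[3] = (uint8_t)mtu;
}

static uint32_t decode_mtu(const uint8_t in[4])
{
    return ((uint32_t)in[0] << 24) | ((uint32_t)in[1] << 16) |
           ((uint32_t)in[2] << 8)  |  (uint32_t)in[3];
}

/* Both sides keep the smaller preference, clamped to the protocol range.
 * Applying it (assumption, not shown: writing it into the ENetPeer's mtu
 * field) is up to the application. */
static uint32_t agree_mtu(uint32_t local_mtu, uint32_t remote_mtu)
{
    uint32_t mtu = local_mtu < remote_mtu ? local_mtu : remote_mtu;
    if (mtu < SKETCH_MIN_MTU) mtu = SKETCH_MIN_MTU;
    if (mtu > SKETCH_MAX_MTU) mtu = SKETCH_MAX_MTU;
    return mtu;
}
```

For example, a side preferring 1400 that receives 1392 from its peer settles on 1392.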

Lowered the default MTU to 1392 since on balance that seems a reasonable enough change that should not be disruptive to most users.

This is good, thanks. The 0 A.D. development team (Wildfire Games) chose 1372 so that there is some headroom in case of other protocol overhead, but 1392 tests well with the default WireGuard settings and tunneled IPv4 only for now.
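Both values can be reproduced from WireGuard's default 1420-byte interface MTU: subtracting the inner UDP header and an inner IPv4 header gives 1392, while reserving room for an inner IPv6 header instead gives 1372. This is a reconstruction of the arithmetic from figures quoted in this thread, not a statement of how either project actually derived its number; the helper name is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define WG_DEFAULT_IFACE_MTU 1420u
#define UDP_HEADER_BYTES        8u
#define IPV4_HEADER_BYTES      20u
#define IPV6_HEADER_BYTES      40u

/* Largest ENet mtu (UDP payload) whose datagrams fit inside a tunnel
 * interface of `iface_mtu` bytes, given the inner IP header size. */
static uint32_t enet_mtu_inside_tunnel(uint32_t iface_mtu, uint32_t inner_ip_header)
{
    assert(iface_mtu > inner_ip_header + UDP_HEADER_BYTES);
    return iface_mtu - inner_ip_header - UDP_HEADER_BYTES;
}
```

With inner IPv4 the result is 1392 and with inner IPv6 it is 1372, which is why 1372 also leaves headroom for IPv4 traffic.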