Dockerize CI for robust local reproducers

Opening an issue to discuss the PR #1156 and capture it here so it can live beyond a PR.

I would suggest we modify https://github.com/llvm/torch-mlir/blob/main/build_tools/python_deploy/build_linux_packages.sh since it is used by the release scripts already. We should be able to expose the additional CI parameters https://github.com/llvm/torch-mlir/blob/31727f81d82537a6d30bb09b9c43d7ae2131ba40/.github/workflows/buildAndTest.yml#L15-L20 via any additional ENV variables.

Then when we get to run_on_docker() we branch out based on env vars to call the corresponding flavors of CI builds or Release builds. (https://github.com/llvm/torch-mlir/blob/31727f81d82537a6d30bb09b9c43d7ae2131ba40/.github/workflows/buildAndTest.yml#L50-L101 or the release build with https://github.com/llvm/torch-mlir/blob/31727f81d82537a6d30bb09b9c43d7ae2131ba40/build_tools/python_deploy/build_linux_packages.sh#L101-L105 )

Since the docker image itself is a variable you can override the environment variable with any CUDA docker image etc when you run it.

So locally when you want to build the CI versions you would do something like

TM_PYTHON_VERSIONS=cp310-cp310 MANYLINUX_DOCKER_IMAGE=nvidia/cuda:10.2-cudnn8-devel-ubuntu18.04 TORCH_BINARY=OFF ./build_tools/python_deploy/build_linux_packages.sh

We can have a list for all targets etc so you don't have to run it one by one.

Couple points to note: We may have to expose the .ccache directory inside the docker container since our CIs use ccache extensively.

Let me know if this makes sense if not we can discuss at the community meeting too. I am trying to get the Release builds to move to PyTorch source builds but have to deal with nested pip sandboxes since we build our pip package and pytorch also with pip but that should be independent of these changes.

Thanks @powderluv . This proposal makes sense overall, and would be a lot more customizable with minimum boilerplate. The main concern I have though is it doesn't provide a good mechanism to repro the CI run locally (which was the intent of the original PR). Because it relies on the GitHub Actions step to install initial dependencies (that's how it avoids needing a Dockerfile). Do you think we could dockerize that part, so we have the reproducer for local runs? cc: @silvasean @asaadaldien .

Yeah we can move the CI to run with the new docker images so they will all be reproducible locally.

Perfect. Just to confirm I understood:

- We'd be using a

Dockerfileto absorb these GHA setup steps:

- name: Set up Python

uses: actions/setup-python@v2

with:

python-version: 3.9

- name: Install MLIR Python depends

run: |

python -m pip install -r $GITHUB_WORKSPACE/externals/llvm-project/mlir/python/requirements.txt

shell: bash

- name: Install PyTorch nightly depends

run: |

python -m pip install -r requirements.txt

shell: bash

- name: Install Ninja

uses: llvm/actions/install-ninja@55d844821959226fab4911f96f37071c1d4c3268

- This Dockerfile (potentially one per platform) is used to build and launch containers for building+testing torch-mlir (using shell scripts that run on GHA CI and locally alike).

Does that sound correct? I think this would be a big step forward in providing a consistent way to reproduce failures locally, and also make sending PRs upstream a lot easier. WDYT?

Yes this sounds good. Just that the docker image we have already should be able to do all of this. It has all versions of Python we care about, and installing requirements can be done for build configs that require it (running through setup.py automates some of those aspects).

So essentially all your changes can start with one file: torch-mlir/build_tools/python_deploy/build_linux_packages.sh

And you should get to a point where you can run all the equivalents of the CIs currently locally via that script. Once that script is good it is just a matter of updating GHA.

Makes sense. Thank you for the clarifications and guidance!

We need to make sure we mount and hit ccache inside the docker when on GHA.

We need to make sure we mount and hit ccache inside the docker when on GHA.

After a few iterations on this, I am able to confirm that ccache is now configured to hit (~98.91% hit rate) even within the docker container on GHA. The CMake build step took <5 minutes (which otherwise needed >1.5 hours without ccache).

stats updated Sat Aug 6 14:27:28 2022

stats zeroed Sat Aug 6 14:20:32 2022

cache hit (direct) 2178

cache hit (preprocessed) 3

cache miss 24

cache hit rate 98.91 %

unsupported code directive 1

cleanups performed 0

files in cache 6678

cache size 498.6 MB

max cache size 2.0 GB

In general I'm seeing good first-signs-of-life for the dockerized GHA CI run (all unit tests passing). Do you know why I still see some integration tests failing? Maybe something off with the python setup? I'll continue to cleanup (reduce/eliminate Dockerfile, move setup steps to shell scripts that are docker run etc) and then look into these failures more.

are you on top of main that seems like an issue we just fixed

Yes, the last CI run (linked above) is from a rebase on https://github.com/llvm/torch-mlir/commit/5618890ca05db7336022f02224022db250a8e420 (current HEAD@main which is green). So should be some setup issue specific to my CI workflow.

Here's what I get when running them locally:

Process ForkProcess-15:

Traceback (most recent call last):

File "/usr/lib/python3.8/multiprocessing/process.py", line 313, in _bootstrap

self.run()

File "/usr/lib/python3.8/multiprocessing/process.py", line 108, in run

self._target(*self._args, **self._kwargs)

File "/opt/src/torch-mlir/torch-mlir/build/tools/torch-mlir/python_packages/torch_mlir/torch_mlir_e2e_test/torchscript/framework.py", line 357, in worker

sync_results.append(

File "<string>", line 2, in append

File "/usr/lib/python3.8/multiprocessing/managers.py", line 834, in _callmethod

conn.send((self._id, methodname, args, kwds))

File "/usr/lib/python3.8/multiprocessing/connection.py", line 206, in send

self._send_bytes(_ForkingPickler.dumps(obj))

File "/usr/lib/python3.8/multiprocessing/reduction.py", line 51, in dumps

cls(buf, protocol).dump(obj)

File "/usr/local/lib/python3.8/dist-packages/torch/multiprocessing/reductions.py", line 366, in reduce_storage

fd, size = storage._share_fd_cpu_()

RuntimeError: unable to write to file </torch_123_3393598452_36>: No space left on device (28)

No space left on device 😀

Yeah. Do the tests really need to write to disk? I see this error with local runs; GHA CI fails due to a crash that is unrelated to this though. Example:

FAIL - "AdaptiveAvgPool2dNonUnitOutputSizeDynamicModule_basic"

Runtime error: Testing process terminated. Either the compiler crashed or the compiled code crashed at runtime.

dunno about tests having to write to disk. I assume e2e tests would need it with all the downloading of models etc.

Interestingly, disabling multiprocessing (with -s as @silvasean suggested) fixes the unexpected failures in the end 2 end integration tests (now all pass or expectedly fail). So definitely something with multiprocessing is not playing well from inside a docker container. Before we can dockerize these runs, it'd be good to get to the bottom of this, unless we're okay disabling multiprocessing for CI runs. These are not very long running anyway - what was the motivation for multiprocessing here?

We disable it for macOS too. It is just to run the tests faster. How many cores do you have in the system ? Maybe it is dependent on the number of cores ?

Maybe it is dependent on the number of cores ?

It breaks both locally and on GHA runners, so I presume this is not something that could be fixed by changing number of cores or disk space. I tried to capture the stderr (which is also tricky due to multiprocessing enabled) but you can see from the logs that it is very fragile. I also see different tests fail in different runs.

Disabling multiprocessing, the workflow is green and completes in ~20 minutes.

Maybe worth trying a Ubuntu 22.04 with Python3.10 docker too (our CI is on those now). Worth digging into a little bit to see why we have this failure (since it will become 3x slower) if we run sequentially.

since it will become 3x slower

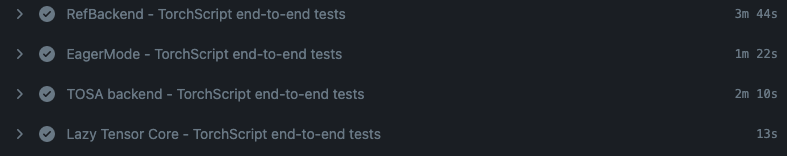

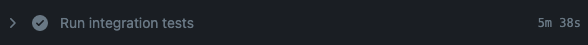

I'm seeing little to no degradation with multiprocessing disabled. In fact I am seeing speedups. For example these were observed from the same commit:

This is the runtime for E2E tests in existing CI (~total: 7 min 29 sec):

This is in docker CI (with multiprocessing disabled) (~total: 5 min 38 sec):

faster is good anyway we get it 👍

Yeah, Python MP is not great so I am not surprised it isn't better on 2 cores. +1 for limiting it.

I have a few follow-on questions about the different matrix options in CI.

- Am I understanding correct that today we only test

in-treeand llvmbinarybuilds + tests in CI? Because I see both build and test steps gated with

if: matrix.llvmtype == 'binary'

What is the out-of-tree workflow checking? Should we at least enable the build in CI (if not tests)? I think we could do so by removing this line.

- What is the motivation behind creating matrix options that are not exercised - like

llvmtypeandllvmbuildtypecould be collapsed into one?

llvmtype: [source, binary]

llvmbuildtype: [in-tree, out-of-tree]

exclude:

# No need for "out-of-tree LLVM and PyTorch source"

- llvmtype: source

llvmbuildtype: in-tree

- llvmtype: binary

llvmbuildtype: out-of-tree

The goal with the matrix build was mostly driven by fast-fail so the fastest CI will kill all others if it fails. Second goal was to mimic all the builds we had at that time (https://github.com/llvm/torch-mlir/actions/runs/2746164338) . Now that we have the matrix build and captured all the CI configs we can relax / constrain more easily.

The OOT builds didn't have tests running in the past so I set it up that way. OOT is mainly checking

- Can we build torch-mlir with latest clang (the same version in our repo)

- Can we build in OOT mode vs in-tree mode that is popular with vendors

Also see @silvasean suggesting on trimming tests https://discord.com/channels/636084430946959380/742573221882364009/1006350527895965807

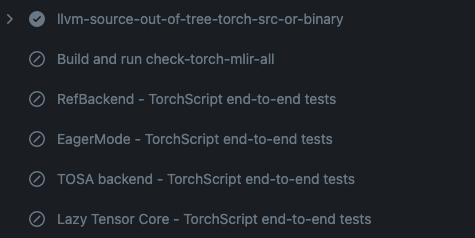

Thanks for the context. I understand excluding tests for OOT case, but it seems we're also excluding OOT build.

Can we build torch-mlir with latest clang (the same version in our repo)

FWICT we're not building torch-mlir OOT in CI. We're just "building llvm" and "configuring cmake to build torch-mlir", but the build command itself is gated:

- name: Build and run check-torch-mlir-all

if: matrix.llvmtype == 'binary'

run: |

cd $GITHUB_WORKSPACE

export PYTHONPATH="$GITHUB_WORKSPACE/build/tools/torch-mlir/python_packages/torch_mlir"

cmake --build build --target check-torch-mlir-all

So only the llvm/mlir repo is getting built, not torch-mlir. Is this a correct interpretation? Shouldn't we include building torch-mlir OOT in CI?

Yes looks like a bug. It should also build torch-mlir

Yes looks like a bug. It should also build torch-mlir

@powderluv Thanks for confirming. The fix was exceedingly simple :) PTAL: https://github.com/llvm/torch-mlir/pull/1188

We landed this a while ago!! Great work team!