Broadcast fails after a few segments when using -orchAddr domain.com

I was running a test stream this morning with a potential client who wants to use my O for 24/7 streaming, and the broadcast kept failing after a few segments, leaving the B in an unusable, stuck state. I had him try the IP address of one of my nodes instead, and then it worked.

Steps to reproduce the behavior:

Start the Broadcaster with the following command:

    .\livepeer -broadcaster -network arbitrum-one-mainnet -ethUrl https://arbitrum-mainnet.infura.io/v3/xxx -ethKeystorePath C:\Users\xxxx\.lpData\arbitrum-one-mainnet\keystore\UTC--2022-06-xxx -rtmpAddr 127.0.0.1:1935 -httpAddr 0.0.0.0:8935 -transcodingOptions P240p25fps16x9,P360p25fps16x9,P576p25fps16x9,P720p25fps16x9 -orchAddr solarfarm.multiorch.com

Expected behavior

The stream should work with the domain name specified in -orchAddr.

Screenshots

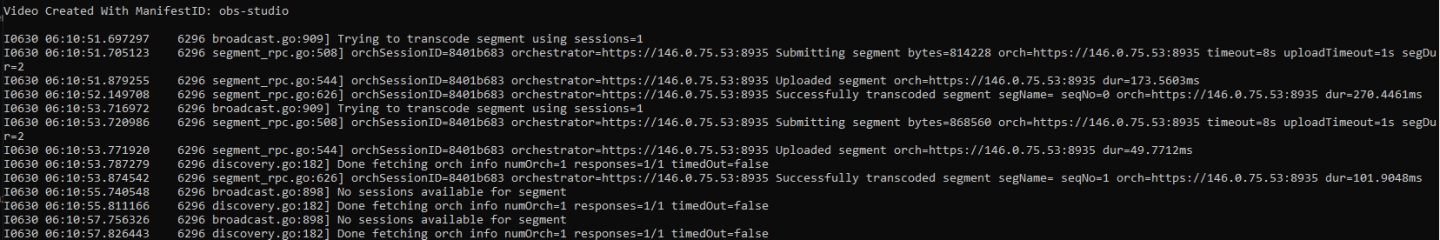

This is what the Broadcaster showed.

Additional context

Please see this Discord discussion from June 18th, where a few Os were testing a similar setup and saw the same behavior: https://discord.com/channels/423160867534929930/431166747085897740/987811196348559411

Log from an earlier test:

I think they are referring to this part, where it transcoded a few segments before losing its connection to the orchestrator.

    broadcaster | I0618 20:07:00.855896 1 segment_rpc.go:626] orchSessionID=3bc969d4 orchestrator=https://orch-chicago.xodeapp.xyz:8935 Successfully transcoded segment segName= seqNo=3 orch=https://orch-chicago.xodeapp.xyz:8935 dur=146.352305ms
    broadcaster | I0618 20:07:00.855946 1 drivers.go:210] Downloading uri=https://orch-chicago.xodeapp.xyz:8935/stream/3bc969d4/P360p30fps16x9/3.ts
    broadcaster | I0618 20:07:00.856062 1 drivers.go:210] Downloading uri=https://orch-chicago.xodeapp.xyz:8935/stream/3bc969d4/P240p30fps16x9/3.ts
    broadcaster | I0618 20:07:00.857979 1 drivers.go:228] Downloaded uri=https://orch-chicago.xodeapp.xyz:8935/stream/3bc969d4/P240p30fps16x9/3.ts dur=1.886509ms bytes=86104
    broadcaster | I0618 20:07:00.858498 1 drivers.go:228] Downloaded uri=https://orch-chicago.xodeapp.xyz:8935/stream/3bc969d4/P360p30fps16x9/3.ts dur=2.541427ms bytes=101332
    broadcaster | I0618 20:07:00.859177 1 census.go:1180] Logging SegmentDownloaded... dur=3.244259ms
    broadcaster | I0618 20:07:00.900413 1 broadcast.go:1266] Successfully validated segment
    broadcaster | I0618 20:07:01.185400 1 discovery.go:167] No orchestrators found, increasing discovery timeout to 1s
    broadcaster | I0618 20:07:01.185423 1 discovery.go:182] Done fetching orch info numOrch=1 responses=1/2 timedOut=true
    broadcaster | I0618 20:07:01.185461 1 broadcast.go:125] Ending session refresh dur=501.119618ms orchs=0
    broadcaster | E0618 20:07:01.694718 1 discovery.go:115] err="Could not get orchestrator orch=https://orch.xodeapp.xyz:8935: rpc error: code = Canceled desc = context canceled"

I've been running an endurance test locally with streamtester for almost 10 hours:

    ./streamtester -sim 10 -repeat 10000

So far I haven't encountered any abnormal behavior from B. One idea is that it may be related to O capacity. If new streams appear too quickly and the number of Os is limited, B can run out of sessions even though the number of parallel streams never exceeds capacity. This happens because when a stream stops, there's no way to know whether it will resume, so O keeps a T session reserved for it, with a timeout of 5 minutes, I believe. In production, where the number of Os is normally much larger than the number of streams, this should be unlikely.

Are you using a domain name for -orchAddr? It works fine when we use an IP address; we only see the issue when using a domain name.

I don't think it's a stream capacity issue. When we were testing, we hit the issue while sending only one session to an O that had no other work at the time.

Hmm, after further investigation, I think it's a race condition. Whether you use a domain name or an IP doesn't matter in itself; it just changes the latency enough for the error to appear. The issue seems to occur when segment transcoding finishes very quickly. I'm on it and hopefully will have a fix soon.

It turns out the domain name does matter after all, but indirectly, and the issue occurs on localhost as well. A fix is ready. Workaround for the current version: specify exactly the same address for -orchAddr on B and -serviceAddr on O. For example, 127.0.0.1 on one side won't match localhost on the other. For the setup above, this means passing -orchAddr solarfarm.multiorch.com on B and -serviceAddr solarfarm.multiorch.com on O.
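A minimal sketch of that workaround as command lines. The `...` is a placeholder (not a real argument) for whatever other flags each node is already started with, and starting the O with `-orchestrator` is an assumption about how that node is launched; the only point being illustrated is that the two address strings are identical.

```shell
# Broadcaster: "..." stands for the rest of the existing B flags (placeholder)
livepeer -broadcaster ... -orchAddr solarfarm.multiorch.com

# Orchestrator: "..." stands for the rest of the existing O flags (placeholder);
# -serviceAddr must be the exact same string that B passes to -orchAddr
livepeer -orchestrator ... -serviceAddr solarfarm.multiorch.com
```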

Thanks @papabear99 for spotting this; it was a particularly nasty bug that might have caused other, unnoticed job-routing issues.