cloudflow

Allow for the same application to be deployed in separate namespaces in a single k8s cluster

Is your feature request related to a problem? Please describe. Users have asked for this feature so that they can use a single Kubernetes cluster and Cloudflow environment to both test and run a production Cloudflow application.

Is your feature request related to a specific runtime of cloudflow or applicable for all runtimes? All runtimes.

Describe the solution you'd like I would like to be able to specify an environment configuration when deploying a Cloudflow application, so that the application is deployed into an environment-specific namespace and uses environment-specific Kafka topics.

Describe alternatives you've considered

Additional context I am open to suggestions. Users have requested this feature because not all customers have multiple separate K8s environments.

Hello!

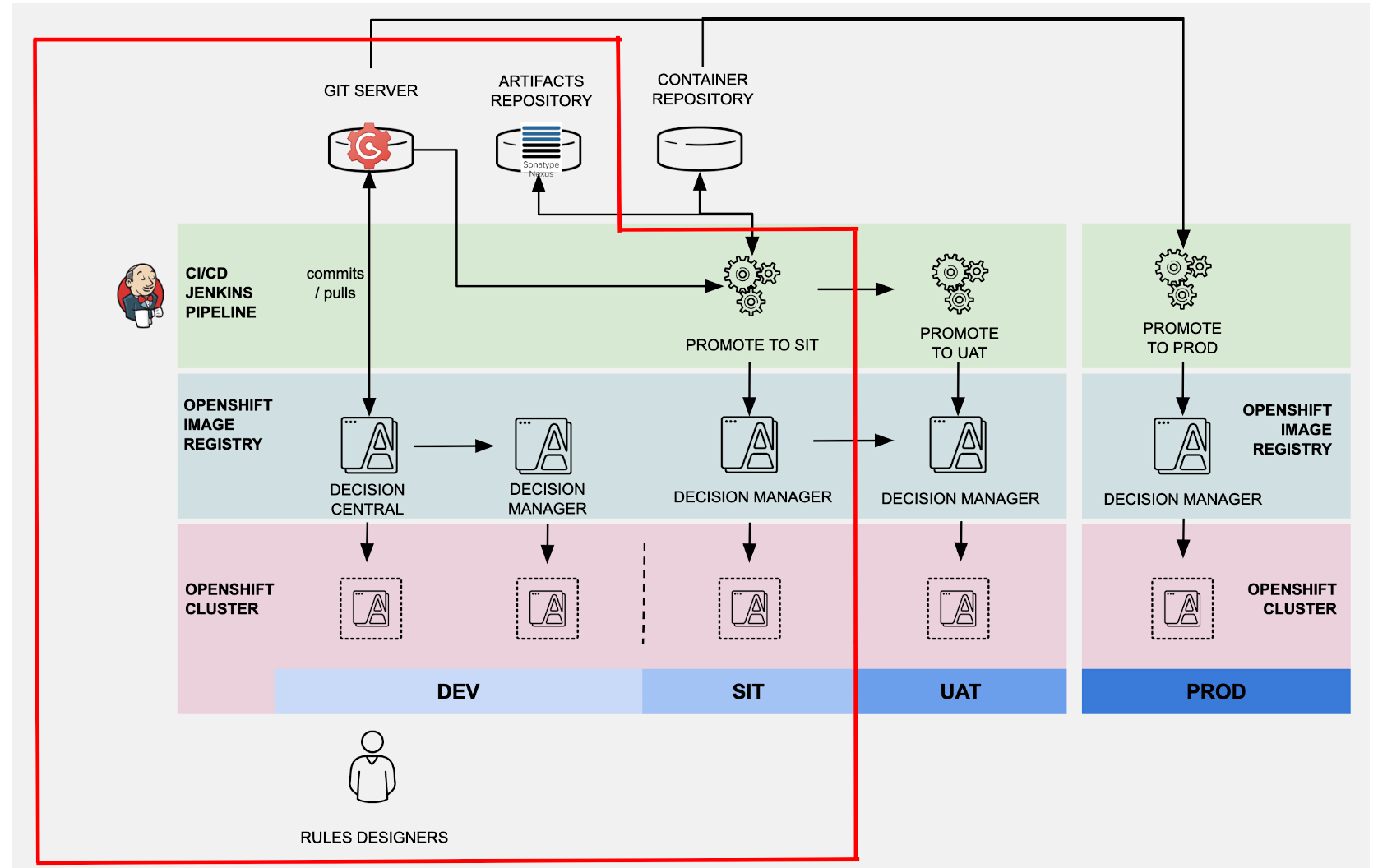

That would be great. For OpenShift this is the common case:

Possible solution: add a label or annotation to the Cloudflow custom resources that points to the Cloudflow instance. By default there is one instance of the "Cloudflow" custom resource, named "default", but separate instances could be installed for different environments (dev, sit, uat, and others). In that case we only need to change the Cloudflow label and the project name, and can keep the same topic names and other typical resources inside different Cloudflow instances. I think this is the simplest way: the Cloudflow Operator just needs to check this label/annotation before transforming a CloudflowApplication into FlinkApplications, KafkaTopics, and other resources.

Another important issue is delivering updates between the test and production Kubernetes clusters. The native way is to move the appropriate Docker images and apply a set of manifests specific to each environment. Is a similar approach currently possible in Cloudflow, and if not, how are updates supposed to be transferred between environments? Am I right that all environment-specific settings must be configured in the blueprint file, and that we need to store a different blueprint file for each environment separately (for example, in different Git branches)?
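To make the label idea concrete, here is a minimal sketch of the check an operator instance might run before processing a custom resource. It is not Cloudflow code: the label key `cloudflow.example/instance` and both function names are hypothetical, and the resource is modeled as a plain dictionary shaped like a Kubernetes manifest.

```python
from typing import Any, Mapping

# Hypothetical label key, used only for illustration.
INSTANCE_LABEL = "cloudflow.example/instance"
# The thread mentions a single default instance named "default".
DEFAULT_INSTANCE = "default"


def owning_instance(resource: Mapping[str, Any]) -> str:
    """Return the instance a resource is labeled for, or the default."""
    labels = resource.get("metadata", {}).get("labels") or {}
    return labels.get(INSTANCE_LABEL, DEFAULT_INSTANCE)


def should_reconcile(resource: Mapping[str, Any], instance_name: str) -> bool:
    """True if the operator for `instance_name` should handle this resource."""
    return owning_instance(resource) == instance_name


app = {
    "kind": "CloudflowApplication",
    "metadata": {"name": "sensor-app", "labels": {INSTANCE_LABEL: "dev"}},
}
print(should_reconcile(app, "dev"))  # handled by the "dev" operator
print(should_reconcile(app, "uat"))  # skipped by the "uat" operator
```

Unlabeled resources fall back to the "default" instance in this sketch, so an existing single-instance installation would keep working unchanged.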

The blueprint file can stay the same; you can keep versions of the configuration files for specific environments.

(where you keep the configuration files for environments is up to you)

Please see https://cloudflow.io/docs/current/develop/cloudflow-configuration.html
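As a rough illustration of "same blueprint, different configuration file", the sketch below builds a deploy command per environment. The directory layout (`environments/<env>.conf`) and the helper names are hypothetical, and the `--conf` flag is assumed from the configuration page linked above; check it against the CLI version you actually run.

```python
from pathlib import PurePosixPath
from typing import List


def config_file_for(env: str, config_dir: str = "environments") -> str:
    """Path of the configuration file kept for one environment (hypothetical layout)."""
    return str(PurePosixPath(config_dir) / f"{env}.conf")


def deploy_command(app_descriptor: str, env: str) -> List[str]:
    """Build the CLI invocation; the application descriptor is the same for every environment."""
    return [
        "kubectl", "cloudflow", "deploy", app_descriptor,
        "--conf", config_file_for(env),  # assumed flag, see lead-in
    ]


for env in ("dev", "test", "prod"):
    print(" ".join(deploy_command("target/my-app.json", env)))
```

Only the configuration file changes between environments here; the application descriptor, and therefore the images it references, stays the same.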

> the same topic names and other typical resources inside different cloudflow instances.

@jenoOvchi I'm not sure what you mean, since Kafka topics are a cluster-wide resource, not namespaced?

> (where you keep the configuration files for environments is up to you)

Am I right that in this case we need to rebuild all images for every environment?

> the same topic names and other typical resources inside different cloudflow instances. @jenoOvchi I'm not sure what you mean since kafka topics are a cluster-wide resource, not namespaced?

We can give namespaced privileges to Strimzi, because in the Cloudflow case there is no need to deploy Kafka resources to other namespaces.

@jenoOvchi I mean that it is problematic for a Cloudflow app in different environments, i.e. dev, test, etc., to use the same topics. So we need a solution for that, since you don't want a dev environment to write to the test environment, for instance.

One way would be to prefix the names of topics but that's not a great solution.
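For reference, the prefixing workaround could look like the sketch below. The function name and the `.` separator are illustrative choices, not anything Cloudflow provides; the validation reflects Kafka's topic naming rules (letters, digits, `.`, `_`, `-`, at most 249 characters).

```python
import re

# Kafka allows only these characters in topic names, up to 249 characters.
_LEGAL_TOPIC = re.compile(r"[A-Za-z0-9._-]+")
_MAX_TOPIC_LENGTH = 249


def env_topic(env: str, topic: str, separator: str = ".") -> str:
    """Prefix a blueprint topic name with the environment name."""
    name = f"{env}{separator}{topic}"
    if not _LEGAL_TOPIC.fullmatch(name) or len(name) > _MAX_TOPIC_LENGTH:
        raise ValueError(f"not a legal Kafka topic name: {name!r}")
    return name


print(env_topic("dev", "sensor-data"))
print(env_topic("test", "sensor-data"))
```

The same blueprint topic then maps to a distinct cluster-wide topic per environment, at the cost of every producer and consumer having to apply the same prefix.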

You don't need to rebuild images @jenoOvchi

> You don't need to rebuild images @jenoOvchi

Ok, thanks! We will try.

> @jenoOvchi I mean that it is problematic for a cloudflow app in different environments, ie dev, test etc, to use the same topics. So we need a solution for that since you don't want a dev env to write to the test env, for instance.

As a quick workaround, I think we could use the following approach:

- add to the Cloudflow Operator the ability to check an instance label;

- deploy several instances of Cloudflow, each with its own Cloudflow Custom Resource, to different namespaces, with multiple-instance mode for the Spark and Flink Operators and a namespace-scoped Strimzi Operator (this is possible with the "Deploying the Cluster Operator to watch a single namespace" option: https://strimzi.io/docs/0.16.2/full.html#deploying-cluster-operator-str);

- create Cloudflow configuration files for every Cloudflow instance, with instance-specific labels and environment variables;

- deploy these Cloudflow configuration files to the Kubernetes cluster. That creates namespaces for the Cloudflow pipelines, with Cloudflow Application Custom Resources. The Cloudflow Operators of these environments then create topics in their Kafka instances, as well as Flink and Spark Application Custom Resources, ConfigMaps, and other resources in their namespaces. The Spark and Flink Operators then create Deployments for their Custom Resources. Is this possible?