Integration with other private front-ends

I was so happy when the privacy community recognized the need for private front-ends to major services like YouTube, Twitter, and Google Search. Projects like these popped up and were (ab)used at such a massive scale that YouTube had to rate-limit Invidious and Instagram had to rate-limit Bibliogram (until the project was no longer viable for daily use). It brings me great joy to see such wide-scale adoption of these projects. But there's one more thing yet to do...

Integration

We see these amazing efforts by developers and their wonderful communities to develop and sustain each front-end. But all this thankless effort and momentum is being channeled into building standalone products. These private front-ends lack collaboration among them, and for good reason: sustaining these projects is no small thing. It takes active devs (who have their own personal lives), a positive and engaging community, and the deployment of several instances. Each is a herculean task by itself. Thus, cross-project collaboration is damn near impossible.

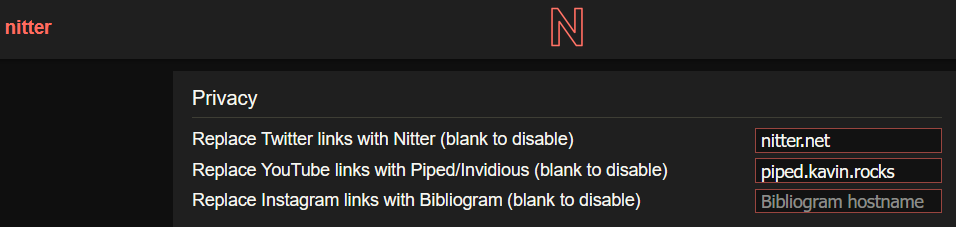

A small but significant step towards this is integrating the rest of these private front-ends into each of them. Each of the major services for which these private front-ends were built carries links to and embeds from the other major services. For example, on Reddit we often see posts linking a YouTube video, an Imgur album, or a Medium article. In Twitter bios, people often link their YouTube channels (we already see this integration in Nitter, where YouTube and Instagram links are replaced).

I think each standalone project has matured enough to be integrated into all the other front-ends. Projects such as Privacy Redirect and Untrack Me are hanging by a thread trying to accomplish the same thing, due to the sheer number of these private front-ends. It is clear why such projects are in huge demand: the community needed such integrations, and they delivered a solution. But they should not be the permanent solution, because their mere existence shows how disconnected these standalone private front-ends are, and how fiercely the community wants them to be integrated with each other.

Enough ranting, but moving forward we have to remember what drove the birth and success of these projects: choice, or rather the lack of any choice to use these major services without being tracked, without JS, or with customization. So, while the integration needs to happen, it should still be optional, i.e., some users may prefer YouTube links to Invidious links in Libreddit. Nitter executes this principle wonderfully by giving users the choice to turn such integrations off.

Gonna tag devs of such projects (@3nprob). I encourage others to tag people who can make this integration possible 🙏

And of course, @spikecodes if you think this isn't the place to post this, I'd be happy to take it down.

Appreciate the tag @obeho :)

I've been thinking about this a bit as well. Maybe we should set up some place for coordination (Matrix chat or an ActivityPub forum for example?)

Just to illustrate how differently this can look:

https://codeberg.org/teddit/teddit/pulls/249 https://github.com/searx/searx/pull/2724

Some random thoughts on this:

- Each of these altfronts will need to implement the same link-rewriting functionality. For some services it's as trivial as replacing a domain; for others, some aspect of the URL may need to be modified further.

- Embedded media is another aspect that may have some nuances

- There's significant duplicate work and it would be great if we can minimize that

- The solution should be aware of how links to different altfronts (e.g. Nitter) need to be rewritten, but it should not have hardcoded instance URLs.

- The obvious answer would be to create a shared library. However, due to the variety of programming languages (JS/TS, C#, Python, Ruby, Rust, Go, etc.), having to reimplement it in each language that is supposed to use it is IMO not feasible.

- It must be portable across CPU architectures (or at least x86/amd64/arm64, maybe armv7).

- It should not be a significant effort to integrate into existing altfronts.

- Care has to be taken to not make it vulnerable to exploitation due to the risk of processing arbitrary untrusted input strings
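To make the trivial "replace a domain" case concrete, here is a minimal sketch in Python. The host table and the `instances` argument are hypothetical names made up for illustration; the point is only that the instance comes from operator configuration rather than being hardcoded, and that unknown or unconfigured links pass through untouched.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical table mapping upstream hosts to a service key.
UPSTREAM_HOSTS = {
    "youtube.com": "youtube",
    "www.youtube.com": "youtube",
    "twitter.com": "twitter",
    "www.twitter.com": "twitter",
    "reddit.com": "reddit",
    "www.reddit.com": "reddit",
}


def rewrite_url(url: str, instances: dict[str, str]) -> str:
    """Swap the host of a known upstream URL for the configured altfront host.

    `instances` maps a service key to the operator's preferred instance host.
    URLs for unknown services, or services without a configured instance,
    are returned unchanged.
    """
    parts = urlsplit(url)
    service = UPSTREAM_HOSTS.get((parts.hostname or "").lower())
    target = instances.get(service) if service else None
    if parts.scheme not in ("http", "https") or target is None:
        return url
    # Keep path, query and fragment exactly as they were; only the host changes.
    return urlunsplit(("https", target, parts.path, parts.query, parts.fragment))


configured = {"youtube": "invidious.example"}  # placeholder instance host
print(rewrite_url("https://www.youtube.com/watch?v=abc", configured))
```

Services whose URL structure differs from the upstream one would need more than this host swap, which is exactly the part that is hard to share across projects.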

So the options I see:

1. A native library with bindings for user languages

2. A minimal stateless microservice that can be hosted together with any altfront to rewrite URLs:
   - 2a. ...called for each URL
   - 2b. ...fed the HTTP response intended for the user, rewriting any links therein before returning it

3. 2b, but instead an HTTP proxy sitting in front, just transparently rewriting responses.

There are pros and cons of each.

I'm personally leaning towards 3:

- It could guarantee everything is rewritten - no room for missed cases

- Transparent for each altfront: 0 new code or configuration needed for each service, it would just be a matter of putting it instead of/between whatever reverse proxy is used

In fact, we may not even need to write new software; a community-managed configuration and setup for privoxy could solve all of this. We could also leverage all the security work and existing effort of the privoxy implementers. I think an ansible playbook and a Dockerfile would make this very accessible for new and existing hosters.

My pleasure 😊 @3nprob Thank you for engaging 🙏

I'm glad to hear that. I saw the yaml file discussion with @return42 and it looks similar to what Privacy Redirect is doing (replacing the hostname), it seems like a great start.

Though most of your comment went over my head on my first read, I got the gist of it, and it felt like reading one of those IETF RFCs.

I should say, the idea of all these altfronts (thanks for this word) being served by a centralized server is ambitious. As you've pointed out, building and implementing it would need a lot of collaboration, but I think that needs to happen in a public forum (preferably GH/GL/CB/SH) rather than an obscure chat room on Matrix or ActivityPub (never heard of it). Because if this goes forward, it'll be the most daunting step taken by a decentralized and disorganized open-source community, and it will define the collaborative efforts between projects in the future. Simply put, this is too big a deal for a fuck up.

This will take months of effort just to gather the right set of people (without a quorum there's no use). Until then, the trivial things that each altfront can do, like replacing the domain and/or other modifications that their devs can think of, should be done in parallel, independently of the progress of this collaborative effort. Whichever way the wind blows, I strongly think that this integration shouldn't be viewed as a binary thing, but rather as a progress bar that doesn't have an end.

Hey @obeho and @3nprob! :wave:

I appreciate the detailed messages explaining the importance and possible implementations of this integration policy. Though, I wonder if rewriting links to other altfronts would be worth it considering users could simply install Privacy Redirect to achieve the same goal across the internet.

If this was to be added, I don't think a third party proxy/frontend/HTTP API should be used. Instead, I think it would be fine for Libreddit to support this natively as Nitter does.

Hey everyone, great initiative! Supporting this in Nitter has always been important to me, so this is very nice to see.

A concern I'd like to voice is differences in URL rewriting rules between different projects for the same service. I'm working on adding support for replacing Reddit links to Nitter, but there doesn't seem to be a way to support v.redd.it links uniformly between Teddit and Libreddit. With Teddit you can do teddit.net/vids/<id>.mp4, but Libreddit doesn't support this, and it seems there's no way to take that video ID and turn it into a path Libreddit understands. This leaves me with three options: drop support for rewriting video links, turn it into two separate replacement options (e.g. "Replace Reddit links (Teddit)"), or add a separate toggle that specifies whether it's Teddit or Libreddit. None of these are optimal of course, so I'm likely going to just not support v.redd.it replacements for the time being.

Another case is Invidious and Piped, though I'm not aware of any differences there (speaking of Piped, maybe @FireMasterK has some thoughts on the matter). Similar issues are likely going to arise with other "competing" projects, so it would be nice to talk about how to deal with this issue.

Hey @zedeus! :wave:

> but Libreddit doesn't support this

Actually it does! The format is <instance>/vid/<id>/<resolution>.mp4. Example:

https://libreddit.spike.codes/vid/up2akh3qsx781/1080.mp4

Libreddit can currently proxy any Reddit official link except for their AWS emoji storage.

Cool, how strict is the resolution part? Will it fail if 1080 isn't available, or is there a fallback mechanism? Would you be willing to support the Teddit-style endpoint as a redirect for standardization purposes?

> Cool, how strict is the resolution part? Will it fail if 1080 isn't available, or is there a fallback mechanism?

Yes, it will fail if the requested resolution isn't available from Reddit.

> Would you be willing to support the Teddit-style endpoint as a redirect for standardization purposes?

Sorry but I don't think that would be possible as Libreddit fetches videos on demand (meaning it needs a resolution to include in the v.redd.it url) as opposed to Teddit's fetching and downloading in advance.
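To illustrate the difference discussed above, here is a rough Python sketch of what a rewriter has to do for `v.redd.it` links, using only the two URL shapes mentioned in this thread. The function name, the `frontend` switch, and the default resolution are assumptions made for illustration; since Libreddit fails when the requested resolution isn't available, a real implementation would need a better way to pick one.

```python
import re

# Matches https://v.redd.it/<id>, capturing the video ID.
V_REDD_IT = re.compile(r"https?://v\.redd\.it/([A-Za-z0-9]+)/?")


def rewrite_video(url: str, frontend: str, instance: str, resolution: str = "720"):
    """Return the altfront URL for a v.redd.it link, or None if it isn't one.

    `frontend` selects the URL shape:
      teddit:    <instance>/vids/<id>.mp4
      libreddit: <instance>/vid/<id>/<resolution>.mp4
    The default resolution is a guess, not something either project defines.
    """
    match = V_REDD_IT.fullmatch(url)
    if match is None:
        return None
    video_id = match.group(1)
    if frontend == "teddit":
        return f"https://{instance}/vids/{video_id}.mp4"
    if frontend == "libreddit":
        return f"https://{instance}/vid/{video_id}/{resolution}.mp4"
    raise ValueError(f"unknown frontend: {frontend}")


print(rewrite_video("https://v.redd.it/up2akh3qsx781", "teddit", "teddit.net"))
print(rewrite_video("https://v.redd.it/up2akh3qsx781", "libreddit", "libreddit.spike.codes", "1080"))
```

The `frontend` parameter is the "separate toggle" option in code form: the caller has to know which project runs on the target instance.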

@zedeus This is part of why I'm arguing that there should be a way for the different projects to share efforts and take the implementation concern away from the altfronts themselves, and a separate proxy is the best I've been able to come up with so far. Especially as the amount of online BS, the number of altfronts, and the attempts by corporations to nip them in the bud all grow, just keeping up will be a big effort.

Meanwhile I do find it great and commendable that for now @spikecodes is willing to shoulder this for libreddit. And there is no harm done in having redundant rewriting or proxying done at two points in the request-response cycle (apart from inefficiency, but IMO that's negligible and a worthwhile tradeoff).

As for differences between overlapping implementations like teddit/libreddit: ideally, I think implementations should as far as possible strive to handle HTTP requests transparently, exactly as if they were sent to the downstream service, so that you just swap out the host part.

When this is not the case for some practical reason, hopefully maintainers are open to aligning with each other so different implementations are interchangeable.

In the video example above, I think teddit could still be made to support the libreddit-style URL despite how media is proxied? So far the author has been very approachable and a pleasure to interact with so if someone starts working on that (or even the bigger refactoring effort involved in addressing how it handles proxying of media, as it results in degraded UX) I don't think it should be an issue.

@obeho What I'm proposing isn't as big of an effort as I think you take it to be, and it can be made fairly modular and independent. Basically there are two options. Today a canonical deployment would look like this (LB here is some form of reverse proxy like nginx/caddy/haproxy/envoy/traefik, using libreddit as the example; rewriteproxy in the options below could be a deployment of privoxy, an extended morty, or similar):

Request: Browser -> LB -> libreddit -> reddit.com

Response: Browser <- LB <- libreddit <- reddit.com

Option 1

In this case, libreddit gets the response already rewritten. It would need to support connecting through a cleartext HTTP proxy (or trust an alternative TLS cert, but I have a feeling this will be a hassle and confusing for many implementors).

Request: Browser -> LB -> libreddit -> rewriteproxy -> reddit.com

Response: Browser <- LB <- libreddit <- rewriteproxy <- reddit.com

This approach is already taken by searx, using morty as the rewriteproxy to strip tracking-parts etc from links in response.

Option 2

Here, libreddit does not need to do anything at all; it returns the links as-is and they get rewritten before the response is handed back to the user.

Request: Browser -> LB -> rewriteproxy -> libreddit -> reddit.com

Response: Browser <- LB <- rewriteproxy <- libreddit <- reddit.com

Hope that makes sense.
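For Option 2, the core of what the rewriteproxy does to each response body could look roughly like the Python sketch below. It is not how privoxy or morty work internally; the regex-based attribute matching is a deliberate simplification (a real proxy would want a proper HTML parser), and the host mapping is a hypothetical operator-supplied config.

```python
import re
from urllib.parse import urlsplit, urlunsplit

# Simplistic: only double-quoted, absolute href/src attributes are considered.
LINK_ATTR = re.compile(r'\b(href|src)="(https?://[^"]+)"')


def rewrite_body(html: str, hosts: dict[str, str]) -> str:
    """Rewrite absolute links in an HTML body according to `hosts`.

    `hosts` maps an upstream hostname to the altfront hostname that should
    replace it. Links to any other host are left exactly as they were.
    """

    def swap(match: re.Match) -> str:
        attr, url = match.group(1), match.group(2)
        try:
            parts = urlsplit(url)
        except ValueError:
            return match.group(0)  # malformed URL: leave untouched
        new_host = hosts.get((parts.hostname or "").lower())
        if new_host is None:
            return match.group(0)
        rewritten = urlunsplit(("https", new_host, parts.path, parts.query, parts.fragment))
        return f'{attr}="{rewritten}"'

    return LINK_ATTR.sub(swap, html)


page = '<a href="https://www.youtube.com/watch?v=abc">video</a>'
print(rewrite_body(page, {"www.youtube.com": "invidious.example"}))
```

The altfront behind the proxy stays unaware of any of this, which is the appeal of the approach; the cost is that the proxy has to parse every response.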

I love the initiative, but I do see a few problems in the suggested solutions so far.

I personally don't like the idea of a "rewriteproxy", since it doesn't allow users to select an instance, doesn't work with all implementations (e.g. Piped), and adds unnecessary complexity to an instance setup guide.

My ideas on solutions:

- Some kind of specification on how URLs should be rewritten in front-ends (and fixed URL patterns for front-end software to follow)

- A ruleset for rewriting URLs, which has to be processed by the frontend, similar to the previous idea, for example:

    {
      "youtube": [
        {
          "regex": "(?:https?:\\/\\/)?(?:www\\.)?(?:youtu\\.be)\\/([a-zA-Z0-9_-]{11})",
          "subs": "$frontend/watch?v=$1" // where an eg for $frontend would be: https://piped.kavin.rocks
        }, // add more for each type of URL
      ]
    }

In this idea, I think it should be feasible to automate support for a lot of frontends.
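As a rough idea of how little code a front-end would need to consume such a ruleset, here is a Python sketch. The rule shape (`regex`, `subs`, `$frontend`, `$1`) follows the example above; the function names are made up, and the ID character class here also admits `_` and `-`, since YouTube video IDs can contain both.

```python
import re

# Ruleset in the shape proposed above, written as a Python dict.
RULES = {
    "youtube": [
        {
            "regex": r"(?:https?://)?(?:www\.)?youtu\.be/([a-zA-Z0-9_-]{11})",
            "subs": "$frontend/watch?v=$1",
        },
    ],
}

# Placeholders allowed in "subs": $frontend and numbered groups like $1.
PLACEHOLDER = re.compile(r"\$(frontend|\d+)")


def apply_rules(url: str, service: str, frontend: str, rules: dict = RULES) -> str:
    """Return the rewritten URL for the first matching rule, else `url` unchanged."""
    for rule in rules.get(service, []):
        match = re.fullmatch(rule["regex"], url)
        if match is None:
            continue

        def fill(placeholder: re.Match) -> str:
            name = placeholder.group(1)
            if name == "frontend":
                return frontend
            return match.group(int(name)) or ""

        return PLACEHOLDER.sub(fill, rule["subs"])
    return url


print(apply_rules("https://youtu.be/abcDEF_-123", "youtube", "https://piped.kavin.rocks"))
```

Using `re.fullmatch` means a rule only fires when the whole string is a link of that shape, which avoids rewriting URLs that merely contain a matching substring.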

@FireMasterK Thanks for the feedback. I do think that the added infrastructure complexity can be addressed by providing setup scripts, a docker-compose.yaml, etc., along with clear documentation. Given that many projects already carry complexity that is actually unnecessary (e.g. using Redis for caching while already using nginx, rather than just configuring nginx properly), I don't think it should be that big of a deal.

> since that doesn't allow users to select an instance

I think that this can be addressed by providing a preferred instance in an HTTP header or similar. But that's the kind of functionality I think can be added at a later stage. And, ideally, it would already by default be an instance hosted alongside the entrypoint. Either way with option 1 above, the power is still always with the entry altfront. One can also do cool things like out-of-the-box load-balancing between different services for increased availability and decentralization, and routing through VPNs etc. In either case I hope we agree that it's always desirable that those who have the means will self-host and can configure to their desires on their own server.
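A minimal sketch of the header idea in Python, to show it doesn't have to be complicated. The header name `X-Preferred-Instance` is entirely made up, and the allowlist is an assumption on my part so that a client can't point the proxy at an arbitrary host.

```python
# Hypothetical header name; nothing standardizes this today.
HEADER_NAME = "X-Preferred-Instance"


def pick_instance(headers: dict[str, str], default: str, allowed: set[str]) -> str:
    """Choose the altfront instance for this request.

    The user's preference is honoured only if it is on the operator's
    allowlist; otherwise the operator's default instance is used.
    """
    # HTTP header names are case-insensitive, so normalise before the lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    requested = lowered.get(HEADER_NAME.lower(), "").strip().lower()
    return requested if requested in allowed else default


allowed_instances = {"piped.example", "invidious.example"}  # placeholder hosts
print(pick_instance({"x-preferred-instance": "piped.example"}, "invidious.example", allowed_instances))
```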

Would you be able to shed some light on what the issue with Piped would be? I might just get to trying it out in a bit (if it's successful I think that's a better way to make the point than only bikeshedding anyway ;)), so a heads-up on pitfalls would be helpful.

I also considered the same idea you're proposing but discounted it because:

- Good luck arriving at a spec that captures all edge cases, and getting everyone to implement it identically. We're a very fragmented bunch.

- I'm quite concerned about the potential for DoS and even worse security vectors (extracting server secrets, remote code execution) introduced by everyone implementing and running complex regex transformations (especially on URLs derived from untrusted sources, and whose output will be outgoing requests to untrusted endpoints). Just look at the log4j incident, and that was just logs! Historically these kinds of mechanisms have resulted in CVEs time and again, even when done by professionals with proper expertise. It's hard to get right, and many libraries and execution environments have gotchas that we can't expect FLOSS contributors to master. Right now most of these projects are a bit under the radar and aren't popular targets; this will probably change. I want the happy enthusiast rookie coder to be able to contribute code without requiring thorough review of these things.
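To give an idea of the kind of guard I mean, here is a Python sketch of a pre-check that runs before any rewriting logic sees a URL. The length cap and the function name are arbitrary choices for illustration, and this only narrows the attack surface; it is not a complete defense.

```python
from urllib.parse import urlsplit

MAX_URL_LENGTH = 2048  # arbitrary cap; bounds the work any later regex can do


def is_safe_candidate(url: str, known_hosts: set[str]) -> bool:
    """Cheap checks on an untrusted URL before it reaches any rewrite rule."""
    if len(url) > MAX_URL_LENGTH:
        return False
    try:
        parts = urlsplit(url)
    except ValueError:
        return False  # e.g. malformed IPv6 literal
    if parts.scheme not in ("http", "https"):
        return False  # rejects javascript:, data:, file:, ...
    if parts.username or parts.password:
        return False  # no embedded credentials
    # Exact host comparison instead of a pattern match on the raw string.
    return (parts.hostname or "").lower() in known_hosts


hosts = {"youtu.be", "www.youtube.com"}
print(is_safe_candidate("https://youtu.be/abc", hosts))
print(is_safe_candidate("javascript:alert(1)", hosts))
```

Every project reimplementing its own version of checks like these is the scenario I'd like to avoid.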

This project is quite similar to the gateway proposal: https://github.com/benbusby/farside