Asynchronous vectorized environment does not work for cavia_trpo and maml_trpo.

Hi, first of all, thank you so much for such an excellent project! I have run into a problem and really need your help:

When I run cavia_trpo.py and maml_trpo.py, I get the following error:

```
/Users/yurunsheng/anaconda3/envs/marl/lib/python3.8/site-packages/gym/logger.py:30: UserWarning: WARN: Box bound precision lowered by casting to float32
  warnings.warn(colorize('%s: %s'%('WARN', msg % args), 'yellow'))
Traceback (most recent call last):
  File "
```

However, it runs properly in maml_dice.py.

Is there any difference between cavia_trpo.py and maml_dice.py in how they set up the asynchronous vectorized environment, and how can I solve this problem? I am using macOS 10.13.6 with Python 3.8.

Here is where the bug appears:

```python
import multiprocessing as mp

import gym


class SubprocVecEnv(gym.Env):

    def __init__(self, env_factory, queue):
        self.lock = mp.Lock()
        # One pipe per environment: the parent keeps `remotes`, the workers get `work_remotes`
        self.remotes, self.work_remotes = zip(*[mp.Pipe() for _ in env_factory])
        # EnvWorker is not shown in this excerpt
        self.workers = [EnvWorker(remote, env_fn, queue, self.lock)
                        for (remote, env_fn) in zip(self.work_remotes, env_factory)]
        for worker in self.workers:
            worker.daemon = True
            worker.start()
        for remote in self.work_remotes:
            remote.close()
        self.waiting = False
        self.closed = False

        self.remotes[0].send(('get_spaces', None))  # the bug appears here
        observation_space, action_space = self.remotes[0].recv()
        self.observation_space = observation_space
        self.action_space = action_space
```

I also found that @yaoxunji ran into a similar problem in https://github.com/learnables/learn2learn/issues/184. Could our problems be related?

Thank you so much for your attention!

Best, Runsheng

BTW, today I checked the code and found that the difference is:

In maml_dice.py:

```python
def make_env():
    return gym.make(env_name)
```

compared with maml_trpo.py:

```python
def make_env():
    env = gym.make(env_name)
    env = ch.envs.ActionSpaceScaler(env)
    return env
```

So I changed make_env() in maml_trpo.py accordingly, and it now seems to run normally. As far as I can tell, ch.envs.ActionSpaceScaler() is just a wrapper that scales the action space. Why does maml_trpo.py use ActionSpaceScaler() for the same particle-2D environment while maml_dice.py doesn't?
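For context, a common way to build an action-space scaler is to let the policy output actions in a fixed range such as `[-clip, clip]` and map them linearly onto the environment's real bounds. The sketch below illustrates only that idea with a hypothetical helper, `rescale_action`; it is an assumption for illustration, not cherry's actual `ActionSpaceScaler` code.

```python
def rescale_action(action, low, high, clip=1.0):
    """Map each component of `action` from [-clip, clip] onto [low, high].

    Hypothetical helper for illustration; not cherry's implementation.
    """
    scaled = []
    for a, lo, hi in zip(action, low, high):
        # Linear map: -clip -> lo, +clip -> hi
        x = lo + (a + clip) * (hi - lo) / (2.0 * clip)
        # Clamp in case the policy produced an out-of-range action
        scaled.append(min(max(x, lo), hi))
    return scaled


# A 2-D action in [-1, 1] mapped onto the bounds [0, 10] and [-2, 2]
print(rescale_action([1.0, 0.0], low=[0.0, -2.0], high=[10.0, 2.0]))  # [10.0, 0.0]
```

Because the wrapper changes `action_space`, code that wraps an environment this way usually also needs the attribute delegation discussed below, so that other attributes of the inner environment stay reachable.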

Thank you again! Runsheng

Hello @yurunsheng1 ,

Thanks for the bug report. As you saw in the cherry issue, it seems to affect only some users, and I don't know what the cause might be. I'd be happy to merge a fix if you find one.

Hi @seba-1511 ,

I found that simply commenting out (deleting) the `__getattr__()` method in https://github.com/learnables/cherry/blob/master/cherry/envs/base.py solves my problem.

But I am not sure whether this brute-force change introduces any risks.

What is the `__getattr__()` method used for?

Thanks in advance! Runsheng

Thanks for reporting @yurunsheng1 ,

It allows you to access `env.property` instead of `env.env.property` when you wrap your environment with `ActionSpaceScaler`. I guess the issue is with pickling. Which OS and Python version are you using?
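To illustrate what this delegation buys you, here is a minimal sketch of a wrapper whose `__getattr__` forwards unknown attribute lookups to the wrapped environment. `DummyEnv` and `Wrapper` are hypothetical, simplified stand-ins, not cherry's actual classes. Python calls `__getattr__` only after normal lookup (the instance `__dict__`, then the class) has failed, so the wrapper's own attributes are never forwarded.

```python
class DummyEnv:
    """Hypothetical stand-in for a gym environment."""

    def __init__(self):
        self.action_space = "Box(-1.0, 1.0, (2,))"


class Wrapper:
    """Simplified wrapper that delegates unknown attributes to self.env."""

    def __init__(self, env):
        self.env = env

    def __getattr__(self, attr):
        # Only reached when normal lookup on the wrapper itself fails.
        return getattr(self.env, attr)


base = DummyEnv()
wrapped = Wrapper(Wrapper(base))  # two layers of wrapping

# Without delegation this would have to be wrapped.env.env.action_space
print(wrapped.action_space)       # Box(-1.0, 1.0, (2,))
print(wrapped.env.env is base)    # True
```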

Thank you so much for your reply! @seba-1511

I am using macOS 10.13.6 and Python 3.8.5.

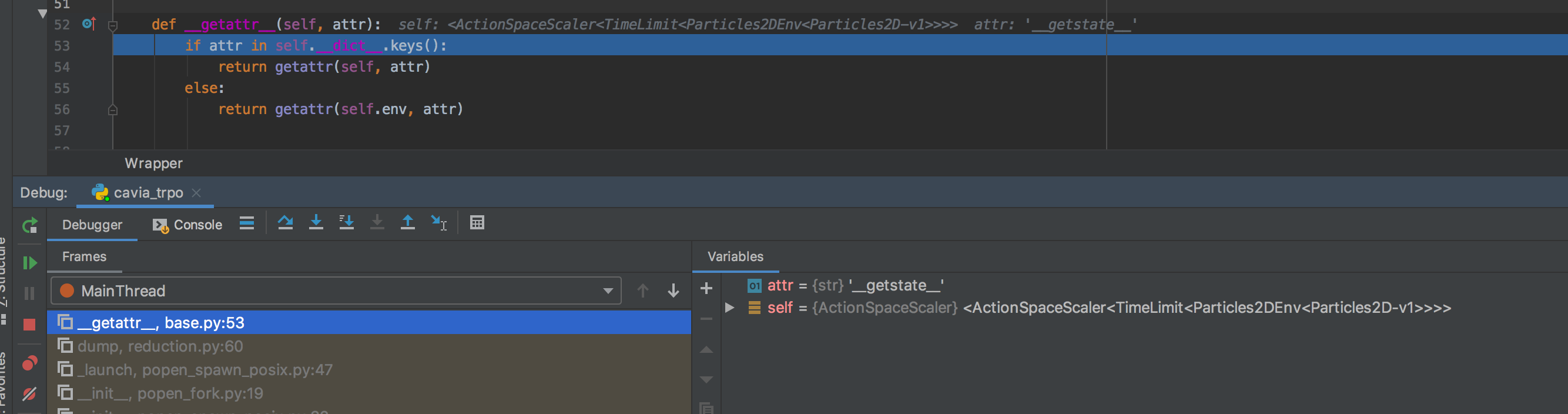

BTW, if I set a breakpoint at the beginning of `__getattr__()`, I find that `attr` has been assigned `'__getstate__'`. Unfortunately, there is no `__getstate__` in `__dict__` (line 53), so execution goes to line 56 (first figure).

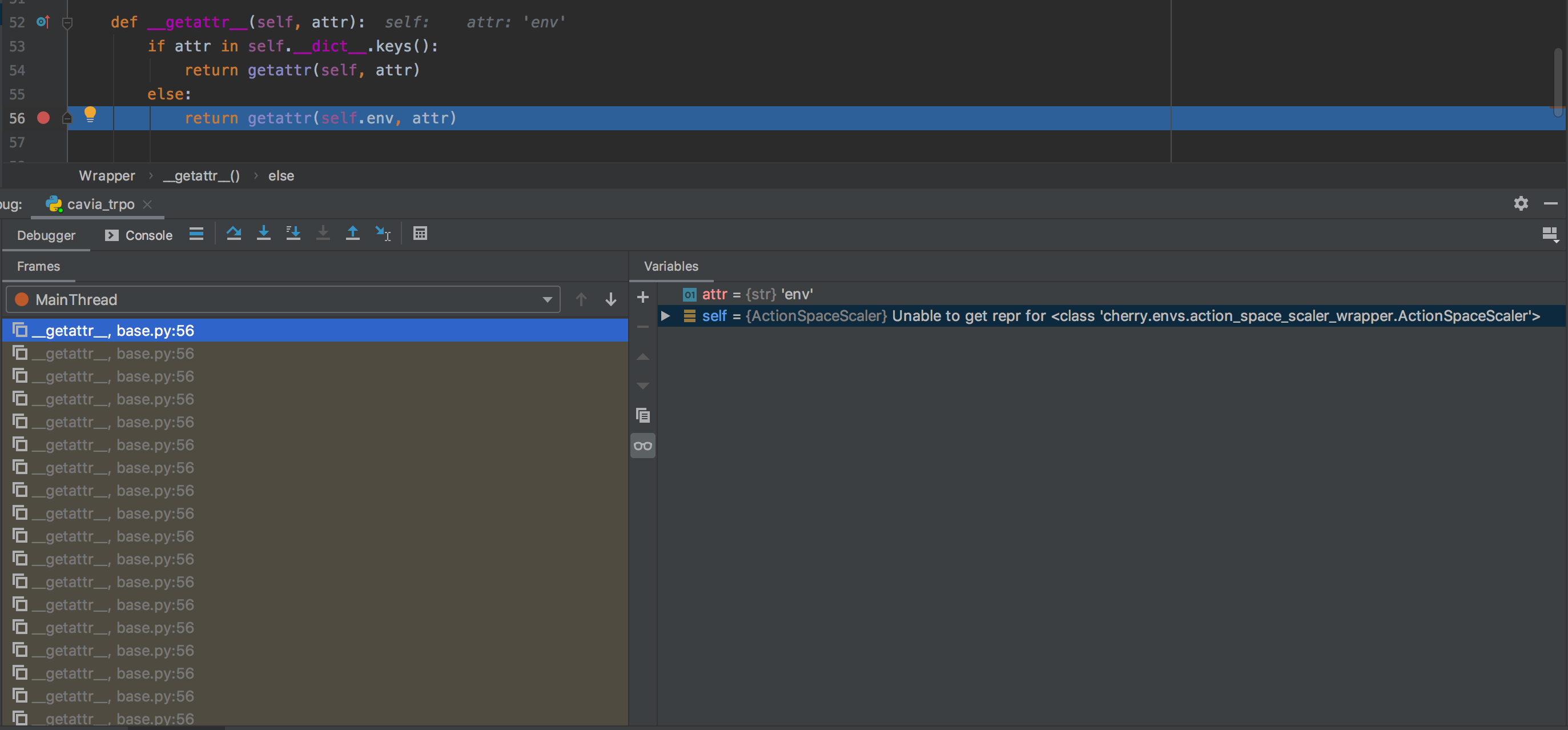

Then `attr` becomes `'env'`. Since there is no `env` in `__dict__` (line 53) either, execution again goes to line 56, repeats the same steps, and loops forever (second figure).

Is this connected to pickling? Could you give more details?
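The loop described above can be reproduced without multiprocessing. When an object is unpickled, Python creates the instance without calling `__init__`, so its `__dict__` is still empty, and then probes it for special methods such as `__setstate__`. The sketch below mimics that with `object.__new__`; `Wrapper` is a hypothetical, simplified version of the delegating `__getattr__` in the screenshots, not cherry's exact code. It probes `__setstate__`; on Python 3.8, `__getstate__` (the name seen in the debugger) is not defined on `object` either (it was added in Python 3.11), so it falls through to `__getattr__` the same way.

```python
class Wrapper:
    """Simplified delegating wrapper (hypothetical, mirrors the screenshots)."""

    def __init__(self, env):
        self.env = env

    def __getattr__(self, attr):
        if attr in self.__dict__:
            return self.__dict__[attr]
        # If 'env' is missing from __dict__, evaluating self.env calls
        # __getattr__('env'), which evaluates self.env again, and so on.
        return getattr(self.env, attr)


# Create an instance the way unpickling does: no __init__, empty __dict__.
w = object.__new__(Wrapper)

try:
    hasattr(w, "__setstate__")  # hasattr only swallows AttributeError
    outcome = "no error"
except RecursionError:
    outcome = "RecursionError"

print(outcome)  # RecursionError
```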

Thanks again!

This observation makes sense: the infinite recursion is also what threw your original error.

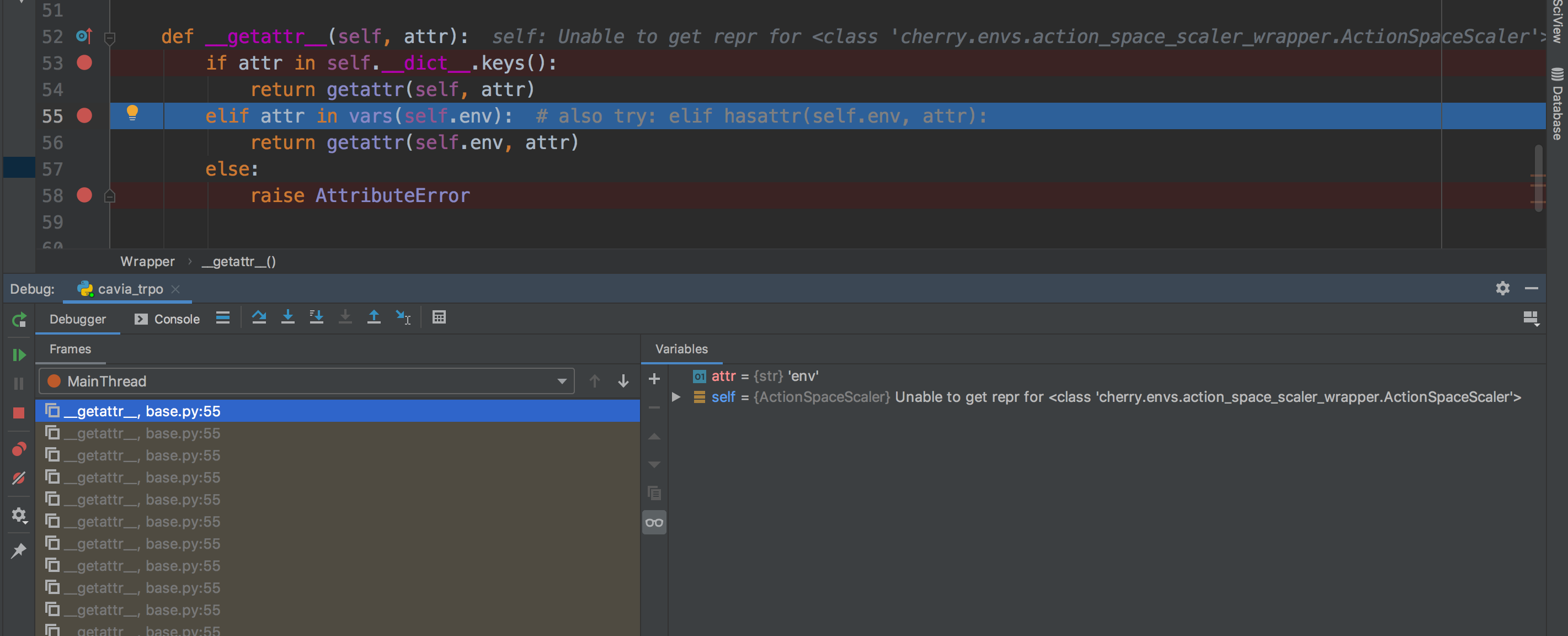

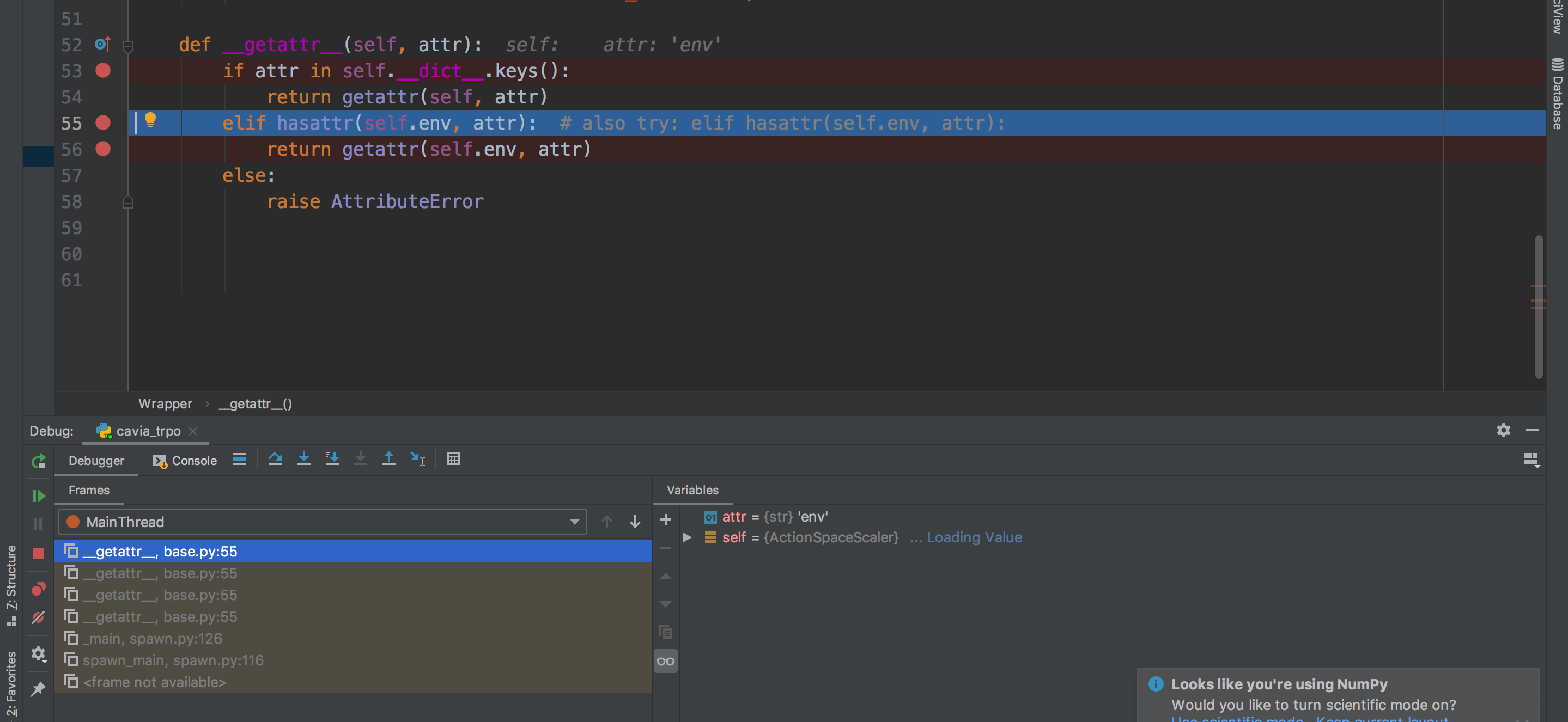

I think you're right, and the issue is probably due to pickling. Here's a thread that proposes a fix, but instead of `attr not in vars(self.env)` we might have to check `hasattr(self.env, attr)`.

Thank you so much for the useful suggestions!

It almost solves my problem!

I rewrote `__getattr__(self, attr)` as:

```python
def __getattr__(self, attr):
    if attr not in vars(self):
        raise AttributeError
    if attr in self.__dict__.keys():
        return getattr(self, attr)
    else:
        return getattr(self.env, attr)
```

However, it still raises an `AttributeError`.
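One likely reason for that `AttributeError`: `vars(self)` is the wrapper's own `__dict__`, and `__getattr__` normally runs only after lookup in that dict has already failed, so the first check raises for every attribute that should be delegated. The minimal sketch below drops the rewrite into a hypothetical wrapper (`DummyEnv` and `UserWrapper` are illustrative names) to show both effects: the recursion on an empty instance is gone, but so is delegation.

```python
class DummyEnv:
    """Hypothetical stand-in for the wrapped environment."""

    def __init__(self):
        self.action_space = "Box(-1.0, 1.0, (2,))"


class UserWrapper:
    """The rewrite above, placed in a minimal hypothetical wrapper."""

    def __init__(self, env):
        self.env = env

    def __getattr__(self, attr):
        # vars(self) is the wrapper's own __dict__, e.g. {'env': <DummyEnv>}
        if attr not in vars(self):
            raise AttributeError(attr)
        if attr in self.__dict__.keys():
            return getattr(self, attr)
        else:
            return getattr(self.env, attr)


w = UserWrapper(DummyEnv())
print(w.env.action_space)           # explicit access still works
print(hasattr(w, "action_space"))   # False: delegation never happens

fresh = object.__new__(UserWrapper)   # empty __dict__, as during unpickling
print(hasattr(fresh, "__setstate__"))  # False, and no RecursionError
```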

But when I put `__getattr__(self, attr)` into the `ActionSpaceScaler` class, it runs successfully.

Is this function well written?

Would moving the function into the `ActionSpaceScaler` class be an alternative solution to this problem?

Also, if I use `hasattr(self.env, attr)` as you recommended, it still raises the maximum recursion error:

```python
def __getattr__(self, attr):
    if hasattr(self.env, attr):
        raise AttributeError
    if attr in self.__dict__.keys():
        return getattr(self, attr)
    else:
        return getattr(self.env, attr)
```

I think we should try to fix this issue in Wrapper, so that other wrappers derived from it can also be used with multiprocessing. If you have time, could you try the following? (I'd do it myself but can't reproduce the error.)

```python
def __getattr__(self, attr):
    if attr in self.__dict__.keys():
        return getattr(self, attr)
    elif attr in vars(self.env):  # also try: elif hasattr(self.env, attr):
        return getattr(self.env, attr)
    else:
        raise AttributeError
```

Very sorry for the late reply; I have been very busy these days 😭.

I ran both versions you suggested, but unfortunately they still hit the maximum recursion error shown in the figures.

But I still think the scheme you proposed is almost correct. Maybe there is just something we are still missing.
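One thing the versions above have in common: each evaluates `self.env` (directly, or through `vars(self.env)` / `hasattr(self.env, attr)`) inside `__getattr__`. On a freshly unpickled instance, `env` is not yet in `__dict__`, so `self.env` re-enters `__getattr__('env')` and recurses before any guard can help. A minimal sketch of a guard that avoids this, assuming the wrapped object is stored under the name `env`, reads `self.__dict__` directly and refuses to delegate names starting with `_` (similar to the underscore check in gym's own `Wrapper.__getattr__`). `DummyEnv` and `SafeWrapper` are hypothetical names, and this has not been tested against cherry itself.

```python
import pickle


class DummyEnv:
    """Hypothetical stand-in for a gym environment."""

    def __init__(self):
        self.action_space = "Box(-1.0, 1.0, (2,))"


class SafeWrapper:
    """Delegating wrapper that survives a pickle round trip (hypothetical sketch)."""

    def __init__(self, env):
        self.env = env

    def __getattr__(self, attr):
        # Read __dict__ directly: evaluating self.env here could re-enter __getattr__.
        env = self.__dict__.get("env")
        # Refuse private/dunder names (pickle probes __setstate__, __getstate__, ...)
        # and refuse everything while 'env' is not set yet (e.g. mid-unpickling).
        if attr.startswith("_") or env is None:
            raise AttributeError(attr)
        return getattr(env, attr)


wrapped = SafeWrapper(SafeWrapper(DummyEnv()))
restored = pickle.loads(pickle.dumps(wrapped))  # round trip through pickle
print(restored.action_space)  # Box(-1.0, 1.0, (2,))
```

The trade-off of this design is that private attributes of the inner environment are no longer reachable through the wrapper; they would have to be accessed explicitly, e.g. `wrapper.env._name`.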

Feel free to ask me to run new code if you have any new ideas. I am very willing to help solve this problem completely.

And thank you again for all your useful suggestions and help!

Closing: inactive (and was resolved elsewhere?).