Automatic Data Collection for ATOM Testing in CI/CD Pipeline

The Context:

I'm working with @miguelriemoliveira and @rarrais on building a CI/CD pipeline for ATOM as part of my master's thesis (see: https://github.com/lardemua/atom/issues/556). The goal is to run this part of the ATOM calibration pipeline in the cloud, using AWS RoboMaker. To fully test ATOM this way, data collection must be non-interactive, which it currently is not. So, on my ATOM fork, I'm working on an alternative, non-interactive way of collecting data.

The Goal:

Instead of being extracted from a bagfile, datasets are acquired directly from the running system.

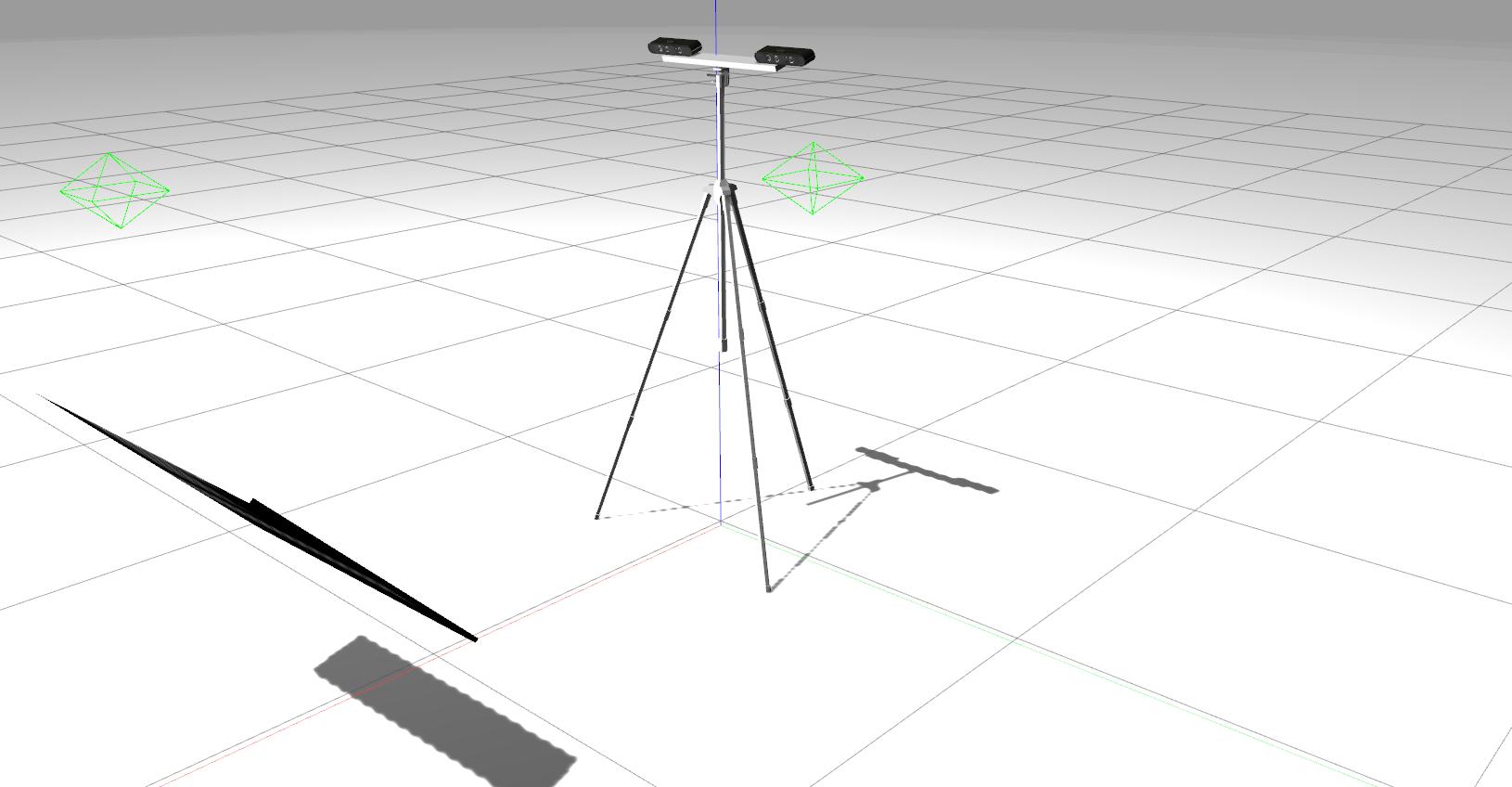

The robot in question is a very simple robot, consisting of a tripod with two RGB cameras on a plate. Since this robot is static, the only thing that differs between collections is the calibration pattern's pose.

The final goal is to have a script that moves the calibration pattern according to a movement sequence (described in a JSON file), pauses for some time at each pose, and records a collection. This is what ATOM already does, except without any form of user interaction.

A possible solution is an interactive_pattern script with a menu that lets the user manually save the pattern's current pose into a dictionary. Once several poses have been saved, making up the "movement sequence", this dictionary would be saved as a JSON file.
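The recording side of this idea can be sketched in plain Python. This is a minimal sketch under assumptions: the class name `MovementSequenceRecorder` and the JSON layout (`{"poses": [{"position": [x, y, z], "orientation": [qx, qy, qz, qw]}, ...]}`) are hypothetical, not ATOM's actual format, and the menu callback that would call `add_pose` is left out.

```python
import json
from typing import Dict, List, Sequence


class MovementSequenceRecorder:
    """Accumulates pattern poses in memory and writes them to a JSON file.

    Hypothetical helper: the names and the JSON layout are assumptions,
    not ATOM's actual format.
    """

    def __init__(self) -> None:
        # The "movement sequence": an ordered list of poses under one key.
        self.sequence: Dict[str, List[dict]] = {"poses": []}

    def add_pose(self, position: Sequence[float], orientation: Sequence[float]) -> int:
        """Store one pose (position x, y, z and quaternion x, y, z, w); return its index."""
        if len(position) != 3 or len(orientation) != 4:
            raise ValueError("expected a 3-element position and a 4-element quaternion")
        self.sequence["poses"].append(
            {"position": [float(v) for v in position],
             "orientation": [float(v) for v in orientation]})
        return len(self.sequence["poses"]) - 1

    def save(self, path: str) -> None:
        """Write the whole movement sequence to disk as indented JSON."""
        with open(path, "w") as f:
            json.dump(self.sequence, f, indent=2)
```

In the interactive tool, each "save pose" menu entry would call `add_pose` with the pattern's current pose, and a final "save sequence" entry would call `save`. Keeping the poses in an ordered list preserves the order in which the user recorded them, which is the order the pattern would later be moved in.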

Another script, pattern_movement, could read this JSON file as input and move the pattern through these poses. When a pose specified in the JSON file is reached, the /collect_data/save_collection service would be called, saving the collection as part of a dataset. To comply with ATOM's guidelines for a good collection, the script would wait some time after the pattern reaches each pose before saving the collection.
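Reading the movement sequence back in could look like the sketch below. It assumes a hypothetical JSON layout (`{"poses": [{"position": [x, y, z], "orientation": [qx, qy, qz, qw]}, ...]}`); `PatternPose` and `load_movement_sequence` are illustrative names, not functions from ATOM. The quaternion is renormalized so that rounding in the file cannot yield an invalid rotation.

```python
import json
import math
from typing import List, NamedTuple, Tuple


class PatternPose(NamedTuple):
    position: Tuple[float, ...]     # x, y, z
    orientation: Tuple[float, ...]  # quaternion x, y, z, w


def load_movement_sequence(path: str) -> List[PatternPose]:
    """Read a movement-sequence JSON file and return its poses in file order.

    Assumed (hypothetical) layout:
    {"poses": [{"position": [x, y, z], "orientation": [qx, qy, qz, qw]}, ...]}
    """
    with open(path) as f:
        data = json.load(f)
    poses = []
    for i, entry in enumerate(data["poses"]):
        position = tuple(float(v) for v in entry["position"])
        orientation = tuple(float(v) for v in entry["orientation"])
        if len(position) != 3 or len(orientation) != 4:
            raise ValueError(f"pose {i}: wrong number of components")
        # Normalize the quaternion; a zero-length one cannot represent a rotation.
        norm = math.sqrt(sum(q * q for q in orientation))
        if norm == 0.0:
            raise ValueError(f"pose {i}: zero-length quaternion")
        orientation = tuple(q / norm for q in orientation)
        poses.append(PatternPose(position, orientation))
    return poses
```

Validating the whole file up front means a malformed sequence fails before the pattern starts moving, rather than halfway through an unattended CI run.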

What's Done So Far:

On my fork, with a great deal of help from @miguelriemoliveira, I have created a script called pattern_movement that moves the calibration pattern automatically, both in Gazebo and in RViz. So far, the movement is hardcoded into the script.

Running Gazebo, RViz, and the current version of pattern_movement, we get the following:

Very nice video, @Kazadhum!

Hi @Kazadhum. Glad to see your work is going well.

I wrote a script for a similar purpose. Here is the video; maybe it could give you some ideas.

Hi @JorgeFernandes-Git and @Kazadhum ,

I am not sure whether this script should be removed from ATOM, as it is specific to one robotic system.

To keep it, we should make it general, possibly using the tools for recording a path into a JSON file that @Kazadhum developed later.

What do you think?


Yes, this script can only be used with robotic systems that have at least one RGB camera. I only mentioned it because it could be helpful in some way.

Hello @Kazadhum and @JorgeFernandes-Git! Sorry for the delay in answering!

Thank you for the suggestion! I've managed to get this working but haven't posted the results here yet!

Note: The two scripts described below are not in ATOM but in my t2rgb fork, since they are system-specific.

Basically, I have a script named record_pattern_motion that does just that: it uses ATOM's interactive_pattern script as a base and records the pattern's different poses in a JSON file. Given this JSON "movement sequence file", a script called pattern_motion does the following:

- Updates the calibration pattern's pose (by publishing it as a ROS topic)

- Waits for 5 seconds

- Calls the /collect_data/save_collection service

- Waits 5 more seconds

- Moves on to the next pattern pose and repeats the process until the JSON file is completely iterated over
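The loop above can be sketched as a small function. This is a sketch under stated assumptions, not the actual pattern_motion script: `run_pattern_motion`, `publish_pose` and `save_collection` are hypothetical names, and the ROS side is injected as plain callables so the control flow can be run and checked without ROS. In a real node, `publish_pose` would wrap a `rospy.Publisher` on the pattern pose topic, `save_collection` would wrap a `rospy.ServiceProxy` for /collect_data/save_collection, and `sleep` could be `rospy.sleep`.

```python
import time
from typing import Callable, Iterable, Sequence


def run_pattern_motion(
    poses: Iterable[Sequence[float]],
    publish_pose: Callable[[Sequence[float]], None],
    save_collection: Callable[[], None],
    settle_time: float = 5.0,
    post_save_time: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Drive the pattern through every pose, saving one collection per pose.

    Returns the number of collections requested.
    """
    saved = 0
    for pose in poses:
        publish_pose(pose)      # 1. move the pattern to the next pose
        sleep(settle_time)      # 2. let it settle before capturing
        save_collection()       # 3. request a collection
        saved += 1
        sleep(post_save_time)   # 4. wait before moving on
    return saved
```

Injecting `sleep` as well keeps the timing configurable and lets a test replace the 5-second waits with a no-op.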

This way, no bagfile or interaction is needed to acquire a dataset and the goal is achieved!

However, as mentioned, it is system-specific, so I don't know how it could be generalized and integrated into ATOM.

I'll try to get a video of this working here as soon as I can.

Thanks @Kazadhum for the detailed description.