release

Clean up `latest` tag in registry.k8s.io

What happened:

Some images in registry.k8s.io are published with the `latest` tag. However, we don't promote or update those images, so using that tag can cause problems: it may not point to the most recent published release.

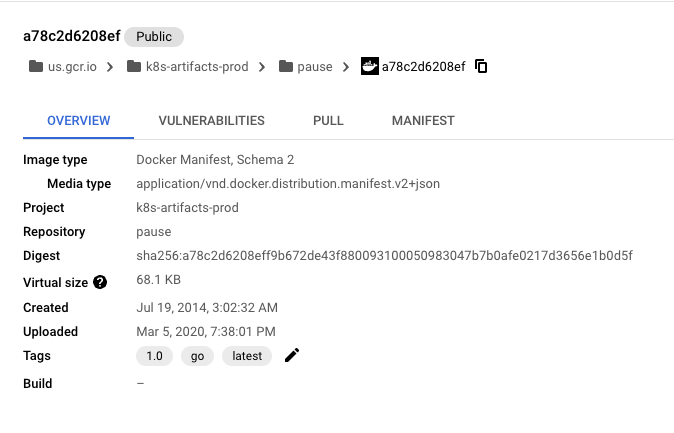

One example is registry.k8s.io/pause: its `latest` tag has the same digest as the 1.0 release, but the current release of the pause image is 3.9.
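The mismatch described above can be checked mechanically. The following is a minimal sketch, assuming a hypothetical digest-to-tags mapping for a single image; the digests are placeholders and the helper names `parse_version` and `stale_latest` are illustrative, not part of any existing tooling.

```python
from typing import Dict, List, Optional, Tuple


def parse_version(tag: str) -> Optional[Tuple[int, ...]]:
    """Parse tags such as "3.9" or "v1.0" into ints; return None for other tags."""
    parts = tag.lstrip("v").split(".")
    if not all(p.isdigit() for p in parts):
        return None
    return tuple(int(p) for p in parts)


def stale_latest(dmap: Dict[str, List[str]]) -> Optional[Tuple[Optional[str], str]]:
    """Return (version that `latest` points to, newest version) if `latest` lags.

    Returns None when there is no `latest` tag, no version tags, or when
    `latest` already shares a digest with the newest version tag.
    """
    latest_digest = next((d for d, tags in dmap.items() if "latest" in tags), None)
    if latest_digest is None:
        return None

    # Map every version-like tag to the digest it points at.
    versions = {
        tag: digest
        for digest, tags in dmap.items()
        for tag in tags
        if parse_version(tag) is not None
    }
    if not versions:
        return None

    newest = max(versions, key=parse_version)
    if versions[newest] == latest_digest:
        return None

    # Version tags that share a digest with `latest`, oldest first.
    pinned = sorted(
        (t for t, d in versions.items() if d == latest_digest), key=parse_version
    )
    return (pinned[-1] if pinned else None, newest)


# Placeholder digests mirroring the pause situation described above.
pause = {"sha256:aaa": ["1.0", "latest"], "sha256:bbb": ["3.9"]}
print(stale_latest(pause))
```

For the placeholder data this reports that `latest` is pinned to 1.0 while 3.9 is the newest version tag.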

We should clean up the registry at some point.

/cc @BenTheElder @liggitt @saschagrunert @dims
cc @kubernetes/release-managers @kubernetes/sig-release-leads
/assign

IMO, this should be at /priority important-longterm

/priority important-longterm

/sig k8s-infra
/area artifacts

from: https://console.cloud.google.com/gcr/images/k8s-artifacts-prod/us/pause@sha256:a78c2d6208eff9b672de43f880093100050983047b7b0afe0217d3656e1b0d5f/details?project=k8s-artifacts-prod

We do have the information on which images have the `latest` tag:

https://cs.k8s.io/?q=%22latest%22&i=nope&files=DO-NOT-MODIFY-legacy-backfill&excludeFiles=&repos=kubernetes/k8s.io

We can either automate it or clean things up manually. Since it feels like a one-time thing, I'd lean towards cleaning it up by hand.

I think we run it manually, and if this happens again, then we automate it with a cron job. But I hope this does not happen again.

FYI, I've discovered an image that has only a single `latest` tag and no other tags, and which we also know is heavily used (#13 among image names across all tags by bandwidth).

$ crane ls registry.k8s.io/hpa-example
latest

I'm not sure what to do with that, but I think we might want to avoid deleting :latest if we never published any other tags (!)
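The rule suggested above (only drop `latest` when the image has at least one other tag) could be sketched roughly as follows. The input mapping of image name to tags and the function name `latest_removal_plan` are assumptions for illustration; real tag lists would come from something like `crane ls`.

```python
from typing import Dict, List, Tuple


def latest_removal_plan(image_tags: Dict[str, List[str]]) -> Tuple[List[str], List[str]]:
    """Split images carrying `latest` into (removable, keep).

    `latest` is considered removable only when the image has at least one
    other tag; an image whose sole tag is `latest` is kept so that existing
    pulls do not break.
    """
    removable, keep = [], []
    for image, tags in sorted(image_tags.items()):
        if "latest" not in tags:
            continue  # nothing to clean up for this image
        others = [t for t in tags if t != "latest"]
        (removable if others else keep).append(image)
    return removable, keep


# Illustrative input based on the two images discussed in this thread.
tags = {
    "pause": ["1.0", "3.9", "latest"],
    "hpa-example": ["latest"],
}
removable, keep = latest_removal_plan(tags)
print("remove :latest from:", removable)
print("keep :latest on:", keep)
```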

/milestone v1.28

@dims: The provided milestone is not valid for this repository. Milestones in this repository: [next, v1.16, v1.17, v1.18, v1.19, v1.20, v1.21, v1.22, v1.23, v1.24, v1.25, v1.26]

Use /milestone clear to clear the milestone.

In response to this:

/milestone v1.28


> I think we run it manually, and if this happens again, then we automate it with a cron job. But I hope this does not happen again.

This should be prevented by a presubmit that validates the promoter manifests. We can do something similar to https://github.com/kubernetes/k8s.io/blob/main/registry.k8s.io/manifests/manifest_test.go but for the image files, banning at least the `latest` tag specifically.
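As a rough illustration of such a check, here is a minimal sketch of the validation logic. It assumes the image files parse into a list of entries with a `name` and a `dmap` of digest to tags; that shape, the `allowlist` parameter, and the name `find_banned_tags` are assumptions made for this example. An actual presubmit would more likely be a Go test next to the existing `manifest_test.go`.

```python
from typing import Dict, FrozenSet, List, Tuple

# Tags that new promotions should never introduce.
BANNED_TAGS = frozenset({"latest"})


def find_banned_tags(
    images: List[Dict],
    allowlist: FrozenSet[Tuple[str, str]] = frozenset(),
) -> List[str]:
    """Return one message per banned tag found in the parsed image entries.

    `allowlist` holds (image name, tag) pairs that are tolerated, for
    example an image that has never had any tag other than `latest`.
    """
    violations = []
    for image in images:
        name = image["name"]
        for digest, tags in image.get("dmap", {}).items():
            for tag in tags:
                if tag in BANNED_TAGS and (name, tag) not in allowlist:
                    violations.append(f"{name}@{digest}: tag {tag!r} is not allowed")
    return violations


# Placeholder entries; the digests are not real.
images = [
    {"name": "pause", "dmap": {"sha256:aaa": ["3.9"], "sha256:bbb": ["latest"]}},
    {"name": "hpa-example", "dmap": {"sha256:ccc": ["latest"]}},
]
for message in find_banned_tags(images, allowlist=frozenset({("hpa-example", "latest")})):
    print(message)
```

A presubmit built on this would fail whenever the returned list is non-empty. The allowlist is one possible way to keep the check strict while leaving an image such as hpa-example untouched until a decision is made.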

hpa-example remains an unresolved problem.

So, I think at least some of the hpa-example traffic comes from the GKE docs, which have been fixed.

It's hard to say whether anyone else is using it, though, and there are still no other tags.

We should probably communicate this with a blog post etc. before taking any action. Even if the end result is a clearer state, I think we'll be surprised by what ultimately breaks :-)

The Kubernetes project currently lacks enough contributors to adequately respond to all issues.

This bot triages un-triaged issues according to the following rules:

- After 90d of inactivity, `lifecycle/stale` is applied
- After 30d of inactivity since `lifecycle/stale` was applied, `lifecycle/rotten` is applied
- After 30d of inactivity since `lifecycle/rotten` was applied, the issue is closed

You can:

- Mark this issue as fresh with `/remove-lifecycle stale`
- Close this issue with `/close`
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

/remove-lifecycle stale
