Git LFS data usage in kubernetes-sigs

This is a tracking issue to capture emails from GitHub about Git LFS data usage in kubernetes-sigs.

Timeline so far:

- We received an email from GitHub on 29 March 2022 saying that we had used 80% of our Git LFS data plan for kubernetes-sigs:

We wanted to let you know that you’ve used 80% of your data plan for Git LFS on the organization kubernetes-sigs. No immediate action is necessary, but you might want to consider purchasing additional data packs to cover your bandwidth and storage usage:

https://github.com/organizations/kubernetes-sigs/billing/data/upgrade

Current usage as of 29 Mar 2022 10:38AM UTC:

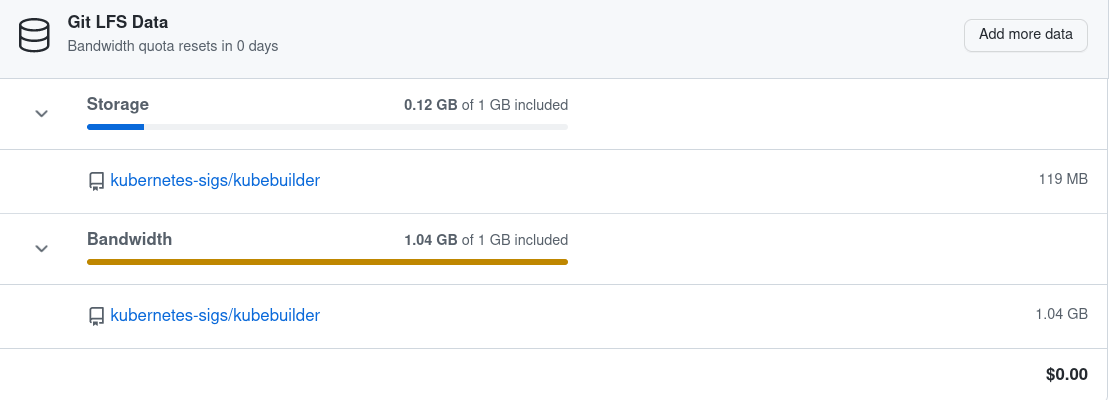

Bandwidth: 0.81 GB / 1 GB (81%)

Storage: 0.12 GB / 1 GB (12%)

- Two days later, on 31 March 2022, we received another email from GitHub saying that we had used 100% of our data plan:

You’ve used 100% of your data plan for Git LFS on the organization kubernetes-sigs. Please purchase additional data packs to cover your bandwidth and storage usage:

https://github.com/organizations/kubernetes-sigs/billing/data/upgrade

Current usage as of 31 Mar 2022 06:32AM UTC:

Bandwidth: 1.01 GB / 1 GB (101%)

Storage: 0.12 GB / 1 GB (12%)

- We tried to find the repo that was using Git LFS (slack thread) and could only narrow it down to kubebuilder. Looking at the repo, we could not find any file that uses Git LFS, and GitHub does not expose this information either.

kubebuilder did use Git LFS in the past, but the offending file no longer exists on recent branches or versions; it exists only on some much older versions. Unless someone is explicitly pulling down those versions, our bandwidth should not have been affected.

- I opened a ticket with GitHub asking two questions: 1) which file was causing this, and 2) who or what was generating the traffic. I received the following response:

Hi Nikhita,

Thanks for reaching out. I'm seeing the storage on the following repo:

kubernetes-sigs/kubebuilder (119 MB storage)

I'm on the billing support team, and I'm going to defer to our Technical support team for help with your two specific questions.

However, I noticed that the kubernetes-sigs is covered by a 100% coupon, which does include covering LFS data packs. I've just added a data pack, which bumps LFS storage and bandwidth quotas to 50GB each per month. You should no longer be seeing the error that you're at 100% of LFS usage.

You'll hear from our Technical Support team as soon as they are able, and let us know if we can help with anything else.

Best,

Jack

I will add more details to this issue when I hear more from GitHub.
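
For anyone who wants to check older kubebuilder versions for Git LFS content themselves, here is a minimal Python sketch. It relies on two properties of the Git LFS format: tracked paths are marked with `filter=lfs` in `.gitattributes`, and a tracked file is stored in Git as a small text pointer whose first line is `version https://git-lfs.github.com/spec/v1`. The function names and the sample inputs are hypothetical, chosen for illustration only; they do not come from kubebuilder or from GitHub.

```python
import re
from typing import Optional

# First line of every Git LFS v1 pointer file.
LFS_VERSION_LINE = "version https://git-lfs.github.com/spec/v1"
OID_RE = re.compile(r"^oid sha256:([0-9a-f]{64})$")
SIZE_RE = re.compile(r"^size (\d+)$")


def parse_lfs_pointer(text: str) -> Optional[dict]:
    """Return {'oid': ..., 'size': ...} if text is an LFS pointer, else None."""
    lines = text.strip().splitlines()
    if not lines or lines[0] != LFS_VERSION_LINE:
        return None
    oid = None
    size = None
    for line in lines[1:]:
        oid_match = OID_RE.match(line)
        if oid_match:
            oid = oid_match.group(1)
        size_match = SIZE_RE.match(line)
        if size_match:
            size = int(size_match.group(1))
    if oid is None or size is None:
        return None
    return {"oid": oid, "size": size}


def lfs_patterns(gitattributes: str) -> list:
    """List the path patterns that a .gitattributes file routes through LFS."""
    patterns = []
    for raw in gitattributes.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split()
        if "filter=lfs" in parts[1:]:
            patterns.append(parts[0])
    return patterns


if __name__ == "__main__":
    # Hypothetical sample inputs, not taken from any real repository.
    sample_attrs = "*.bin filter=lfs diff=lfs merge=lfs -text\n*.md text\n"
    sample_pointer = (
        LFS_VERSION_LINE + "\n"
        "oid sha256:" + "0" * 64 + "\n"
        "size 119000000\n"
    )
    print(lfs_patterns(sample_attrs))
    print(parse_lfs_pointer(sample_pointer))
```

One way to apply this to an old version is to feed it the output of `git show <tag>:.gitattributes` and `git show <tag>:<path>`. It only tells us which files are LFS pointers; it cannot tell us who or what is downloading them.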

/assign @nikhita

@mrbobbytables is also working with GitHub to make details of Git LFS usage more transparent and discoverable.
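
Until that information is available, the figures quoted in the two emails above allow a rough back-of-the-envelope estimate. The Python sketch below computes how much bandwidth was consumed between the two emails and compares it with the 119 MB of storage that GitHub support reported for kubebuilder. It assumes that 1 GB = 1000 MB and that all bandwidth comes from downloads of the full stored data; neither assumption is confirmed by GitHub, and the function name is hypothetical.

```python
from datetime import datetime, timezone

# (timestamp, GB of bandwidth used), copied from the two GitHub emails.
first = (datetime(2022, 3, 29, 10, 38, tzinfo=timezone.utc), 0.81)
second = (datetime(2022, 3, 31, 6, 32, tzinfo=timezone.utc), 1.01)

# 119 MB of LFS storage reported for kubebuilder (assumes 1 GB = 1000 MB).
STORED_GB = 0.119


def bandwidth_growth(a, b):
    """Return (GB consumed, GB per day) between two (timestamp, GB used) samples."""
    delta_gb = b[1] - a[1]
    days = (b[0] - a[0]).total_seconds() / 86400
    return delta_gb, delta_gb / days


delta_gb, per_day = bandwidth_growth(first, second)

# Assumption: every download fetches the full stored data exactly once.
full_downloads = delta_gb / STORED_GB

print(f"{delta_gb:.2f} GB consumed between the emails, about {per_day:.2f} GB/day")
print(f"roughly {full_downloads:.1f} full downloads of the stored data")
```

Under those assumptions, a handful of fetches of the old LFS object would be enough to exhaust a 1 GB bandwidth quota, so the traffic need not come from heavy or sustained use.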

The Kubernetes project currently lacks enough contributors to adequately respond to all issues and PRs.

This bot triages issues and PRs according to the following rules:

- After 90d of inactivity, `lifecycle/stale` is applied

- After 30d of inactivity since `lifecycle/stale` was applied, `lifecycle/rotten` is applied

- After 30d of inactivity since `lifecycle/rotten` was applied, the issue is closed

You can:

- Mark this issue or PR as fresh with `/remove-lifecycle stale`

- Mark this issue or PR as rotten with `/lifecycle rotten`

- Close this issue or PR with `/close`

- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

/remove-lifecycle stale

/lifecycle stale

/close we're good here 👍

@mrbobbytables: Closing this issue.
