helm chart support namespace override for multi-namespace deployments in combined charts

add namespace override for multi-namespace deployments in combined charts

https://github.com/helm/charts/pull/15202

What do you want to happen?

- helm chart of ingress-nginx support namespace override for multi-namespace deployments in combined charts

- https://github.com/helm/charts/pull/15202 adds a namespaceOverride parameter, which allows this chart to be used in cases where templating is used across namespaces

- now

namespaceOverrideis in helm template chart by default

Is there currently another issue associated with this? no

Does it require a particular kubernetes version? no

my pr to this issue: https://github.com/kubernetes/ingress-nginx/issues/8661

@jasine: This issue is currently awaiting triage.

If Ingress contributors determines this is a relevant issue, they will accept it by applying the triage/accepted label and provide further guidance.

The triage/accepted label can be added by org members by writing /triage accepted in a comment.

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.

https://github.com/kubernetes/ingress-nginx/pull/8661

Apologies but I was curious to learn and understand what you mean by multi-namespace deployments in combined charts .

I am unable to relate to installing objects from one instance of the ingress-nginx controller into multiple-namespaces. All namespace scoped objects of one instance of ingress-nginx controller, require to be in one namespace. You can see that in the manifests.

I am unable to relate to combined charts as in combining ingress-nginx controller helm-chart with other charts.

@longwuyuan

when use ingress-nginx as other charts' dependency(Helm Dependency), helm will install manifests of ingress-nginx to parent chart's namespace, because .Release.Namespace is parent chart's namespace. so, if we want ingress-nginx installed to a separated namespace, community of helm project recommend add namespaceOverride (https://github.com/helm/charts/pull/15202)

many helm charts support namespaceOverride, such as kube-prometheus-stack

Thanks for the information @jasine .

It will require dev skills to understand the changes proposed in your PR, by analysing the code. So, the idea here is to describe everything in a simpler easy way, because we need some confidence that nothing will break, and also the maintenance will be easy. Secondly, your case is very very small percentage but the changes your PR is proposing is extremely complicated and intrusive.

Some aspects, that are not understood clearly from your PR, are listed below. So please comment in as much detail as possible, on each of the bullets below ;

(1) Changing 35 files to just pass a namespace string during install, seems an extremely high overkill. Can you not just instrument some method of passing --namespace <namespace-name-string> to helm. I would personally, just find a easier way to pass the "namespace name" string, if I am attempting to do something like this (include one chart inside another).

(2) Having multiple instances of ingress-nginx controller, in the same cluster, is not just limited to installing in separate namespaces. That is because there are objects involved that have clusterwide scope. Are you already aware of all the fields and values that must be unique to each instance of the ingress-nginx controller and did you already factor all of that in your proposed change ?

(3) Each instance of the ingress-nginx controller, needs to be configured for a unique ingress-class. This is likely the most significant aspect here and I am still looking at your proposed changes, but we need to explicitly write and discuss this. Are you aware of this ingress-class aspect and have you factored that in your proposed changes.

(4) I am still looking at the changes proposed. But on a very high level, are your proposed changes completely optional or do your changes force all users to opt-in into the codepath of namespaceOverride. It will not be possible to support all the complications that may arise, for users who do not have the same use case as yours (including this chart inside another chart).

(5) The ingress-nginx controller has implications that are tied to different providers. The project publishes manifests that are recommended based on who the cluster provider is. The helm chart is just one of install options and its a generic instal as against being a provider specific install. I am not sure of the impact and the maintenance requirements of supporting your proposed changes, in the context of multiple providers. Do you have any insight on this aspect.

Thank you once again for your contribution.

@longwuyuan thanks for your responding

(1) Changing 35 files to just pass a namespace string during install, seems an extremely high overkill. Can you not just instrument some method of passing --namespace

to helm. I would personally, just find a easier way to pass the "namespace name" string, if I am attempting to do something like this (include one chart inside another).

passing --namespace <namespace-name-string> to helm just works for parent chart, there are some discussions in helm's repo, https://github.com/helm/helm/issues/5358, finally the official solution is namespaceOverride, it works just like fullnameOverride which is already in this repo https://github.com/kubernetes/ingress-nginx/blob/4a6d15a5a2a07a4421c37cec56e5d8773d81e763/charts/ingress-nginx/values.yaml#L8

(2) Having multiple instances of ingress-nginx controller, in the same cluster, is not just limited to installing in separate namespaces. That is because there are objects involved that have clusterwide scope. Are you already aware of all the fields and values that must be unique to each instance of the ingress-nginx controller and did you already factor all of that in your proposed change ?

changes I have made just replaced .Release.Namespace in relevant manifests files, no other fields and values changed, so it has no impact on multiple instances of ingress-nginx controller

(3) Each instance of the ingress-nginx controller, needs to be configured for a unique ingress-class. This is likely the most significant aspect here and I am still looking at your proposed changes, but we need to explicitly write and discuss this. Are you aware of this ingress-class aspect and have you factored that in your proposed changes.

It does not have impact on ingress-class, it just change target namespace of manifests created if namespaceOverride is configured.

(4) I am still looking at the changes proposed. But on a very high level, are your proposed changes completely optional or do your changes force all users to opt-in into the codepath of namespaceOverride. It will not be possible to support all the complications that may arise, for users who do not have the same use case as yours (including this chart inside another chart).

namespaceOverride is optional, it is not configured in value file by default, and for most users namespace is the same as before .Release.Namespace, see https://github.com/kubernetes/ingress-nginx/pull/8661/files#r888682571

(5) The ingress-nginx controller has implications that are tied to different providers. The project publishes manifests that are recommended based on who the cluster provider is. The helm chart is just one of install options and its a generic instal as against being a provider specific install. I am not sure of the impact and the maintenance requirements of supporting your proposed changes, in the context of multiple providers. Do you have any insight on this aspect.

I think these changes will not affect multiple providers

@jasine Let us track this issue here and not in the PR.

If you sort of analyse the 5 aspects I had highlighted, you will understand that your proposed solution is not assuring that the problem will be solved.

-

After you have

namespaceOverridefeature available in the ingress-nginx controller helm chart, you will not be able to use the first, second or any other instance of the ingress-nginx controller installation. This is because, with your proposed solution, all the controllers will be configured to watch the same ingress-class so all instances of ingress-nginx controller, in all the namespaces that you have installed them, will try to process all the ingress objects, so there will be contention. You can experience this by trying to do a default install of the controller in namespace1 and namespace2 of your Minikube/kind/other dev cluster. Since you did not comment about this and since there is no clarity in this issue or the PR, you can read these links about ingress-class- https://kubernetes.io/docs/concepts/services-networking/ingress/#default-ingress-class

- https://kubernetes.github.io/ingress-nginx/#how-to-easily-install-multiple-instances-of-the-ingress-nginx-controller-in-the-same-cluster

-

Also the

controller.ingressClassResource.controllerValueneeds to be unique per ingress-nginx controller installation

Apologies if I don't understand this whole thing and feel free to guide me to the related info if I am wrong

@longwuyuan sorry, I did not get your point, why change namespace from user specified .Release.Namespace to another user specified namespaceOverride has impact on ingress-class, users can also set ingressClassResource.controllerValue and other values as before, and create several instance of the ingress-nginx controller installation.

I have tested the chart with namespaceOverride on k3s, and compared to official release of ingress-nginx chart, it seems that there no relevant problem you described occurred.

@jasine , thank you for the information.

Is it possible for you to copy/paste post info like below suggestion ;

- Please don't post screenshots. Please try to post the copy/paste of the command and the output of the command. Prefix all commands with

date, as much as possible - output of a newly created k3s cluster

date && kubectl get all -A -o wide - show your clone of your fork of ingress-nginx controller. just to see that your clone/branch has the changes

- do the install of the ingress-nginx controller with your values file etc but by using the ingress-nginx chart inside another chart

- repeat

date && kubectl get all -n <namespaceof1stcontroller> date && kubectl get ingressclasses- install the second instance of the ingress-nginx controller. Make sure to use ingress-nginx controller chart inside another chart

- repeat

date && kubectl get all -n <secondcontrollernamespace> date && kubectl get ingressclassesdate && kubectl get svc -A -o wide | egrep -i "load|node"- create your app-deployment, app-service, app-ingress and show

date && kubectl describefor all 3 objects - curl your app with -v

- show logs of controller1 pod and also controller2 pod

- create second-app-deployment, second-app-service etc and repeat

kubectl describe - show logs of controller1 pod and also controller2 pod

Add any other info that will help others

@longwuyuan following is the test procedure as you requested:

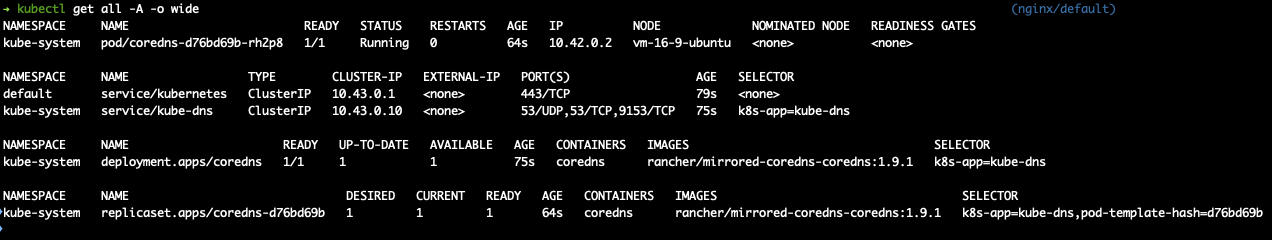

output of a newly created k3s cluster kubectl get all -A -o wide

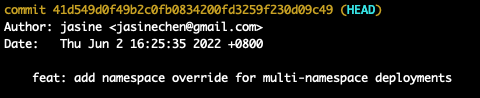

show your clone of your fork of ingress-nginx controller. just to see that your clone/branch has the changes

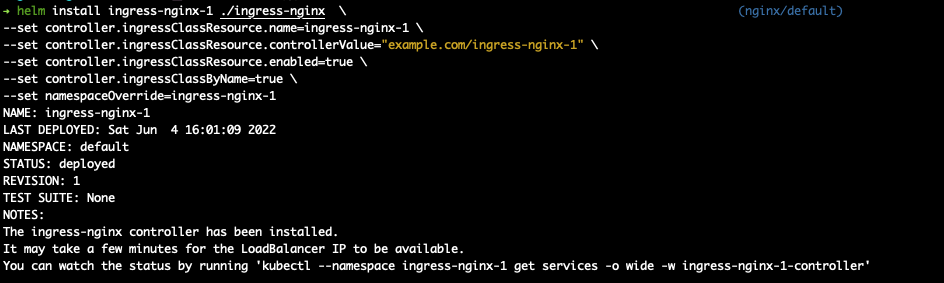

do the install of the ingress-nginx controller with your values file etc

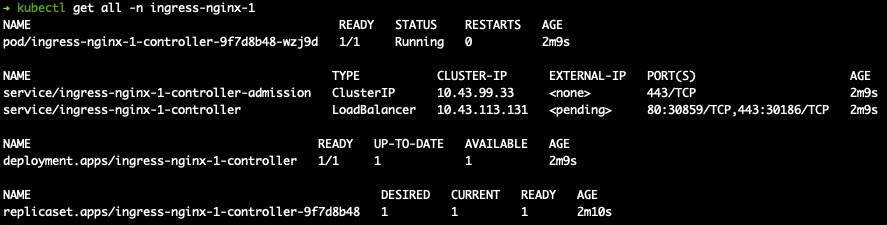

repeat kubectl get all -n {namespaceof1stcontroller}

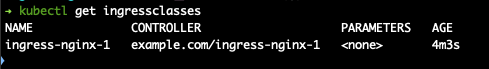

kubectl get ingressclasses

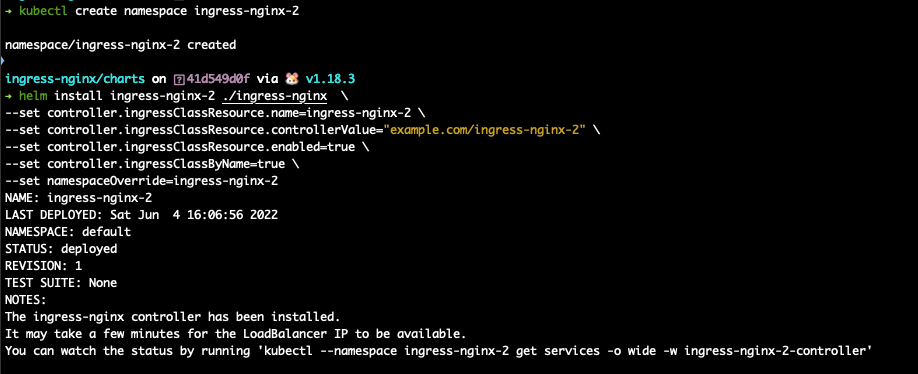

install the second instance of the controller, repeat kubectl get all -n {secondcontrollernamespace} kubectl get ingress classes

create your app-deployment, app-service, app-ingress and show kubectl describe for all 3 objects

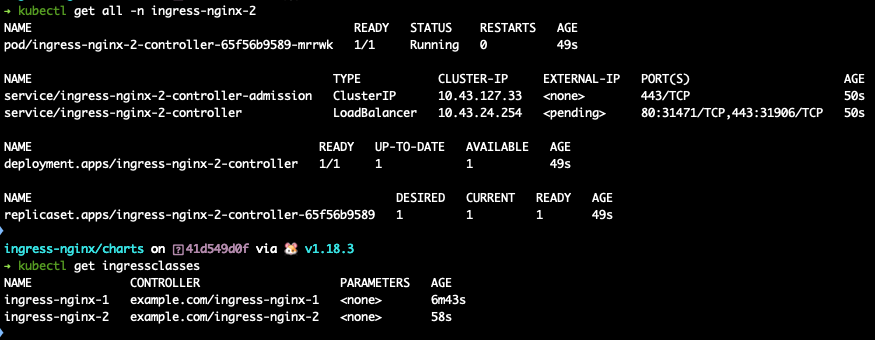

use a echo-server chart for app testing, install a instance to ingress-nginx-1 namespace with ingress class ingress-nginx-1

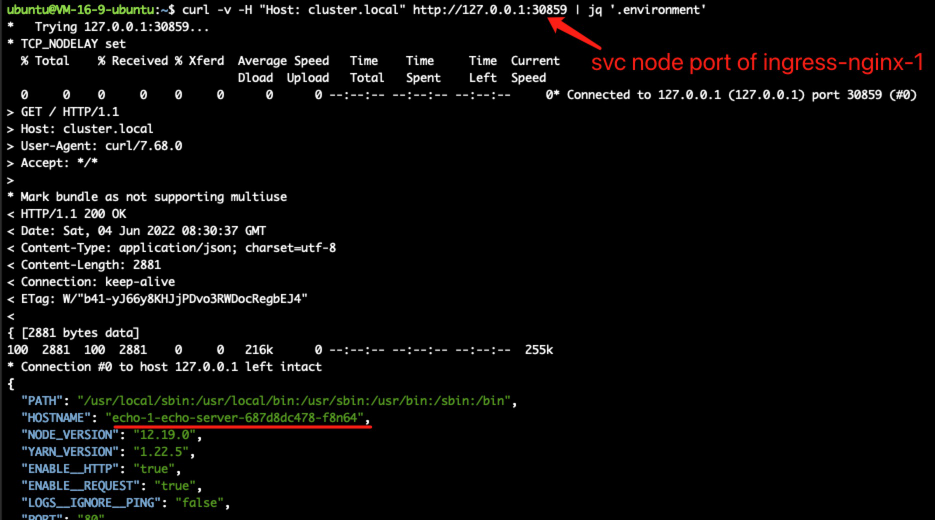

curl your app with -v

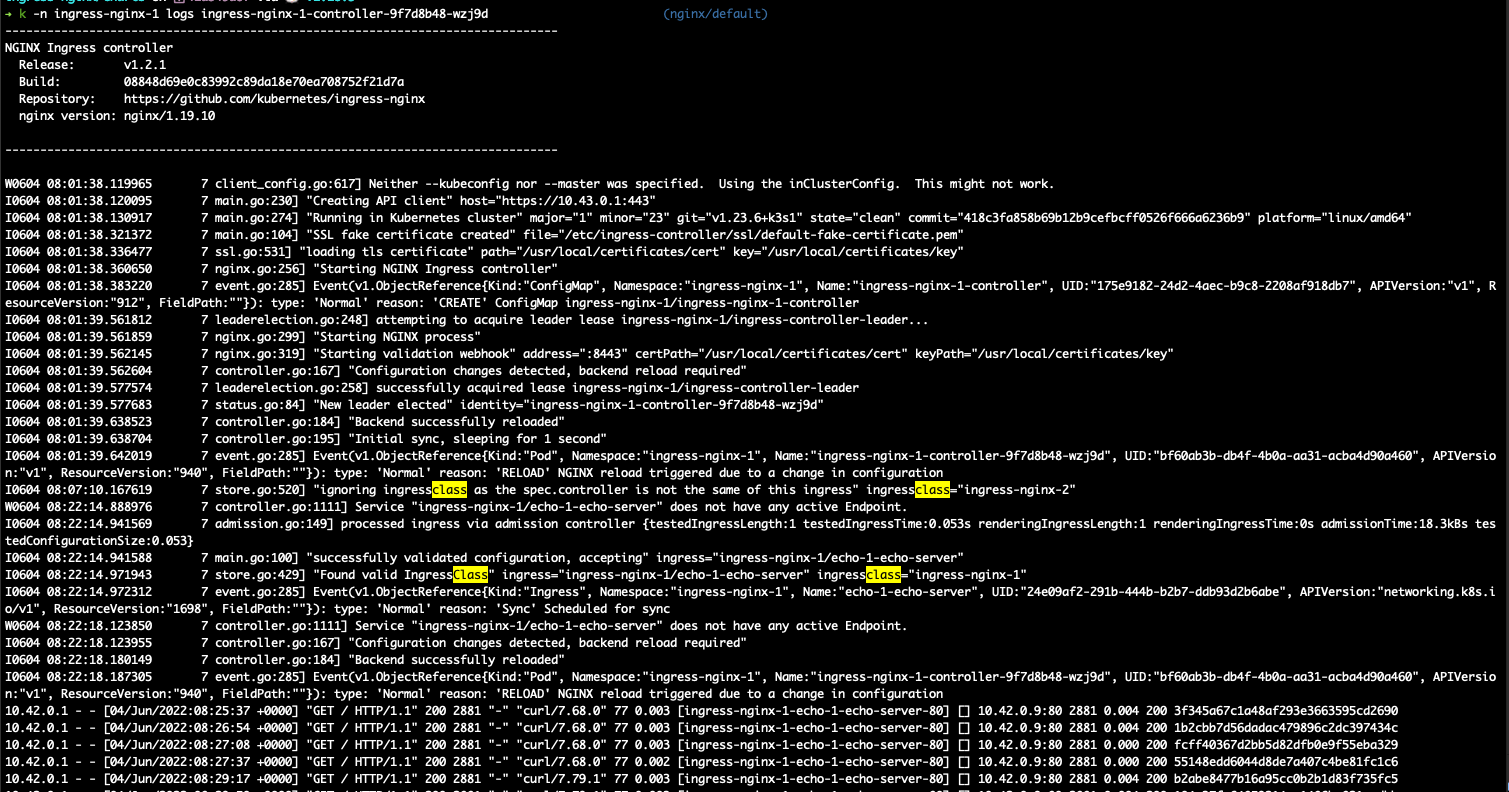

show logs of controller1 pod and also controller2 pod

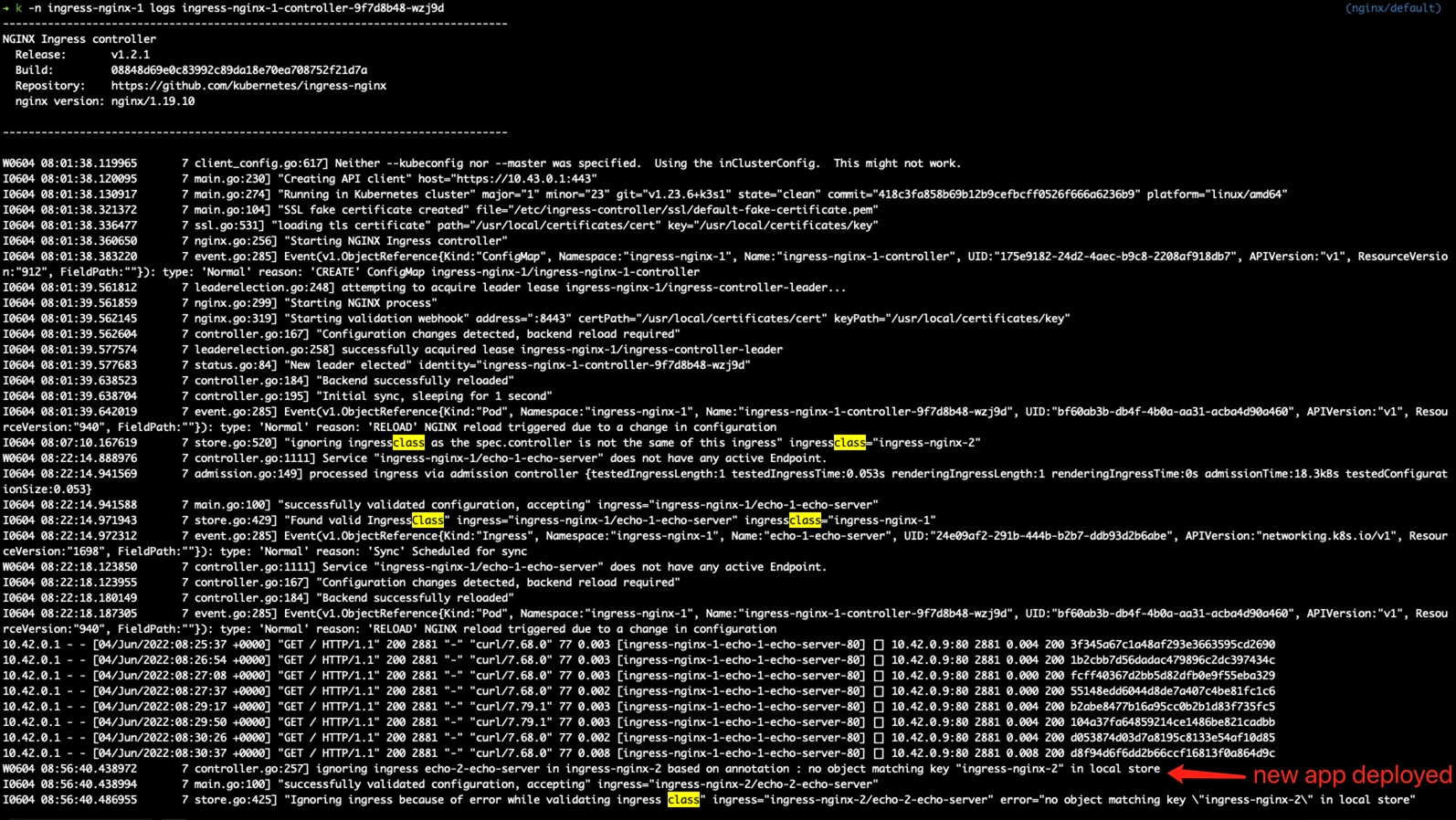

controller1:

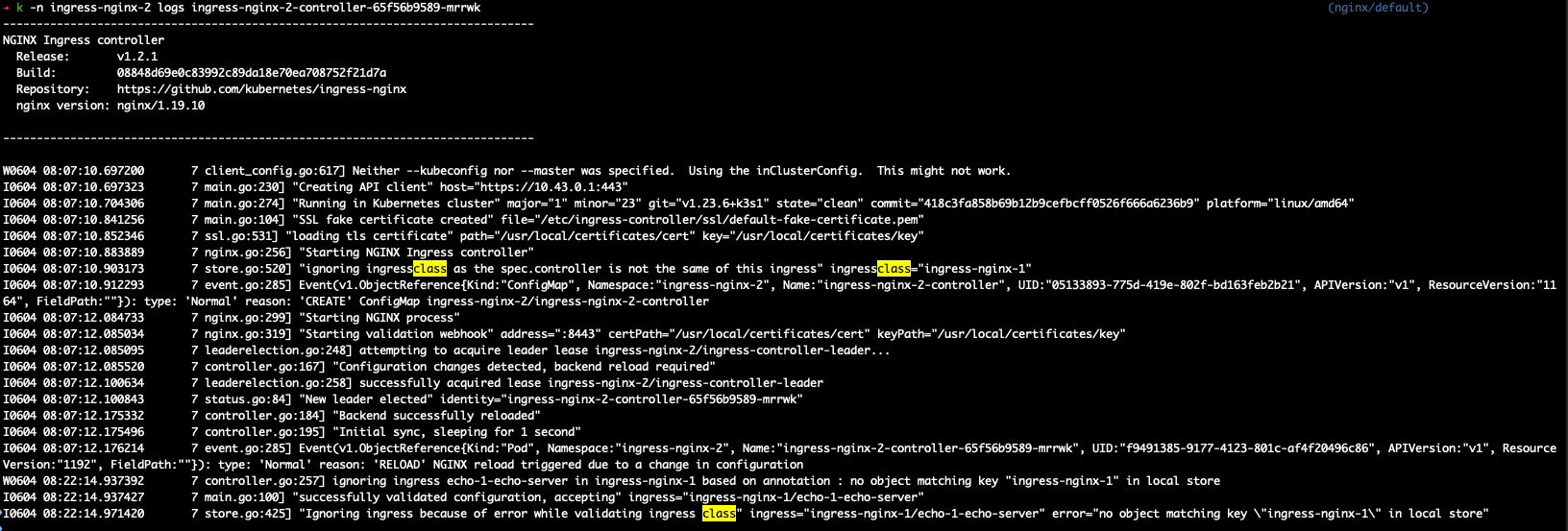

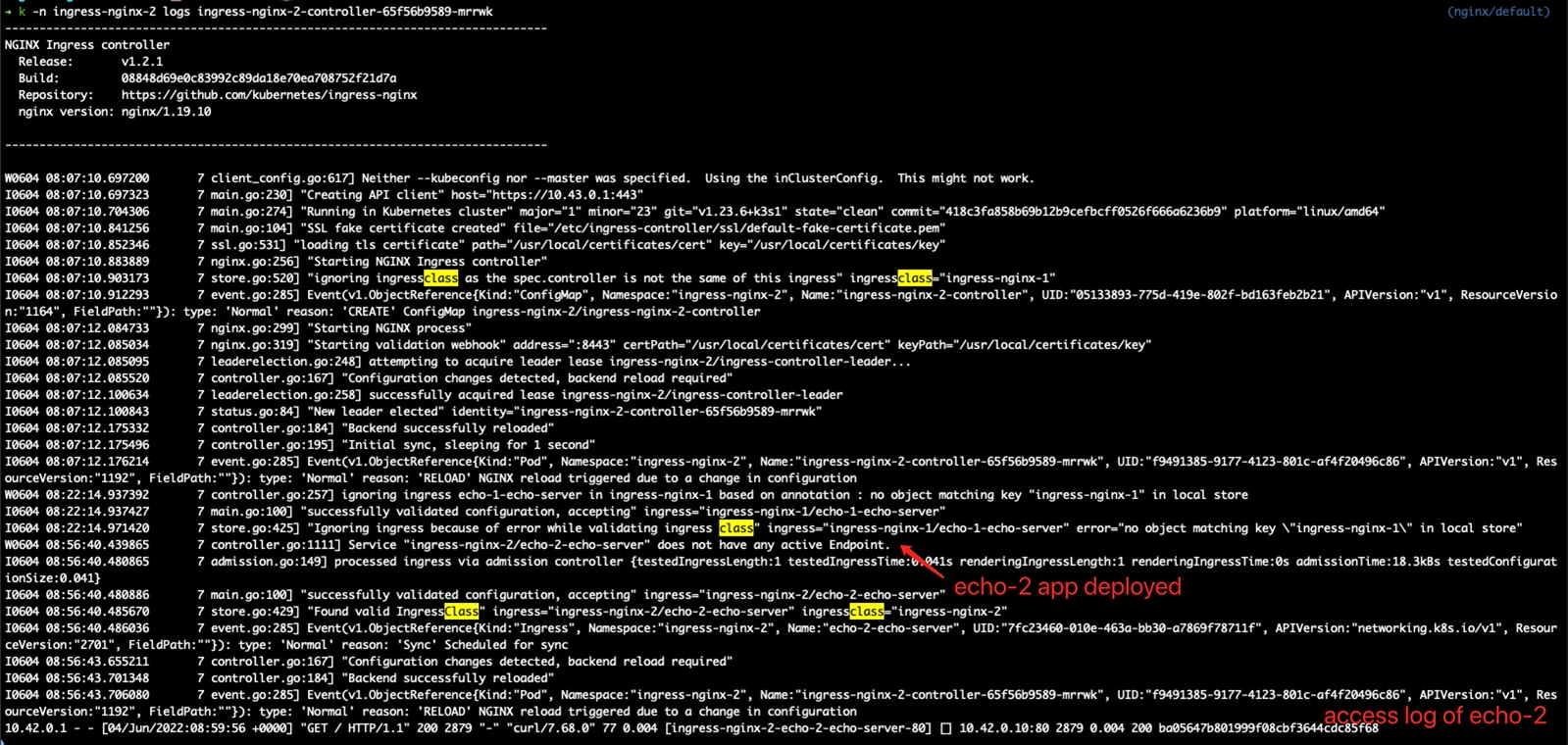

controller2:

create second-app-deployment, second-app-service etc and repeat kubectl describe

use same chart echo-server , install another instance to ingress-nginx-2 namespace with ingress class ingress-nginx-2

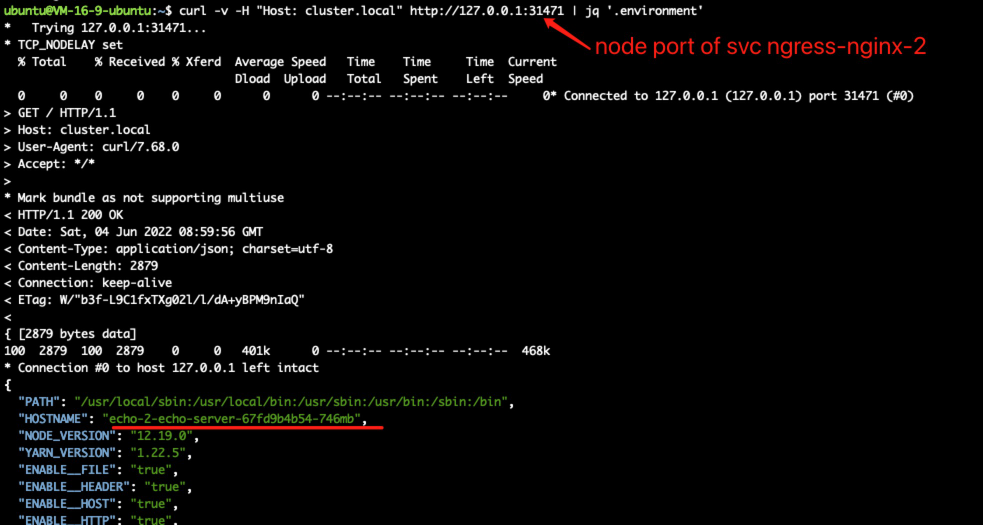

curl with -v:

show logs of controller1 pod and also controller2 pod

controller 1:

controller 2:

@jasine thank you very much for the information. It helps.

But I think I have second thoughts. Basically, I was hoping that you will include the ingress-nginx controller helm chart inside some other chart. I think it is not absolutely required now, but if you think its not too much trouble, then please delete what you have posted above. Then re-post the information, using the ingress-nginx controller inside another chart.

Also look at the list of commands in my precious post. I have edited it. Basically I don't see the second controller service port number and I see servicetype as LoadBalancer in pending state. So maybe this is kind cluster on macOS . I mean what you have posted is not 100% proof of chart inside chart with namespaceOverride and 2 working ingress objects with different IngressClass.

@longwuyuan

But I think I have second thoughts. Basically, I was hoping that you will include the ingress-nginx controller helm chart inside some other chart. I think it is not absolutely required now, but if you think its not too much trouble, then please delete what you have posted above. Then re-post the information, using the ingress-nginx controller inside another chart.

I have tested with private chart of my company, which use ingress-nginx controller with namespaceOverride inside another chart. It's really a little trouble to make a chart to do all the tests and snapshots again, I think as showed in my snapshot, helm install with namespaceOverride value set is convincible.

Also look at the list of commands in my precious post. I have edited it. Basically I don't see the second controller service port number

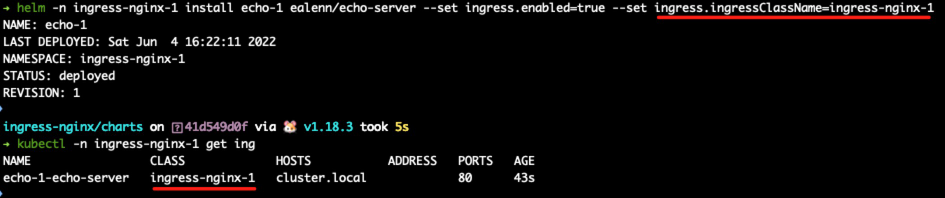

there is a snapshot of kubectl get all -n ingress-nginx-2 , you can find the second controller service node port is 31471, while the first controller service node port is 30859, my following test send curl request to these ports

and I see servicetype as LoadBalancer in pending state. So maybe this is kind cluster on macOS . I mean what you have posted is not 100% proof of chart inside chart with namespaceOverride and 2 working ingress objects with different IngressClass.

It's not on my mac, but on a cvm with a k3s installed, I did not installed any load balancer controller, so the state of loadBalancer is pending, and I think curl on each ingress-nginx controller's service node port is enough to prove both controllers are working as expected, and all the ingress-class works.

the environment for testing is kept, I can add your public ssh key so that you can login and check if necessary

@jasine thank you for the contribution and the information you have provided.

My thoughts are as follows ;

-

I think this is a improvement because you said several other charts support the

namespaceOverride. But the improvement is not demanded by a large enough number of users so it seems like a improvement just for your use case -

The changes are intrusive and from the perspective of changing 35 files, it is not a usual or normal change. I am not a developer and although I can understand the changes, a developer maintainer should review this. The goal is to know and predict impact. Or even to know if this the best way to introduce this feature

-

The timing of making this change is also better decided by maintainer developers

-

Personally, there is no example here that actually uses ingress-nginc chart inside another chart. So that means I am commenting on something that I have not even seen in action. If its possible for you to just pick a random chart (echo-server maybe as example) from https://artifacthub.io and then create a new chart that includes ingress-nginx controller chart, inside your new chart, then I would like to see that new chart-inside-chart installed 2 times on the same cluster and see the "curl" & "kubectl describe ..." etc here. But that is my personal view. Let us wait for reviews from other developer maintainers

-

This PR is only related to the project's helm-chart manifest but ingress-nginx has static yaml install manifests as well. So it means other install methods are not getting this feature and I don't even know if that is a factor

-

The repeated reference to multi-namespace deployments is very very odd because

multi-namespaceliterally means one instance of the controller in multiple-namespaces. So I think you should remove that phrasemulti-namespaceand use phrases likemultiple-installs-in-one-cluster -

Please comment if you have added any documentation by editing any README anywhere. I don't see any so asking. If you have not done so, then I suggest you please consider adding very detailed documentation

-

I don't see any tests in the changed files. Please comment if you have added any tests. I don't see any so asking. The test should definitely do "chart-inside-chart" install, 2 times in one single kind cluster. The tests for this project are under /tests

Beyond that, I will wait for advise/comments/sigestions from other developer maintainers

@longwuyuan

thanks for the long conversation, I think this is quiet a simple problem, although I have changed 35 files, they are just text match and replacement, I did not modify them one by one.

namespaceOverride is now in official helm template, it means every helm chart created has the code snippet added In my pr

I respect your serious attitude and concern, and let's wait for other developer maintainers opinion

thanks again

@jasine thanks for your contribution and thank you for patience with my comments.

Some seemingly simple and harmless changes were merged earlier and later created complications hence my comments. On a high level, if this chart was a like a simple webserver or a api, then a upstream feature from helm like e namespaceOverride would be a trivial change. But in my limited knowledge and awareness, I listed the concerns like only you asking for it and only one manifest (helm chart) changing but static yaml manifest not changing etc etc.

@longwuyuan it seems that no progress has made these days, can I do anything to help?

@jasine there is work ongoing that is higher priority than new features so when there is a window of opportunity, pending PRs get reviewed. There is not much for you to do to make progress. We just need to wait. Thanks for the contribution as it helps so much.

I would also like to see namespaceOverride added. I think the largest reason there isn't a lot of interest in this feature is that people just switch to a different chart. I've switched to bitnami's helm chart for ingress-nginx which already includes the namespaceOverride option. It would be nice if this helm chart would include the same.

When managing a set of dependencies for a large number of clusters, it's easier to package all of your dependencies into a single helm chart using subcharts.

Assessing the risk of the PR shouldn't be done based on the files touched. There are only ~50 changes, and all changes are identical when swapping references to .Release.Namespace

+1 for namespaceOverride

The Kubernetes project currently lacks enough contributors to adequately respond to all issues and PRs.

This bot triages issues and PRs according to the following rules:

- After 90d of inactivity,

lifecycle/staleis applied - After 30d of inactivity since

lifecycle/stalewas applied,lifecycle/rottenis applied - After 30d of inactivity since

lifecycle/rottenwas applied, the issue is closed

You can:

- Mark this issue or PR as fresh with

/remove-lifecycle stale - Mark this issue or PR as rotten with

/lifecycle rotten - Close this issue or PR with

/close - Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

/remove-lifecycle stale

+1 for namespaceOverride

+1 for namespaceOverride

@longwuyuan Since there are so many people with this need, can you consider merging my pull request?

I lost view on what the original problem reported was and what the current state of the user is.

But if its all about using ingress-nginx controller chart as a subchart to a parent chart, then I think someone submitted a PR to add namespace as a variable.

@longwuyuan I didn't find this configuration in the latest chart. Could you tell me which configuration it is, or which PR added this feature?

I have carefully reviewed the latest deployment file in the chart, and its value is still .Release.Namespace. This can't solve the issue of deploying as a subchart.

For such a simple and clear issue, I've provided the best practice from the official Helm community, and also offered the modified PR. I have tested and verified it in my production environment. I had over ten discussions with @longwuyuan , proving that this is a reasonable, low-risk requirement that should be adopted. Meanwhile, many people under this issue have expressed the same demand, and some even switched to unofficial solutions because this need is not supported.

I wonder if our community operators understand the technology, can they understand and hear the voices of users? I think such laissez-faire behavior seriously harms the enthusiasm of open-source participants and damages the reputation of the Linux Foundation. I am deeply disappointed about this.

Please tell me how I should participate and promote this feature in the face of such a situation.

@aledbf @strongjz @ChiefAlexander @cpanato

We have repeatedly mention to anyone with an issue to bring it to our attention is to attend the community meeting we hold every two weeks.

We are flooded with Github notification requests for reviews for issues and PRs. Our core maintainers are only three people on a volunteer basis.

@rikatz @tao12345666333 and myself spent a day and half yesterday to get the latest CVE fixes for 1.9.3 and 1.8.4 out.

I could not find mention of this PR in our slack channels, or the community notes document.

I apologize this issue and PR got overlooked, it is not easy maintaining a project as large as this, we do our best.

Our next meeting is October 26th at 11am eastern.

Thank you, James

We have repeatedly mention to anyone with an issue to bring it to our attention is to attend the community meeting we hold every two weeks.

We are flooded with Github notification requests for reviews for issues and PRs. Our core maintainers are only three people on a volunteer basis.

@rikatz @tao12345666333 and myself spent a day and half yesterday to get the latest CVE fixes for 1.9.3 and 1.8.4 out.

I could not find mention of this PR in our slack channels, or the community notes document.

I apologize this issue and PR got overlooked, it is not easy maintaining a project as large as this, we do our best.

Our next meeting is October 26th at 11am eastern.

Thank you, James

Sorry, I didn't try to communicate via Slack. Moving forward, I will prepare the Pull Request and then communicate on Slack.