Services randomly being assigned Ingress Controller's IP address

You can probably skip to the "Others" section at the bottom. I only included all the command output because the template requires it, and in case it helps.

NGINX Ingress controller version (exec into the pod and run `nginx-ingress-controller --version`):

```
$ POD_NAMESPACE=cert-manager
$ POD_NAME=$(kubectl get pods -n cert-manager -l app.kubernetes.io/name=ingress-nginx --field-selector=status.phase=Running -o jsonpath='{.items[0].metadata.name}')
$ kubectl exec -it $POD_NAME -n $POD_NAMESPACE -- /nginx-ingress-controller --version
-------------------------------------------------------------------------------
NGINX Ingress controller
  Release:       v1.1.0
  Build:         cacbee86b6ccc45bde8ffc184521bed3022e7dee
  Repository:    https://github.com/kubernetes/ingress-nginx
  nginx version: nginx/1.19.9
-------------------------------------------------------------------------------
```

Kubernetes version (use `kubectl version`):

```
$ kubectl version
Client Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.1", GitCommit:"632ed300f2c34f6d6d15ca4cef3d3c7073412212", GitTreeState:"clean", BuildDate:"2021-08-19T15:45:37Z", GoVersion:"go1.16.7", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.1", GitCommit:"632ed300f2c34f6d6d15ca4cef3d3c7073412212", GitTreeState:"clean", BuildDate:"2021-08-19T15:39:34Z", GoVersion:"go1.16.7", Compiler:"gc", Platform:"linux/amd64"}
```

Environment:

- Cloud provider or hardware configuration: Bare-metal
- OS (e.g. from /etc/os-release):

```
$ sudo cat /etc/os-release
NAME="Ubuntu"
VERSION="21.04 (Hirsute Hippo)"
ID=ubuntu
ID_LIKE=debian
PRETTY_NAME="Ubuntu 21.04"
VERSION_ID="21.04"
HOME_URL="https://www.ubuntu.com/"
SUPPORT_URL="https://help.ubuntu.com/"
BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
VERSION_CODENAME=hirsute
UBUNTU_CODENAME=hirsute
```

- Kernel (e.g. `uname -a`): `Linux k-m-001 5.11.0-31-generic #33-Ubuntu SMP Wed Aug 11 13:19:04 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux`
- Install tools: Manual

- Basic cluster related info:

```
$ kubectl get nodes -o wide
NAME      STATUS   ROLES                  AGE    VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
k-m-001   Ready    control-plane,master   247d   v1.22.1   10.7.50.11    <none>        Ubuntu 21.04         5.11.0-31-generic   docker://20.10.8
k-w-001   Ready    worker                 247d   v1.22.1   10.7.60.11    <none>        Ubuntu 21.04         5.11.0-49-generic   docker://20.10.8
k-w-002   Ready    worker                 247d   v1.22.1   10.7.60.12    <none>        Ubuntu 21.04         5.11.0-49-generic   docker://20.10.8
k-w-003   Ready    worker                 166d   v1.22.3   10.7.60.13    <none>        Ubuntu 20.04.2 LTS   5.4.0-109-generic   docker://20.10.10
```

- How was the ingress-nginx-controller installed:
  - If helm was used, then please show output of `helm ls -A | grep -i ingress`:

```
quickstart cert-manager 1 2021-12-18 10:10:59.81553352 +0000 UTC deployed ingress-nginx-4.0.13 1.1.0
```

  - If helm was used, then please show output of `helm -n <ingresscontrollernamespace> get values <helmreleasename>`:

```
$ helm -n cert-manager get values cert-manager
USER-SUPPLIED VALUES:
null
```

- Current state of the controller:
  - `kubectl describe ingressclasses`:

```
$ kubectl describe ingressclasses
Name:         nginx
Labels:       app.kubernetes.io/component=controller
              app.kubernetes.io/instance=quickstart
              app.kubernetes.io/managed-by=Helm
              app.kubernetes.io/name=ingress-nginx
              app.kubernetes.io/version=1.1.0
              helm.sh/chart=ingress-nginx-4.0.13
Annotations:  meta.helm.sh/release-name: quickstart
              meta.helm.sh/release-namespace: cert-manager
Controller:   k8s.io/ingress-nginx
Events:       <none>
```

  - `kubectl -n <ingresscontrollernamespace> get all -A -o wide`: Far too many to list (in the 100s).
  - `kubectl -n <ingresscontrollernamespace> describe po <ingresscontrollerpodname>`:

```
Name:         quickstart-ingress-nginx-controller-54f6f89679-6jpnj
Namespace:    cert-manager
Priority:     0
Node:         k-s-002/10.7.61.12
Start Time:   Tue, 03 May 2022 00:43:01 +0000
Labels:       app.kubernetes.io/component=controller
              app.kubernetes.io/instance=quickstart
              app.kubernetes.io/name=ingress-nginx
              pod-template-hash=54f6f89679
Annotations:  <none>
Status:       Running
IP:           10.244.6.208
IPs:
  IP:           10.244.6.208
Controlled By:  ReplicaSet/quickstart-ingress-nginx-controller-54f6f89679
Containers:
  controller:
    Container ID:  docker://f709f5d0456a6e67b948ab431266482cf7b3cd2c60344f0656ce5e8d36804fd5
    Image:         k8s.gcr.io/ingress-nginx/controller:v1.1.0@sha256:f766669fdcf3dc26347ed273a55e754b427eb4411ee075a53f30718b4499076a
    Image ID:      docker-pullable://k8s.gcr.io/ingress-nginx/controller@sha256:f766669fdcf3dc26347ed273a55e754b427eb4411ee075a53f30718b4499076a
    Ports:         80/TCP, 443/TCP, 8443/TCP
    Host Ports:    0/TCP, 0/TCP, 0/TCP
    Args:
      /nginx-ingress-controller
      --publish-service=$(POD_NAMESPACE)/quickstart-ingress-nginx-controller
      --election-id=ingress-controller-leader
      --controller-class=k8s.io/ingress-nginx
      --configmap=$(POD_NAMESPACE)/quickstart-ingress-nginx-controller
      --validating-webhook=:8443
      --validating-webhook-certificate=/usr/local/certificates/cert
      --validating-webhook-key=/usr/local/certificates/key
    State:          Running
      Started:      Tue, 03 May 2022 00:43:04 +0000
    Ready:          True
    Restart Count:  0
    Requests:
      cpu:      100m
      memory:   90Mi
    Liveness:   http-get http://:10254/healthz delay=10s timeout=1s period=10s #success=1 #failure=5
    Readiness:  http-get http://:10254/healthz delay=10s timeout=1s period=10s #success=1 #failure=3
    Environment:
      POD_NAME:       quickstart-ingress-nginx-controller-54f6f89679-6jpnj (v1:metadata.name)
      POD_NAMESPACE:  cert-manager (v1:metadata.namespace)
      LD_PRELOAD:     /usr/local/lib/libmimalloc.so
    Mounts:
      /usr/local/certificates/ from webhook-cert (ro)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-w29qp (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  webhook-cert:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  quickstart-ingress-nginx-admission
    Optional:    false
  kube-api-access-w29qp:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:       Burstable
Node-Selectors:  kubernetes.io/os=linux
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:          <none>
```

  - `kubectl -n <ingresscontrollernamespace> describe svc <ingresscontrollerservicename>`:

```
Name:                     quickstart-ingress-nginx-controller
Namespace:                cert-manager
Labels:                   app=ingress-nginx
                          app.kubernetes.io/component=controller
                          app.kubernetes.io/instance=quickstart
                          app.kubernetes.io/managed-by=Helm
                          app.kubernetes.io/name=ingress-nginx
                          app.kubernetes.io/version=1.1.0
                          helm.sh/chart=ingress-nginx-4.0.13
Annotations:              meta.helm.sh/release-name: quickstart
                          meta.helm.sh/release-namespace: cert-manager
Selector:                 app.kubernetes.io/component=controller,app.kubernetes.io/instance=quickstart,app.kubernetes.io/name=ingress-nginx
Type:                     LoadBalancer
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.99.248.72
IPs:                      10.99.248.72
IP:                       10.7.9.10
LoadBalancer Ingress:     10.7.9.10
Port:                     nginx-http  80/TCP
TargetPort:               http/TCP
NodePort:                 nginx-http  30756/TCP
Endpoints:                10.244.6.208:80
Port:                     nginx-https  443/TCP
TargetPort:               https/TCP
NodePort:                 nginx-https  31070/TCP
Endpoints:                10.244.6.208:443
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>

Name:              quickstart-ingress-nginx-controller-admission
Namespace:         cert-manager
Labels:            app.kubernetes.io/component=controller
                   app.kubernetes.io/instance=quickstart
                   app.kubernetes.io/managed-by=Helm
                   app.kubernetes.io/name=ingress-nginx
                   app.kubernetes.io/version=1.1.0
                   helm.sh/chart=ingress-nginx-4.0.13
Annotations:       meta.helm.sh/release-name: quickstart
                   meta.helm.sh/release-namespace: cert-manager
Selector:          app.kubernetes.io/component=controller,app.kubernetes.io/instance=quickstart,app.kubernetes.io/name=ingress-nginx
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.98.183.213
IPs:               10.98.183.213
Port:              https-webhook  443/TCP
TargetPort:        webhook/TCP
Endpoints:         10.244.6.208:8443
Session Affinity:  None
Events:            <none>
```

- Current state of ingress object, if applicable:
  - `kubectl -n <appnamespace> get all,ing -o wide`:

```
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
pod/cert-manager-55658cdf68-2q9zj 1/1 Running 4 (19h ago) 35h 10.244.2.71 k-w-002 <none> <none>
pod/cert-manager-cainjector-967788869-mgsgn 1/1 Running 6 (19h ago) 35h 10.244.2.70 k-w-002 <none> <none>
pod/cert-manager-webhook-6668fbb57d-xz44s 1/1 Running 2 (19h ago) 35h 10.244.1.158 k-w-001 <none> <none>
pod/nginx-rp-7bf4f94459-lzrrv 1/1 Running 6 (4h16m ago) 4h20m 10.244.2.89 k-w-002 <none> <none>
pod/nginx-rp-deployment-restart-27521760--1-fvcwx 0/1 Completed 0 2d20h <none> k-w-001 <none> <none>
pod/nginx-rp-deployment-restart-27523200--1-4kwfb 0/1 Completed 0 44h <none> k-w-002 <none> <none>
pod/nginx-rp-deployment-restart-27524640--1-fcf57 0/1 Completed 0 20h <none> k-w-001 <none> <none>
pod/quickstart-ingress-nginx-controller-54f6f89679-6jpnj 1/1 Running 0 4h7m 10.244.6.208 k-s-002 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
service/cert-manager ClusterIP 10.108.25.240 <none> 9402/TCP 135d app.kubernetes.io/component=controller,app.kubernetes.io/instance=cert-manager,app.kubernetes.io/name=cert-manager
service/cert-manager-webhook ClusterIP 10.105.231.106 <none> 443/TCP 135d app.kubernetes.io/component=webhook,app.kubernetes.io/instance=cert-manager,app.kubernetes.io/name=webhook
service/nginx-rp LoadBalancer 10.108.72.63 10.7.9.11 80:31640/TCP,443:32154/TCP 134d app=nginx-rp
service/quickstart-ingress-nginx-controller LoadBalancer 10.99.248.72 10.7.9.10 80:30756/TCP,443:31070/TCP 135d app.kubernetes.io/component=controller,app.kubernetes.io/instance=quickstart,app.kubernetes.io/name=ingress-nginx
service/quickstart-ingress-nginx-controller-admission ClusterIP 10.98.183.213 <none> 443/TCP 135d app.kubernetes.io/component=controller,app.kubernetes.io/instance=quickstart,app.kubernetes.io/name=ingress-nginx

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR
deployment.apps/cert-manager 1/1 1 1 135d cert-manager quay.io/jetstack/cert-manager-controller:v1.6.1 app.kubernetes.io/component=controller,app.kubernetes.io/instance=cert-manager,app.kubernetes.io/name=cert-manager
deployment.apps/cert-manager-cainjector 1/1 1 1 135d cert-manager quay.io/jetstack/cert-manager-cainjector:v1.6.1 app.kubernetes.io/component=cainjector,app.kubernetes.io/instance=cert-manager,app.kubernetes.io/name=cainjector
deployment.apps/cert-manager-webhook 1/1 1 1 135d cert-manager quay.io/jetstack/cert-manager-webhook:v1.6.1 app.kubernetes.io/component=webhook,app.kubernetes.io/instance=cert-manager,app.kubernetes.io/name=webhook
deployment.apps/nginx-rp 1/1 1 1 134d nginx-rp nginx:1.15-alpine app=nginx-rp
deployment.apps/quickstart-ingress-nginx-controller 1/1 1 1 135d controller k8s.gcr.io/ingress-nginx/controller:v1.1.0@sha256:f766669fdcf3dc26347ed273a55e754b427eb4411ee075a53f30718b4499076a app.kubernetes.io/component=controller,app.kubernetes.io/instance=quickstart,app.kubernetes.io/name=ingress-nginx

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR
replicaset.apps/cert-manager-55658cdf68 1 1 1 135d cert-manager quay.io/jetstack/cert-manager-controller:v1.6.1 app.kubernetes.io/component=controller,app.kubernetes.io/instance=cert-manager,app.kubernetes.io/name=cert-manager,pod-template-hash=55658cdf68
replicaset.apps/cert-manager-cainjector-967788869 1 1 1 135d cert-manager quay.io/jetstack/cert-manager-cainjector:v1.6.1 app.kubernetes.io/component=cainjector,app.kubernetes.io/instance=cert-manager,app.kubernetes.io/name=cainjector,pod-template-hash=967788869
replicaset.apps/cert-manager-webhook-6668fbb57d 1 1 1 135d cert-manager quay.io/jetstack/cert-manager-webhook:v1.6.1 app.kubernetes.io/component=webhook,app.kubernetes.io/instance=cert-manager,app.kubernetes.io/name=webhook,pod-template-hash=6668fbb57d
replicaset.apps/nginx-rp-544fbb46b9 0 0 0 44h nginx-rp nginx:1.15-alpine app=nginx-rp,pod-template-hash=544fbb46b9
replicaset.apps/nginx-rp-6bccf57c4d 0 0 0 2d20h nginx-rp nginx:1.15-alpine app=nginx-rp,pod-template-hash=6bccf57c4d
replicaset.apps/nginx-rp-779f99ff44 0 0 0 4d20h nginx-rp nginx:1.15-alpine app=nginx-rp,pod-template-hash=779f99ff44
replicaset.apps/nginx-rp-7bf4f94459 1 1 1 20h nginx-rp nginx:1.15-alpine app=nginx-rp,pod-template-hash=7bf4f94459
replicaset.apps/nginx-rp-86c6f499c 0 0 0 3d20h nginx-rp nginx:1.15-alpine app=nginx-rp,pod-template-hash=86c6f499c
replicaset.apps/nginx-rp-bc4dc6b6 0 0 0 5d20h nginx-rp nginx:1.15-alpine app=nginx-rp,pod-template-hash=bc4dc6b6
replicaset.apps/quickstart-ingress-nginx-controller-54f6f89679 1 1 1 135d controller k8s.gcr.io/ingress-nginx/controller:v1.1.0@sha256:f766669fdcf3dc26347ed273a55e754b427eb4411ee075a53f30718b4499076a app.kubernetes.io/component=controller,app.kubernetes.io/instance=quickstart,app.kubernetes.io/name=ingress-nginx,pod-template-hash=54f6f89679

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE CONTAINERS IMAGES SELECTOR
cronjob.batch/nginx-ingress-deployment-restart 1 * 16 * * False 0 16d 74d kubectl bitnami/kubectl <none>
cronjob.batch/nginx-rp-deployment-restart 0 8 * * * False 0 20h 98d kubectl bitnami/kubectl <none>

NAME COMPLETIONS DURATION AGE CONTAINERS IMAGES SELECTOR
job.batch/nginx-ingress-deployment-restart-27502501 0/1 16d 16d kubectl bitnami/kubectl controller-uid=69fa6527-2eaf-48e0-8db1-55f0b3675227
job.batch/nginx-rp-deployment-restart-27418080 0/1 74d 74d kubectl bitnami/kubectl controller-uid=47afeda1-07de-403c-9cbc-6b53535b8487
job.batch/nginx-rp-deployment-restart-27521760 1/1 5s 2d20h kubectl bitnami/kubectl controller-uid=d88d31be-e6a6-4052-9de0-249623ea94c6
job.batch/nginx-rp-deployment-restart-27523200 1/1 7s 44h kubectl bitnami/kubectl controller-uid=4376318d-aa70-49cd-b9d0-a630b7dd9162
job.batch/nginx-rp-deployment-restart-27524640 1/1 4s 20h kubectl bitnami/kubectl controller-uid=e0706404-63bf-4aca-946d-a54053cae0c9

NAME CLASS HOSTS ADDRESS PORTS AGE
ingress.networking.k8s.io/nginx-ingress <none> XXXX.XXX 10.7.9.10 80, 443 134d
```

  - `kubectl -n <appnamespace> describe ing <ingressname>`:

```
$ kubectl -n cert-manager describe ing nginx-ingress
Name:             nginx-ingress
Namespace:        cert-manager
Address:          10.7.9.10
Default backend:  default-http-backend:80 (<error: endpoints "default-http-backend" not found>)
TLS:
  XXXX.XXX-tls-cert terminates XXXX.XXX
Rules:
  Host        Path  Backends
  ----        ----  --------
  XXXX.XXX
              /(.*)   nginx-rp:80 (10.244.2.89:80)
Annotations:  cert-manager.io/acme-challenge-type: http01
              cert-manager.io/issuer: letsencrypt-prod
              kubernetes.io/ingress.class: nginx
              nginx.ingress.kubernetes.io/rewrite-target: /$1
Events:       <none>
```

- If applicable, your complete and exact curl/grpcurl command (redacted if required) and the response to the curl/grpcurl command with the `-v` flag:

Others:

My ingress rules:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  labels:
    app: nginx-ingress
    namespace: cert-manager
  annotations:
    cert-manager.io/acme-challenge-type: http01
    kubernetes.io/ingress.class: nginx
    cert-manager.io/issuer: "letsencrypt-prod"
    nginx.ingress.kubernetes.io/rewrite-target: /$1
  name: nginx-ingress
  namespace: cert-manager
spec:
  rules:
    - host: XXXX.XXX
      http:
        paths:
          - path: /(.*)
            pathType: Prefix
            backend:
              service:
                name: nginx-rp
                port:
                  number: 80
  tls:
    - hosts:
        - XXXX.XXX
      secretName: XXXX.XXX-tls-cert
```

What happened: The cluster had been running fine for about the last 6 months. I have a cronjob that restarts my nodes at the start of each month, and my nginx proxies and ingress controllers every few days. After it restarted earlier today, many things started to break. Sometimes accessing sites and services on my cluster works fine; other times requests get stuck in redirect loops or return 500, 502 or 404 errors. I spent hours troubleshooting, including adding custom error pages to my reverse proxies to figure out which one was causing the errors (since they happened randomly). That showed an nginx pod was issuing 308 redirects, and none of mine are configured to do that. Checking the logs inside the ingress pod showed that it was the culprit returning all the error codes. Bypassing the ingress controller and hitting my services directly (or going through my other nginx reverse proxies) works fine with no issues.

What you expected to happen: I'm not sure whether there was an update or something, but it has basically stopped working since the cluster was restarted.

What do you think went wrong?

No idea. I haven't touched the cluster in months, and it has been restarting fine. Maybe some update in the last 4 weeks?

How to reproduce it:

Honestly, I don't know why this is happening all of a sudden, and I'm not sure how to reproduce it on another cluster, short of handing over my entire K8s cluster.

@Slyke: This issue is currently awaiting triage.

If Ingress contributors determine this is a relevant issue, they will accept it by applying the triage/accepted label and provide further guidance.

The triage/accepted label can be added by org members by writing /triage accepted in a comment.

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.

@Slyke, can you share the logs from the ingress pod that caused the error?

Hey, sure, here they are:

From the ingress-nginx pod (handles HTTPS, certs, etc.):

```
10.244.1.0 - - [03/May/2022:05:45:27 +0000] "GET / HTTP/1.1" 308 164 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" 1824 0.000 [cert-manager-nginx-rp-80] [] - - - - e470303cd44aa808197335678d4ecd78
10.244.6.1 - - [03/May/2022:05:45:27 +0000] "GET /motioneye/ HTTP/2.0" 308 164 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" 110 0.074 [cert-manager-nginx-rp-80] [] 10.244.2.89:80 164 0.076 308 e470303cd44aa808197335678d4ecd78
10.244.6.1 - - [03/May/2022:05:45:27 +0000] "GET / HTTP/2.0" 200 194 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" 41 0.002 [cert-manager-nginx-rp-80] [] 10.244.2.89:80 194 0.000 200 6456f697aff0ef9776689ba0bce72f81
10.244.6.1 - - [03/May/2022:05:45:27 +0000] "GET /favicon.ico HTTP/2.0" 200 194 "https://XXXX.XXX/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" 122 0.002 [cert-manager-nginx-rp-80] [] 10.244.2.89:80 194 0.004 200 9628a34354b1376121752f11fd152cee
10.244.6.1 - - [03/May/2022:05:45:53 +0000] "GET /grafana/d/1zTDHnhnz/furnace-monitor?orgId=1&refresh=30s&from=now-12h&to=now HTTP/2.0" 404 548 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" 97 0.044 [cert-manager-nginx-rp-80] [] 10.244.2.89:80 548 0.044 404 fe9e0abface9715fb72bf6c0405a680f
10.244.6.1 - - [03/May/2022:05:45:53 +0000] "GET /favicon.ico HTTP/2.0" 200 194 "https://XXXX.XXX/grafana/d/1zTDHnhnz/furnace-monitor?orgId=1&refresh=30s&from=now-12h&to=now" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" 119 0.002 [cert-manager-nginx-rp-80] [] 10.244.2.89:80 194 0.000 200 b4493c9179dceed266fe5e7ebcdfd78d
```
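To tell which hop produced a given status code, the upstream fields at the end of each controller log line are the useful part. The sketch below assumes the controller still uses the default ingress-nginx `log-format-upstream` (not customized in the ConfigMap); `LOG_RE` and `who_answered` are hypothetical helpers, not part of ingress-nginx. A `-` in `$upstream_addr` means no upstream was contacted, so the controller answered by itself; an address means the status was relayed from that upstream.

```python
import re
from typing import Optional, Tuple

# Assumed: the default ingress-nginx access-log format (log-format-upstream):
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent
# "$http_referer" "$http_user_agent" $request_length $request_time
# [$proxy_upstream_name] [$proxy_alternative_upstream_name] $upstream_addr
# $upstream_response_length $upstream_response_time $upstream_status $req_id
LOG_RE = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes>\d+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)" '
    r'(?P<request_length>\d+) (?P<request_time>\S+) '
    r'\[(?P<upstream_name>[^\]]*)\] \[(?P<alt_upstream_name>[^\]]*)\] '
    r'(?P<upstream_addr>\S+) (?P<upstream_length>\S+) '
    r'(?P<upstream_time>\S+) (?P<upstream_status>\S+) (?P<req_id>\S+)$'
)


def who_answered(line: str) -> Optional[Tuple[int, str]]:
    """Return (status, source) for one access-log line, or None if it doesn't parse.

    source is "controller" when no upstream was contacted, otherwise
    "upstream <addr> (<upstream_status>)".
    """
    m = LOG_RE.match(line.strip())
    if m is None:
        return None
    status = int(m["status"])
    if m["upstream_addr"] == "-":
        return status, "controller"
    return status, f'upstream {m["upstream_addr"]} ({m["upstream_status"]})'
```

Applied to the log lines above, the plain-HTTP request for `/` (no upstream) was answered by the controller itself, most likely its default HTTP-to-HTTPS redirect, while the 308 for `/motioneye/` was relayed from `10.244.2.89:80`, which is the `nginx-rp` pod (RP1). Requests retried across several upstreams log comma-separated addresses, which this simple pattern does not handle.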

From the reverse proxy that the ingress pod hands the request to (since it needs to be in the same namespace as the ingress pod), aka RP1:

```
10.244.6.208 - - [03/May/2022:05:45:27 +0000] "GET /motioneye/ HTTP/1.1" 308 164 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" "10.244.6.1"
10.244.6.208 - - [03/May/2022:05:45:27 +0000] "GET / HTTP/1.1" 200 194 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" "10.244.6.1"
10.244.6.208 - - [03/May/2022:05:45:27 +0000] "GET /favicon.ico HTTP/1.1" 200 194 "https://XXXX.XXX/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" "10.244.6.1"
10.244.2.1 - - [03/May/2022:05:45:35 +0000] "GET /health HTTP/1.1" 200 11 "-" "kube-probe/1.22" "-"
10.244.2.1 - - [03/May/2022:05:45:45 +0000] "GET /health HTTP/1.1" 200 11 "-" "kube-probe/1.22" "-"
10.244.6.208 - - [03/May/2022:05:45:53 +0000] "GET /grafana/d/1zTDHnhnz/furnace-monitor?orgId=1&refresh=30s&from=now-12h&to=now HTTP/1.1" 404 548 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" "10.244.6.1"
10.244.6.208 - - [03/May/2022:05:45:53 +0000] "GET /favicon.ico HTTP/1.1" 200 194 "https://XXXX.XXX/grafana/d/1zTDHnhnz/furnace-monitor?orgId=1&refresh=30s&from=now-12h&to=now" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" "10.244.6.1"
```

Config:

```nginx
location ~/(.*)$ {
    proxy_pass http://nginx-rp.injester.svc.cluster.local;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header Host $host;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Scheme $scheme;
    proxy_redirect http:// $scheme://;
    proxy_set_header X-Forwarded-Proto $scheme;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-Host $http_host;
    proxy_set_header X-Forwarded-Uri $request_uri;
    proxy_pass_header X-Transmission-Session-Id;

    # For websockets
    proxy_http_version 1.1;
    proxy_set_header Connection $connection_upgrade;
    proxy_set_header Upgrade $http_upgrade;
    proxy_cache_bypass $http_upgrade;
    proxy_set_header X-Real-IP $remote_addr;
}
```

From the second reverse proxy, which handles authentication, URL rewrites, etc. (aka RP2):

```
10.244.2.89 - - [03/May/2022:05:45:27 +0000] "GET /favicon.ico HTTP/1.1" 200 194 "https://XXXX.XXX/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" "10.244.6.1, 10.244.6.208"
10.244.2.89 - - [03/May/2022:05:45:53 +0000] "GET /favicon.ico HTTP/1.1" 200 194 "https://XXXX.XXX/grafana/d/1zTDHnhnz/furnace-monitor?orgId=1&refresh=30s&from=now-12h&to=now" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36" "10.244.6.1, 10.244.6.208"
```

Config:

```nginx
location ~/grafana/?(.*)?$ {
    proxy_pass http://grafana.home.svc.cluster.local;
    rewrite /grafana/(.*) /$1 break;

    # Websockets
    proxy_set_header Connection $connection_upgrade;
    proxy_set_header Upgrade $http_upgrade;
    proxy_cache_bypass $http_upgrade;
    proxy_set_header X-Real-IP $remote_addr;

    # Grafana specific
    proxy_max_temp_file_size 0;
    include conf.d/proxy.config;
    include conf.d/auth.config;
}

location ~/motioneye/?(.*)?$ {
    if ($request_uri ~ /motioneye$ ) {
        add_header Content-Type text/html;
        return 200 '<meta http-equiv="refresh" content="0; URL=$uri/" />';
    }

    proxy_pass http://motioneye.home.svc.cluster.local;
    rewrite /motioneye/(.*) /$1 break;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header Host $host;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    include conf.d/proxy.config;
    include conf.d/auth.config;
}
```
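To check which path RP2 should forward to Grafana and motionEye, the `rewrite ... break;` lines can be approximated in a few lines of Python. This is a simplified model under stated assumptions, not nginx itself: it relies on nginx replacing the whole URI (not just the matched part) when a `rewrite` regex matches anywhere in it, keeps the original query string, and ignores PCRE-versus-Python regex differences. `nginx_rewrite` and `forwarded_path` are hypothetical names.

```python
import re


def nginx_rewrite(path: str, regex: str, replacement: str) -> str:
    """Approximate nginx `rewrite regex replacement break;` on a path (no query).

    The regex is searched unanchored; on a match the *entire* path is replaced,
    with $1..$9 expanded from the captures (unmatched groups become "").
    Assumes the replacement only references groups that exist in the regex.
    """
    m = re.search(regex, path)
    if m is None:
        return path
    return re.sub(r"\$(\d)", lambda ref: m.group(int(ref.group(1))) or "", replacement)


def forwarded_path(request_uri: str, regex: str, replacement: str) -> str:
    """Rewrite the path part and re-append the original query string unchanged."""
    path, sep, query = request_uri.partition("?")
    return nginx_rewrite(path, regex, replacement) + sep + query


if __name__ == "__main__":
    uri = "/grafana/d/1zTDHnhnz/furnace-monitor?orgId=1&refresh=30s&from=now-12h&to=now"
    print(forwarded_path(uri, r"/grafana/(.*)", "/$1"))
    # -> /d/1zTDHnhnz/furnace-monitor?orgId=1&refresh=30s&from=now-12h&to=now
    print(forwarded_path("/motioneye/", r"/motioneye/(.*)", "/$1"))  # -> /
```

So a working dashboard request should reach Grafana as `/d/1zTDHnhnz/furnace-monitor?...`. In the RP2 log excerpt above, only `/favicon.ico` requests appear and the Grafana request does not, which suggests the 404 the browser saw was produced before the request reached RP2.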

When navigating to XXXX.XXX/motioneye, it gets redirected with a 308 to XXXX.XXX/, and this sometimes loops infinitely. Navigating to XXXX.XXX/grafana/* returns 404. These services can be accessed if I point XXXX.XXX at the reverse proxy's IP in my DNS (using MetalLB as the load balancer).

I should also mention that my 2 reverse proxies have custom error pages:

```nginx
error_page 404 /custom_404.html;
location = /custom_404.html {
    internal;
    return 404 "RP2: 404 page - resource not found";
}

error_page 500 /custom_500.html;
location = /custom_500.html {
    internal;
    return 500 "RP2: 500 page - internal server error";
}

error_page 502 /custom_502.html;
location = /custom_502.html {
    internal;
    return 502 "RP2: 502 page - bad gateway";
}

error_page 503 /custom_503.html;
location = /custom_503.html {
    internal;
    return 503 "RP2: 503 page - service temporarily unavailable";
}

error_page 400 /custom_400.html;
location = /custom_400.html {
    internal;
    return 400 "RP2: 400 page - bad request";
}
```

The 404s, 500s, 502s, etc. I'm seeing are:

/remove-kind bug
/kind support

Here it is with XXXX.XXX pointed at 10.7.9.11 (RP1, the reverse proxy that ingress-nginx talks to) in my LAN DNS:

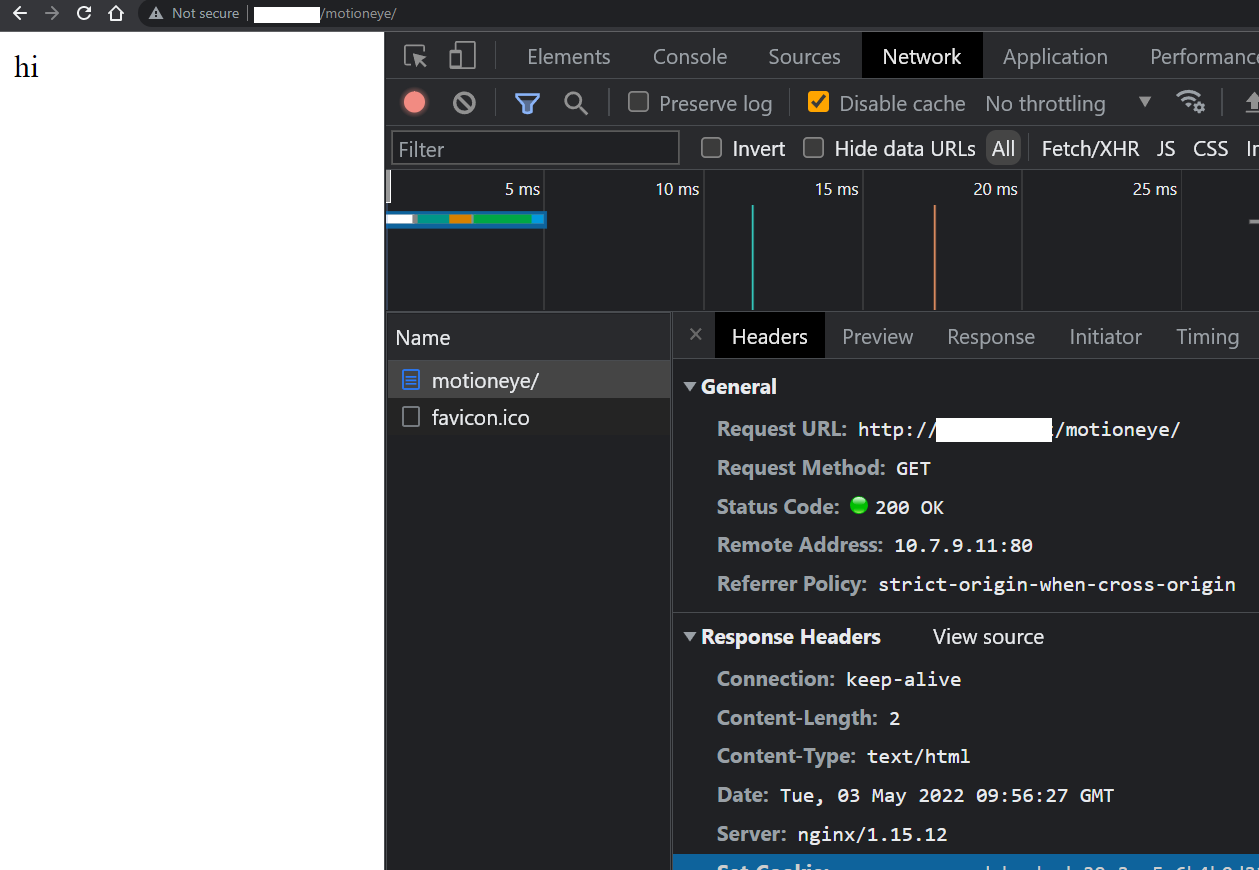

I just changed the motioneye location on the second (innermost) reverse proxy to:

```nginx
location ~/motioneye/?(.*)?$ {
    if ($request_uri ~ /motioneye$ ) {
        add_header Content-Type text/html;
        return 200 '<meta http-equiv="refresh" content="0; URL=$uri/" />';
    }

    add_header Content-Type text/html;
    return 200 'hi';

    proxy_pass http://motioneye.home.svc.cluster.local;
    rewrite /motioneye/(.*) /$1 break;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header Host $host;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    include conf.d/proxy.config;
    include conf.d/auth.config;
}
```

The only other thing between the browser and RP1 is ingress-nginx.

After a lot of investigation, I've discovered that my services sometimes resolve to the LAN IP of the NGINX Ingress controller.

These commands were executed a few seconds apart:

```
/ $ ping authelia.injester.svc.cluster.local
PING authelia.injester.svc.cluster.local (10.7.9.10): 56 data bytes
ping: permission denied (are you root?)
/ $ ping motioneye.home.svc.cluster.local
PING motioneye.home.svc.cluster.local (10.105.38.36): 56 data bytes
ping: permission denied (are you root?)
/ $ ping motioneye.home.svc.cluster.local
PING motioneye.home.svc.cluster.local (10.7.9.10): 56 data bytes
/ $ ping authelia.injester.svc.cluster.local
PING authelia.injester.svc.cluster.local (10.108.82.103): 56 data bytes
ping: permission denied (are you root?)
/ $ ping authelia.injester.svc.cluster.local
PING authelia.injester.svc.cluster.local (10.7.9.10): 56 data bytes
ping: permission denied (are you root?)
/ $ ping authelia.injester.svc.cluster.local
PING authelia.injester.svc.cluster.local (10.7.9.10): 56 data bytes
ping: permission denied (are you root?)
```

Every time I press Up then Enter, I can see the IP change.
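Because `ping` fails here for lack of root and shows only one answer per run, a small loop can make the flapping easier to measure. This is a sketch assuming Python 3 is available in some pod that uses the cluster DNS; `socket.gethostbyname` goes through that pod's `/etc/resolv.conf`, as `ping` does. `SERVICE_CIDR` is an assumption (kubeadm's default `10.96.0.0/12`, which does contain every ClusterIP shown in this issue), and `tally_answers` / `outside_service_cidr` are hypothetical helper names.

```python
import ipaddress
import socket
from collections import Counter
from typing import Callable, Iterable, List

# Assumption: kubeadm's default Service CIDR. Every ClusterIP in this issue
# (10.98.x, 10.99.x, 10.105.x, 10.108.x) falls inside it, but check the
# API server's --service-cluster-ip-range on your own cluster.
SERVICE_CIDR = ipaddress.ip_network("10.96.0.0/12")


def system_resolve(name: str) -> str:
    """Resolve through the local libc resolver (uses /etc/resolv.conf)."""
    return socket.gethostbyname(name)


def tally_answers(name: str, attempts: int = 20,
                  resolve: Callable[[str], str] = system_resolve) -> Counter:
    """Resolve `name` repeatedly and count how often each address comes back."""
    return Counter(resolve(name) for _ in range(attempts))


def outside_service_cidr(answers: Iterable[str]) -> List[str]:
    """Return the distinct answers that are not in the Service CIDR, sorted."""
    return sorted({ip for ip in answers if ipaddress.ip_address(ip) not in SERVICE_CIDR})
```

For example, `outside_service_cidr(tally_answers("authelia.injester.svc.cluster.local"))` should return an empty list if every answer is a ClusterIP; seeing `10.7.9.10` in it would reproduce the problem without needing `ping`.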

The 10.7.9.10 IP address is from:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: ingress-nginx
  name: quickstart-ingress-nginx-controller
  namespace: cert-manager
spec:
  ports:
    - name: nginx-http
      protocol: TCP
      port: 80
    - name: nginx-https
      protocol: TCP
      port: 443
  loadBalancerIP: 10.7.9.10
  type: LoadBalancer
```
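To check whether any Service other than this one is also reporting `10.7.9.10`, one option is to group every Service's load-balancer address. The sketch below assumes the JSON printed by `kubectl get svc -A -o json` has been saved somewhere; `lb_ip_owners` and `shared_ips` are hypothetical helpers. An empty `shared_ips(...)` result means no two Services report the same address (MetalLB only shares an IP between Services deliberately, when they carry the `metallb.universe.tf/allow-shared-ip` annotation).

```python
import json
from collections import defaultdict
from typing import Dict, List


def lb_ip_owners(svc_list_json: str) -> Dict[str, List[str]]:
    """Map each LoadBalancer ingress IP to the "namespace/name" of every Service reporting it.

    Input is the JSON printed by `kubectl get svc -A -o json`.
    """
    owners: Dict[str, List[str]] = defaultdict(list)
    for item in json.loads(svc_list_json).get("items", []):
        meta = item.get("metadata", {})
        key = f'{meta.get("namespace", "")}/{meta.get("name", "")}'
        lb = item.get("status", {}).get("loadBalancer", {})
        for entry in lb.get("ingress") or []:  # ClusterIP Services have none
            if entry.get("ip"):
                owners[entry["ip"]].append(key)
    return dict(owners)


def shared_ips(owners: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Keep only the IPs claimed by more than one Service."""
    return {ip: names for ip, names in owners.items() if len(names) > 1}
```

Usage sketch: `print(shared_ips(lb_ip_owners(open("svcs.json").read())))`. Note that `motioneye` is a plain ClusterIP Service, so MetalLB never assigns it an address at all; its occasional `10.7.9.10` answer suggests the wrong IP is coming from name resolution rather than from load-balancer allocation.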

Here are both Services described:

```
$ kubectl describe svc -n injester authelia
Name:                     authelia
Namespace:                injester
Labels:                   app=authelia
Annotations:              <none>
Selector:                 app=authelia
Type:                     LoadBalancer
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.108.82.103
IPs:                      10.108.82.103
IP:                       10.7.9.70
LoadBalancer Ingress:     10.7.9.70
Port:                     authelia-tcp  9091/TCP
TargetPort:               9091/TCP
NodePort:                 authelia-tcp  30513/TCP
Endpoints:                10.244.1.212:9091
Session Affinity:         None
External Traffic Policy:  Local
HealthCheck NodePort:     30333
Events:                   <none>

$ kubectl describe svc -n home motioneye
Name:              motioneye
Namespace:         home
Labels:            app=motioneye
Annotations:       <none>
Selector:          app=motioneye
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.105.38.36
IPs:               10.105.38.36
Port:              motioneye-http  8765/TCP
TargetPort:        8765/TCP
Endpoints:         10.244.4.55:8765
Port:              motioneye-http2  80/TCP
TargetPort:        8765/TCP
Endpoints:         10.244.4.55:8765
Session Affinity:  None
Events:            <none>
```

I'm not sure why or how this is happening. It's as if something is messing with MetalLB's load balancer IP settings.
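Besides MetalLB, one mechanism that may be worth ruling out (a hypothesis, not something established in this issue) is the resolver search list. Pod `/etc/resolv.conf` files normally carry `options ndots:5`, and `authelia.injester.svc.cluster.local` has only four dots, so a glibc-style resolver tries every `search` suffix before the name as typed. If one of those suffixes is a LAN domain for which the LAN DNS answers `10.7.9.10` (for example through a wildcard record), an expanded query could return the controller's address. The sketch below only lists that query order; `query_order` is a hypothetical helper, `lan.example` is a made-up placeholder for the node's own search domain, and musl (used by Alpine images such as `nginx:1.15-alpine`) differs from glibc in some details.

```python
from typing import List


def query_order(name: str, search: List[str], ndots: int = 5) -> List[str]:
    """List the fully qualified names a glibc-style stub resolver tries, in order.

    Follows resolv.conf(5): a name ending in "." is absolute and never expanded;
    a name with at least `ndots` dots is tried as typed first, otherwise every
    search suffix is tried first and the name as typed comes last.
    """
    if name.endswith("."):
        return [name]
    expanded = [f"{name}.{suffix.rstrip('.')}." for suffix in search]
    as_typed = f"{name}."
    if name.count(".") >= ndots:
        return [as_typed] + expanded
    return expanded + [as_typed]


if __name__ == "__main__":
    # Typical search list for a pod in the "injester" namespace; "lan.example"
    # is a hypothetical stand-in for a search domain inherited from the node.
    search = ["injester.svc.cluster.local", "svc.cluster.local", "cluster.local", "lan.example"]
    for candidate in query_order("authelia.injester.svc.cluster.local", search):
        print(candidate)
```

Comparing the real `search` line in the affected pod's `/etc/resolv.conf` with what the LAN DNS returns for the expanded names would confirm or rule this out; as the first branch shows, a name written with a trailing dot skips the search list entirely.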

The Kubernetes project currently lacks enough contributors to adequately respond to all issues and PRs.

This bot triages issues and PRs according to the following rules:

- After 90d of inactivity, `lifecycle/stale` is applied
- After 30d of inactivity since `lifecycle/stale` was applied, `lifecycle/rotten` is applied
- After 30d of inactivity since `lifecycle/rotten` was applied, the issue is closed

You can:

- Mark this issue or PR as fresh with `/remove-lifecycle stale`
- Mark this issue or PR as rotten with `/lifecycle rotten`
- Close this issue or PR with `/close`
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

The Kubernetes project currently lacks enough active contributors to adequately respond to all issues and PRs.

This bot triages issues and PRs according to the following rules:

- After 90d of inactivity, `lifecycle/stale` is applied
- After 30d of inactivity since `lifecycle/stale` was applied, `lifecycle/rotten` is applied
- After 30d of inactivity since `lifecycle/rotten` was applied, the issue is closed

You can:

- Mark this issue or PR as fresh with `/remove-lifecycle rotten`
- Close this issue or PR with `/close`
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle rotten

The Kubernetes project currently lacks enough active contributors to adequately respond to all issues and PRs.

This bot triages issues according to the following rules:

- After 90d of inactivity, `lifecycle/stale` is applied
- After 30d of inactivity since `lifecycle/stale` was applied, `lifecycle/rotten` is applied
- After 30d of inactivity since `lifecycle/rotten` was applied, the issue is closed

You can:

- Reopen this issue with `/reopen`
- Mark this issue as fresh with `/remove-lifecycle rotten`
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/close not-planned

@k8s-triage-robot: Closing this issue, marking it as "Not Planned".
