Hetzner Failed to get node infos for groups

Which component are you using?: cluster-autoscaler v1.25.0 with the Hetzner Cloud provider, on Rancher with K3s 1.24

What version of the component are you using?: 1.25.0

What did you expect to happen?: Not sure; that it doesn't throw errors on initial bootup? I'm not sure what the issue is there.

root@Rancher1:~# kubectl logs cluster-autoscaler-6bbc7d777-65pmm --namespace=kube-system
I0911 19:39:20.180846 1 leaderelection.go:248] attempting to acquire leader lease kube-system/cluster-autoscaler...
I0911 19:39:37.741205 1 leaderelection.go:258] successfully acquired lease kube-system/cluster-autoscaler
W0911 19:39:39.720046 1 hetzner_servers_cache.go:94] Fetching servers from Hetzner API
I0911 19:39:39.912952 1 node_instances_cache.go:156] Start refreshing cloud provider node instances cache
I0911 19:39:39.913027 1 node_instances_cache.go:168] Refresh cloud provider node instances cache finished, refresh took 28.352µs
I0911 19:39:49.914012 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:39:49.914795 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:39:49.916052 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://worker-1 exists error: failed to get servers for node worker-1 error: server not found
I0911 19:39:59.917076 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:39:59.917134 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:39:59.917482 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://worker-1 exists error: failed to get servers for node worker-1 error: server not found
I0911 19:40:09.918765 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:40:09.918817 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:40:09.919155 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://rancher2 exists error: failed to get servers for node rancher2 error: server not found
I0911 19:40:19.919815 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:40:19.919862 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:40:19.920137 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://rancher3 exists error: failed to get servers for node rancher3 error: server not found
I0911 19:40:29.921380 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:40:29.921413 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:40:29.921692 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://worker-1 exists error: failed to get servers for node worker-1 error: server not found
W0911 19:40:39.922774 1 hetzner_servers_cache.go:94] Fetching servers from Hetzner API
I0911 19:40:40.191242 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:40:40.191356 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:40:40.191946 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://rancher3 exists error: failed to get servers for node rancher3 error: server not found
I0911 19:40:50.192858 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:40:50.193165 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:40:50.193636 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://worker-1 exists error: failed to get servers for node worker-1 error: server not found
I0911 19:41:00.194803 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:41:00.194886 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:41:00.195563 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://worker-1 exists error: failed to get servers for node worker-1 error: server not found
I0911 19:41:10.196672 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:41:10.196707 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:41:10.197092 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://rancher3 exists error: failed to get servers for node rancher3 error: server not found
I0911 19:41:20.197971 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
I0911 19:41:20.198032 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
E0911 19:41:20.198962 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://worker-1 exists error: failed to get servers for node worker-1 error: server not found
I0911 19:41:30.199314 1 hetzner_node_group.go:437] Set node group pool1 size from 0 to 0, expected delta 0
I0911 19:41:30.199365 1 hetzner_node_group.go:437] Set node group draining-node-pool size from 0 to 0, expected delta 0
E0911 19:41:30.199588 1 static_autoscaler.go:298] Failed to get node infos for groups: failed to check if server k3s://worker-1 exists error: failed to get servers for node worker-1 error: server not found

How to reproduce it (as minimally and precisely as possible): Using 1.25.0

When I use 1.23.1, I don't see the errors, but it also doesn't scale.

Anything else we need to know?:

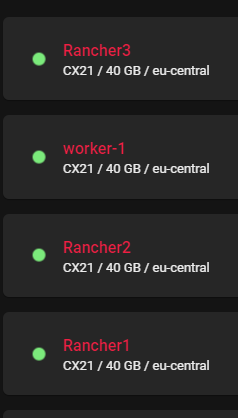

I have the following servers running: Rancher1, Rancher2, and Rancher3 as control planes and worker1 as a worker node, all inside the cluster. I tried to deploy the autoscaler with the example YAML in the Hetzner folder.

My nodes in the cluster:

In Hetzner:

Same here.

I'm facing the same problem.

After reading through the Makefile, I saw that BUILD_TAGS is used for the PROVIDER variable, so I tried this:

BUILD_TAGS=hetzner make build-in-docker

docker build -t ghcr.io/tomasnorre/hetzner-cluster-autoscaler:0.0.1 -f Dockerfile.amd64 .

But it didn't change the situation.

Update:

I have also tried:

BUILD_TAGS=hetzner REGISTRY=ghcr.io/tomasnorre make make-image

BUILD_TAGS=hetzner REGISTRY=ghcr.io/tomasnorre make push-image

But still no difference.

Hi, I ran into the same issue and fixed it by using the 1.24 autoscaler. I'm also running k3s 1.24, and once the autoscaler version matched the cluster version, it worked. You just have to check out the cluster-autoscaler-release-1.24 version of autoscaler, then build and use that image.
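The version matching described in this comment can be checked with a small script. This is only a sketch: the helper names and the sample version string `v1.24.3+k3s1` are made up for illustration, and it assumes that "matching" means the same major.minor pair.

```python
def minor_version(version):
    """Extract (major, minor) from strings such as 'v1.24.3+k3s1' or '1.25.0'."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def versions_match(kubernetes_version, autoscaler_version):
    """True when both versions share the same major.minor pair."""
    return minor_version(kubernetes_version) == minor_version(autoscaler_version)


# Hypothetical k3s 1.24 version string compared against two autoscaler releases.
print(versions_match("v1.24.3+k3s1", "1.25.0"))  # False
print(versions_match("v1.24.3+k3s1", "1.24.0"))  # True
```

A mismatch here only flags the setup this commenter moved away from; it does not by itself explain the `server not found` error.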

The Kubernetes project currently lacks enough contributors to adequately respond to all issues.

This bot triages un-triaged issues according to the following rules:

- After 90d of inactivity, `lifecycle/stale` is applied
- After 30d of inactivity since `lifecycle/stale` was applied, `lifecycle/rotten` is applied
- After 30d of inactivity since `lifecycle/rotten` was applied, the issue is closed

You can:

- Mark this issue as fresh with `/remove-lifecycle stale`
- Close this issue with `/close`
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

Same here. Cluster version 1.26.4, Rancher K3s version 1.26.7.

`E0821 14:48:10.005095 1 static_autoscaler.go:337] Failed to get node infos for groups: failed to check if server hcloud://xxxxxx exists error: failed to get servers for node integrator-production-worker-jobs-0 error: server not found`

/area provider/hetzner

It looks like you are using a different cloud-controller-manager than hcloud-cloud-controller-manager (HCCM). The Hetzner Cluster Autoscaler Provider expects that the `Node.ProviderID` matches the HCCM format (`hcloud://$SERVER_ID`); if it does not, the provider cannot properly resolve the Nodes.
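To compare your own nodes against that format, a check along these lines may help. It is a minimal sketch, not the provider's actual lookup code: the function names are hypothetical, and it assumes the server ID after `hcloud://` is purely numeric. The `k3s://` values are the provider IDs from the log in the original report; the last entry is a made-up example of a well-formed ID.

```python
import re

# Assumption: HCCM sets spec.providerID to "hcloud://<numeric server ID>".
HCLOUD_PROVIDER_ID = re.compile(r"hcloud://(\d+)")


def hcloud_server_id(provider_id):
    """Return the numeric server ID, or None if the ID is not in hcloud:// form."""
    match = HCLOUD_PROVIDER_ID.fullmatch(provider_id)
    return int(match.group(1)) if match else None


def unresolvable_nodes(nodes):
    """Given {node name: providerID}, list nodes whose ID is not hcloud://<id>."""
    return sorted(name for name, pid in nodes.items() if hcloud_server_id(pid) is None)


nodes = {
    "worker-1": "k3s://worker-1",
    "rancher2": "k3s://rancher2",
    "rancher3": "k3s://rancher3",
    "example-node": "hcloud://12345678",  # hypothetical well-formed ID
}
print(unresolvable_nodes(nodes))  # ['rancher2', 'rancher3', 'worker-1']
```

The real values for a cluster can be listed with `kubectl get nodes -o custom-columns=NAME:.metadata.name,PROVIDER_ID:.spec.providerID` and fed into the dictionary above.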

/lifecycle stale

/lifecycle rotten

Running into this with 1.28 k3s and the chart for cluster-autoscaler-9.36.0

/remove-lifecycle rotten

Hey @Mrono,

have you checked my comment above and compared against your nodes?

It looks like you are using a different cloud-controller-manager than hcloud-cloud-controller-manager (HCCM). The Hetzner Cluster Autoscaler Provider expects that the `Node.ProviderID` matches the HCCM format (`hcloud://$SERVER_ID`); if it does not, the provider cannot properly resolve the Nodes.

The Kubernetes project currently lacks enough active contributors to adequately respond to all issues and PRs.

This bot triages issues according to the following rules:

- After 90d of inactivity, `lifecycle/stale` is applied
- After 30d of inactivity since `lifecycle/stale` was applied, `lifecycle/rotten` is applied
- After 30d of inactivity since `lifecycle/rotten` was applied, the issue is closed

You can:

- Reopen this issue with `/reopen`
- Mark this issue as fresh with `/remove-lifecycle rotten`
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/close not-planned

@k8s-triage-robot: Closing this issue, marking it as "Not Planned".

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.

/reopen

@Bryce-Soghigian: Reopened this issue.

/remove-lifecycle rotten

@Bryce-Soghigian Not sure if you reopened because you experience the issue or for issue maintenance reasons.

I do believe that this can be closed. I have provided an explanation for the issue the user was facing 5 months ago. I have kept it open to allow the affected users to respond whether it fixed their problem, but so far no one has confirmed or denied that the suggested fix works.

Not sure what to do besides letting it be closed through the lifecycle or closing it myself.

/close

@Bryce-Soghigian: Closing this issue.
