metrics-server

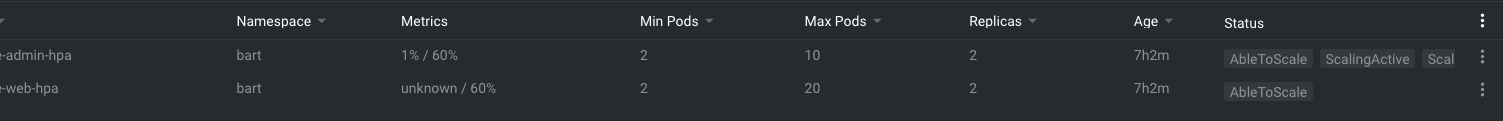

Metrics-server doesn't share CPU metrics for second HPA

Hi guys, here is my case. I have:

- two different deployments (two pods each, with two containers inside each pod).

- two HPAs, one for each deployment.

- one metrics-server pod in the same namespace as the deployments.

The problem is that the metrics server doesn't send the CPU metric to one of the HPAs. I say CPU because, during my tests, I set the autoscaler to check both CPU and memory, and the metrics server did share the memory values with that HPA. Below are my deployments, debug output, and versions.

kubectl version

sk@mac talkable % kubectl version --output=yaml

clientVersion:

buildDate: "2022-06-15T14:14:10Z"

compiler: gc

gitCommit: f66044f4361b9f1f96f0053dd46cb7dce5e990a8

gitTreeState: clean

gitVersion: v1.24.2

goVersion: go1.18.3

major: "1"

minor: "24"

platform: darwin/arm64

kustomizeVersion: v4.5.4

serverVersion:

buildDate: "2022-07-06T18:06:50Z"

compiler: gc

gitCommit: ac73613dfd25370c18cbbbc6bfc65449397b35c7

gitTreeState: clean

gitVersion: v1.21.14-eks-18ef993

goVersion: go1.16.15

major: "1"

minor: 21+

platform: linux/amd64

my metrics-server deployment

apiVersion: apps/v1

kind: Deployment

metadata:

name: metrics-server

namespace: kube-system

uid: f603713a-e6c4-45b5-a4f4-2bfa3a228150

resourceVersion: '66802670'

generation: 3

creationTimestamp: '2022-09-08T13:49:19Z'

labels:

k8s-app: metrics-server

annotations:

deployment.kubernetes.io/revision: '1'

kubectl.kubernetes.io/last-applied-configuration: >

{"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"k8s-app":"metrics-server"},"name":"metrics-server","namespace":"kube-system"},"spec":{"selector":{"matchLabels":{"k8s-app":"metrics-server"}},"strategy":{"rollingUpdate":{"maxUnavailable":0}},"template":{"metadata":{"labels":{"k8s-app":"metrics-server"}},"spec":{"containers":[{"args":["--cert-dir=/tmp","--secure-port=4443","--kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP","--kubelet-use-node-status-port","--metric-resolution=15s","--kubelet-insecure-tls=true"],"image":"k8s.gcr.io/metrics-server/metrics-server:v0.6.1","imagePullPolicy":"IfNotPresent","livenessProbe":{"failureThreshold":3,"httpGet":{"path":"/livez","port":"https","scheme":"HTTPS"},"periodSeconds":10},"name":"metrics-server","ports":[{"containerPort":4443,"name":"https","protocol":"TCP"}],"readinessProbe":{"failureThreshold":3,"httpGet":{"path":"/readyz","port":"https","scheme":"HTTPS"},"initialDelaySeconds":20,"periodSeconds":10},"resources":{"requests":{"cpu":"200m","memory":"300Mi"}},"securityContext":{"allowPrivilegeEscalation":false,"readOnlyRootFilesystem":true,"runAsNonRoot":true,"runAsUser":1000},"volumeMounts":[{"mountPath":"/tmp","name":"tmp-dir"}]}],"hostNetwork":true,"nodeSelector":{"kubernetes.io/os":"linux"},"priorityClassName":"system-cluster-critical","serviceAccountName":"metrics-server","volumes":[{"emptyDir":{},"name":"tmp-dir"}]}}}}

managedFields:

- manager: kubectl-client-side-apply

operation: Update

apiVersion: apps/v1

time: '2022-09-08T13:49:19Z'

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.: {}

f:kubectl.kubernetes.io/last-applied-configuration: {}

f:labels:

.: {}

f:k8s-app: {}

f:spec:

f:progressDeadlineSeconds: {}

f:replicas: {}

f:revisionHistoryLimit: {}

f:selector: {}

f:strategy:

f:rollingUpdate:

.: {}

f:maxSurge: {}

f:maxUnavailable: {}

f:type: {}

f:template:

f:metadata:

f:labels:

.: {}

f:k8s-app: {}

f:spec:

f:containers:

k:{"name":"metrics-server"}:

.: {}

f:args: {}

f:image: {}

f:imagePullPolicy: {}

f:livenessProbe:

.: {}

f:failureThreshold: {}

f:httpGet:

.: {}

f:path: {}

f:port: {}

f:scheme: {}

f:periodSeconds: {}

f:successThreshold: {}

f:timeoutSeconds: {}

f:name: {}

f:ports:

.: {}

k:{"containerPort":4443,"protocol":"TCP"}:

.: {}

f:containerPort: {}

f:hostPort: {}

f:name: {}

f:protocol: {}

f:readinessProbe:

.: {}

f:failureThreshold: {}

f:httpGet:

.: {}

f:path: {}

f:port: {}

f:scheme: {}

f:initialDelaySeconds: {}

f:periodSeconds: {}

f:successThreshold: {}

f:timeoutSeconds: {}

f:resources:

.: {}

f:requests:

.: {}

f:cpu: {}

f:memory: {}

f:securityContext:

.: {}

f:allowPrivilegeEscalation: {}

f:readOnlyRootFilesystem: {}

f:runAsNonRoot: {}

f:runAsUser: {}

f:terminationMessagePath: {}

f:terminationMessagePolicy: {}

f:volumeMounts:

.: {}

k:{"mountPath":"/tmp"}:

.: {}

f:mountPath: {}

f:name: {}

f:dnsPolicy: {}

f:hostNetwork: {}

f:nodeSelector:

.: {}

f:kubernetes.io/os: {}

f:priorityClassName: {}

f:restartPolicy: {}

f:schedulerName: {}

f:securityContext: {}

f:serviceAccount: {}

f:serviceAccountName: {}

f:terminationGracePeriodSeconds: {}

f:volumes:

.: {}

k:{"name":"tmp-dir"}:

.: {}

f:emptyDir: {}

f:name: {}

- manager: kube-controller-manager

operation: Update

apiVersion: apps/v1

time: '2022-09-14T10:19:49Z'

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

f:deployment.kubernetes.io/revision: {}

f:status:

f:availableReplicas: {}

f:conditions:

.: {}

k:{"type":"Available"}:

.: {}

f:lastTransitionTime: {}

f:lastUpdateTime: {}

f:message: {}

f:reason: {}

f:status: {}

f:type: {}

k:{"type":"Progressing"}:

.: {}

f:lastTransitionTime: {}

f:lastUpdateTime: {}

f:message: {}

f:reason: {}

f:status: {}

f:type: {}

f:observedGeneration: {}

f:readyReplicas: {}

f:replicas: {}

f:updatedReplicas: {}

selfLink: /apis/apps/v1/namespaces/kube-system/deployments/metrics-server

status:

observedGeneration: 3

replicas: 5

updatedReplicas: 5

readyReplicas: 5

availableReplicas: 5

conditions:

- type: Progressing

status: 'True'

lastUpdateTime: '2022-09-08T13:49:49Z'

lastTransitionTime: '2022-09-08T13:49:19Z'

reason: NewReplicaSetAvailable

message: ReplicaSet "metrics-server-d4db696ff" has successfully progressed.

- type: Available

status: 'True'

lastUpdateTime: '2022-09-14T10:19:49Z'

lastTransitionTime: '2022-09-14T10:19:49Z'

reason: MinimumReplicasAvailable

message: Deployment has minimum availability.

spec:

replicas: 5

selector:

matchLabels:

k8s-app: metrics-server

template:

metadata:

creationTimestamp: null

labels:

k8s-app: metrics-server

spec:

volumes:

- name: tmp-dir

emptyDir: {}

containers:

- name: metrics-server

image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1

args:

- '--cert-dir=/tmp'

- '--secure-port=4443'

- >-

--kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP

- '--kubelet-use-node-status-port'

- '--metric-resolution=15s'

- '--kubelet-insecure-tls=true'

ports:

- name: https

hostPort: 4443

containerPort: 4443

protocol: TCP

resources:

requests:

cpu: 200m

memory: 300Mi

volumeMounts:

- name: tmp-dir

mountPath: /tmp

livenessProbe:

httpGet:

path: /livez

port: https

scheme: HTTPS

timeoutSeconds: 1

periodSeconds: 10

successThreshold: 1

failureThreshold: 3

readinessProbe:

httpGet:

path: /readyz

port: https

scheme: HTTPS

initialDelaySeconds: 20

timeoutSeconds: 1

periodSeconds: 10

successThreshold: 1

failureThreshold: 3

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

imagePullPolicy: IfNotPresent

securityContext:

runAsUser: 1000

runAsNonRoot: true

readOnlyRootFilesystem: true

allowPrivilegeEscalation: false

restartPolicy: Always

terminationGracePeriodSeconds: 30

dnsPolicy: ClusterFirst

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: metrics-server

serviceAccount: metrics-server

hostNetwork: true

securityContext: {}

schedulerName: default-scheduler

priorityClassName: system-cluster-critical

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 0

maxSurge: 25%

revisionHistoryLimit: 10

progressDeadlineSeconds: 600

metrics-server pods log

http2: server connection error from 10.100.100.79:35886: connection error: PROTOCOL_ERROR

http2: server connection error from 10.100.101.182:59154: connection error: PROTOCOL_ERROR

http2: server connection error from 10.100.101.182:59154: connection error: PROTOCOL_ERROR

http2: server connection error from 10.100.100.79:46480: connection error: PROTOCOL_ERROR

http2: server connection error from 10.100.100.79:46480: connection error: PROTOCOL_ERROR

E0914 04:31:20.140554 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.20:10250/metrics/resource\": remote error: tls: internal error" node="ip-10-100-101-20.ec2.internal"

E0914 04:31:35.114571 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.165:10250/metrics/resource\": dial tcp 10.100.101.165:10250: connect: connection refused" node="ip-10-100-101-165.ec2.internal"

E0914 04:32:03.603806 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.165:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-165.ec2.internal"

E0914 04:32:18.604001 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.165:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-165.ec2.internal"

E0914 04:32:33.604932 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.165:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-165.ec2.internal"

E0914 04:36:20.109588 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.234:10250/metrics/resource\": remote error: tls: internal error" node="ip-10-100-101-234.ec2.internal"

http2: server connection error from 10.100.101.182:35710: connection error: PROTOCOL_ERROR

E0914 04:51:48.603743 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.234:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-234.ec2.internal"

E0914 04:52:03.603706 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.234:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-234.ec2.internal"

E0914 04:52:18.603738 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.234:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-234.ec2.internal"

E0914 04:52:33.602756 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.234:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-234.ec2.internal"

E0914 04:52:48.602962 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.234:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-234.ec2.internal"

http2: server connection error from 10.100.100.79:40706: connection error: PROTOCOL_ERROR

http2: server connection error from 10.100.101.182:33332: connection error: PROTOCOL_ERROR

E0914 09:58:35.117983 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.7:10250/metrics/resource\": remote error: tls: internal error" node="ip-10-100-101-7.ec2.internal"

E0914 09:59:05.111799 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.100.56:10250/metrics/resource\": dial tcp 10.100.100.56:10250: connect: connection refused" node="ip-10-100-100-56.ec2.internal"

E0914 09:59:33.604484 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.100.56:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-100-56.ec2.internal"

E0914 09:59:48.603800 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.100.56:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-100-56.ec2.internal"

my deployment test777-admin-hpa

apiVersion: autoscaling/v2beta1

kind: HorizontalPodAutoscaler

metadata:

name: test777-admin-hpa

namespace: bart

uid: 9ad88361-29f6-47cb-bd5f-951d82382616

resourceVersion: '66845935'

creationTimestamp: '2022-09-14T06:47:32Z'

labels:

app.kubernetes.io/managed-by: Helm

annotations:

meta.helm.sh/release-name: test777-bart

meta.helm.sh/release-namespace: bart

managedFields:

- manager: helm

operation: Update

apiVersion: autoscaling/v2beta2

time: '2022-09-14T06:47:32Z'

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.: {}

f:meta.helm.sh/release-name: {}

f:meta.helm.sh/release-namespace: {}

f:labels:

.: {}

f:app.kubernetes.io/managed-by: {}

f:spec:

f:maxReplicas: {}

f:metrics: {}

f:minReplicas: {}

f:scaleTargetRef:

f:apiVersion: {}

f:kind: {}

f:name: {}

- manager: kube-controller-manager

operation: Update

apiVersion: autoscaling/v1

time: '2022-09-14T06:49:03Z'

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

f:autoscaling.alpha.kubernetes.io/conditions: {}

f:autoscaling.alpha.kubernetes.io/current-metrics: {}

f:status:

f:currentCPUUtilizationPercentage: {}

f:currentReplicas: {}

f:desiredReplicas: {}

selfLink: >-

/apis/autoscaling/v2beta1/namespaces/bart/horizontalpodautoscalers/test777-admin-hpa

status:

currentReplicas: 2

desiredReplicas: 2

currentMetrics:

- type: Resource

resource:

name: cpu

currentAverageUtilization: 1

currentAverageValue: 4m

conditions:

- type: AbleToScale

status: 'True'

lastTransitionTime: '2022-09-14T06:47:47Z'

reason: ReadyForNewScale

message: recommended size matches current size

- type: ScalingActive

status: 'True'

lastTransitionTime: '2022-09-14T07:52:13Z'

reason: ValidMetricFound

message: >-

the HPA was able to successfully calculate a replica count from cpu

resource utilization (percentage of request)

- type: ScalingLimited

status: 'True'

lastTransitionTime: '2022-09-14T10:24:33Z'

reason: TooFewReplicas

message: the desired replica count is less than the minimum replica count

spec:

scaleTargetRef:

kind: Deployment

name: test777-admin

apiVersion: apps/v1

minReplicas: 2

maxReplicas: 10

metrics:

- type: Resource

resource:

name: cpu

targetAverageUtilization: 60

test777-admin-hpa

sk@mac ~ % kubectl describe hpa test777-admin-hpa -n bart

Name: test777-admin-hpa

Namespace: bart

Labels: app.kubernetes.io/managed-by=Helm

Annotations: meta.helm.sh/release-name: test777-bart

meta.helm.sh/release-namespace: bart

CreationTimestamp: Wed, 14 Sep 2022 09:47:32 +0300

Reference: Deployment/test777-admin

Metrics: ( current / target )

resource cpu on pods (as a percentage of request): 1% (4m) / 60%

Min replicas: 2

Max replicas: 10

Deployment pods: 2 current / 2 desired

Conditions:

Type Status Reason Message

---- ------ ------ -------

AbleToScale True ReadyForNewScale recommended size matches current size

ScalingActive True ValidMetricFound the HPA was able to successfully calculate a replica count from cpu resource utilization (percentage of request)

ScalingLimited True TooFewReplicas the desired replica count is less than the minimum replica count

my deployment test777-web-hpa

apiVersion: autoscaling/v2beta1

kind: HorizontalPodAutoscaler

metadata:

name: test777-web-hpa

namespace: bart

uid: 92be51b4-d9dd-4f64-8f45-355612a98717

resourceVersion: '66802167'

creationTimestamp: '2022-09-14T06:47:32Z'

labels:

app.kubernetes.io/managed-by: Helm

annotations:

meta.helm.sh/release-name: test777-bart

meta.helm.sh/release-namespace: bart

managedFields:

- manager: helm

operation: Update

apiVersion: autoscaling/v2beta2

time: '2022-09-14T06:47:32Z'

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.: {}

f:meta.helm.sh/release-name: {}

f:meta.helm.sh/release-namespace: {}

f:labels:

.: {}

f:app.kubernetes.io/managed-by: {}

f:spec:

f:maxReplicas: {}

f:metrics: {}

f:minReplicas: {}

f:scaleTargetRef:

f:apiVersion: {}

f:kind: {}

f:name: {}

- manager: kube-controller-manager

operation: Update

apiVersion: autoscaling/v1

time: '2022-09-14T06:58:49Z'

fieldsType: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

f:autoscaling.alpha.kubernetes.io/conditions: {}

f:autoscaling.alpha.kubernetes.io/current-metrics: {}

f:status:

f:currentReplicas: {}

f:desiredReplicas: {}

selfLink: >-

/apis/autoscaling/v2beta1/namespaces/bart/horizontalpodautoscalers/test777-web-hpa

status:

currentReplicas: 2

desiredReplicas: 2

currentMetrics:

- type: Resource

resource:

name: memory

currentAverageValue: '1275105280'

- type: ''

conditions:

- type: AbleToScale

status: 'True'

lastTransitionTime: '2022-09-14T06:47:47Z'

reason: SucceededGetScale

message: the HPA controller was able to get the target's current scale

- type: ScalingActive

status: 'False'

lastTransitionTime: '2022-09-14T07:51:28Z'

reason: FailedGetResourceMetric

message: >-

the HPA was unable to compute the replica count: failed to get cpu

utilization: missing request for cpu

- type: ScalingLimited

status: 'False'

lastTransitionTime: '2022-09-14T06:58:49Z'

reason: DesiredWithinRange

message: the desired count is within the acceptable range

spec:

scaleTargetRef:

kind: Deployment

name: test777-web

apiVersion: apps/v1

minReplicas: 2

maxReplicas: 20

metrics:

- type: Resource

resource:

name: cpu

targetAverageUtilization: 60

test777-web-hpa (the HPA with

sk@mac ~ % kubectl describe hpa test777-web-hpa -n bart

Name: test777-web-hpa

Namespace: bart

Labels: app.kubernetes.io/managed-by=Helm

Annotations: meta.helm.sh/release-name: test777-bart

meta.helm.sh/release-namespace: bart

CreationTimestamp: Wed, 14 Sep 2022 09:47:32 +0300

Reference: Deployment/test777-web

Metrics: ( current / target )

resource cpu on pods (as a percentage of request): <unknown> / 60%

Min replicas: 2

Max replicas: 20

Deployment pods: 2 current / 2 desired

Conditions:

Type Status Reason Message

---- ------ ------ -------

AbleToScale True SucceededGetScale the HPA controller was able to get the target's current scale

ScalingActive False FailedGetResourceMetric the HPA was unable to compute the replica count: failed to get cpu utilization: missing request for cpu

ScalingLimited False DesiredWithinRange the desired count is within the acceptable range

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedGetResourceMetric 4m13s (x1530 over 6h28m) horizontal-pod-autoscaler failed to get cpu utilization: missing request for CPU
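For context on the `missing request for cpu` message above: the HPA reports CPU as a percentage of the requested CPU, so it needs a CPU request for every container of every pod it selects. The following is a minimal sketch of that arithmetic, not the controller's actual code. The function name, pod names, and the request and usage figures are all hypothetical; they are chosen only so that the first result lines up with the `1% (4m)` reported for test777-admin-hpa.

```python
def cpu_utilization_percent(pods):
    """Average CPU utilization across pods, as a percentage of requested CPU.

    Simplified model: sum usage and requests (in millicores) over all
    containers of all selected pods, then divide.
    """
    total_usage_m = 0
    total_request_m = 0
    for pod in pods:
        for container in pod["containers"]:
            request_m = container.get("request_m")
            if request_m is None:
                # Without a request there is nothing to take a percentage of.
                raise ValueError(
                    f"missing request for cpu in container "
                    f"{container['name']} of pod {pod['name']}"
                )
            total_request_m += request_m
            total_usage_m += container["usage_m"]
    if total_request_m == 0:
        raise ValueError("no CPU requests found")
    return total_usage_m * 100 // total_request_m


# Hypothetical numbers: two pods, two containers each, 4m average usage per pod.
admin_pods = [
    {"name": "admin-a", "containers": [
        {"name": "app", "request_m": 250, "usage_m": 3},
        {"name": "nginx", "request_m": 50, "usage_m": 1},
    ]},
    {"name": "admin-b", "containers": [
        {"name": "app", "request_m": 250, "usage_m": 3},
        {"name": "nginx", "request_m": 50, "usage_m": 1},
    ]},
]
print(cpu_utilization_percent(admin_pods))  # 1

# Same shape, plus one selected pod whose container has no CPU request.
web_pods = admin_pods + [
    {"name": "extra-pod", "containers": [{"name": "task", "usage_m": 5}]},
]
try:
    cpu_utilization_percent(web_pods)
except ValueError as err:
    print(err)
```

In this model a single selected pod without a CPU request is enough to make the whole calculation fail, even when every other pod is fine, so it is worth checking every pod that the HPA's target selector matches.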

and debug:

sk@mac ~ % kubectl top nodes

W0914 16:27:41.333865 46184 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

ip-10-100-100-145.ec2.internal 91m 1% 2426Mi 16%

ip-10-100-100-172.ec2.internal 191m 2% 10825Mi 74%

ip-10-100-100-8.ec2.internal 254m 6% 3680Mi 55%

ip-10-100-101-20.ec2.internal 93m 1% 4004Mi 27%

ip-10-100-101-7.ec2.internal 103m 1% 5319Mi 36%

sk@mac test777 % NODE_NAME=ip-10-100-101-7.ec2.internal

kubectl get --raw /api/v1/nodes/$NODE_NAME/proxy/metrics/resource

# HELP container_cpu_usage_seconds_total [ALPHA] Cumulative cpu time consumed by the container in core-seconds

# TYPE container_cpu_usage_seconds_total counter

container_cpu_usage_seconds_total{container="agent",namespace="newrelic",pod="newrelic-nrk8s-kubelet-7sj7p"} 38.90565046 1663163146734

container_cpu_usage_seconds_total{container="aws-node",namespace="kube-system",pod="aws-node-h9glf"} 40.953685422 1663163145869

container_cpu_usage_seconds_total{container="aws-node-termination-handler",namespace="kube-system",pod="aws-node-termination-handler-bk9tf"} 5.409004909 1663163139909

container_cpu_usage_seconds_total{container="kube-proxy",namespace="kube-system",pod="kube-proxy-x6ls4"} 4.57357219 1663163140416

container_cpu_usage_seconds_total{container="kubelet",namespace="newrelic",pod="newrelic-nrk8s-kubelet-7sj7p"} 49.65748969 1663163147833

container_cpu_usage_seconds_total{container="metrics-server",namespace="kube-system",pod="metrics-server-5d76bc7fb9-4fq48"} 0.848519497 1663163137506

container_cpu_usage_seconds_total{container="newrelic-logging",namespace="newrelic",pod="newrelic-newrelic-logging-6sp87"} 78.950603304 1663163147499

container_cpu_usage_seconds_total{container="test777",namespace="voidnew",pod="test777-web-6d46d657b8-wnq56"} 213.64960915 1663163147938

container_cpu_usage_seconds_total{container="test777-admin",namespace="voidnew",pod="test777-admin-5cb67bf7c9-wlk2x"} 139.70000229 1663163150146

container_cpu_usage_seconds_total{container="test777-nginx",namespace="voidnew",pod="test777-admin-5cb67bf7c9-wlk2x"} 1.098650398 1663163142615

container_cpu_usage_seconds_total{container="test777-nginx",namespace="voidnew",pod="test777-web-6d46d657b8-wnq56"} 3.834383147 1663163136242

container_cpu_usage_seconds_total{container="test777-sidekiq-api-stats",namespace="voidnew",pod="test777-sidekiq-api-stats-6744f4d475-fqnzd"} 20.872339072 1663163135417

container_cpu_usage_seconds_total{container="test777-sidekiq-default",namespace="voidnew",pod="test777-sidekiq-default-65cdf6d68c-hpptv"} 107.027204477 1663163144046

# HELP container_memory_working_set_bytes [ALPHA] Current working set of the container in bytes

# TYPE container_memory_working_set_bytes gauge

container_memory_working_set_bytes{container="agent",namespace="newrelic",pod="newrelic-nrk8s-kubelet-7sj7p"} 2.279424e+07 1663163146734

container_memory_working_set_bytes{container="aws-node",namespace="kube-system",pod="aws-node-h9glf"} 4.93568e+07 1663163145869

container_memory_working_set_bytes{container="aws-node-termination-handler",namespace="kube-system",pod="aws-node-termination-handler-bk9tf"} 1.3234176e+07 1663163139909

container_memory_working_set_bytes{container="kube-proxy",namespace="kube-system",pod="kube-proxy-x6ls4"} 2.1225472e+07 1663163140416

container_memory_working_set_bytes{container="kubelet",namespace="newrelic",pod="newrelic-nrk8s-kubelet-7sj7p"} 1.2808192e+07 1663163147833

container_memory_working_set_bytes{container="metrics-server",namespace="kube-system",pod="metrics-server-5d76bc7fb9-4fq48"} 1.8219008e+07 1663163137506

container_memory_working_set_bytes{container="newrelic-logging",namespace="newrelic",pod="newrelic-newrelic-logging-6sp87"} 2.6406912e+07 1663163147499

container_memory_working_set_bytes{container="test777",namespace="voidnew",pod="test777-web-6d46d657b8-wnq56"} 2.015215616e+09 1663163147938

container_memory_working_set_bytes{container="test777-admin",namespace="voidnew",pod="test777-admin-5cb67bf7c9-wlk2x"} 2.112708608e+09 1663163150146

container_memory_working_set_bytes{container="test777-nginx",namespace="voidnew",pod="test777-admin-5cb67bf7c9-wlk2x"} 3.989504e+06 1663163142615

container_memory_working_set_bytes{container="test777-nginx",namespace="voidnew",pod="test777-web-6d46d657b8-wnq56"} 3.8912e+06 1663163136242

container_memory_working_set_bytes{container="test777-sidekiq-api-stats",namespace="voidnew",pod="test777-sidekiq-api-stats-6744f4d475-fqnzd"} 3.42626304e+08 1663163135417

container_memory_working_set_bytes{container="test777-sidekiq-default",namespace="voidnew",pod="test777-sidekiq-default-65cdf6d68c-hpptv"} 3.86850816e+08 1663163144046

# HELP node_cpu_usage_seconds_total [ALPHA] Cumulative cpu time consumed by the node in core-seconds

# TYPE node_cpu_usage_seconds_total counter

node_cpu_usage_seconds_total 1927.972810339 1663163151057

# HELP node_memory_working_set_bytes [ALPHA] Current working set of the node in bytes

# TYPE node_memory_working_set_bytes gauge

node_memory_working_set_bytes 5.550145536e+09 1663163151057

# HELP pod_cpu_usage_seconds_total [ALPHA] Cumulative cpu time consumed by the pod in core-seconds

# TYPE pod_cpu_usage_seconds_total counter

pod_cpu_usage_seconds_total{namespace="kube-system",pod="aws-node-h9glf"} 41.021928699 1663163144187

pod_cpu_usage_seconds_total{namespace="kube-system",pod="aws-node-termination-handler-bk9tf"} 5.568402876 1663163151346

pod_cpu_usage_seconds_total{namespace="kube-system",pod="kube-proxy-x6ls4"} 4.582493498 1663163136940

pod_cpu_usage_seconds_total{namespace="kube-system",pod="metrics-server-5d76bc7fb9-4fq48"} 1.027940438 1663163147544

pod_cpu_usage_seconds_total{namespace="newrelic",pod="newrelic-newrelic-logging-6sp87"} 79.082432117 1663163146109

pod_cpu_usage_seconds_total{namespace="newrelic",pod="newrelic-nrk8s-kubelet-7sj7p"} 88.495799851 1663163140332

pod_cpu_usage_seconds_total{namespace="voidnew",pod="test777-admin-5cb67bf7c9-wlk2x"} 140.750414508 1663163144358

pod_cpu_usage_seconds_total{namespace="voidnew",pod="test777-sidekiq-api-stats-6744f4d475-fqnzd"} 21.028948976 1663163135699

pod_cpu_usage_seconds_total{namespace="voidnew",pod="test777-sidekiq-default-65cdf6d68c-hpptv"} 107.171260406 1663163140584

pod_cpu_usage_seconds_total{namespace="voidnew",pod="test777-web-6d46d657b8-wnq56"} 217.721807448 1663163151213

# HELP pod_memory_working_set_bytes [ALPHA] Current working set of the pod in bytes

# TYPE pod_memory_working_set_bytes gauge

pod_memory_working_set_bytes{namespace="kube-system",pod="aws-node-h9glf"} 5.548032e+07 1663163144187

pod_memory_working_set_bytes{namespace="kube-system",pod="aws-node-termination-handler-bk9tf"} 1.4176256e+07 1663163151346

pod_memory_working_set_bytes{namespace="kube-system",pod="kube-proxy-x6ls4"} 2.2171648e+07 1663163136940

pod_memory_working_set_bytes{namespace="kube-system",pod="metrics-server-5d76bc7fb9-4fq48"} 1.9361792e+07 1663163147544

pod_memory_working_set_bytes{namespace="newrelic",pod="newrelic-newrelic-logging-6sp87"} 2.7332608e+07 1663163146109

pod_memory_working_set_bytes{namespace="newrelic",pod="newrelic-nrk8s-kubelet-7sj7p"} 3.9682048e+07 1663163140332

pod_memory_working_set_bytes{namespace="voidnew",pod="test777-admin-5cb67bf7c9-wlk2x"} 2.117742592e+09 1663163144358

pod_memory_working_set_bytes{namespace="voidnew",pod="test777-sidekiq-api-stats-6744f4d475-fqnzd"} 3.43740416e+08 1663163135699

pod_memory_working_set_bytes{namespace="voidnew",pod="test777-sidekiq-default-65cdf6d68c-hpptv"} 3.86633728e+08 1663163140584

pod_memory_working_set_bytes{namespace="voidnew",pod="test777-web-6d46d657b8-wnq56"} 2.01990144e+09 1663163151213

# HELP scrape_error [ALPHA] 1 if there was an error while getting container metrics, 0 otherwise

# TYPE scrape_error gauge

scrape_error 0

sk@mac talkable % kubectl get --raw /api/v1/nodes/$NODE_NAME/proxy/stats/summary | jq '{cpu: .node.cpu, memory: .node.memory}'

{

"cpu": {

"time": "2022-09-14T13:47:01Z",

"usageNanoCores": 100871342,

"usageCoreNanoSeconds": 1948665238173

},

"memory": {

"time": "2022-09-14T13:47:01Z",

"availableBytes": 10741108736,

"usageBytes": 10238730240,

"workingSetBytes": 5561221120,

"rssBytes": 5365252096,

"pageFaults": 3195654,

"majorPageFaults": 924

}

}
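To compare the stats/summary values above with the `kubectl top nodes` output, the units need converting: nanocores to millicores and bytes to Mi. This is a small sketch using the two values from the output above; the helper names are made up for illustration.

```python
# Values copied from the stats/summary output above.
summary = {
    "cpu": {"usageNanoCores": 100871342},
    "memory": {"workingSetBytes": 5561221120},
}


def nanocores_to_millicores(nanocores):
    # 1 core = 1e9 nanocores = 1000 millicores
    return nanocores / 1_000_000


def bytes_to_mib(num_bytes):
    # 1 Mi = 1024 * 1024 bytes
    return num_bytes / (1024 * 1024)


cpu_m = nanocores_to_millicores(summary["cpu"]["usageNanoCores"])
mem_mib = bytes_to_mib(summary["memory"]["workingSetBytes"])
print(f"{cpu_m:.0f}m {mem_mib:.0f}Mi")
```

The result is in the same range as the 103m and 5319Mi that `kubectl top nodes` reported for ip-10-100-101-7.ec2.internal, which suggests the kubelet on that node is serving consistent numbers.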

[root@ip-10-100-101-7 /]# mount | grep group

tmpfs on /sys/fs/cgroup type tmpfs (ro,nosuid,nodev,noexec,mode=755)

cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,xattr,release_agent=/usr/lib/systemd/systemd-cgroups-agent,name=systemd)

cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,cpu,cpuacct)

cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,devices)

cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,blkio)

cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,memory)

cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,net_cls,net_prio)

cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,perf_event)

cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,pids)

cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,hugetlb)

cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,freezer)

cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,cpuset)

I also cannot understand why I am still getting some protocol and scraper errors in the metrics-server logs, like these:

E0914 04:32:03.603806 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.165:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-165.ec2.internal"

E0914 04:31:20.140554 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.20:10250/metrics/resource\": remote error: tls: internal error" node="ip-10-100-101-20.ec2.internal"

http2: server connection error from 10.100.100.79:46480: connection error: PROTOCOL_ERROR

From my point of view, it looks like the metrics server cannot handle sharing CPU metrics with two HPAs, or does so only partially, on and off. Is it possible to test my theory?

Appreciate any help guys.

/kind support

Hi @serg4kostiuk, thanks for the feedback.

metrics-server can only work properly if the metrics/resource endpoint of every node is reachable.

Judging from the metrics-server logs provided, however, requests to the metrics/resource endpoints of some nodes fail intermittently.

There are four main types of errors:

- PROTOCOL_ERROR: nodes 10.100.100.79 and 10.100.101.182

- remote error: tls: internal error: nodes 10.100.101.20, 10.100.101.234, and 10.100.101.7

- connect: connection refused: nodes 10.100.101.165 and 10.100.100.56

- context deadline exceeded: nodes 10.100.101.165, 10.100.101.234, and 10.100.100.56
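A grouping like the one above can be produced from the raw log with a short script. This is only a sketch: the regular expressions are written against the log lines quoted in this thread and may need adjusting for other metrics-server versions, and the function name is made up.

```python
import re
from collections import defaultdict

# Patterns based on the log lines quoted in this thread.
HTTP2_RE = re.compile(
    r"http2: server connection error from ([\d.]+):\d+: connection error: (\w+)"
)
SCRAPE_RE = re.compile(r'"Failed to scrape node" err="(.*)" node="([^"]+)"')
KNOWN_ERRORS = (
    "tls: internal error",
    "connection refused",
    "context deadline exceeded",
)


def group_errors(lines):
    """Map each error kind to the set of peers or nodes it was seen for."""
    groups = defaultdict(set)
    for line in lines:
        match = HTTP2_RE.search(line)
        if match:
            peer_ip, kind = match.groups()
            groups[kind].add(peer_ip)
            continue
        match = SCRAPE_RE.search(line)
        if match:
            err, node = match.groups()
            kind = next((k for k in KNOWN_ERRORS if k in err), "other")
            groups[kind].add(node)
    return dict(groups)


SAMPLE = r'''
http2: server connection error from 10.100.100.79:35886: connection error: PROTOCOL_ERROR
E0914 04:31:20.140554 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.20:10250/metrics/resource\": remote error: tls: internal error" node="ip-10-100-101-20.ec2.internal"
E0914 04:31:35.114571 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.165:10250/metrics/resource\": dial tcp 10.100.101.165:10250: connect: connection refused" node="ip-10-100-101-165.ec2.internal"
E0914 04:32:03.603806 1 scraper.go:140] "Failed to scrape node" err="Get \"https://10.100.101.165:10250/metrics/resource\": context deadline exceeded" node="ip-10-100-101-165.ec2.internal"
'''.strip().splitlines()

for kind, hosts in sorted(group_errors(SAMPLE).items()):
    print(kind, sorted(hosts))
```

Feeding it the full log from one metrics-server pod would show whether the failures cluster on a few nodes or are spread across all of them.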

I am not clear about the state of the cluster. Could there be a network problem?

I'm having a similar problem. When I first start the cluster the metrics-server works fine. I can do stuff like kubectl top nodes / pods and also the HPA configurations seem to work.

The problem begins when new nodes are created. The metrics-server loses track of new nodes. When I run kubectl top nodes, I get unknown values for the newly added node.

I also noticed in the logs that the metrics server tries to reach the new node on a different port: for example, the first working node is at port 4443, but the new node fails at port 10250.

Are there any steps to reproduce it? Which versions of Kubernetes and metrics-server are you using?

I've resolved the issue.

The issue was that I had set labels and annotations for the CronJob and Jobs accordingly. In the HPA, scaleTargetRef points to the scaled object, with kind Deployment. But for some reason the HPA also picks up additional resources by label (including CronJobs and Jobs), and because these resources cannot be scaled, the error occurs. So, the following changes were made for the CronJob and Job kinds:

Glad if it helps. Good luck!
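For anyone hitting the same error, the effect described in the resolution can be modelled with plain equality-based label matching. This is a sketch only: the label key and values and the Job pod name are hypothetical (the thread does not show the real labels), and it models `matchLabels`-style selection rather than the HPA controller itself.

```python
def matches(selector, labels):
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical selector of the Deployment that the HPA targets.
selector = {"app": "test777-web"}

pods = [
    # Pod created for the Deployment (name taken from the output in this thread).
    {"name": "test777-web-6d46d657b8-wnq56",
     "labels": {"app": "test777-web", "pod-template-hash": "6d46d657b8"}},
    # Hypothetical pod created by a Job that reuses the same label.
    {"name": "test777-web-job-abc12",
     "labels": {"app": "test777-web"}},
    # Pod of the other Deployment, with a different label value.
    {"name": "test777-admin-5cb67bf7c9-wlk2x",
     "labels": {"app": "test777-admin"}},
]

selected = [pod["name"] for pod in pods if matches(selector, pod["labels"])]
print(selected)
```

Both the Deployment's pod and the Job's pod are selected, because matching looks only at labels and not at what created the pod. Giving Job and CronJob pods labels that the Deployment's selector does not match keeps them out of the HPA's calculation.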