external-dns


Record Deletion in Windows DNS Server

What happened: We installed ExternalDNS to create DNS records in Windows DNS Server. The resource records (RRs) are created fine, but they are not deleted afterwards when the Kubernetes service is removed.

What you expected to happen: The RR is deleted.

How to reproduce it (as minimally and precisely as possible):

- Create a config with these parameters:

  helm install external-dns \
    --set source=ingress \
    --set txtOwnerId=k8s \
    --set domainFilters[0]=******* \
    --set provider=rfc2136 \
    --set rfc2136.host=******* \
    --set rfc2136.port=53 \
    --set rfc2136.zone=******* \
    --set rfc2136.insecure=true \
    --set rfc2136.tsigAxfr=false \
    bitnami/external-dns

- Deploy a service.
- The DNS record is created.
- Delete the service.
- The DNS record is not deleted from the database.

Anything else we need to know?:

Environment:

- External-DNS version (use external-dns --version): 1.4.0
- DNS provider: Windows DNS Server
- Others: Kubernetes version v1.20.8

Have you enabled zone transfers from any server in Windows DNS? It works fine for me.

Edit: After rebuilding the cluster, I no longer see the records getting deleted, which is weird.

Same issue here. Zone transfer is enabled, and the policy is sync.

I've got the same issue. Could this be caused by the fact that the rfc2136 provider is in alpha state? I don't have zone transfers enabled, and I don't think that should matter unless I'm missing something.

I have the same issue.

Zone transfers are enabled (they need to be, because external-dns has to list the existing records before it can decide which ones to delete).
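A simplified, hypothetical sketch of why listing the zone matters: a sync-style controller can only delete records that it can see, that carry its own owner ID, and that are no longer wanted by any Kubernetes resource. The `Record` type and `planDeletes` function are illustrative assumptions, not external-dns's actual planner; the policy names only mirror external-dns's `--policy` values (`sync`, `upsert-only`).

```go
package main

import (
	"fmt"
	"sort"
)

// Record is a hypothetical, simplified view of a DNS record as a provider
// might report it after listing the zone (for rfc2136, via a zone transfer).
type Record struct {
	Name  string
	Type  string
	Owner string // owner ID recovered from the matching TXT record; "" if none
}

// planDeletes returns the records a sync-style controller would delete.
// Illustrative sketch only, not external-dns's real planning code.
func planDeletes(current []Record, desired map[string]bool, ownerID, policy string) []string {
	if policy != "sync" {
		// Under upsert-only (and create-only), nothing is ever deleted.
		return nil
	}
	var deletes []string
	for _, r := range current {
		if r.Owner != ownerID {
			// Not ours, or ownership could not be determined: leave it alone.
			continue
		}
		if !desired[r.Name] {
			deletes = append(deletes, r.Name+" "+r.Type)
		}
	}
	sort.Strings(deletes)
	return deletes
}

func main() {
	// Records as they might look after a zone transfer; names mirror the logs in this thread.
	current := []Record{
		{Name: "cssh.SO.stater.com", Type: "A", Owner: ""},
		{Name: "example-app.SO.stater.com", Type: "A", Owner: "k8s-so"},
		{Name: "example-app-2.SO.stater.com", Type: "A", Owner: "k8s-so"},
	}
	// Suppose the service behind example-app-2 was deleted, so only example-app is still desired.
	desired := map[string]bool{"example-app.SO.stater.com": true}

	fmt.Println(planDeletes(current, desired, "k8s-so", "sync"))
	fmt.Println(planDeletes(current, desired, "k8s-so", "upsert-only"))

	// If ownership can't be read (found: "" in the logs), the record is skipped even under sync.
	orphaned := []Record{{Name: "example-app-2.SO.stater.com", Type: "A", Owner: ""}}
	fmt.Println(planDeletes(orphaned, desired, "k8s-so", "sync"))
}
```

Under this model, only example-app-2 is deleted, and only with the sync policy; a record whose owner comes back as "" is never touched. Separately, a Helm release whose chart passes policy=upsert-only would never delete anything, so it may be worth confirming which policy value your chart version actually sets (for example with --set policy=sync), since chart defaults can differ from the external-dns binary's default.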

I can see from the debug logging that the TXT records with owner IDs are being transferred (note: only the example-app and example-app-2 records are managed by external-dns):

...

time="2022-05-06T07:06:43Z" level=debug msg="Record=cssh.SO.stater.com.\t3600\tIN\tA\t172.22.40.125"

time="2022-05-06T07:06:43Z" level=debug msg="Record=example-app.SO.stater.com.\t0\tIN\tA\t172.22.40.125"

time="2022-05-06T07:06:43Z" level=debug msg="Record=example-app.SO.stater.com.\t0\tIN\tTXT\t\"heritage=external-dns,external-dns/owner=k8s-so,external-dns/resource=service/example-app/platform-k8s-example-app-edns\""

time="2022-05-06T07:06:43Z" level=debug msg="Record=example-app-2.SO.stater.com.\t0\tIN\tA\t172.22.40.125"

time="2022-05-06T07:06:43Z" level=debug msg="Record=example-app-2.SO.stater.com.\t0\tIN\tTXT\t\"heritage=external-dns,external-dns/owner=k8s-so,external-dns/resource=service/example-app/platform-k8s-example-app-edns\""

time="2022-05-06T07:06:43Z" level=debug msg="Record=mssql-knowledge-and-experience.SO.stater.com.\t3600\tIN\tA\t172.22.40.141"

time="2022-05-06T07:06:43Z" level=debug msg="Record=zabbix-proxy.SO.stater.com.\t3600\tIN\tCNAME\tsozbxprx01.europe.stater.corp."

...

But then it claims the owner ID does not match, which is weird because, just above, it clearly receives the TXT records with the correct owner IDs:

...

time="2022-05-06T07:06:43Z" level=debug msg="Skipping endpoint cssh.SO.stater.com 3600 IN A 172.22.40.125 [] because owner id does not match, found: \"\", required: \"k8s-so\""

time="2022-05-06T07:06:43Z" level=debug msg="Skipping endpoint example-app.SO.stater.com 0 IN A 172.22.40.125 [] because owner id does not match, found: \"\", required: \"k8s-so\""

time="2022-05-06T07:06:43Z" level=debug msg="Skipping endpoint example-app-2.SO.stater.com 0 IN A 172.22.40.125 [] because owner id does not match, found: \"\", required: \"k8s-so\""

time="2022-05-06T07:06:43Z" level=debug msg="Skipping endpoint mssql-knowledge-and-experience.SO.stater.com 3600 IN A 172.22.40.141 [] because owner id does not match, found: \"\", required: \"k8s-so\""

time="2022-05-06T07:06:43Z" level=debug msg="Skipping endpoint zabbix-proxy.SO.stater.com 3600 IN CNAME sozbxprx01.europe.stater.corp [] because owner id does not match, found: \"\", required: \"k8s-so\""

...
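To make the "owner id does not match ... found: \"\"" lines easier to interpret, here is a hypothetical sketch of an ownership check over TXT values shaped like the ones in the logs. `parseLabels` and `ownerOf` are illustrative names, not external-dns's real functions; only the label format (heritage=external-dns,external-dns/owner=...,external-dns/resource=...) is taken from the logs. It shows that an empty found value means no owner label was attached to the record at all (no TXT record matched its name, or the value failed to parse), not that a different owner was found.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// parseLabels parses a TXT value such as
// "heritage=external-dns,external-dns/owner=k8s-so,..." into a map.
// Hypothetical sketch: surrounding double quotes are trimmed because TXT
// values printed in zone-file form (as in the debug logs) are quoted.
func parseLabels(txt string) (map[string]string, error) {
	txt = strings.Trim(txt, `"`)
	labels := map[string]string{}
	for _, pair := range strings.Split(txt, ",") {
		kv := strings.SplitN(pair, "=", 2)
		if len(kv) != 2 {
			return nil, fmt.Errorf("malformed label %q", pair)
		}
		labels[kv[0]] = kv[1]
	}
	if labels["heritage"] != "external-dns" {
		return nil, errors.New("not an external-dns TXT record")
	}
	return labels, nil
}

// ownerOf looks up the TXT value for a record name by exact match and returns
// its owner label. A missing TXT record or an unparsable value both yield "".
func ownerOf(name string, txtByName map[string]string) string {
	txt, ok := txtByName[name]
	if !ok {
		return ""
	}
	labels, err := parseLabels(txt)
	if err != nil {
		return ""
	}
	return labels["external-dns/owner"]
}

func main() {
	txt := `"heritage=external-dns,external-dns/owner=k8s-so,external-dns/resource=service/example-app/platform-k8s-example-app-edns"`
	txtByName := map[string]string{"example-app.SO.stater.com": txt}

	fmt.Printf("%q\n", ownerOf("example-app.SO.stater.com", txtByName))
	// cssh has no TXT record in the transfer, so its owner is "".
	fmt.Printf("%q\n", ownerOf("cssh.SO.stater.com", txtByName))
}
```

Running it prints "k8s-so" for example-app and "" for cssh. The puzzle in the logs above is that example-app also ends up with "", even though its TXT record is clearly present in the transfer.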

Same issue here. Based on @willemm's logs above, it looks like the operator is failing when reading the owner tag, which suggests a string parsing problem.

It also looks like @k8s-ci-robot has been closing various permutations of this exact issue over the last couple of years before anyone gets a chance to offer a fix:

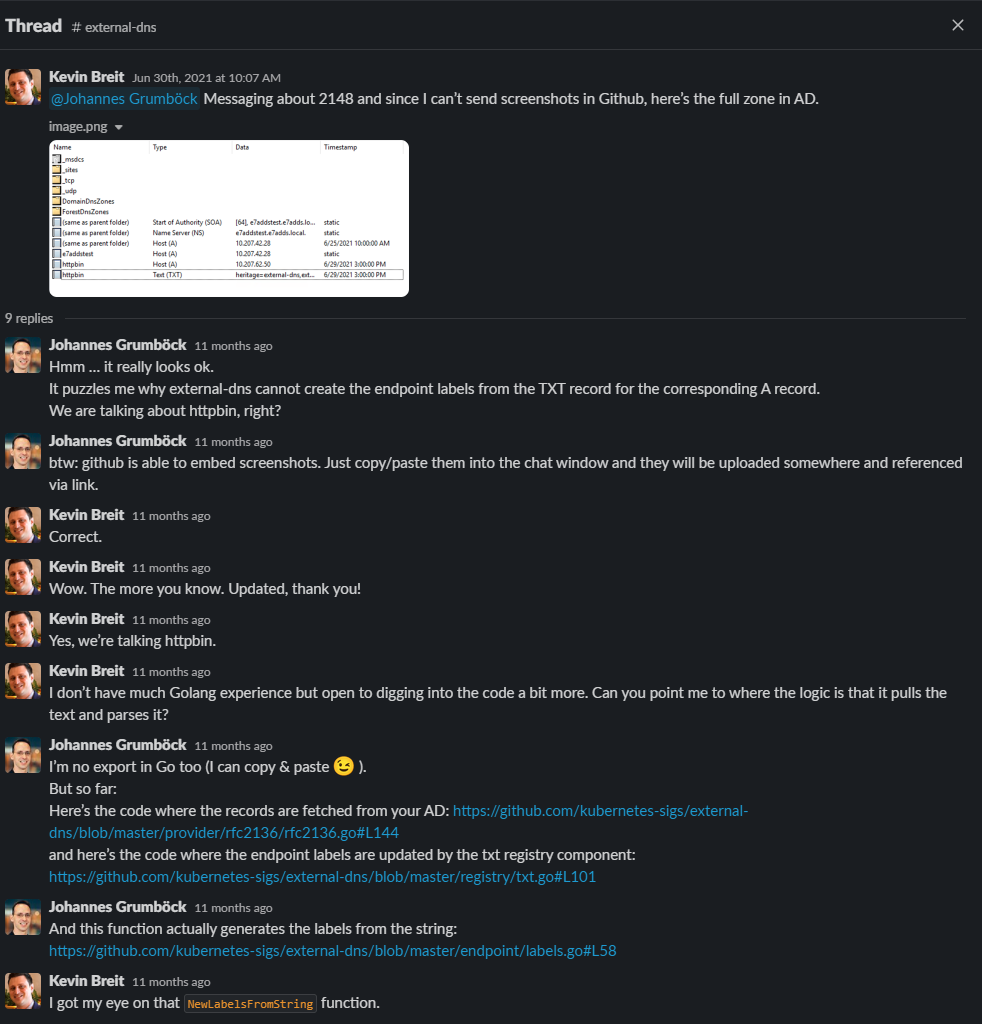

- (Windows DNS) https://github.com/kubernetes-sigs/external-dns/issues/2148

- (Windows DNS) https://github.com/kubernetes-sigs/external-dns/issues/2194

- (Windows DNS) https://github.com/kubernetes-sigs/external-dns/issues/882

- (Similar issue on Openstack) https://github.com/kubernetes-sigs/external-dns/issues/1122

(and it's probably going to close this one too).

This is the closest anyone has come to a fix: https://github.com/kubernetes-sigs/external-dns/issues/2148#issuecomment-872448356

Some Slack discussion here:

https://kubernetes.slack.com/archives/C771MKDKQ/p1625062020143900

This looks like the problematic function: https://github.com/kubernetes-sigs/external-dns/blob/da6f6730438511924c78de9090aea29b5e088fc8/provider/rfc2136/rfc2136.go#L131

And the trail has gone cold.

@njuettner, @Raffo, @linki, @jgrumboe: I understand Windows DNS isn't tested, but Windows is extremely common among enterprises running on-prem K8s. Any ideas who could actually provide some guidance?

@mdrakiburrahman Sorry to say, but I can't support this anymore, since I'm no longer using external-dns (and never actually used Windows DNS), so I can't contribute here.

Hello. I have the same problem with bind9.

RRs are created but not deleted.

I have no experience with Go, nor enough Kubernetes knowledge to debug this myself, but I'm willing to invest time and effort if someone could guide me through it.

Thanks!

The Kubernetes project currently lacks enough contributors to adequately respond to all issues and PRs.

This bot triages issues and PRs according to the following rules:

- After 90d of inactivity, lifecycle/stale is applied
- After 30d of inactivity since lifecycle/stale was applied, lifecycle/rotten is applied
- After 30d of inactivity since lifecycle/rotten was applied, the issue is closed

You can:

- Mark this issue or PR as fresh with /remove-lifecycle stale
- Mark this issue or PR as rotten with /lifecycle rotten
- Close this issue or PR with /close
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

The Kubernetes project currently lacks enough active contributors to adequately respond to all issues and PRs.

This bot triages issues and PRs according to the following rules:

- After 90d of inactivity, lifecycle/stale is applied
- After 30d of inactivity since lifecycle/stale was applied, lifecycle/rotten is applied
- After 30d of inactivity since lifecycle/rotten was applied, the issue is closed

You can:

- Mark this issue or PR as fresh with /remove-lifecycle rotten
- Close this issue or PR with /close
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle rotten

The Kubernetes project currently lacks enough active contributors to adequately respond to all issues and PRs.

This bot triages issues according to the following rules:

- After 90d of inactivity, lifecycle/stale is applied
- After 30d of inactivity since lifecycle/stale was applied, lifecycle/rotten is applied
- After 30d of inactivity since lifecycle/rotten was applied, the issue is closed

You can:

- Reopen this issue with /reopen
- Mark this issue as fresh with /remove-lifecycle rotten
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/close not-planned

@k8s-triage-robot: Closing this issue, marking it as "Not Planned".


Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.