external-dns

Root domain constantly added with doubled name (OVH only?)

What happened:

I registered the domain something.space (a cheap domain for tests) via OVH and would like to use it for nginx. I need it to point to my IP (e.g. 11.22.33.44) both with and without the www subdomain.

I tried A and CNAME records; they work correctly for subdomains but not for the main domain.

A subdomain is registered as:

www.something.space. A 11.22.33.44

but main domain is:

something.space.something.space. A 11.22.33.44

...and it is re-added constantly; no TXT record is stored.

What you expected to happen: There should be no difference between the two.

How to reproduce it (as minimally and precisely as possible): This example shows problem on my side:

kind: Service
apiVersion: v1
metadata:
  name: nginx-test-4
  annotations:
    external-dns.alpha.kubernetes.io/hostname: something.space
spec:
  type: ExternalName
  externalName: 11.22.33.44

This should register a record for something.space pointing at this IP, but the generated name is something.space.something.space.

Anything else we need to know?: I'm not yet sure whether this is caused by the unusual domain name (*.space) or is a bug in the OVH provider. From the debug logs I can see that it creates the doubled name for the main domain and only the subdomain part for subdomains.

Environment:

- External-DNS version (use external-dns --version): 0.7.6, but also checked latest
- DNS provider: OVH
- Others: *.space domain, which is quite odd but cheap to test with.

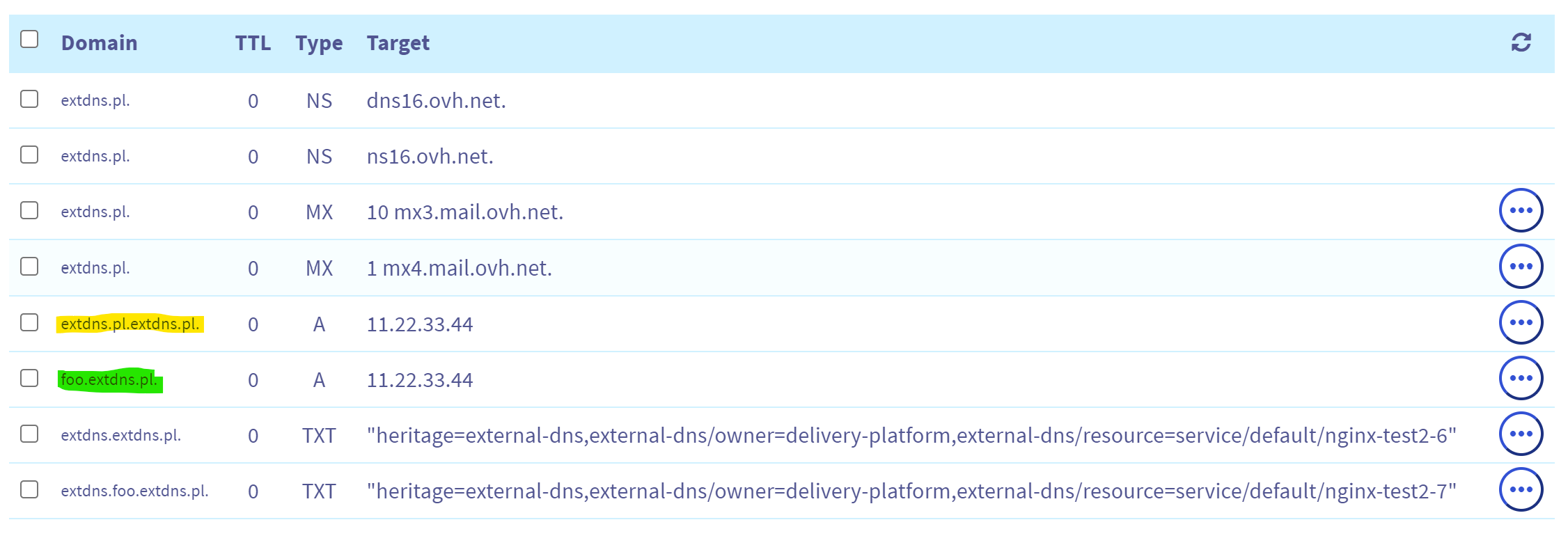

Today I checked the same thing with the latest external-dns and a different domain name (*.pl), and the problem is still present, so this is probably something in the OVH connector.

Services to create foo.extdns.pl and extdns.pl:

kind: Service
apiVersion: v1
metadata:
  name: nginx-test2-6
  annotations:
    external-dns.alpha.kubernetes.io/hostname: extdns.pl
spec:
  type: ExternalName
  externalName: 11.22.33.44
---
kind: Service
apiVersion: v1
metadata:
  name: nginx-test2-7
  annotations:
    external-dns.alpha.kubernetes.io/hostname: foo.extdns.pl
spec:
  type: ExternalName
  externalName: 11.22.33.44

Logs:

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213550925 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213551092 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213490211 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213550926 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213550927 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213551281 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213551207 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213551093 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213550928 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213490215 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213490880 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213551282 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213551208 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Getting record 5213490881 for extdns.pl"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213550925 for extdns.pl is {ovhRecordFields:{FieldType:TXT SubDomain:extdns.foo TTL:0 Target:\"heritage=external-dns,external-dns/owner=delivery-platform,external-dns/resource=service/default/nginx-test2-7\"} ID:5213550925 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213551092 for extdns.pl is {ovhRecordFields:{FieldType:A SubDomain:extdns.pl TTL:0 Target:11.22.33.44} ID:5213551092 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213490211 for extdns.pl is {ovhRecordFields:{FieldType:NS SubDomain: TTL:0 Target:dns16.ovh.net.} ID:5213490211 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213550926 for extdns.pl is {ovhRecordFields:{FieldType:A SubDomain:extdns.pl TTL:0 Target:11.22.33.44} ID:5213550926 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213550927 for extdns.pl is {ovhRecordFields:{FieldType:A SubDomain:foo TTL:0 Target:11.22.33.44} ID:5213550927 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213551281 for extdns.pl is {ovhRecordFields:{FieldType:TXT SubDomain:extdns TTL:0 Target:\"heritage=external-dns,external-dns/owner=delivery-platform,external-dns/resource=service/default/nginx-test2-6\"} ID:5213551281 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213551207 for extdns.pl is {ovhRecordFields:{FieldType:TXT SubDomain:extdns TTL:0 Target:\"heritage=external-dns,external-dns/owner=delivery-platform,external-dns/resource=service/default/nginx-test2-6\"} ID:5213551207 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213551093 for extdns.pl is {ovhRecordFields:{FieldType:TXT SubDomain:extdns TTL:0 Target:\"heritage=external-dns,external-dns/owner=delivery-platform,external-dns/resource=service/default/nginx-test2-6\"} ID:5213551093 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213550928 for extdns.pl is {ovhRecordFields:{FieldType:TXT SubDomain:extdns TTL:0 Target:\"heritage=external-dns,external-dns/owner=delivery-platform,external-dns/resource=service/default/nginx-test2-6\"} ID:5213550928 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213490215 for extdns.pl is {ovhRecordFields:{FieldType:NS SubDomain: TTL:0 Target:ns16.ovh.net.} ID:5213490215 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213551282 for extdns.pl is {ovhRecordFields:{FieldType:A SubDomain:extdns.pl TTL:0 Target:11.22.33.44} ID:5213551282 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Record 5213551208 for extdns.pl is {ovhRecordFields:{FieldType:A SubDomain:extdns.pl TTL:0 Target:11.22.33.44} ID:5213551208 Zone:extdns.pl}"

time="2021-10-26T07:52:54Z" level=info msg="OVH: 2 changes will be done"

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Add an entry to extdns.pl zone (ID : 5213550928) : extdns 0 IN TXT \"heritage=external-dns,external-dns/owner=delivery-platform,external-dns/resource=service/default/nginx-test2-6\""

time="2021-10-26T07:52:54Z" level=debug msg="OVH: Add an entry to extdns.pl zone (ID : 5213551208) : extdns.pl 0 IN A 11.22.33.44"

time="2021-10-26T07:52:55Z" level=info msg="OVH: 1 zones will be refreshed"

time="2021-10-26T07:52:55Z" level=debug msg="OVH: Refresh extdns.pl zone"
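The repeated records are easier to see when the debug output is grouped. Below is a minimal Python sketch that counts identical records in the "Record ... is {ovhRecordFields:...}" lines. The regular expression is written against the log format shown in this issue only; the function name `duplicate_records` is my own and is not part of external-dns.

```python
import re
from collections import Counter

# Matches the "{ovhRecordFields:{...} ID:... Zone:...}" part of the debug lines.
# Assumption: the field order and spacing are exactly as in the excerpt above.
RECORD_RE = re.compile(
    r"FieldType:(?P<type>\S+) SubDomain:(?P<sub>\S*) TTL:\d+ "
    r"Target:(?P<target>.*?)\} ID:(?P<id>\d+) Zone:(?P<zone>[^}\s]+)\}"
)


def duplicate_records(log_text):
    """Return {(type, subdomain, zone, target): count} for records seen more than once."""
    counts = Counter()
    for m in RECORD_RE.finditer(log_text):
        # The record ID is deliberately left out of the key: duplicates share
        # everything except their ID.
        counts[(m["type"], m["sub"], m["zone"], m["target"])] += 1
    return {key: n for key, n in counts.items() if n > 1}
```

Run against the excerpt above, this should report the A record with SubDomain extdns.pl four times and the TXT record with SubDomain extdns four times, while the foo records appear only once.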

This creates this:

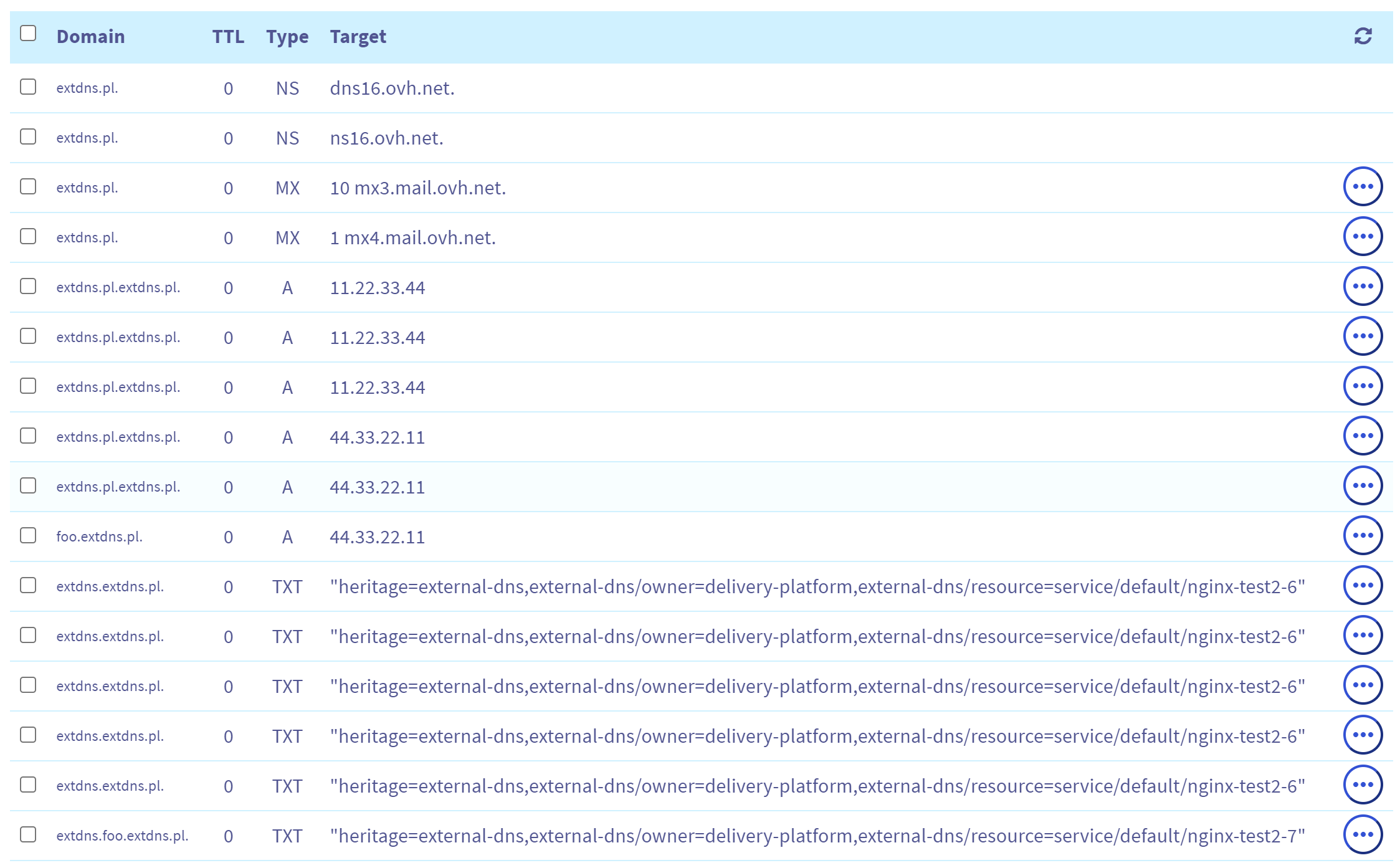

And it keeps adding the same record all the time :( Here I changed the IP to 44.33.22.11.

The subdomain was updated, and the main domain is yet again stuck doubled.

BTW: notice that I used the prefix "extdns." (same as the domain). I also tested with different names; no luck.

The Kubernetes project currently lacks enough contributors to adequately respond to all issues and PRs.

This bot triages issues and PRs according to the following rules:

- After 90d of inactivity, lifecycle/stale is applied
- After 30d of inactivity since lifecycle/stale was applied, lifecycle/rotten is applied
- After 30d of inactivity since lifecycle/rotten was applied, the issue is closed

You can:

- Mark this issue or PR as fresh with /remove-lifecycle stale
- Mark this issue or PR as rotten with /lifecycle rotten
- Close this issue or PR with /close
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

/remove-lifecycle stale

This is still an issue :(

I am facing the same issue. Did anyone find a way to fix this?

For now I added the root domains manually and use external-dns only for subdomains. The OVH provider is still ALPHA quality and I won't use it in production.

On the OVH side, I found a partial workaround, which is to set a dot prefix on the hostname:

.domain.tld

Then the entry added is correct for root domain.
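One possible explanation that fits both the doubled name and this workaround is sketched below in Python. OVH stores record names relative to the zone (the NS records in the logs have an empty SubDomain). If the name is only shortened when it ends with "." plus the zone, the bare root domain is passed through unchanged and the zone gets appended again. This is a guess at the mechanism, not the provider's actual code; `naive_subdomain`, `apex_aware_subdomain` and `fqdn` are hypothetical names.

```python
def naive_subdomain(dns_name, zone):
    """Hypothetical behaviour: strip '.<zone>' if present, else keep the name as-is."""
    suffix = "." + zone
    if dns_name.endswith(suffix):
        return dns_name[: -len(suffix)]
    return dns_name  # the root domain falls through here unchanged


def apex_aware_subdomain(dns_name, zone):
    """Same idea, but the zone apex maps to an empty subdomain."""
    name = dns_name.rstrip(".")
    zone = zone.rstrip(".")
    if name == zone:
        return ""
    suffix = "." + zone
    if name.endswith(suffix):
        return name[: -len(suffix)]
    raise ValueError(f"{dns_name!r} is not inside zone {zone!r}")


def fqdn(subdomain, zone):
    """Full name that results from a zone-relative subdomain."""
    return f"{subdomain}.{zone}." if subdomain else f"{zone}."


# Root domain: the naive version reproduces the doubled name from the report.
print(fqdn(naive_subdomain("extdns.pl", "extdns.pl"), "extdns.pl"))
# Dot prefix: the suffix check now matches and leaves an empty subdomain.
print(fqdn(naive_subdomain(".extdns.pl", "extdns.pl"), "extdns.pl"))
# Apex-aware version handles the root domain without the dot prefix.
print(fqdn(apex_aware_subdomain("extdns.pl", "extdns.pl"), "extdns.pl"))
```

Under this assumption the dot prefix works only because ".extdns.pl" happens to end with "." plus the zone name; it would not by itself explain why the record is re-created on every sync.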

But I am still facing the issue that the value is recorded again on every sync, so a new A record is created every minute... Has anyone found how to fix this?

@Raffo any idea how to fix this?





