cluster-api-provider-azure

cluster-api-provider-azure copied to clipboard

cluster-api-provider-azure copied to clipboard

AzureManagedControlPlane with existing vnet and existing subnet attempts to delete subnet when cluster is deleted

/kind bug

[Before submitting an issue, have you checked the Troubleshooting Guide?]

What steps did you take and what happened:

If you use a AzureManagedControlPlane with an existing virtualNetwork.name and an existing virtualNetwork.subnet.name it will properly use the existing resources. However, when deleting the cluster it will attempt to delete the subnet specified in virtualNetwork.subnet.name. You are then stuck and unable to finish the deletion of the cluster without also losing the existing subnet (if it already has resources in it).

What did you expect to happen:

While the documentation doesn't specifically say that you can use an existing subnet, I read it as implied because you can use an existing vnet. If that's not accurate, I can open a PR to update the documentation accordingly. However, it would be nice if you could use an existing subnet inside of an existing vnet when creating new AKS clusters.

In our environment, we have a single subnet in our vnets that we'd like to use for new AKS clusters being created with CAPZ as we do a migration so that we don't have to redo any peering connections.

Anything else you would like to add: [Miscellaneous information that will assist in solving the issue.]

This seems similar to https://github.com/kubernetes-sigs/cluster-api-provider-azure/issues/1590

Environment:

- cluster-api-provider-azure version: registry.k8s.io/cluster-api-azure/cluster-api-azure-controller:v1.4.0

- Kubernetes version: (use

kubectl version): 1.22.11 - OS (e.g. from

/etc/os-release): n/a

/area managedclusters

Hi @mtougeron, I think the first thing we want to do is validate that AKS itself supports the create flow you outlined (install into a custom (pre-existing) VNET + custom (pre-existing) subnet. Does the delete flow using vanilla AKS preserve both the previously existing subnet + VNET, only deleting the AKS-specific resources?

AKS itself supports the create flow you outlined (install into a custom (pre-existing) VNET + custom (pre-existing) subnet

It worked with CAPZ at least but I haven't tried with doing it via the CLI or Azure Portal. I'll try that.

Does the delete flow using vanilla AKS preserve both the previously existing subnet + VNET, only deleting the AKS-specific resources

When deleting via CAPZ it preserved all other resources in the subnet & VNET. But I didn't try via the CLI/Portal. I'll try that as well.

AKS itself supports the create flow you outlined (install into a custom (pre-existing) VNET + custom (pre-existing) subnet

It worked with CAPZ at least but I haven't tried with doing it via the CLI or Azure Portal. I'll try that.

Yes, creating via the Portal worked. I was able to deploy into an existing VNET and subnet that had other resources already in it.

Does the delete flow using vanilla AKS preserve both the previously existing subnet + VNET, only deleting the AKS-specific resources

When deleting via CAPZ it preserved all other resources in the subnet & VNET. But I didn't try via the CLI/Portal. I'll try that as well.

When I deleted the manually AKS cluster via the Portal it worked and left the existing resources alone.

I think the root cause is that the subnet deletion isn't checking for the ownership tag like it does for the VNET deletion.

FYI subnets can't have tags in Azure (they are a nested resource) so we have to rely on the VNet tags to determine if the subnet is owned. Just like we do for self-managed CAPZ clusters, we have to assume that if a user is bringing a pre-existing VNet, they are also bringing existing subnets (otherwise it's impossible to tell which subnets were created by CAPZ vs. the user).

The issue seems to be that https://github.com/kubernetes-sigs/cluster-api-provider-azure/blob/main/azure/scope/managedcontrolplane.go#L330 is always returning true for ManagedClusters.

FYI subnets can't have tags in Azure

Ah, wasn't aware of that. That would explain why I couldn't find something related to it in the code. :)

is always returning true for ManagedClusters

Good find!

The issue seems to be that https://github.com/kubernetes-sigs/cluster-api-provider-azure/blob/main/azure/scope/managedcontrolplane.go#L330 is always returning true for ManagedClusters.

@alexeldeib you owe @CecileRobertMichon a 🍷

🙇♂️🎉

Nice find!!

On Thu, Jul 28, 2022 at 3:22 PM Jack Francis @.***> wrote:

The issue seems to be that https://github.com/kubernetes-sigs/cluster-api-provider-azure/blob/main/azure/scope/managedcontrolplane.go#L330 is always returning true for ManagedClusters.

@alexeldeib https://github.com/alexeldeib you owe @CecileRobertMichon https://github.com/CecileRobertMichon a 🍷

— Reply to this email directly, view it on GitHub https://github.com/kubernetes-sigs/cluster-api-provider-azure/issues/2484#issuecomment-1198542152, or unsubscribe https://github.com/notifications/unsubscribe-auth/ABT4LWKRPFN3PNWOBTKBP6TVWLMWPANCNFSM53W63AZQ . You are receiving this because you were mentioned.Message ID: @.*** com>

I actually couldn't repro this.

I used the standard reference AKS template that we use to test, but with a modified AzureManagedControlPlane:

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1

kind: AzureManagedControlPlane

metadata:

name: ${CLUSTER_NAME}

namespace: default

spec:

identityRef:

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1

kind: AzureClusterIdentity

name: ${CLUSTER_IDENTITY_NAME}

location: ${AZURE_LOCATION}

resourceGroupName: aks-mine

sshPublicKey: ${AZURE_SSH_PUBLIC_KEY_B64:=""}

subscriptionID: ${AZURE_SUBSCRIPTION_ID}

version: ${KUBERNETES_VERSION}

virtualNetwork:

cidrBlock: 10.0.0.0/8

name: aks-mine-vnet

subnet:

cidrBlock: 10.240.0.0/16

name: aks-mine-subnet

So basically, before creating the cluster I created a resource group with the name "aks-mine", and then created a new Virtual Network in that resource group called "aks-mine-vnet" with a subnet called "aks-mine-subnet" (I used the CIDR configuration declared in the above spec).

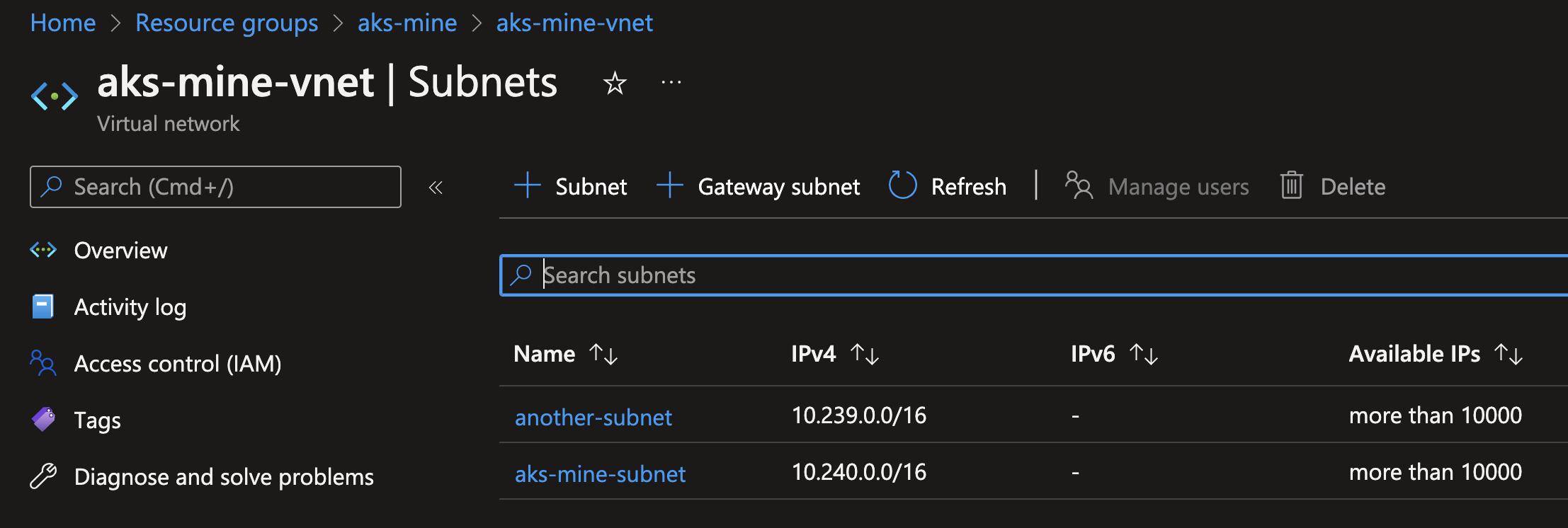

I think created another subnet in "aks-mine-vnet" with no capz reference called "another-subnet":

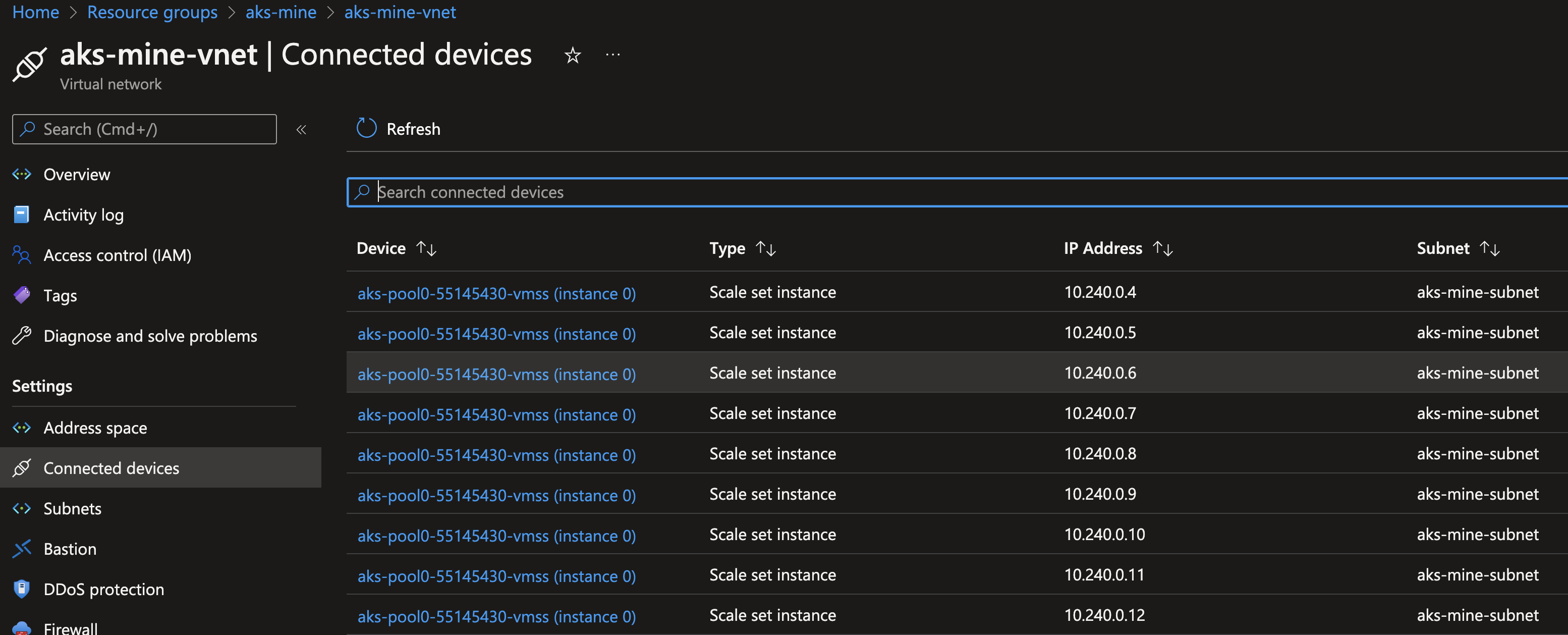

After all of the above, I created the AKS cluster from the reference template (w/ the above AzureManagedControlPlane mods) and observed the cluster create successfully, with the Azure CNI vnet IP addresses assigned in the pre-existing VNET + subnet as expected:

A short while later, I deleted the cluster:

$ k get clusters -A

NAMESPACE NAME PHASE AGE VERSION

default aks-mine Provisioned 10m

$ k delete cluster aks-mine

cluster.cluster.x-k8s.io "aks-mine" deleted

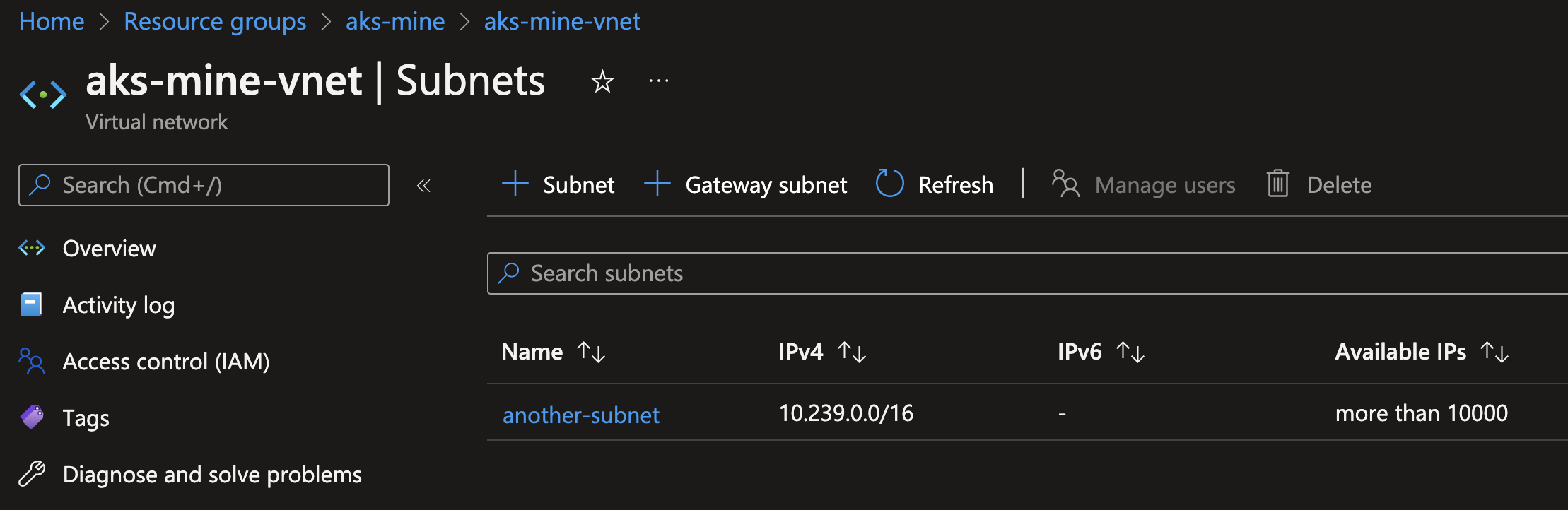

The pre-existing resource group and vnet still exist, as well as that random subnet that I created:

What about my repro above is different from what you're doing, and observing, in your environment?

What about my repro above is different from what you're doing, and observing, in your environment?

It shouldn't delete the aks-mine-subnet subnet since it was pre-existing and unmanaged by CAPZ.

/assign @mtougeron @jackfrancis