csi-driver-smb

Old share from old server mounted after deleting and recreating volume/deployment with the same name

What happened:

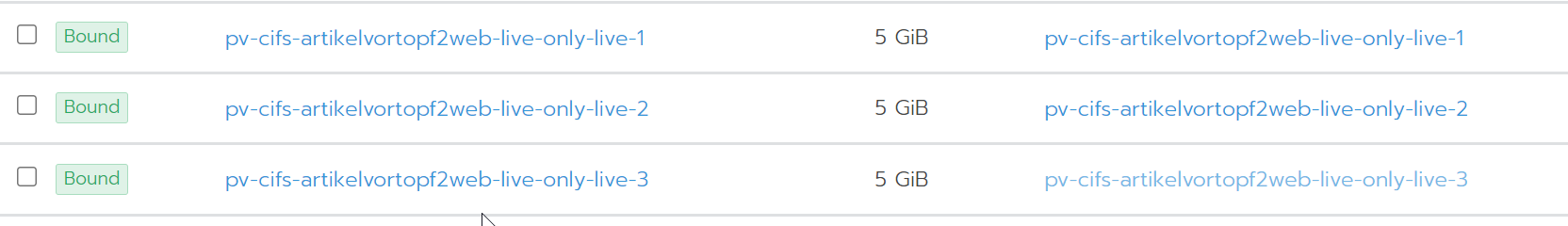

Our deployments are generated from a git repo, volumes are generated from a configfile and get generated + numbered names like this

After a user changed the target server for a smb mount the volume is updated and i can see the new config in the pv

❯ k describe pv pv-cifs-artikelvortopf2web-live-only-live-1
Name:            pv-cifs-artikelvortopf2web-live-only-live-1
Labels:          kapp.k14s.io/app=1663849042183958382
                 kapp.k14s.io/association=v1.6cf92940af1aa5db892a65e20305842d
Annotations:     kapp.k14s.io/identity: v1;//PersistentVolume/pv-cifs-artikelvortopf2web-live-only-live-1;v1
                 kapp.k14s.io/original:
                   {"apiVersion":"v1","kind":"PersistentVolume","metadata":{"labels":{"kapp.k14s.io/app":"1663849042183958382","kapp.k14s.io/association":"v1...
                 kapp.k14s.io/original-diff-md5: d896c768fb4b1cfee46f39dc38dffbbc
                 pv.kubernetes.io/bound-by-controller: yes
Finalizers:      [kubernetes.io/pv-protection]
StorageClass:
Status:          Bound
Claim:           portal/pv-cifs-artikelvortopf2web-live-only-live-1
Reclaim Policy:  Retain
Access Modes:    RWX
VolumeMode:      Filesystem
Capacity:        5Gi
Node Affinity:   <none>
Message:
Source:
    Type:              CSI (a Container Storage Interface (CSI) volume source)
    Driver:            smb.csi.k8s.io
    FSType:
    VolumeHandle:      pv-cifs-artikelvortopf2web-live-only-live-1
    ReadOnly:          false
    VolumeAttributes:  source=//srv-sql-be-live.mydomain.com/BulkFiles/artikelvortopf2
Events:          <none>

But the deployment still mounts the old server:

root@artikelvortopf2web-live-84bbf97b5d-8zthw:/var/www# mount | grep cifs
//srv-mssql-be.mydomain.com/BulkFiles on /var/www/bulk type cifs (rw,relatime,vers=3.0,cache=strict,username=user,domain=DOMAIN,uid=0,forceuid,gid=0,forcegid,addr=172.16.1.7,file_mode=0777,dir_mode=0777,soft,nounix,serverino,mapposix,rsize=1048576,wsize=1048576,echo_interval=60,actimeo=1)

I'm not yet sure what the problem is. My first guess was some kind of caching, because the name of the volume does not change.

What you expected to happen:

Updated mount to the new server

How to reproduce it:

I guess: create a volume, use it, delete it, then recreate it with the same name but a different server. I will try to verify this.

Anything else we need to know?:

Environment:

- CSI Driver version: 1.5.0 (Helm Chart version ?)
- Kubernetes version (use kubectl version): v1.18.10
- OS (e.g. from /etc/os-release): 18.04.4 LTS (GNU/Linux 4.15.0-191-generic x86_64)
- Kernel (e.g. uname -a): GNU/Linux 4.15.0-191-generic x86_64
- Install tools:
- Others:

Same problem with the current version, 1.9.0.

When scaling up my deployment, I even get different results in each instance.

These are the mounts in three instances of the same deployment. Everything is totally mixed up:

root@artikelvortopf2web-live-687c6f8f9b-sjkbj:/var/www# mount | grep cifs
//srv-mssql-be.mydomain.com/BulkFiles on /var/www/storage type cifs
//10.10.10.195/k8s-cifs/artikelvortopf/live/media on /var/www/bulk type cifs
//10.10.10.195/k8s-cifs/artikelvortopf/live/storage on /var/www/public/product_images type cifs

root@artikelvortopf2web-live-687c6f8f9b-hcl7v:/var/www# mount | grep cifs
//10.10.10.195/k8s-cifs/artikelvortopf/live/storage on /var/www/storage type cifs
//10.10.10.195/k8s-cifs/artikelvortopf/live/media on /var/www/bulk type cifs
//10.10.10.195/k8s-cifs/artikelvortopf/live/storage on /var/www/public/product_images type cifs

root@artikelvortopf2web-live-687c6f8f9b-hcl7v:/var/www# mount | grep cifs
//10.10.10.195/k8s-cifs/artikelvortopf/live/storage on /var/www/storage type cifs
//10.10.10.195/k8s-cifs/artikelvortopf/live/media on /var/www/bulk type cifs
//10.10.10.195/k8s-cifs/artikelvortopf/live/storage on /var/www/public/product_images type cifs

And these are the shares and the paths where they should be mounted:

//10.10.10.195/k8s-cifs/artikelvortopf/live/storage => /var/www/storage
//10.10.10.195/k8s-cifs/artikelvortopf/live/media => /var/www/public/product_images
//srv-sql-be-live.mydomain.com/BulkFiles/artikelvortopf2 => /var/www/bulk

So after some more checking, I found old mounts on some of the workers:

//srv-mssql-be.mydomain.com/BulkFiles on /var/lib/kubelet/plugins/kubernetes.io/csi/pv/pv-cifs-artikelvortopf2web-live-only-live-1/globalmount

As the mountpoint uses the name of the PV, it seems the existing mount is not validated but simply reused, instead of a new one being created with the new, correct destination.

After manually unmounting these old mounts or restarting the node, everything is mounted correctly again.

What does the lifecycle of a mount look like? When does the globalmount get unmounted? I saw them on multiple nodes where no workload/pod was running anymore.
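
To see which share is actually attached at each PV's globalmount on a worker, the `mount` output can be reduced to PV name and mounted source. This is only a minimal sketch and not part of the driver: the function name `list_globalmounts` is made up for illustration, and it assumes the path layout seen above (`.../csi/pv/<pv-name>/globalmount`) and share paths without spaces.

```shell
# Hypothetical helper (not part of csi-driver-smb).
# Reads `mount` output on stdin and prints "<pv-name> <mounted-source>"
# for every cifs mount whose mountpoint ends in csi/pv/<pv-name>/globalmount.
# Assumption: share paths contain no spaces, so awk's field splitting is safe.
list_globalmounts() {
  awk '
    $2 == "on" && $4 == "type" && $5 == "cifs" {
      n = split($3, parts, "/")
      if (n >= 4 && parts[n] == "globalmount" && parts[n-2] == "pv" && parts[n-3] == "csi")
        print parts[n-1], $1
    }
  '
}

# Usage on a worker node:
#   mount | list_globalmounts
```

Comparing this output with the `source` shown by `kubectl describe pv` for the same PV name would show whether a node still holds the old share.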

I'm seeing this behaviour too, but I'm unable to find globalmount.

I've torn everything in the cluster down and removed and reinstalled the CSI driver, but I'm still seeing this:

kubectl exec -it csi-smb-node-lc9pd -n kube-system -c smb -- mount | grep cifs
//10.20.0.10/proxmox-zfs on /var/lib/kubelet/plugins/kubernetes.io/csi/pv/pv-cfg/globalmount type cifs
//10.20.0.10/proxmox-zfs on /var/lib/kubelet/plugins/kubernetes.io/csi/pv/pv-smb/globalmount type cifs

The two PVs no longer exist, but I'm unable to find /var/lib/kubelet/plugins/kubernetes.io on the hosts.

Edit: nvm, found it on one host. Same issue. How were you able to remove the files?

Edit2: umount the cifs share on the host, then delete the PV files.

Thanks. I thought I was going mental.

In my case, I accidentally mounted the wrong directory in my pv and it seems like it has persisted after that as a result.

Should this not get unmounted from all hosts when the pv is removed, or is that not possible to do?

@xlanor We were moving shares from older servers to new ones. Only the hostname/IP changed; paths and PV names remained unchanged, and we had mounts mixed up all over the place... not ideal :( But somewhat similar to your accidental wrong/corrected mount.

There should definitely be a full check on PV name / host / IP and all mount properties (which I did not test, e.g. changing the SMB version and so on), plus some kind of cleanup for orphaned/incorrect mounts.

I'm more than open to contributing to this, but would need clarification on what exactly the expected behaviour would be like from the maintainer, like you said w.r.t the lifecycle.

I imagine this might not be a problem in cloud if you tear down hosts regularly and respawn them, but I'm running this on prem in my homelab where this is slightly more problematic

How did you change the server name in the PV?

PVs are deleted and recreated, as far as I know.

The Kubernetes project currently lacks enough contributors to adequately respond to all issues and PRs.

This bot triages issues and PRs according to the following rules:

- After 90d of inactivity, lifecycle/stale is applied
- After 30d of inactivity since lifecycle/stale was applied, lifecycle/rotten is applied
- After 30d of inactivity since lifecycle/rotten was applied, the issue is closed

You can:

- Mark this issue or PR as fresh with /remove-lifecycle stale
- Mark this issue or PR as rotten with /lifecycle rotten
- Close this issue or PR with /close
- Offer to help out with Issue Triage

Please send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

Not stale, still an issue

/remove-lifecycle stale

/lifecycle stale

/lifecycle rotten

/remove-lifecycle rotten

Is there any workaround for this issue if I have encountered it? i.e. how do I force it to use the correct parameters?

/lifecycle stale

/remove-lifecycle stale

If you change the setting in the PV, the change only takes effect when a pod with that volume mount is scheduled to another node that does not already have this volume mounted. That's by design.

Since this driver uses globalmount, the unmount for a specific PV only happens when the last pod with that volume mount is deleted on the node.

Closing this issue since it's by design.
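
Going by the explanation above, the nodes that keep an existing globalmount would be the ones still running at least one pod that uses the claim. A rough way to list them is sketched below. The helper name `nodes_pinning_claim` is hypothetical, and the input format is an assumption: one `NODE POD CLAIMS` line per pod, as `kubectl get pods` with `custom-columns` is expected to print it, with multiple claims comma-separated and `<none>` for missing values.

```shell
# Hypothetical helper (not part of csi-driver-smb).
# $1:    PVC name to look for
# stdin: lines of "NODE POD CLAIMS", where CLAIMS is a comma-separated list
# Prints "<node> <number-of-pods-using-the-claim>", sorted by node name.
nodes_pinning_claim() {
  awk -v claim="$1" '
    $1 != "<none>" {                      # skip pods not yet scheduled to a node
      n = split($3, claims, ",")
      for (i = 1; i <= n; i++)
        if (claims[i] == claim) { pods[$1]++; break }
    }
    END { for (node in pods) print node, pods[node] }
  ' | sort
}

# Usage (assumed input command):
#   kubectl get pods -n <namespace> --no-headers \
#     -o 'custom-columns=NODE:.spec.nodeName,POD:.metadata.name,CLAIMS:.spec.volumes[*].persistentVolumeClaim.claimName' \
#     | nodes_pinning_claim <claim-name>
```

A node listed here would be expected to keep serving its existing mount. A node that is not listed but still shows the share in `mount` would match the orphaned globalmounts reported earlier in this thread.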

I had to write a script to umount all related mounts in globalmount, even after all workloads were removed. Anyhow... as long as the behavior is not predictable for the user and changing the mount is allowed.. this is shitty by design.

There should only be two options

- Change mount => error not allowed

- Change mount => new mount active

Now we have a nice mix of nodes pointing to different shares... just roll a dice to see where you end up 🤷

Any chance you can share this script? Still running into this issue, sadly, and I suspect it just caused an outage on my end.

@xlanor Not really a finished script to share. I had a list of old server names that were used in mounts before they were migrated to newer servers. The script connected to all workers, checked for old mounts using those server names, and unmounted them.

Get a list of all PVs with the UNC path of the share:

kubectl get pv -o json | jq -r '.items[] | select(.spec.csi) | "\(.metadata.name) \(.spec.csi.volumeAttributes.source)"'
pv-cifs-47111a8841df1f56cff925721f851c16e0f9a25f-only-1 //srv-mssql1.mydomain.com/BulkFiles
pv-cifs-62bdeee83e02f2194d860fc264076dc039d45a6d-only-1 //srv-sql-be1.mydomain.com/BulkFiles/artikelvortopf2
pv-cifs-6f675830524d0ba158cb5bb4d1cedda4ec19ddd5-only-1 //srv-nav-sql.mydomain.com/BULKFiles

Grep that for the old server names, connect to all workers, and run:

umount /var/lib/kubelet/plugins/kubernetes.io/csi/pv/pv-cifs-6f675830524d0ba158cb5bb4d1cedda4ec19ddd5-only-1/globalmount

I think that was it overall.
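
The steps above could be sketched roughly as follows. This is not the original script. Instead of grepping for old server names, it compares each globalmount on a worker with the PV's current source. The function name `print_stale_umounts` is hypothetical. It takes a file with the `<pv-name> <source>` list from the `kubectl get pv` command above and reads a worker's `mount` output on stdin. It only prints `umount` commands and does not run them. Sources are compared as exact strings, which is an assumption: differences in case or a trailing slash would be reported as stale.

```shell
# Hypothetical helper (not part of csi-driver-smb); dry run only.
# $1:    file with "<pv-name> <expected-source>" lines
# stdin: `mount` output from one worker
# Prints one `umount <path>` line per globalmount that points at a different
# source than the PV list says, or whose PV is not in the list at all.
print_stale_umounts() {
  pv_list=$1
  # Refuse to run without the PV list, otherwise every mount would look orphaned.
  [ -r "$pv_list" ] || { echo "cannot read PV list: $pv_list" >&2; return 1; }
  awk -v pvfile="$pv_list" '
    BEGIN {
      while ((getline line < pvfile) > 0)
        if (split(line, f, " ") >= 2) expected[f[1]] = f[2]
      close(pvfile)
    }
    $2 == "on" && $4 == "type" && $5 == "cifs" {
      n = split($3, parts, "/")
      if (n < 4 || parts[n] != "globalmount" || parts[n-2] != "pv" || parts[n-3] != "csi") next
      pv = parts[n-1]
      if (!(pv in expected))
        print "umount " $3 "  # PV gone, mounted: " $1
      else if (expected[pv] != $1)
        print "umount " $3 "  # mounted: " $1 ", expected: " expected[pv]
    }
  '
}

# Usage (review the output before running any of it):
#   kubectl get pv -o json | jq -r '...' > pvs.txt     # command from above
#   ssh <worker> mount | print_stale_umounts pvs.txt
```

Printing the commands instead of executing them keeps the decision with the operator, which seems safer given that a wrong unmount on a node with running pods could cause its own outage.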