kubeone


Azure External CSI and kube-controller-manager volume-attachment conflict (race)

What happened?

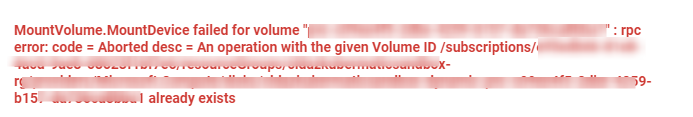

With the external CSI driver for Azure, our volumes sometimes fail to bind, with the error "An operation with the given VolumeID xxx already exists".

Error message:

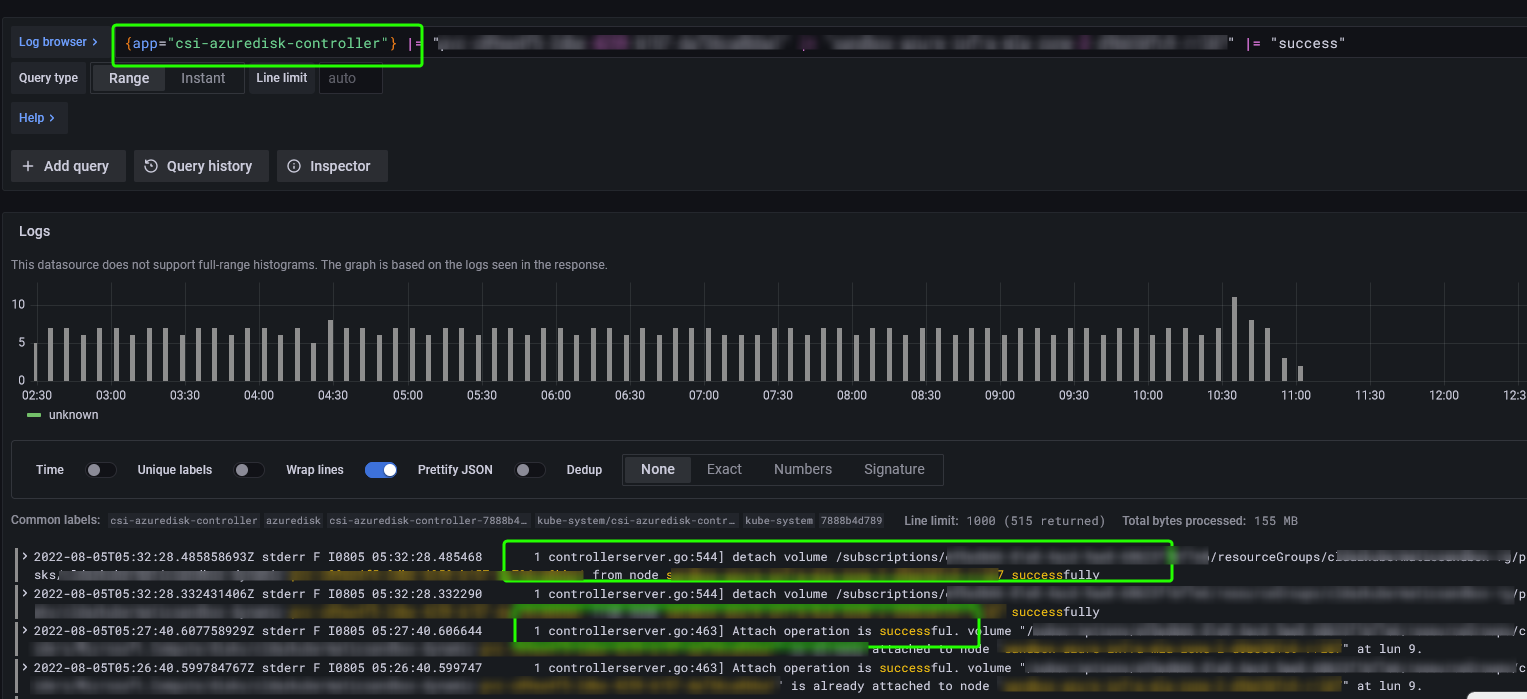

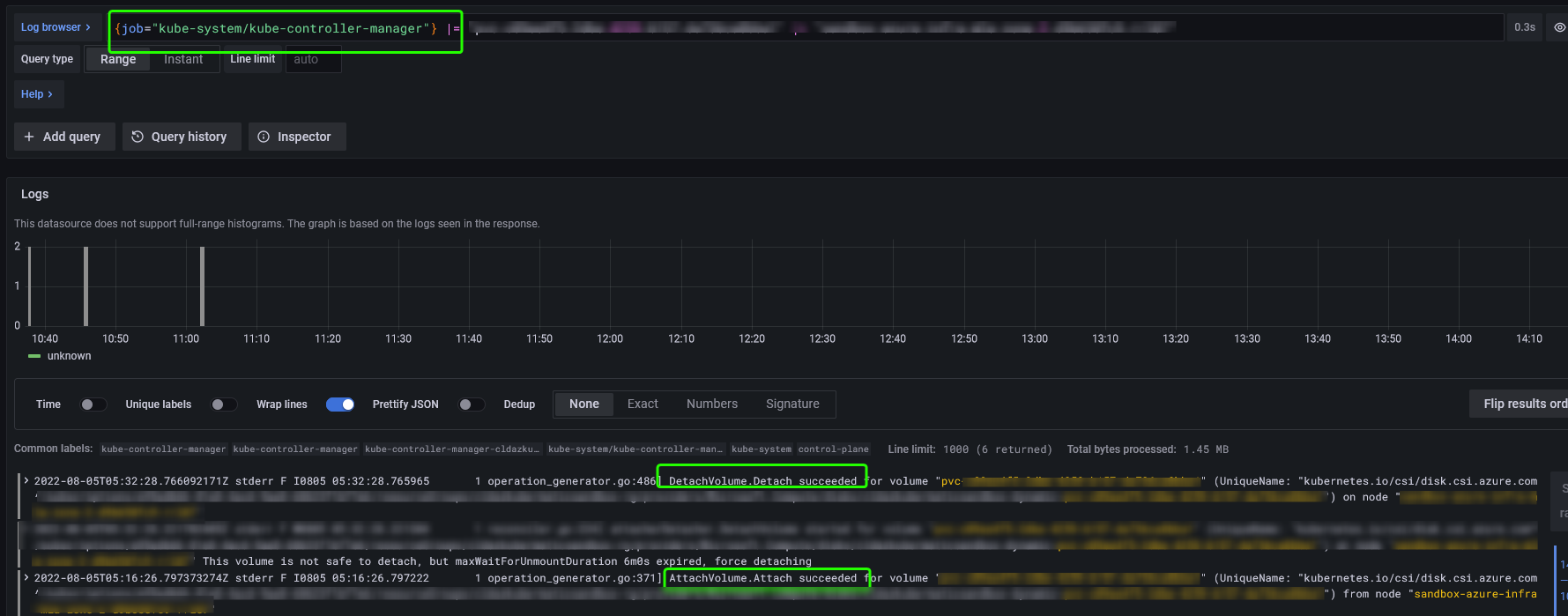

On closer inspection of the logs, it appears that both csi-azuredisk-controller and kube-controller-manager try to attach the volume, which creates a conflict.

csi-azuredisk-controller logs:

kube-controller-manager logs:

At this point, the only solution I am aware of is to delete the node where the conflict occurs.

Expected behavior

Volume attachment should work without any issues

How to reproduce the issue?

Unfortunately, there are no confirmed steps to reproduce the issue.

What KubeOne version are you using?

$ kubeone version
{
  "kubeone": {
    "major": "1",
    "minor": "4",
    "gitVersion": "1.4.4",
    "gitCommit": "3d62a6ff07d0f3eacf9c9900acf8ccb71333466f",
    "gitTreeState": "",
    "buildDate": "2022-06-02T13:24:15Z",
    "goVersion": "go1.18.1",
    "compiler": "gc",
    "platform": "linux/amd64"
  },
  "machine_controller": {
    "major": "1",
    "minor": "43",
    "gitVersion": "v1.43.3",
    "gitCommit": "",
    "gitTreeState": "",
    "buildDate": "",
    "goVersion": "",
    "compiler": "",
    "platform": "linux/amd64"
  }
}

Provide your KubeOneCluster manifest here (if applicable)

apiVersion: kubeone.k8c.io/v1beta2
kind: KubeOneCluster
name: XXXXXX
versions:
  kubernetes: "1.22.9"
apiEndpoint:
  host: 'XXXX.xxx.xx.xx'
containerRuntime:
  containerd: {}
cloudProvider:
  external: true
  azure: {}
clusterNetwork:
  nodePortRange: "30000-30199"
addons:
  enable: true
  path: "./addons" # always apply
  addons:
    - name: azure-sc
    - name: backup-restic
    - name: cluster-autoscaler # Using default addon from kubeone now.
    - name: docker-pre-pull-daemonset
    - name: ntp-daemonset
    - name: k8s-event-logger

What cloud provider are you running on?

Azure

What operating system are you running in your cluster?

Ubuntu 20.04

Additional information

I strongly suspect that the behaviour perceived as a race condition is "working as intended": VolumeAttachment objects are likely created and watched by kube-controller-manager and then processed by the CSI driver (see, for example, the description of the external-attacher sidecar). Essentially, kube-controller-manager requests a volume attachment and the CSI driver acts on it by attaching the volume. I would expect both to log their part of that workflow.
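To make that workflow concrete, here is a toy model in Python. It is only a sketch under assumptions: the class and function names (`VolumeAttachment`, `InFlightLocks`, `CsiDriver`, `controller_manager_request`) are hypothetical and are not taken from the azuredisk driver or from Kubernetes code. It assumes the driver keeps a per-volume "in flight" lock and rejects a second request for the same volume while the first is still running, which would be one plausible source of the "already exists" message when an attach is slow and gets retried.

```python
from dataclasses import dataclass


@dataclass
class VolumeAttachment:
    """Hypothetical stand-in for the object kube-controller-manager creates."""
    volume_id: str
    node: str
    attached: bool = False


class InFlightLocks:
    """Tracks volume IDs that currently have an operation in progress."""

    def __init__(self):
        self._busy = set()

    def try_acquire(self, volume_id):
        if volume_id in self._busy:
            return False
        self._busy.add(volume_id)
        return True

    def release(self, volume_id):
        self._busy.discard(volume_id)


class CsiDriver:
    """Toy CSI controller: acts on attachment requests, one per volume at a time."""

    def __init__(self):
        self.locks = InFlightLocks()

    def begin_attach(self, va):
        # Assumption: a second request for a busy volume is rejected, not queued.
        if not self.locks.try_acquire(va.volume_id):
            raise RuntimeError(
                f"An operation with the given VolumeID {va.volume_id} already exists"
            )

    def finish_attach(self, va):
        # The (possibly slow) cloud attach has completed; release the lock.
        va.attached = True
        self.locks.release(va.volume_id)


def controller_manager_request(volume_id, node):
    """kube-controller-manager's part: it only requests the attachment."""
    return VolumeAttachment(volume_id=volume_id, node=node)


if __name__ == "__main__":
    driver = CsiDriver()
    va = controller_manager_request("disk-1", "node-a")
    driver.begin_attach(va)          # first attach starts and holds the lock
    try:
        driver.begin_attach(va)      # a retry arrives before the first finishes
    except RuntimeError as err:
        print(err)
    driver.finish_attach(va)
    print(va.attached)
```

In this model both components log activity for the same volume even though only the driver performs the attach, and the error appears only while an earlier operation has not yet finished. Whether that matches what happened in this cluster would need to be confirmed from the actual logs.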

Issues go stale after 90d of inactivity.

After a further 30 days, they will turn rotten.

Mark the issue as fresh with /remove-lifecycle stale.

If this issue is safe to close now please do so with /close.

/lifecycle stale