kube-ovn


A Bridge between SDN and Cloud Native (Project under CNCF)

# Expected Behavior Two pods with static IPs can ping each other # Actual Behavior The two pods cannot ping each other # Steps to Reproduce the Problem 1. create statefulset-xyz 2. create statefulset-uvw...

# Expected Behavior VMs still work after all VMs are rebooted when kube-ovn-controller is set with keep-vm-ip = true # Actual Behavior # Steps to Reproduce the Problem use kube-ovn 1.10.0 1....

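k3s has an svclb-traefik, which adds an iptables rule that DNATs traffic on host ports 80 and 443 to the svclb-traefik Pod: Version 1.10.2 does not generate this iptables rule: As a result, of the two network interfaces, one is reachable and the other is not: Version 1.9.5 does not have this problem: In addition, the ovn-central component keeps reporting errors in version 1.10.2: Version 1.9.5 does not have this problem. Thanks.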

We currently only have documentation on chaining mode with Cilium, but we need a guide that shows what capabilities we have and how to demonstrate them.

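# Expected Behavior The source IP of a web request seen inside the pod is the IP address of the external client that initiated the request. # Actual Behavior The source IP of a web request seen inside the pod is the node's IP address in the join subnet. # Steps to Reproduce the Problem 1. From a node outside the cluster, access the NodePort IP 2. Print remoteAddr inside the pod # Additional Info - Kubernetes version: **Output of `kubectl version`:** ```...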

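# Expected Behavior Resolve an intermittent problem # Actual Behavior # Steps to Reproduce the Problem 1. Repeatedly create the nat-gw-pod; creating and deleting a fip occasionally triggers the problem # When pinging the fip fails # When pinging the fip succeeds Comparing the two shows that the failing case is missing the net1 interface ttl...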

Currently, workload resources such as Deployments only have the ip_pool setting. We now have a scenario that needs MAC addresses to be allocated from a pool in the same way as ip_pool. Is there any solution for this? apiVersion: apps/v1 kind: Deployment metadata: namespace: ovn-test name: starter-backend labels: app: starter-backend spec: replicas: 2 selector: matchLabels: app: starter-backend template: metadata: labels: app: starter-backend annotations: ovn.kubernetes.io/ip_pool: 10.16.0.15,10.16.0.16,10.16.0.17 # how can a mac_pool be implemented? spec:...

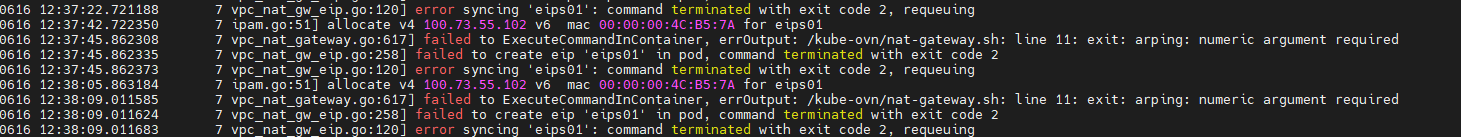

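# Expected Behavior # Actual Behavior # Steps to Reproduce the Problem Entering the container's namespace with nsenter and running the arping command succeeds, whereas entering the container directly and running the arping command fails. As a result, the EIP cannot be created, and ovn-controller reports the following error: # Additional Info - Kubernetes version: **Output of `kubectl version`:** ```...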

# Expected Behavior # Actual Behavior # Steps to Reproduce the Problem 1. 2. 3. # Additional Info - Kubernetes version: **Output of `kubectl version`:** ``` (paste your output here)...

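# Expected Behavior With a large number of Pods, memory usage is about 18G; it is unclear whether this is normal. After the Pods are deleted, the memory should be released. # Actual Behavior With a large number of Pods (10,000), kube-ovn-controller uses about 18G of memory, and the memory is not released after the Pods are deleted. # Steps to Reproduce the Problem 1. Use...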