Why does my trained LoRA only produce garbled images ("splash screen")?

Hello everyone, I am new to this. Why does my trained LoRA only produce garbled ("splash screen") images? I have trained many times with different image sets and always get this result. Could it be a CUDA version problem? I am using CUDA 12.1.

I'm not sure whether the CUDA version is the cause, but please check that the --v2 and --v_parameterization options are appropriate for the model you trained on.
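For reference, here is a minimal sketch of how those two flags map to base model families, as far as I understand the sd-scripts documentation: SD 1.x checkpoints take neither flag, SD 2.x base checkpoints take --v2 only, and the SD 2.x 768-v checkpoints take both. The function name `model_flags` and the family labels (`sd1`, `sd2-base`, `sd2-768`) are made up for illustration and are not part of sd-scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of sd-scripts): print the extra training
# flags that match a base model family.
model_flags() {
  case "$1" in
    sd1)      echo "" ;;                           # SD 1.x: neither flag
    sd2-base) echo "--v2" ;;                       # SD 2.x base models
    sd2-768)  echo "--v2 --v_parameterization" ;;  # SD 2.x 768-v models
    *)        echo "unknown model family: $1" >&2; return 1 ;;
  esac
}
```

For example, `model_flags sd2-768` prints both flags, while `model_flags sd1` prints nothing, so a command that trains on an SD 1.5 checkpoint should not pass either option.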

Thank you very much for your prompt reply. My running command is as follows, without specifying the --v2 and --v_parameterization options.

accelerate launch --num_cpu_threads_per_process=2 "train_network.py" --enable_bucket \
  --pretrained_model_name_or_path="runwayml/stable-diffusion-v1-5" --train_data_dir="${train_data_dir}" \
  --resolution=512,512 --output_dir="${output_dir}" --logging_dir="${logging_dir}" --network_alpha="128" \
  --save_model_as=safetensors --network_module=networks.lora --text_encoder_lr=5e-5 --unet_lr=0.0001 \
  --network_dim=128 --output_name="${output_name}" --lr_scheduler_num_cycles="1" --learning_rate="0.0001" \
  --lr_scheduler="constant" --train_batch_size="2" --max_train_steps="435" --save_every_n_epochs="1" \
  --mixed_precision="fp16" --save_precision="fp16" --seed="1234" --caption_extension=".txt" --cache_latents \
  --max_data_loader_n_workers="1" --clip_skip=2 --bucket_reso_steps=64 --xformers \
  --use_8bit_adam --bucket_no_upscale

Have you tried a different scheduler, such as cosine with restarts?

@TingTingin Thank you for your reply. I don't quite understand the meaning of lr_scheduler, but I will give it a try. Thank you!

Try cosine_with_restarts as your scheduler instead

@TingTingin Thanks for your reply! I trained two LoRAs, one with the cosine lr_scheduler and one with cosine_with_restarts, and both still produce garbled ("splash screen") images.

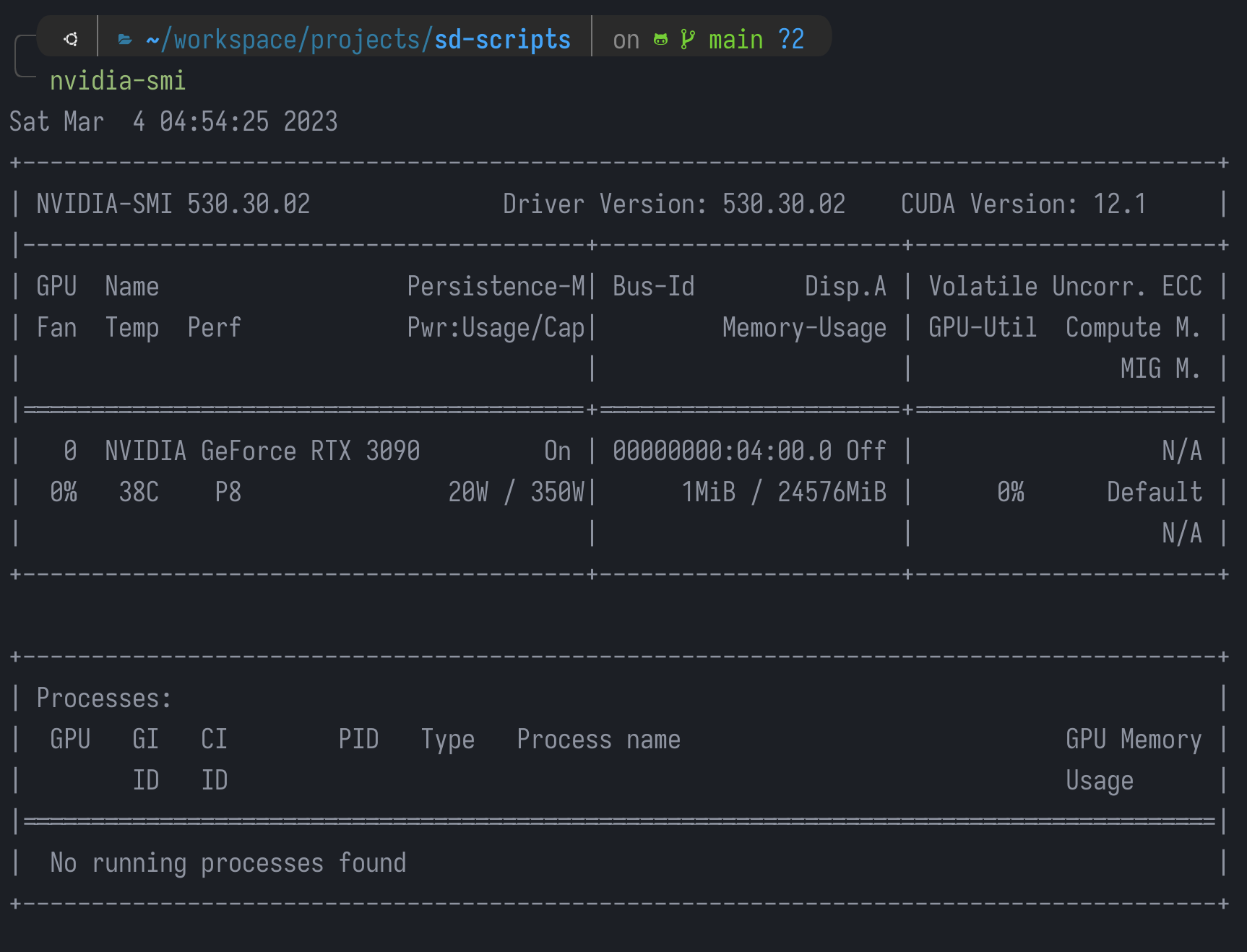

Which GPU do you have? Is it a 1660?

@TingTingin My GPU is a 3090.

Any update on this issue? I have the exact same problem.

What settings are you using, and which GPU?

I was using the wrong precision because I had kept the default accelerate settings (accelerate config default).

After setting mixed_precision to fp16, the output LoRA works.

!rm ~/.cache/huggingface/accelerate/default_config.yaml

!accelerate config default --mixed_precision fp16
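To confirm which precision the saved config actually holds, a rough sketch like the one below may help. It assumes the config lives at the path removed above and that the setting is stored on a single `mixed_precision` line. The function name `get_mixed_precision` is hypothetical and not part of accelerate.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of accelerate): print the mixed_precision
# value found in an Accelerate config file.
# Prints "missing" if the file does not exist and "unset" if the key is absent.
get_mixed_precision() {
  # Default path is the one used in this thread; pass another path to override.
  local cfg="${1:-$HOME/.cache/huggingface/accelerate/default_config.yaml}"
  if [ ! -f "$cfg" ]; then
    echo "missing"
    return 1
  fi
  local value
  # Take the first line mentioning the key, keep the text after the colon,
  # and strip spaces, quotes, and a trailing comma.
  value=$(grep -m1 'mixed_precision' "$cfg" | cut -d: -f2 | tr -d " \"',")
  echo "${value:-unset}"
}
```

Calling `get_mixed_precision` with no argument reads the default path. If it prints anything other than `fp16`, the config was probably not rewritten by the command above.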