Support Topology Aware Hint

What is changing? (Please include as many details as possible.)

The address that Knative registers in Revision's EndpointSlice does not include zone information. Can we include zone information to optimize traffic routing?

https://kubernetes.io/docs/concepts/services-networking/topology-aware-hints/#implementation-control-plane

How will this impact our users?

In what release will this take happen (to the best of your knowledge)?

Ex. v0.2-v0.3

Context

None

Additional info

AFAIK, we do not currently set EndpointSlice. They are generated by the k8s controller that mirrors the Endpoints.

@kahirokunn what would you need the info for? Do you have special use-case for that?

@ReToCode

AFAIK, we do not currently set EndpointSlice. They are generated by the k8s controller that mirrors the Endpoints.

What does this mean? I may have misunderstood how this part works. I am curious and would appreciate a little more explanation if possible.

what would you need the info for? Do you have special use-case for that?

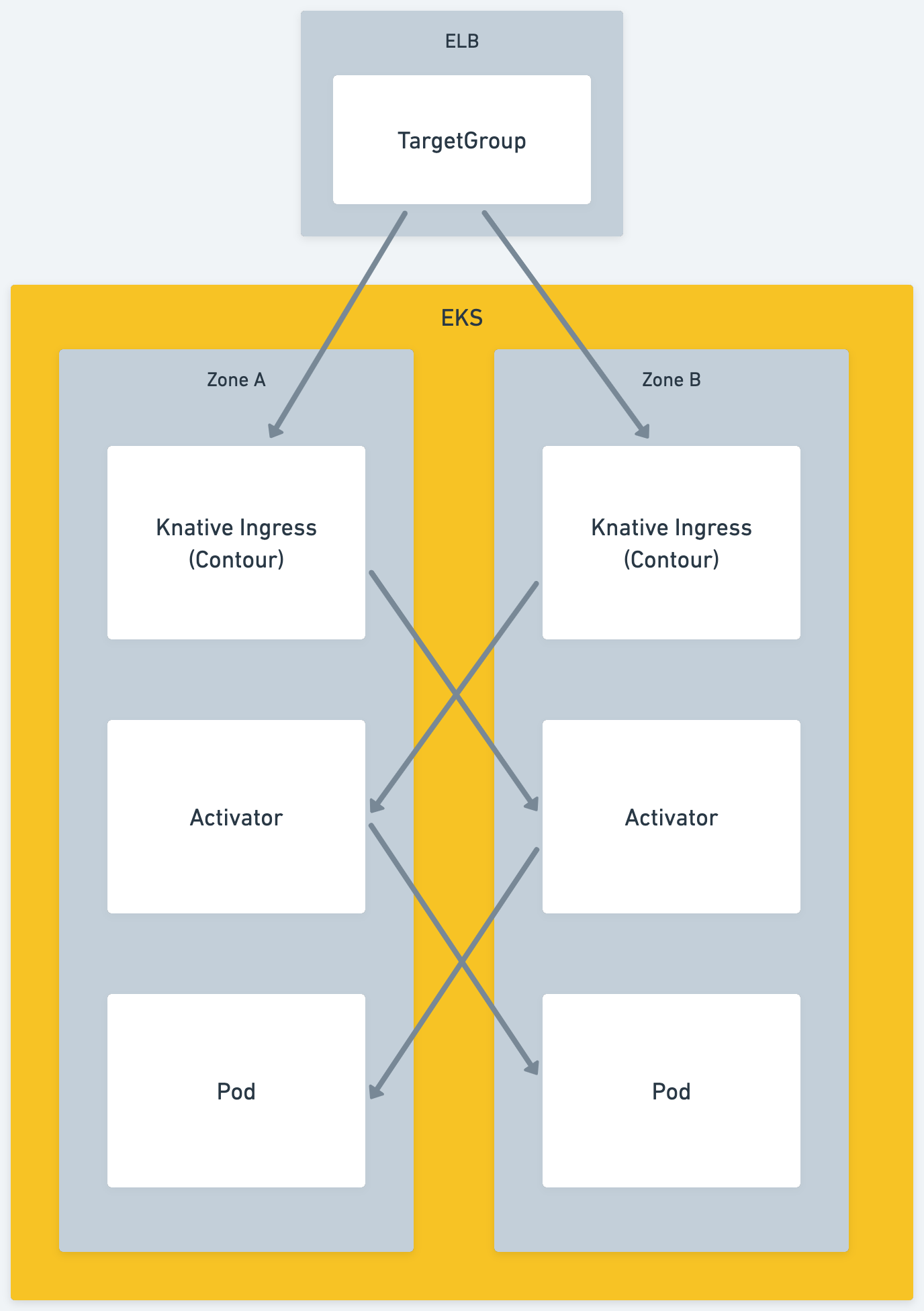

I am building a microservice in Knative on EKS. I am using 3 availability zones. I am using three availability zones, and traffic crosses the zones. When traffic crosses a zone, I have to pay by the number of bytes communicated between ingress and egress of the zone. Topology Aware Hint can solve the problem of East-West Traffic.

What does this mean?

Knative does not create the EndpointSlices (at least for now). Kubernetes does this automatically based on the Endpoints object to keep them in sync. So we (meaning Knative) do not know about the topology and could not add anything to the EndpointSlices.

From your use-case I do not understand how having that would help you. Couldn't you label your nodes with their availability zones and then use this: https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/ to schedule certain services to a specific zone?

@ReToCode This is the motivation behind the topology aware hint. https://github.com/kubernetes/enhancements/blob/master/keps/sig-network/2433-topology-aware-hints/README.md#motivation

Knative Serving Controller actually generates the following Endpoints.

apiVersion: v1

kind: Endpoints

metadata:

annotations:

argocd.argoproj.io/tracking-id: sample-service:serving.knative.dev/Service:sample-service/sample-service

service.kubernetes.io/topology-aware-hints: auto

serving.knative.dev/creator: system:serviceaccount:argocd:argocd-application-controller

serving.knative.dev/lastModifier: system:serviceaccount:argocd:argocd-application-controller

creationTimestamp: "2023-01-31T03:15:33Z"

labels:

argocd.argoproj.io/instance: sample-service

serving.knative.dev/route: sample-service

serving.knative.dev/service: sample-service

name: sample-service

namespace: sample-service

ownerReferences:

- apiVersion: serving.knative.dev/v1

blockOwnerDeletion: true

controller: true

kind: Route

name: sample-service

uid: ba45fc94-dbab-4b69-b89a-1a0cb7ecda41

resourceVersion: "18448192"

uid: 80501913-352f-4f7d-bb02-d993045b47bb

subsets:

- addresses:

- ip: 172.20.160.124

ports:

- name: http2

port: 80

protocol: TCP

I will give you one example.

Contour-external-envoy has Topology Aware Hints for Endpoint Slices.

$ kubectl get endpointslices -n contour-external contour-external-envoy-6rkdj -o yaml | yq .endpoints[].hints

forZones:

- name: ap-northeast-1d

forZones:

- name: ap-northeast-1c

forZones:

- name: ap-northeast-1a

Endpoints in contour-external-envoy have nodeName and targetRef in the addresses.

apiVersion: v1

kind: Endpoints

metadata:

annotations:

endpoints.kubernetes.io/last-change-trigger-time: "2023-02-23T00:04:48Z"

creationTimestamp: "2023-01-10T02:05:12Z"

labels:

app.kubernetes.io/component: envoy

app.kubernetes.io/instance: contour-external

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: contour

argocd.argoproj.io/instance: contour-external

helm.sh/chart: contour-10.1.3

networking.knative.dev/ingress-provider: contour

name: contour-external-envoy

namespace: contour-external

resourceVersion: "38885079"

uid: 6c86fea3-9d45-4fb5-895c-c80113d21618

subsets:

- addresses:

- ip: 10.0.102.125

nodeName: ip-10-0-108-135.ap-northeast-1.compute.internal

targetRef:

kind: Pod

name: contour-external-envoy-8666f67765-cvnr6

namespace: contour-external

uid: be4267e6-8e63-4672-afe6-86a2fd18fd55

- ip: 10.0.145.30

nodeName: ip-10-0-155-68.ap-northeast-1.compute.internal

targetRef:

kind: Pod

name: contour-external-envoy-8666f67765-7xgsc

namespace: contour-external

uid: 86783d60-774f-4dee-acbe-af9a6bb98aa2

- ip: 10.0.52.106

nodeName: ip-10-0-36-223.ap-northeast-1.compute.internal

targetRef:

kind: Pod

name: contour-external-envoy-8666f67765-tmhjf

namespace: contour-external

uid: fc36ba6e-e448-49f5-9f61-42762c9a6896

ports:

- name: https

port: 8443

protocol: TCP

- name: http

port: 8080

protocol: TCP

Perhaps this lack of information is the reason why k8s controller cannot add Topology Aware Hints.

Is it possible to add nodeName and targetRef to subsets[].addresses[] of Endpoints generated by Knative Serving?

Or is there a reason not to add the nodeName and targetRef that everyone else is adding?

I found the code. Is it one of these?

https://github.com/knative/serving/blob/1d1cd7946090e3df2765be6b30865bf86434f31d/pkg/autoscaler/statforwarder/leases.go#L236

https://github.com/knative/serving/blob/45f7c054f69448695d4e9bc11f5a451b3c9f1eff/pkg/reconciler/route/resources/service.go#L127

@ReToCode I don't know the details of how knative serving works, but is it something like this? https://github.com/knative/serving/pull/13727

Hey @kahirokunn so our Revision's K8s service endpoints is manually managed by our ServerlessService controller here

https://github.com/knative/serving/blob/main/pkg/reconciler/serverlessservice/serverlessservice.go

But I believe even settings hints won't help as the activator performs it's own load balancing and it's not hint aware. A general concern I have is how some things could become unbalanced. ie. if the ingress picks an activator that's in the same AZ - it might cause an imbalance in the spread of requests.

Related to your PR #13727 it won't have the desired effect you're looking for - the statsforwarder is used to forward stats from one autoscaler to another when they're running in a high availability setup. The target autoscaler is known because it is the lease holder for a specific revision.

I don't have a great answer to your problem. You could try and run distinct clusters in the same AZ (with Knative deployed to each one) and perform some global routing over that.

Otherwise making some Knative components topology aware would require a lot of thought about how it works, what knobs operators and users can control etc. This would warrant going through the feature track process - https://github.com/knative/community/blob/main/mechanics/FEATURE-TRACKS.md

But I believe even settings hints won't help as the activator performs it's own load balancing and it's not hint aware.

Knative will initially load-balance the Activator, but as the number of pods grows, it will no longer go through the Activator. Therefore, it is still effective to set up hints.

However, in the case of a pod that handles images and video, network fees are very expensive, so we don't want to cross the zone.

So, I think it is good to separate the implementation of hints in the Service and the load balancing by the Activator as per the Hints.

ie. if the ingress picks an activator that's in the same AZ - it might cause an imbalance in the spread of requests.

This is what we would rather see happen. We would rather that this be the case, since we can just disperse them on the path to Knative Ingress. If the path from Ingress to Activator is not also concentrated in the same AZ, the cross zone fee may be added.

Now, the maximum number of times you cross over is two, so you will incur three times the network charges as if you did not cross over. This is critical for services that have a large number of users or that exchange large amounts of data.

If the path from Ingress to Activator is not also concentrated in the same AZ, the charge for crossing zones may be added. For example, I distribute the ELB -> Knative Ingress route by round-robin, but I think it is enough if it is evenly distributed there.

However, there are times when you may want to focus more on evenness than network rates, so we think it would be best if feature flags could be used to switch between functions.

I have written a Knative Topology Aware Hint Feature Proposal. However, I did not have the right to save it on the team drive. 😢 https://docs.google.com/document/d/1ecA7VwsRUqfy10Z0QyVM55LHmDxbJ2Y2REJ3gDgAkAY/edit?usp=sharing

@kahirokunn I'm using grafana rollout operator to group rollouts for multiple AZ's. Created pretty much the same feature request #14045 :\

Knative could use similar rollout strategy, or just integrate with that grafana rollout operator, as is.

This issue is stale because it has been open for 90 days with no

activity. It will automatically close after 30 more days of

inactivity. Reopen the issue with /reopen. Mark the issue as

fresh by adding the comment /remove-lifecycle stale.

keep

I think it is best to have compatibility in the endpoints that Knative generates, not in workarounds.

This issue is stale because it has been open for 90 days with no

activity. It will automatically close after 30 more days of

inactivity. Reopen the issue with /reopen. Mark the issue as

fresh by adding the comment /remove-lifecycle stale.

Keep