Multi-tenancy support

Problem

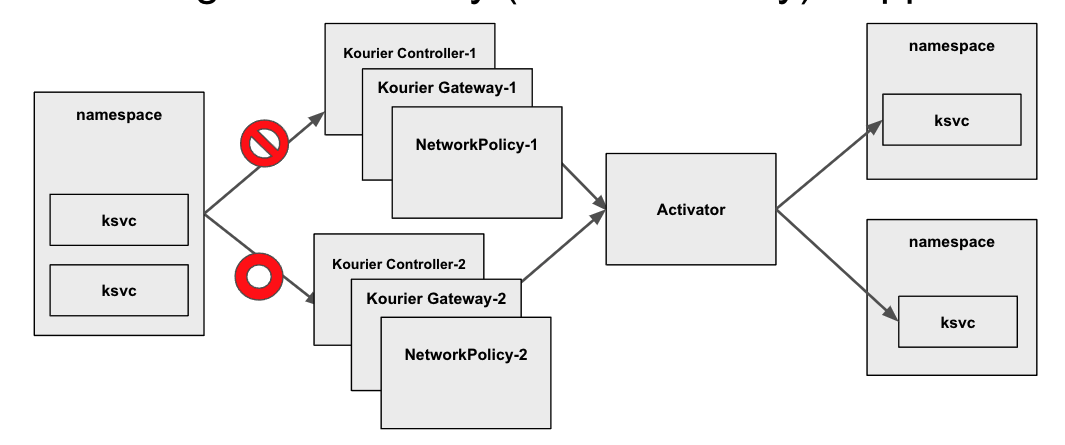

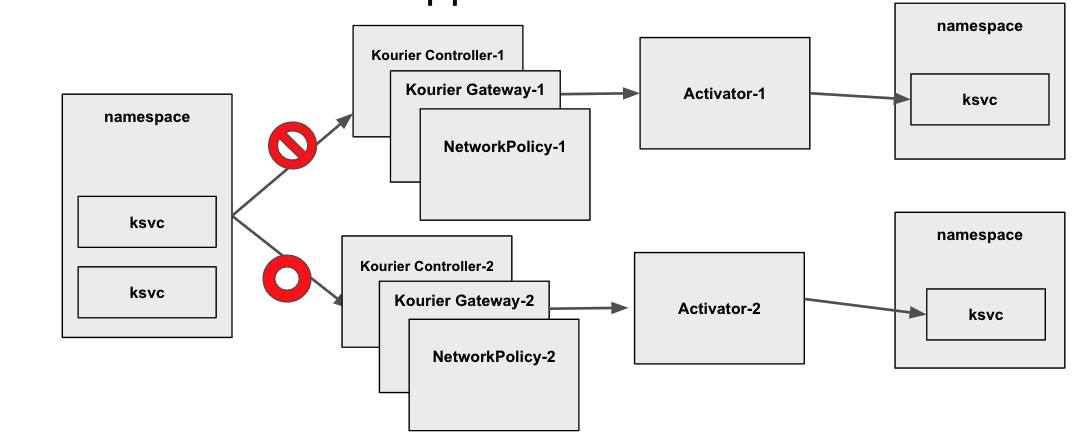

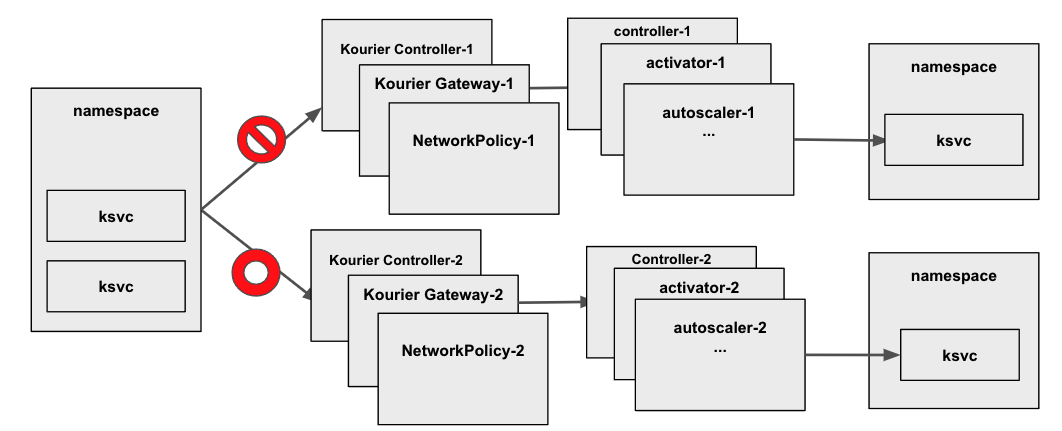

Currently, the Knative Serving data-plane components (ingress gateway, activator, and autoscaler) are cluster singletons shared among all users of a cluster. One implication of this architectural choice is that every KService can reach every other KService over the cluster network, regardless of namespace or any additional isolation set up via NetworkPolicy resources.

Describe the feature

True multi-tenancy would require complete separation of all platform resources, which is not entirely possible on Kubernetes because some resources, such as CRDs, are cluster-scoped. However, some extensions are required to separate the network traffic of different tenants (defined as isolated user groups of a Kubernetes cluster). The shared components can be isolated at several levels that differ in complexity and resource usage. This presentation gives an overview of the possible implementations that we (@nak3, @rhuss) can imagine.

In summary, it's about these three isolation levels:

1. Multi Ingress Gateway (NetworkPolicy) Support
2. Multi Activator Support
3. Multiple Knative Control Planes

Let's discuss which level should be approached. I'd propose to go for Level 1 as this requires the least amount of changes and the least amount of resource overhead. The question will be how to map a tenant to K8s concepts, i.e., namespaces. Having one tenant per namespace seems too fine-grained and not very flexible. Instead, an additional grouping concept is suggested: either a new CRD KnativeMemberRoll containing the list of namespaces associated with a specific tenant, or an implicit grouping via namespace labels.
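To make the implicit grouping via namespace labels more concrete, here is a minimal sketch in Python. It only illustrates the mapping logic. The label key `example.com/tenant` and both function names are hypothetical and do not correspond to any existing Knative or Kubernetes API.

```python
from collections import defaultdict

# Hypothetical label key, chosen only for this illustration.
TENANT_LABEL = "example.com/tenant"


def group_namespaces_by_tenant(namespace_labels):
    """Map each tenant to the set of namespaces labelled with it.

    namespace_labels: dict of namespace name -> dict of labels.
    Namespaces without the tenant label belong to no tenant.
    """
    tenants = defaultdict(set)
    for namespace, labels in namespace_labels.items():
        tenant = labels.get(TENANT_LABEL)
        if tenant is not None:
            tenants[tenant].add(namespace)
    return dict(tenants)


def same_tenant(namespace_labels, ns_a, ns_b):
    """Return True only if both namespaces carry the same tenant label."""
    tenant_a = namespace_labels.get(ns_a, {}).get(TENANT_LABEL)
    tenant_b = namespace_labels.get(ns_b, {}).get(TENANT_LABEL)
    return tenant_a is not None and tenant_a == tenant_b


# Example input: two namespaces for one tenant, one for another,
# and one namespace that has not been assigned to any tenant.
namespaces = {
    "team-a-dev": {TENANT_LABEL: "team-a"},
    "team-a-prod": {TENANT_LABEL: "team-a"},
    "team-b": {TENANT_LABEL: "team-b"},
    "unlabelled": {},
}
```

With this input, `same_tenant(namespaces, "team-a-dev", "team-a-prod")` is true while `same_tenant(namespaces, "team-a-dev", "team-b")` is false. An explicit CRD such as the suggested KnativeMemberRoll would carry the same tenant-to-namespaces mapping, just stored in one resource instead of spread across namespace labels.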

Related issue: https://github.com/knative/serving/issues/12466

Another option we're exploring is this:

- we accepted the fact that the activator should always be in the path - for a lot of reasons beyond this issue

- add logic to the activator to block cross-NS traffic by having the activator watch all pods so that it can correlate "client IP"->NS. The activator can then quickly look up the client IP to see whether its NS is the same as the destination's, and block the request if it is not.

One line of code change (+ a new file) for the PoC just to prove that it can work. If you're interested, here's the experimental branch: https://github.com/knative/serving/compare/main...duglin:nsBlocker?expand=1
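The lookup described in this comment can be sketched roughly as follows. This is not the code from the experimental branch (which lives in the Go activator) but a small Python illustration of the idea. The class name, method names, the `exempt_namespaces` parameter, and the choice to let unknown client IPs through are all assumptions made for the sketch.

```python
class NamespaceBlocker:
    """Tracks pod IP -> namespace and decides whether a request may pass."""

    def __init__(self, exempt_namespaces=()):
        # Assumption: some source namespaces (e.g. where the ingress
        # gateway runs) must be allowed to reach every destination.
        self._exempt = set(exempt_namespaces)
        self._ip_to_namespace = {}

    def on_pod_added(self, ip, namespace):
        """Called by a (hypothetical) pod watcher when a pod gets an IP."""
        self._ip_to_namespace[ip] = namespace

    def on_pod_deleted(self, ip):
        """Called by the watcher when a pod goes away; frees its IP."""
        self._ip_to_namespace.pop(ip, None)

    def allow(self, client_ip, destination_namespace):
        """Return True if the request should be forwarded."""
        source_namespace = self._ip_to_namespace.get(client_ip)
        if source_namespace is None:
            # Assumption: IPs that belong to no known pod are not blocked.
            return True
        if source_namespace in self._exempt:
            return True
        return source_namespace == destination_namespace


blocker = NamespaceBlocker(exempt_namespaces={"knative-serving"})
blocker.on_pod_added("10.0.0.1", "ns-a")
blocker.on_pod_added("10.0.0.2", "ns-b")
print(blocker.allow("10.0.0.1", "ns-a"))
print(blocker.allow("10.0.0.2", "ns-a"))
```

The first call is a same-namespace request and is allowed; the second crosses namespaces and is blocked. A real implementation would also have to handle pod IP reuse and the delay between a pod starting and the watcher seeing it.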

> I'd propose to go for Level 1 as this requires the least amount of changes and the least amount of resource overhead.

That makes sense. As a next step, we should at some point consider full isolation, since some people might not want shared components, for QoS and other reasons. As for the first option: although the activator can technically do the request filtering, it will start behaving like Envoy. I think that adds another concern to that component, which adds more complexity, and the filtering comes a bit late in the call sequence, in my opinion. The sooner you cut off a request the better, because it then will not consume resources needed by other requests, especially if the activator is always on the path. Also, from an ops perspective, it would be much cleaner to apply policies per ingress for different tenants instead of setting up all configuration on the activator side.

> Having one tenant per namespace seems too fine-grained and not very flexible. Instead, an additional grouping concept is suggested: either a new CRD KnativeMemberRoll containing the list of namespaces associated with a specific tenant, or an implicit grouping via namespace labels.

Maybe we could consider the K8s multi-tenancy models, e.g. namespaces as a service, in which a group of namespaces (one or more) is mapped to a tenant. There are also interesting K8s projects we might want to consider, such as Hierarchical Namespaces.

This issue is stale because it has been open for 90 days with no activity. It will automatically close after 30 more days of inactivity. Reopen the issue with `/reopen`. Mark the issue as fresh by adding the comment `/remove-lifecycle stale`.

/remove-lifecycle stale

👋 I ran into the issue described in https://github.com/knative/serving/issues/12466 and followed it here. Is there an ETA for this? Thanks!

Hi @owenthereal, the community is working on this. Work is being done at the networking level (https://github.com/knative-sandbox/net-kourier/pull/852), and we will soon have a tracking issue for the rest of the work, but there is no ETA as far as I know. /cc @lionelvillard

I prefer the Multi Activator Support approach: if the activator is shared, a CVE in it could affect multiple tenants.

The last update on this issue mentioned work being done in Kourier to support Level 1. Are there parallel efforts in net-istio to support multi-tenancy?