RACNN-pytorch


Paper-level accuracy?

Hi @klrc ,

First of all, great repo!! I have been trying to replicate the results of this paper, but so far I am stuck at 68% top-1 accuracy. I saw you got 69% top-1 accuracy at 23 epochs. Did you get a chance to complete the training and get better results?

Please let me know, thanks!

Hi @pshroff04 ,

Thank you for your attention!

I trained for 30 epochs and then stopped, with little improvement in the results:

| Branch | Top-1 | Top-5 |
|---|---|---|
| b0 | 79.82673% | 95.29703% |
| b1 | 70.48267% | 91.39851% |
| b2 | 69.86386% | 91.33663% |

I will update README.md. According to the accuracy curve, I think it could be trained for about 10 more epochs, but there is a strange issue with the model:

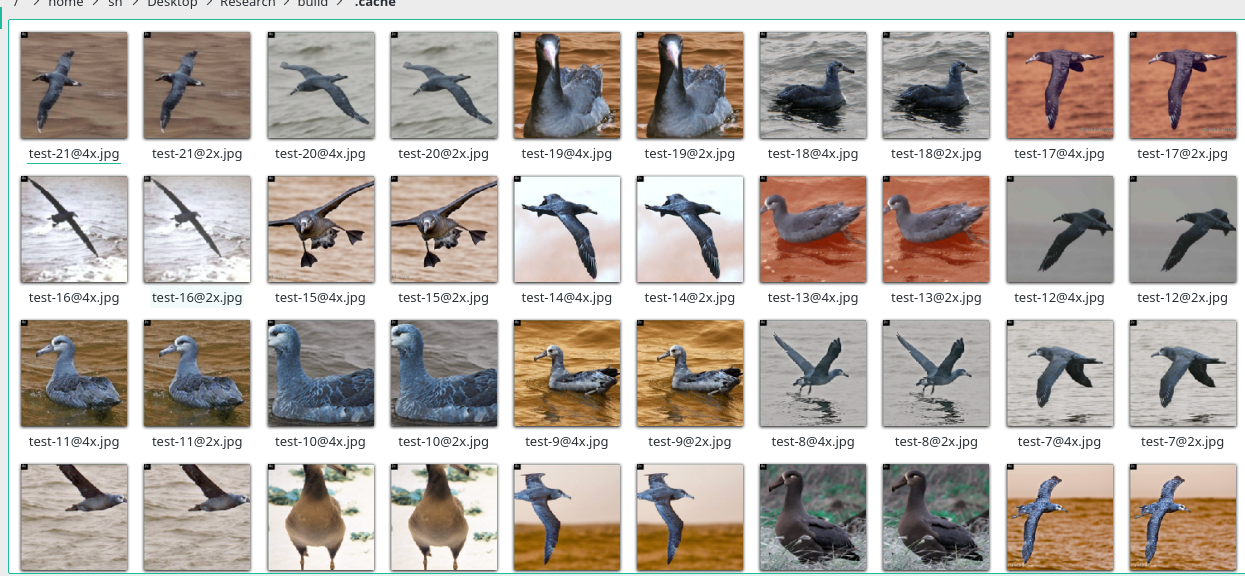

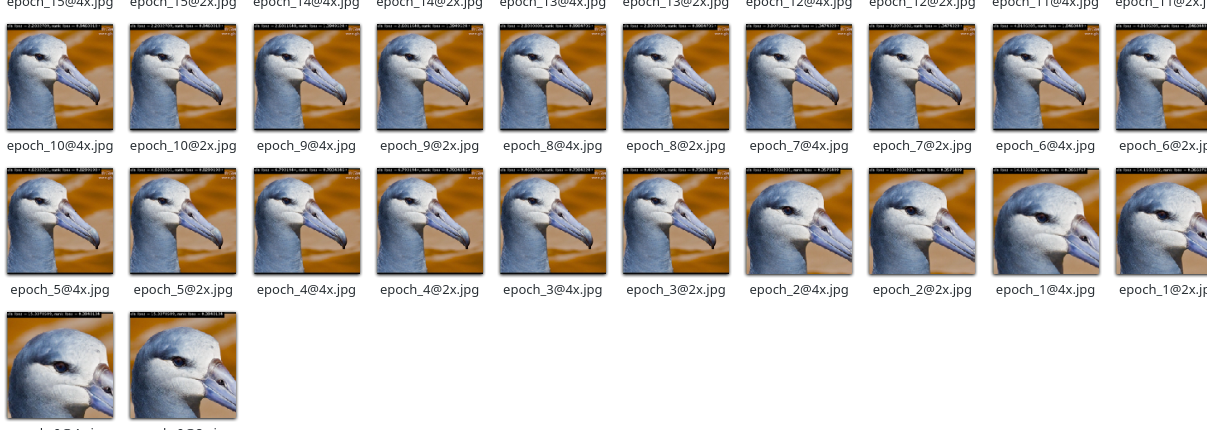

According to the output images, during the final training phase the two attention crops tend to cover the full image, which is not consistent with the original intention of the paper. Do you have any idea why?

I'll share with you if I get better results.

Have you checked other examples? Is it consistent across all the test images?


Here it is:

It just loses the zoom function within the first 10 epochs... I'll check my loss function.


There may be a problem with the training strategy: I just train each part for 1 epoch, alternately. Maybe each phase needs to be trained to convergence?

I haven't pre-trained my backbone on the CUB dataset. Do you think that can bump up the accuracy?

Actually, I trained with 5 epochs per alternation. I found a slight increase in accuracy in the initial epochs, but in the long run it gives almost the same accuracy. But you can give it a try.
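Alternating training, as described in the RA-CNN paper, switches between two phases: optimizing the classification sub-networks with the APN fixed, then optimizing the APN with the classifiers fixed. A minimal sketch of the schedule being compared here (1 vs 5 epochs per phase); `alternating_schedule`, the phase labels, and `epochs_per_phase` are hypothetical names, not the repo's:

```python
def alternating_schedule(total_epochs, epochs_per_phase, start="cls"):
    """Yield (epoch, phase) pairs, switching phase every epochs_per_phase epochs.

    Hypothetical phase labels:
      "cls" = train the classification sub-networks with the APN frozen
      "apn" = train the APN with the classifiers frozen
    """
    phases = ("cls", "apn") if start == "cls" else ("apn", "cls")
    for epoch in range(total_epochs):
        # integer division selects which block of epochs we are in
        yield epoch, phases[(epoch // epochs_per_phase) % 2]


if __name__ == "__main__":
    # 1 epoch per phase vs 5 epochs per phase
    print([p for _, p in alternating_schedule(4, 1)])
    print([p for _, p in alternating_schedule(10, 5)])
```

Longer phases give each sub-problem more time to settle before the other one changes its inputs, which is one reason to try a larger `epochs_per_phase`.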


I pre-trained the backbone on CUB200. Could you print the tx, ty, tl (attention) values of your final model's output? I wonder what they should look like. Thank you!

Sure! These are my attentions (they seem pretty erratic to me). Let me know your views on this.

```
Print attentions Coors(tx,ty,tl): (0.297393798828125, 0.297393798828125, 447.7026062011719)
Print attentions Coors(tx,ty,tl): (0.300567626953125, 0.300567626953125, 447.6994323730469)
Print attentions Coors(tx,ty,tl): (2.84716796875, 2.84716796875, 445.15283203125)
Print attentions Coors(tx,ty,tl): (2.146453857421875, 2.146453857421875, 445.8535461425781)
Print attentions Coors(tx,ty,tl): (1.6961517333984375, 1.6961517333984375, 222.30384826660156)
Print attentions Coors(tx,ty,tl): (2.839813232421875, 2.839813232421875, 221.16018676757812)
Print attentions Coors(tx,ty,tl): (8.19921875, 8.19921875, 215.80078125)
Print attentions Coors(tx,ty,tl): (21.786834716796875, 21.786834716796875, 202.21316528320312)
Print attentions Coors(tx,ty,tl): (0.67449951171875, 0.67449951171875, 447.32550048828125)
Print attentions Coors(tx,ty,tl): (70.68051147460938, 70.68051147460938, 377.3194885253906)
Print attentions Coors(tx,ty,tl): (1.244140625, 1.244140625, 446.755859375)
Print attentions Coors(tx,ty,tl): (0.16094970703125, 0.16094970703125, 447.83905029296875)
Print attentions Coors(tx,ty,tl): (1.8235015869140625, 1.8235015869140625, 222.17649841308594)
Print attentions Coors(tx,ty,tl): (26.552581787109375, 26.552581787109375, 197.44741821289062)
Print attentions Coors(tx,ty,tl): (9.3990478515625, 9.3990478515625, 214.6009521484375)
Print attentions Coors(tx,ty,tl): (1.070159912109375, 1.070159912109375, 222.92984008789062)
Print attentions Coors(tx,ty,tl): (192.3031005859375, 295.9336853027344, 149.33333333333334)
Print attentions Coors(tx,ty,tl): (12.35284423828125, 12.35284423828125, 435.64715576171875)
Print attentions Coors(tx,ty,tl): (0.216766357421875, 0.216766357421875, 447.7832336425781)
Print attentions Coors(tx,ty,tl): (3.62994384765625, 3.62994384765625, 444.37005615234375)
Print attentions Coors(tx,ty,tl): (20.281951904296875, 20.281951904296875, 203.71804809570312)
Print attentions Coors(tx,ty,tl): (20.678756713867188, 20.678756713867188, 203.3212432861328)
```

Also, I am using the same dataloader, and I found an issue with it at line 83 of CUB_loader.py:

`img = cv2.imread(self._imgpath[index])`

cv2 loads the image in BGR format, but the transform Compose includes `ToPILImage()`, which assumes a 3-channel ndarray is in RGB (see the mode assumptions in the `ToPILImage` documentation). Please correct me if I am wrong.
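A minimal sketch of one possible fix, assuming the loader keeps reading with `cv2.imread` and then passes the array to `ToPILImage()`: reverse the channel axis right after loading. `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)` does the same thing; the sketch uses plain NumPy only to avoid an OpenCV dependency, and `bgr_to_rgb` is a hypothetical helper name:

```python
import numpy as np


def bgr_to_rgb(img: np.ndarray) -> np.ndarray:
    """Reverse the channel order of an HxWx3 image (BGR -> RGB).

    Equivalent to cv2.cvtColor(img, cv2.COLOR_BGR2RGB). The .copy() makes
    the result contiguous, since torch.from_numpy rejects negative strides.
    """
    if img.ndim != 3 or img.shape[2] != 3:
        raise ValueError("expected an HxWx3 image")
    return img[:, :, ::-1].copy()


# Hypothetical use inside the dataset's __getitem__:
#   img = cv2.imread(self._imgpath[index])  # BGR, as in CUB_loader.py
#   img = bgr_to_rgb(img)                   # RGB, as ToPILImage() assumes

if __name__ == "__main__":
    # one pixel that is pure blue in BGR order (B=255, G=0, R=0)
    pixel = np.array([[[255, 0, 0]]], dtype=np.uint8)
    print(bgr_to_rgb(pixel)[0, 0].tolist())  # [0, 0, 255]
```

Converting once at load time keeps every later transform, including mean/std normalization, working on the channel order it expects.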


That is it!

If your tl is the raw output of the APN and you use the same AttentionCropFunction, these values point to an issue.

According to the paper and the AttentionCropFunction implementation, tl is half the side length of the attention box, which means any tl beyond 224 (0.5 * 448) is inappropriate.

(In my implementation that means tl > 0.5, since my values are not multiplied by 448, but otherwise the output is basically the same as yours.)
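Since tl is the half side length, the box corners are (tx - tl, ty - tl) and (tx + tl, ty + tl), so a box can only fit inside a 448 x 448 image when tl <= 224. A quick sketch for checking printed attentions, assuming pixel units and a 448-pixel input (`attention_box` is a hypothetical helper, not part of the repo):

```python
def attention_box(tx, ty, tl, size=448):
    """Return the (x0, y0, x1, y1) corners of a square attention box
    centred at (tx, ty) with half side length tl, and whether the box
    lies entirely inside a size x size image."""
    x0, x1 = tx - tl, tx + tl
    y0, y1 = ty - tl, ty + tl
    fits = x0 >= 0 and y0 >= 0 and x1 <= size and y1 <= size
    return (x0, y0, x1, y1), fits


if __name__ == "__main__":
    # two of the attentions printed earlier in this thread
    print(attention_box(0.297393798828125, 0.297393798828125, 447.7026062011719))
    print(attention_box(192.3031005859375, 295.9336853027344, 149.33333333333334))
```

The first printed attention spans x from about -447.4 to 448.0, far outside the image, while (192.30, 295.93, 149.33) fits.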

Here is my output (scale 1 and scale 2 on each line):

```
tensor([[0.9949, 0.0620, 0.7058]]) tensor([[0.9914, 0.9090, 0.9104]])
tensor([[0.9514, 0.2913, 0.4905]]) tensor([[0.3651, 0.9811, 0.9993]])
tensor([[1.4516e-01, 6.3023e-04, 9.9867e-01]]) tensor([[7.1983e-01, 3.1705e-04, 9.9996e-01]])
tensor([[0.8614, 0.6607, 0.5352]]) tensor([[0.7248, 1.0000, 0.9999]])
tensor([[0.1537, 0.0438, 0.9953]]) tensor([[0.4155, 0.0156, 0.9893]])
tensor([[0.2311, 0.9943, 0.8963]]) tensor([[0.0094, 0.9998, 0.9997]])
tensor([[0.0814, 0.0198, 0.6079]]) tensor([[7.5714e-01, 6.0488e-04, 9.9275e-01]])
tensor([[0.0559, 0.0806, 0.9969]]) tensor([[8.8674e-04, 9.9418e-01, 9.9997e-01]])
```

Then I found the problem: tx, ty and tl all lie in [0, 1], because they are the output of Sigmoid() in the APN, but I think tl should lie in [0, 0.5]. The autograd part of AttentionCropFunction (not the cropping itself) doesn't check whether tl goes out of bounds; it simply compares positions according to formula (5) in the paper, which can produce meaningless values. I think this obliterated the function of the APN. Later I would like to compare against the author's output if I have a chance (sorry, I don't have enough time right now).
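For context, RA-CNN makes the crop differentiable by multiplying the image with a soft boxcar mask built from the logistic function h(x) = 1 / (1 + exp(-kx)): M = [h(x - tx + tl) - h(x - tx - tl)] * [h(y - ty + tl) - h(y - ty - tl)]. The 1-D sketch below assumes pixel coordinates, a 448-pixel side and k = 10 purely for illustration (the repo's values may differ). It shows that once tl exceeds 224 the mask is close to 1 everywhere, so the "attention" degenerates to the full image:

```python
import math


def h(x, k=10.0):
    """Logistic step h(x) = 1 / (1 + exp(-k x)), written to avoid overflow."""
    z = k * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mask_1d(t, tl, size=448, k=10.0):
    """Soft 1-D boxcar: ~1 inside [t - tl, t + tl], ~0 outside.
    The 2-D mask is the product of an x factor and a y factor."""
    return [h(x - (t - tl), k) - h(x - (t + tl), k) for x in range(size)]


def coverage(mask):
    """Fraction of pixels where the mask is above 0.5."""
    return sum(m > 0.5 for m in mask) / len(mask)


if __name__ == "__main__":
    print(coverage(mask_1d(224.0, 100.0)))  # a real zoom: under half the width
    # tl > 224, taken from the printed attentions above: covers everything
    print(coverage(mask_1d(0.297393798828125, 447.7026062011719)))
```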

I'll try fixing this and see what happens. (I'll also check whether the margin in L_{rank} needs to be adjusted, since I'm using MobileNet.)
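For reference, the RA-CNN ranking loss between adjacent scales s and s+1 is L_rank = max(0, p_t^(s) - p_t^(s+1) + margin), where p_t is the predicted probability of the true class. A minimal sketch; the margin of 0.05 is only an illustrative default, since the right value for a MobileNet backbone is exactly what is in question here:

```python
def rank_loss(p_coarse, p_fine, margin=0.05):
    """Pairwise ranking loss between adjacent scales s and s+1.

    p_coarse, p_fine: predicted probability of the true class at scale s
    and s+1. The loss is zero once p_fine exceeds p_coarse by at least
    `margin`, so the finer (zoomed) scale is pushed to be more confident.
    """
    return max(0.0, p_coarse - p_fine + margin)


def total_rank_loss(p_true_per_scale, margin=0.05):
    """Sum the pairwise loss over consecutive scales (3 scales -> 2 pairs)."""
    pairs = zip(p_true_per_scale, p_true_per_scale[1:])
    return sum(rank_loss(a, b, margin) for a, b in pairs)


if __name__ == "__main__":
    print(total_rank_loss([0.5, 0.5, 0.5]))  # no improvement across scales
    print(total_rank_loss([0.5, 0.6, 0.7]))  # each scale beats the last by > margin
```

A larger margin demands a bigger confidence gain from each zoom, which strengthens the APN's training signal relative to the classification losses.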

(Sorry for my broken English, it's so hard for me.)

Edit: good news, it works well now after only 5 epochs!

```
[2019-12-31 15:12:15] :: Testing on test set ...
[2019-12-31 15:12:31] Accuracy clsf-0@top-1 (201/725) = 62.74752%
[2019-12-31 15:12:31] Accuracy clsf-0@top-5 (201/725) = 88.73762%
[2019-12-31 15:12:31] Accuracy clsf-1@top-1 (201/725) = 67.57426%
[2019-12-31 15:12:31] Accuracy clsf-1@top-5 (201/725) = 89.35644%
[2019-12-31 15:12:31] Accuracy clsf-2@top-1 (201/725) = 54.33168%
[2019-12-31 15:12:31] Accuracy clsf-2@top-5 (201/725) = 83.84901%
```


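Regarding the BGR issue in CUB_loader.py: yes, you are right! Although the network can learn from RGB or BGR input in the same way, the mean and std normalization would be applied to the wrong channels. I'll check this further.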


Good news!!

After adding these lines in model.py to rescale tl, I've reached 74% accuracy with only 7 epochs, and it's still increasing:

```
[2019-12-31 15:25:37] :: Testing on test set ...
[2019-12-31 15:25:53] Accuracy clsf-0@top-1 (201/725) = 72.46287%
[2019-12-31 15:25:53] Accuracy clsf-0@top-5 (201/725) = 92.38861%
[2019-12-31 15:25:53] Accuracy clsf-1@top-1 (201/725) = 74.25743%
[2019-12-31 15:25:53] Accuracy clsf-1@top-5 (201/725) = 91.76980%
[2019-12-31 15:25:53] Accuracy clsf-2@top-1 (201/725) = 71.22525%
[2019-12-31 15:25:53] Accuracy clsf-2@top-5 (201/725) = 90.90347%
```

So far I have changed the CUB200 pretraining, AttentionCropFunction, and the tl rescaling in forward(), and it seems to work fine now. You can compare these changes with your code; I hope this helps!
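The exact lines added to model.py are not preserved in this thread. One possible way to rescale the APN's sigmoid outputs, sketched here as a hypothetical helper rather than the repo's code, maps tl from [0, 1] to [0, 0.5] and then squeezes the centre into [tl, 1 - tl], so the box always stays inside the normalized image. It uses only products and sums, so the same arithmetic on tensors inside forward() stays differentiable:

```python
def rescale_attention(tx, ty, tl, max_half=0.5):
    """Map raw sigmoid outputs in [0, 1] to a box that fits in [0, 1]^2.

    Hypothetical rescaling (assumes max_half <= 0.5):
      tl -> tl * max_half          half side length in [0, max_half]
      tx -> tl + tx * (1 - 2*tl)   centre in [tl, 1 - tl]; same for ty
    Multiply by the input size (e.g. 448) to get pixel units.
    """
    tl = tl * max_half
    tx = tl + tx * (1.0 - 2.0 * tl)
    ty = tl + ty * (1.0 - 2.0 * tl)
    return tx, ty, tl


if __name__ == "__main__":
    # first scale-1 output printed earlier in this thread
    print(rescale_attention(0.9949, 0.0620, 0.7058))
```

For example, (0.9949, 0.0620, 0.7058) becomes roughly (0.646, 0.371, 0.353), a box that now stays within the image.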

Hey, did you rescale the raw APN outputs even during APN pretraining?

Hey @klrc, after using the pretrained backbone, I observe that the sigmoid activations at the end of the APN layers saturate very quickly:

```
_atten1: tensor([6.0899e-07, 1.0000e+00, 9.9899e-01], device='cuda:0', grad_fn=<SelectBackward>)
_atten1: tensor([0.0000, 0.6112, 0.9876], device='cuda:0', grad_fn=<SelectBackward>)
_atten1: tensor([0.0001, 0.2239, 0.9355], device='cuda:0', grad_fn=<SelectBackward>)
_atten1: tensor([0.0000, 0.1769, 0.9498], device='cuda:0', grad_fn=<SelectBackward>)
_atten1: tensor([8.3101e-06, 7.8170e-01, 9.0070e-01],device='cuda:0', grad_fn=<SelectBackward>)
_atten1: tensor([7.7697e-06, 7.6733e-01, 9.3726e-01], device='cuda:0', grad_fn=<SelectBackward>)
_atten1: tensor([0.0002, 0.1603, 0.9636], device='cuda:0', grad_fn=<SelectBackward>)
_atten1: tensor([4.0348e-06, 9.6948e-01, 9.8192e-01], device='cuda:0', grad_fn=<SelectBackward>)
```

Did you face this issue?
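Saturation matters because the sigmoid's derivative, written in terms of its output s, is s(1 - s): outputs such as 0.99899 or 6.0899e-07 in the log above pass almost no gradient back to the APN's last layer. A quick check (`sigmoid_grad_from_output` is just an illustrative helper):

```python
def sigmoid_grad_from_output(s):
    """Derivative of sigmoid(z) with respect to z, expressed via s = sigmoid(z)."""
    return s * (1.0 - s)


if __name__ == "__main__":
    # 0.5 is the unsaturated maximum; the rest are values from the log above
    for s in (0.5, 0.9355, 0.99899, 6.0899e-07):
        print(f"s={s:<10g} ds/dz={sigmoid_grad_from_output(s):.3g}")
```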


Regarding rescaling the raw APN outputs during pretraining: yes, I did.


As for the sigmoid saturation, that didn't happen during my pretraining. Have you checked your pretraining loss?

Btw, happy new year!

Happy New Year!! @klrc I found the problem with my dataloader. Thanks for all the help :)


I tried to train the network for deepfake detection. In the APN training phase the face is zoomed in normally, but in the alternating training phase the zoom stops working and the crop shows the full image. 😭 Do you have any idea why?


Sorry about that. I think there is actually an issue with the second phase. As mentioned above, the zooming also disappears quickly on CUB200 in the second phase (while accuracy stays high, which is quite strange). I think it's an issue with the margin loss implementation; I couldn't find the exact margin loss settings in the paper. Maybe the joint loss breaks the balance and training collapses toward one of the losses, in which case increasing the margin may help.

Sorry that I didn't go further with this implementation, but I'll try to check it if I have time. Until then, maybe you can try this, or the original source code. Hope it helps!

In this case, I wonder if you could just freeze the APN and exclude it from training. 🤔 @Lebenslang

Thank you! I froze the APN, but the accuracy has been decreasing. I'll check my code for other issues :)