keras


'only supports NHWC tensor format' in Conv3D on M1 Mac

Please go to TF Forum for help and support:

https://discuss.tensorflow.org/tag/keras

If you open a GitHub issue, here is our policy:

It must be a bug, a feature request, or a significant problem with the documentation (for small docs fixes please send a PR instead). The form below must be filled out.

Here's why we have that policy:

Keras developers respond to issues. We want to focus on work that benefits the whole community, e.g., fixing bugs and adding features. Support only helps individuals. GitHub also notifies thousands of people when issues are filed. We want them to see you communicating an interesting problem, rather than being redirected to Stack Overflow.

System information.

- Have I written custom code (as opposed to using a stock example script provided in Keras):

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): MacOS Monterey

- TensorFlow installed from (source or binary): Using Miniforge

- TensorFlow version (use command below): 2.8

- Python version: 3.9

- Bazel version (if compiling from source):

- GPU model and memory: M1 Mac

- Exact command to reproduce:

You can collect some of this information using our environment capture script:

https://github.com/tensorflow/tensorflow/tree/master/tools/tf_env_collect.sh

You can obtain the TensorFlow version with: `python -c "import tensorflow as tf; print(tf.version.GIT_VERSION, tf.version.VERSION)"`
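On an M1 Mac it can also help to report the CPU architecture of the Python build and which devices TensorFlow can see. This is a small sketch. It assumes that GPU access on Apple Silicon, if any, comes from Apple's `tensorflow-metal` plugin, because the report does not say which packages were installed:

```python
import platform

import tensorflow as tf

# Same information as the one-liner above: git revision and release version.
print("TensorFlow:", tf.version.GIT_VERSION, tf.version.VERSION)
# Python version and machine architecture ('arm64' for a native Apple Silicon build).
print("Python:", platform.python_version(), "| machine:", platform.machine())

# Physical GPUs TensorFlow can see. On Apple Silicon a GPU entry is only
# expected when a GPU plugin (assumed: tensorflow-metal) is installed.
gpus = tf.config.list_physical_devices("GPU")
print("GPUs:", gpus)
```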

I am trying to run TensorFlow on an M1 Mac (installed via Miniforge), and I get an `InvalidArgumentError` while fitting the model. Can someone please help me find what is wrong? Below is the code I am trying to run.

Code used to fit the data:

```python
model_1.fit(train_generator, steps_per_epoch=steps_per_epoch, epochs=20, verbose=1,
            callbacks=callbacks_list, validation_data=val_generator,
            validation_steps=validation_steps, class_weight=None, workers=1, initial_epoch=0)
```

The input layers are defined as follows:

```python
model_1 = Sequential()
model_1.add(Conv3D(8, kernel_size=(3, 3, 3), padding='same', input_shape=(30, 120, 120, 3)))
model_1.add(BatchNormalization())
model_1.add(Activation('relu'))
```

Describe the problem clearly here. Be sure to convey here why it's a bug in Keras or why the requested feature is needed.

An `only supports NHWC tensor format` error is raised.
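NHWC is TensorFlow's name for the channels-last layout (batch, height, width, channels). For 3-D convolutions the analogue is NDHWC. With its default `data_format='channels_last'`, `Conv3D(..., input_shape=(30, 120, 120, 3))` expects batches shaped (batch, frames, height, width, channels). The NumPy sketch below only illustrates that layout and shows how a channels-first array would be converted. The shapes come from the report. Because the posted model already uses channels-last input, this is not a confirmed fix for the error:

```python
import numpy as np

batch_size, frames, height, width, channels = 2, 30, 120, 120, 3

# Channels-last (NDHWC) batch: the layout Conv3D expects by default and the
# layout the generator in this thread allocates with np.zeros.
ndhwc = np.zeros((batch_size, frames, height, width, channels), dtype=np.float32)

# A hypothetical channels-first (NCDHW) batch, e.g. from another pipeline.
ncdhw = np.random.rand(batch_size, channels, frames, height, width).astype(np.float32)

# Move the channel axis from position 1 to the end to obtain NDHWC.
converted = np.transpose(ncdhw, (0, 2, 3, 4, 1))

print(ndhwc.shape)      # (2, 30, 120, 120, 3)
print(converted.shape)  # (2, 30, 120, 120, 3)
```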

Describe the expected behavior.

- Do you want to contribute a PR? (yes/no):

- If yes, please read this page for instructions

- Briefly describe your candidate solution (if contributing):

```python
# Imports were not part of the original post; these tf.keras imports are an assumption.
import os

import tensorflow as tf
from tensorflow.keras.callbacks import ModelCheckpoint, ReduceLROnPlateau
from tensorflow.keras.layers import (Activation, BatchNormalization, Conv3D, Dense,
                                     Dropout, Flatten, MaxPooling3D)
from tensorflow.keras.models import Sequential

# input_shape = (30, 100, 100, 3)
# write your model here
model_1 = Sequential()
model_1.add(Conv3D(8, kernel_size=(3, 3, 3), padding='same', input_shape=(30, 120, 120, 3)))
model_1.add(BatchNormalization())
model_1.add(Activation('relu'))

model_1.add(Conv3D(16, (3, 3, 3), padding='same'))
model_1.add(Activation('relu'))
model_1.add(BatchNormalization())
model_1.add(MaxPooling3D(pool_size=(2, 2, 2)))

model_1.add(Conv3D(32, (2, 2, 2), padding='same'))
model_1.add(Activation('relu'))
model_1.add(BatchNormalization())
model_1.add(MaxPooling3D(pool_size=(2, 2, 2)))

model_1.add(Conv3D(64, (2, 2, 2), padding='same'))
model_1.add(Activation('relu'))
model_1.add(BatchNormalization())
model_1.add(MaxPooling3D(pool_size=(2, 2, 2)))

model_1.add(Conv3D(128, (2, 2, 2), padding='same'))
model_1.add(Activation('relu'))
model_1.add(BatchNormalization())
model_1.add(MaxPooling3D(pool_size=(2, 2, 2)))

# Flatten layer
model_1.add(Flatten())

model_1.add(Dense(1000, activation='relu'))
model_1.add(Dropout(0.5))

model_1.add(Dense(500, activation='relu'))
model_1.add(Dropout(0.5))

# Softmax layer
model_1.add(Dense(5, activation='softmax'))

# learning_rate replaces the deprecated lr argument.
optimiser = tf.keras.optimizers.SGD(learning_rate=0.001, decay=1e-6, momentum=0.7, nesterov=True)  # write your optimizer
# optimiser = tf.keras.optimizers.Adam(learning_rate=0.001)  # write your optimizer
model_1.compile(optimizer=optimiser, loss='categorical_crossentropy', metrics=['categorical_accuracy'])
model_1.summary()  # summary() prints the table itself and returns None

train_generator = generator(train_path, train_doc, batch_size)
val_generator = generator(val_path, val_doc, batch_size)

model_name = 'model_init' + '' + str(curr_dt_time).replace(' ', '').replace(':', '') + '/'

if not os.path.exists(model_name):
    os.mkdir(model_name)

filepath = model_name + 'model-{epoch:05d}-{loss:.5f}-{categorical_accuracy:.5f}-{val_loss:.5f}-{val_categorical_accuracy:.5f}.h5'

# save_freq='epoch' replaces the deprecated period=1.
checkpoint = ModelCheckpoint(filepath, monitor='val_loss', verbose=1, save_best_only=True,
                             save_weights_only=False, mode='auto', save_freq='epoch')

# The compiled metric is categorical_accuracy, so Keras logs it as
# 'val_categorical_accuracy'; there is no 'val_accuracy' key to monitor.
LR = ReduceLROnPlateau(monitor='val_categorical_accuracy', patience=5, verbose=1,
                       factor=0.5, min_lr=0.00001)

callbacks_list = [checkpoint, LR]

if (num_train_sequences % batch_size) == 0:
    steps_per_epoch = int(num_train_sequences / batch_size)
else:
    steps_per_epoch = (num_train_sequences // batch_size) + 1

if (num_val_sequences % batch_size) == 0:
    validation_steps = int(num_val_sequences / batch_size)
else:
    validation_steps = (num_val_sequences // batch_size) + 1

model_1.fit(train_generator, steps_per_epoch=steps_per_epoch, epochs=20, verbose=1,
            callbacks=callbacks_list, validation_data=val_generator,
            validation_steps=validation_steps, class_weight=None, workers=1, initial_epoch=0)
```
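The two `if`/`else` blocks that compute `steps_per_epoch` and `validation_steps` are a ceiling division: one extra step whenever a partial batch is left over. Below is a stdlib sketch of the same computation. `steps_for` is a hypothetical helper name, and the counts in the usage lines are illustrative, not the dataset's:

```python
def steps_for(num_sequences: int, batch_size: int) -> int:
    """Steps needed to cover num_sequences once in batches of batch_size,
    counting a final partial batch as one extra step."""
    # -(-a // b) is integer-only ceiling division: floor division of the
    # negated count rounds toward minus infinity, and negating back rounds up.
    return -(-num_sequences // batch_size)


# Illustrative counts, not the real dataset sizes.
print(steps_for(663, 10))  # 67: 66 full batches plus one partial batch of 3
print(steps_for(100, 10))  # 10: divides evenly, no extra step
```

Staying in integer arithmetic avoids the float detour of `int(n / batch_size)` while giving the same result as the original branches.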

Provide a reproducible test case that is the bare minimum necessary to generate the problem. If possible, please share a link to Colab/Jupyter/any notebook.

Link for already opened issue: https://github.com/tensorflow/tensorflow/issues/56205

```
File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/keras/engine/training_generator_v1.py:202, in model_iteration(model, data, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, validation_freq, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch, mode, batch_size, steps_name, **kwargs)
    199     break
    201 # Setup work for each epoch.
--> 202 model.reset_metrics()
    203 epoch_logs = {}
    204 if mode == ModeKeys.TRAIN:

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/keras/engine/training_v1.py:1003, in Model.reset_metrics(self)
   1001 metrics = self._get_training_eval_metrics()
   1002 for m in metrics:
-> 1003   m.reset_state()
   1005 # Reset metrics on all the distributed (cloned) models.
   1006 if self._distribution_strategy:

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/keras/metrics.py:260, in Metric.reset_state(self)
    258   return self.reset_states()
    259 else:
--> 260   backend.batch_set_value([(v, 0) for v in self.variables])

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/util/dispatch.py:206, in add_dispatch_support.<locals>.wrapper(*args, **kwargs)
    204 """Call target, and fall back on dispatchers if there is a TypeError."""
    205 try:
--> 206   return target(*args, **kwargs)
    207 except (TypeError, ValueError):
    208   # Note: convert_to_eager_tensor currently raises a ValueError, not a
    209   # TypeError, when given unexpected types. So we need to catch both.
    210   result = dispatch(wrapper, args, kwargs)

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/keras/backend.py:3829, in batch_set_value(tuples)
   3827     assign_ops.append(assign_op)
   3828   feed_dict[assign_placeholder] = value
-> 3829 get_session().run(assign_ops, feed_dict=feed_dict)

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/keras/backend.py:745, in get_session(op_input_list)
    743 if not _MANUAL_VAR_INIT:
    744   with session.graph.as_default():
--> 745     _initialize_variables(session)
    746 return session

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/keras/backend.py:1193, in _initialize_variables(session)
   1189       candidate_vars.append(v)
   1190 if candidate_vars:
   1191   # This step is expensive, so we only run it on variables not already
   1192   # marked as initialized.
-> 1193   is_initialized = session.run(
   1194       [variables_module.is_variable_initialized(v) for v in candidate_vars])
   1195   # TODO(kathywu): Some metric variables loaded from SavedModel are never
   1196   # actually used, and do not have an initializer.
   1197   should_be_initialized = [
   1198       (not is_initialized[n]) and v.initializer is not None
   1199       for n, v in enumerate(candidate_vars)]

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/client/session.py:967, in BaseSession.run(self, fetches, feed_dict, options, run_metadata)
    964 run_metadata_ptr = tf_session.TF_NewBuffer() if run_metadata else None
    966 try:
--> 967   result = self._run(None, fetches, feed_dict, options_ptr,
    968                      run_metadata_ptr)
    969   if run_metadata:
    970     proto_data = tf_session.TF_GetBuffer(run_metadata_ptr)

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/client/session.py:1190, in BaseSession._run(self, handle, fetches, feed_dict, options, run_metadata)
   1187 # We only want to really perform the run if fetches or targets are provided,
   1188 # or if the call is a partial run that specifies feeds.
   1189 if final_fetches or final_targets or (handle and feed_dict_tensor):
-> 1190   results = self._do_run(handle, final_targets, final_fetches,
   1191                          feed_dict_tensor, options, run_metadata)
   1192 else:
   1193   results = []

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/client/session.py:1368, in BaseSession._do_run(self, handle, target_list, fetch_list, feed_dict, options, run_metadata)
   1365   return self._call_tf_sessionprun(handle, feed_dict, fetch_list)
   1367 if handle is None:
-> 1368   return self._do_call(_run_fn, feeds, fetches, targets, options,
   1369                        run_metadata)
   1370 else:
   1371   return self._do_call(_prun_fn, handle, feeds, fetches)

File ~/miniforge3/envs/tensorflow/lib/python3.9/site-packages/tensorflow/python/client/session.py:1394, in BaseSession._do_call(self, fn, *args)
   1389 if 'only supports NHWC tensor format' in message:
   1390   message += ('\nA possible workaround: Try disabling Grappler optimizer'
   1391               '\nby modifying the config for creating the session eg.'
   1392               '\nsession_config.graph_options.rewrite_options.'
   1393               'disable_meta_optimizer = True')
-> 1394 raise type(e)(node_def, op, message)

InvalidArgumentError: Node 'training/Adam/gradients/gradients/dropout_1/cond_grad/StatelessIf': Connecting to invalid output 1 of source node dropout_1/cond which has 1 outputs. Try using tf.compat.v1.experimental.output_all_intermediates(True).
```
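This traceback names two workarounds. The first is the Grappler hint attached to the `only supports NHWC tensor format` check. The second is the `output_all_intermediates` hint in the final `InvalidArgumentError`. The sketch below applies both at the top of a script, before the model is built. It uses TensorFlow's global optimizer options instead of a hand-built session config (a swapped-in but equivalent route, assumed to reach the Keras backend session). It is untested on M1 hardware and may not resolve the error:

```python
import tensorflow as tf

# Grappler hint from the traceback: disable the meta optimizer globally,
# instead of editing session_config.graph_options.rewrite_options by hand.
tf.config.optimizer.set_experimental_options({"disable_meta_optimizer": True})

# Hint from the final InvalidArgumentError: keep all intermediate outputs of
# control-flow ops (such as the cond inside Dropout) so gradient nodes can
# connect to them. This only affects graph (non-eager) code and should run
# before the model is built.
tf.compat.v1.experimental.output_all_intermediates(True)

print(tf.config.optimizer.get_experimental_options())
```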

Include any logs or source code that would be helpful to diagnose the problem. If including tracebacks, please include the full traceback. Large logs and files should be attached. Try to provide a reproducible test case that is the bare minimum necessary to generate the problem.

@mj-mayank, I was facing a different error while executing the given code. Kindly find the gist of it here and share the complete code and dependencies. Thank you!

This issue has been automatically marked as stale because it has no recent activity. It will be closed if no further activity occurs. Thank you.

@tilakrayal Please also add the following code after the import statements:

```python
import os

import numpy as np

# imread and resize come from the notebook's import section, which is not shown here.

## Generator function for input data without augmentation.

x = 30   # No. of frames (images) per video
y = 120  # Width of the image
z = 120  # Height of the image


def generator(source_path, folder_list, batch_size):
    img_idx = list(range(0, x))  # image numbers to use for each video

    def load_batch(t, start, size):
        # x frames per video, (y, z) is the final image size, 3 channels (RGB)
        batch_data = np.zeros((size, x, y, z, 3))
        batch_labels = np.zeros((size, 5))  # one-hot representation of the output
        for folder in range(size):  # iterate over the batch
            fields = t[start + folder].strip().split(';')
            # Read all the images in the folder; sorted for a stable frame order,
            # since os.listdir returns entries in arbitrary order.
            imgs = sorted(os.listdir(source_path + '/' + fields[0]))
            for idx, item in enumerate(img_idx):  # iterate over the frames of a folder
                image = imread(source_path + '/' + fields[0] + '/' + imgs[item]).astype(np.float32)
                # The images come in 2 different shapes, and Conv3D throws an error
                # if the inputs in a batch have different shapes, so resize them all.
                resized_image = resize(image, (y, z))
                resized_image = resized_image / 255  # normalise
                batch_data[folder, idx, :, :, 0] = resized_image[:, :, 0]
                batch_data[folder, idx, :, :, 1] = resized_image[:, :, 1]
                batch_data[folder, idx, :, :, 2] = resized_image[:, :, 2]
            batch_labels[folder, int(fields[2])] = 1
        return batch_data, batch_labels

    while True:
        t = np.random.permutation(folder_list)
        num_batches = len(folder_list) // batch_size  # number of full batches
        for batch in range(num_batches):
            yield load_batch(t, batch * batch_size, batch_size)

        # Remaining data points after the full batches. A separate variable keeps
        # batch_size unchanged for the next pass of the while loop, and the slice
        # starts right after the last full batch.
        remaining = len(folder_list) - (batch_size * num_batches)
        if remaining > 0:
            yield load_batch(t, batch_size * num_batches, remaining)
```

```python
import datetime

curr_dt_time = datetime.datetime.now()
train_path = '/Users/mj/Desktop/Masters Docs/Lecture Files/Gesture Recognition Project/Project_data/train'
val_path = '/Users/mj/Desktop/Masters Docs/Lecture Files/Gesture Recognition Project/Project_data/val'

# train_doc and val_doc (lists of 'folder;...;label' lines) are not defined in the posted code.
num_train_sequences = len(train_doc)
print('# training sequences =', num_train_sequences)
num_val_sequences = len(val_doc)
print('# validation sequences =', num_val_sequences)
num_epochs = 10  # choose the number of epochs
print('# epochs =', num_epochs)
```
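Handling the final partial batch in a generator like this is easy to get wrong if `batch_size` is reassigned inside the `while True` loop, because the smaller value then persists into every later epoch. Below is a stdlib-only sketch of the index bookkeeping. It steps through the list with `range(start, stop, step)`, so the last, shorter slice falls out naturally. `batch_slices` and the folder names are hypothetical:

```python
from typing import Iterator, List, Sequence


def batch_slices(items: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive batches of items; the last batch is shorter when
    len(items) is not a multiple of batch_size."""
    for start in range(0, len(items), batch_size):
        # Slicing past the end is safe in Python, so the final slice simply
        # holds whatever is left; batch_size itself is never modified.
        yield list(items[start:start + batch_size])


folders = [f"video_{i}" for i in range(7)]  # hypothetical folder names
for batch in batch_slices(folders, 3):
    print(batch)
# ['video_0', 'video_1', 'video_2']
# ['video_3', 'video_4', 'video_5']
# ['video_6']
```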

@mj-mayank, the code shared is full of indentation errors. Please share a Colab gist that reproduces the reported issue, or simple standalone, properly indented code with all dependencies, so that we can replicate it. Thank you!
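A standalone script of the kind requested here might look like the sketch below. To run without the private data, it replaces the gesture dataset and `generator` with random NumPy arrays, which is an assumption. It keeps the layer types from the report but uses fewer blocks. It has not been confirmed to reproduce the M1 error:

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, models

# Hypothetical stand-in data: random clips shaped like the gesture videos
# (30 frames of 120x120 RGB) with one-hot labels for 5 classes.
num_samples, num_classes = 4, 5
x_train = np.random.rand(num_samples, 30, 120, 120, 3).astype("float32")
y_train = tf.keras.utils.to_categorical(
    np.random.randint(0, num_classes, size=num_samples), num_classes)

# Trimmed-down version of the reported model: same layer types
# (Conv3D, BatchNormalization, MaxPooling3D, Flatten, Dense, Dropout), fewer blocks.
model = models.Sequential([
    layers.Input(shape=(30, 120, 120, 3)),
    layers.Conv3D(8, (3, 3, 3), padding="same"),
    layers.BatchNormalization(),
    layers.Activation("relu"),
    layers.MaxPooling3D(pool_size=(2, 2, 2)),
    layers.Conv3D(16, (2, 2, 2), padding="same"),
    layers.Activation("relu"),
    layers.BatchNormalization(),
    layers.MaxPooling3D(pool_size=(2, 2, 2)),
    layers.Flatten(),
    layers.Dense(32, activation="relu"),
    layers.Dropout(0.5),
    layers.Dense(num_classes, activation="softmax"),
])
model.compile(
    optimizer=tf.keras.optimizers.SGD(learning_rate=0.001, momentum=0.7, nesterov=True),
    loss="categorical_crossentropy",
    metrics=["categorical_accuracy"],
)

# One short epoch is enough to check whether training starts at all.
history = model.fit(x_train, y_train, batch_size=2, epochs=1, verbose=1)
print(sorted(history.history))
```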

Hi @tilakrayal, please find the link to the notebook below: https://colab.research.google.com/drive/1Y7OHw92gQzkqchmz7SmMJgrO3xW7fAxa?authuser=1

@mj-mayank, I do not have access to the link you have provided. Could you please provide the required permissions to view the files. Thank you!

@tilakrayal I have already granted view access to the file. Please see below:

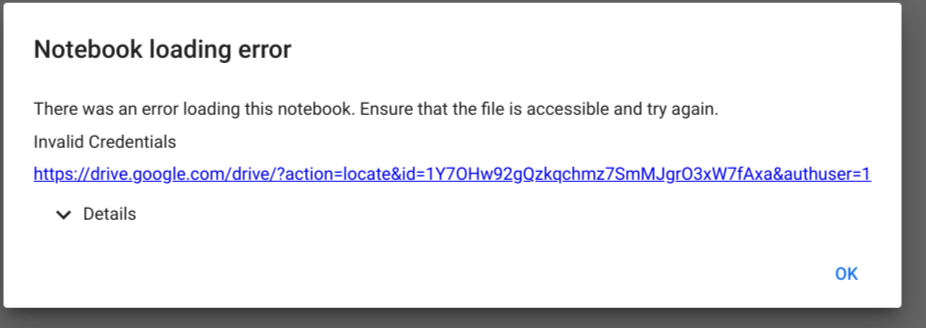

@mj-mayank, I encountered the error below while trying to access the link you provided.

Could you please provide the required permissions to access the files. Thank you!

This issue has been automatically marked as stale because it has no recent activity. It will be closed if no further activity occurs. Thank you.

I am also experiencing this issue. Please re-open this issue if possible.

@dkargilis, could you share your code snippet with @tilakrayal? I tried sharing the notebook, but he is not able to access it (even though I have granted all the necessary access).

Is it possible to try the link again, @tilakrayal? I am able to access the notebook here: https://colab.research.google.com/drive/1Y7OHw92gQzkqchmz7SmMJgrO3xW7fAxa?authuser=1

@dkargilis & @mj-mayank, I encountered the error below while trying to access the link you provided.

This issue has been automatically marked as stale because it has no recent activity. It will be closed if no further activity occurs. Thank you.

@tilakrayal I am not sure what else to do here, since @dkargilis is able to access the notebook.

Closing as stale. Please reopen if you'd like to work on this further.

@mj-mayank,

As mentioned, I do not have access to the link you have provided. Could you please provide the required permissions to view the files or share a colab gist with the reported error. Thank you!

This issue has been automatically marked as stale because it has no recent activity. It will be closed if no further activity occurs. Thank you.

Closing as stale. Please reopen if you'd like to work on this further.

I am experiencing the same problem described in this thread: I am unable to use Conv3D on an M1 Mac. I am not an expert with GitHub, so I may not be able to provide additional information. I had the same problem accessing the link, but I think it may be browser-related. In Edge I could only open it in a private window, but in Chrome I could open it in both a private and a regular window.

Hope this helps.