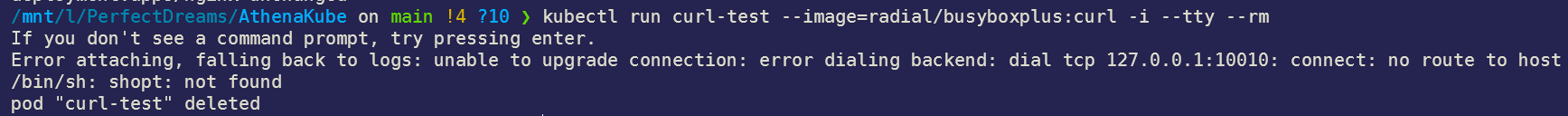

If a Service is bound to port `10010`, attaching to a container fails with `unable to upgrade connection: error dialing backend: dial tcp 127.0.0.1:10010: connect: no route to host`.

Environmental Info:
K3s Version:

```
k3s version v1.22.5+k3s1 (405bf79d)
go version go1.16.10
```

Node(s) CPU architecture, OS, and Version:

```
Linux k3s-athena1 5.10.0-10-amd64 #1 SMP Debian 5.10.84-1 (2021-12-08) x86_64 GNU/Linux
```

Cluster Configuration: One control plane and one agent

Describe the bug: If a Service has port 10010 bound, `kubectl attach` fails, apparently because port 10010 is used for attaching. This is confusing: there is no documentation about k3s using port 10010 for attaching, and depending on the service you run on port 10010, the real issue can be hard to track down.

Steps To Reproduce:

- Apply this Service and Deployment:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: nginx
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
    - protocol: TCP
      # Port bound in the node VM itself, you can access it externally
      port: 10010
      # Port within the container
      targetPort: 80
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
              protocol: TCP
```

- Run

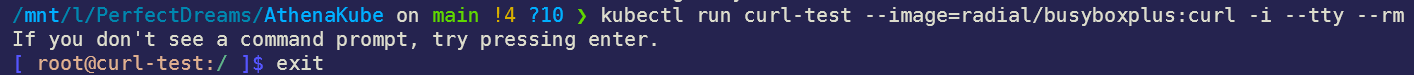

kubectl run curl-test --image=radial/busyboxplus:curl -i --tty --rm

Expected behavior:

Actual behavior:

Additional context / logs: I haven't found anything in the k3s or Kubernetes docs saying that port 10010 must be available, which is why I'm creating this issue.

This is also a nasty bug because, depending on the service you are hosting on port 10010, the cause is not obvious: the only error may be `Error attaching, falling back to logs:`.

(Could it be related to this? https://github.com/moby/moby/issues/37507)

Backporting

- [ ] Needs backporting to older releases

Are you using k3s with the --docker flag, or using the containerd embedded in k3s?


containerd embedded in k3s

Indeed, this does appear to be the default containerd CRI plugin stream server port:

- https://github.com/containerd/containerd/blob/release/1.4/PLUGINS.md#:~:text=stream_server_port
- https://github.com/k3s-io/k3s/blob/c08d394994956dd144cd99e4ef2899aaec1fe3be/pkg/agent/templates/templates_linux.go#L16
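To check which stream server port a given node actually uses, here is a naive sketch that scans the generated containerd config for `stream_server_port`. It assumes the k3s-generated config lives at `/var/lib/rancher/k3s/agent/etc/containerd/config.toml` (pass a different path as the first argument otherwise) and that the key and its value sit on one line. `streamServerPort` is a hypothetical helper, and it is a line scanner, not a real TOML parser.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strings"
)

// streamServerPort scans containerd config.toml contents for a line of the
// form `stream_server_port = "..."` and returns the unquoted value.
// It ignores TOML sections and does not handle multi-line values.
func streamServerPort(config string) (string, bool) {
	sc := bufio.NewScanner(strings.NewReader(config))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		parts := strings.SplitN(line, "=", 2)
		// Commented-out lines ("# stream_server_port ...") do not match the key.
		if len(parts) != 2 || strings.TrimSpace(parts[0]) != "stream_server_port" {
			continue
		}
		return strings.Trim(strings.TrimSpace(parts[1]), `"`), true
	}
	return "", false
}

func main() {
	// Assumed default location of the k3s-generated containerd config.
	path := "/var/lib/rancher/k3s/agent/etc/containerd/config.toml"
	if len(os.Args) > 1 {
		path = os.Args[1]
	}
	data, err := os.ReadFile(path)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	if port, ok := streamServerPort(string(data)); ok {
		fmt.Println("stream_server_port:", port)
	} else {
		fmt.Println("stream_server_port not set explicitly")
	}
}
```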

My guess is that the Service's iptables rules hijack connections to the containerd CRI stream server port and send the traffic to the pod instead.

This repository uses a bot to automatically label issues which have not had any activity (commit/comment/label) for 180 days. This helps us manage the community issues better. If the issue is still relevant, please add a comment to the issue so the bot can remove the label and we know it is still valid. If it is no longer relevant (or possibly fixed in the latest release), the bot will automatically close the issue in 14 days. Thank you for your contributions.

beep boop not stale

While the workaround is easy (just... don't bind anything to port 10010), it would still be nice to have this documented.
