icefall

Accelerating RNN-T Training and Inference Using CTC guidance https://arxiv.org/pdf/2210.16481.pdf

### with scaling Lconv

#### greedy_search

| Model | test-clean | test-other | Decoding time(s) (3090) | Config |
| ----------...

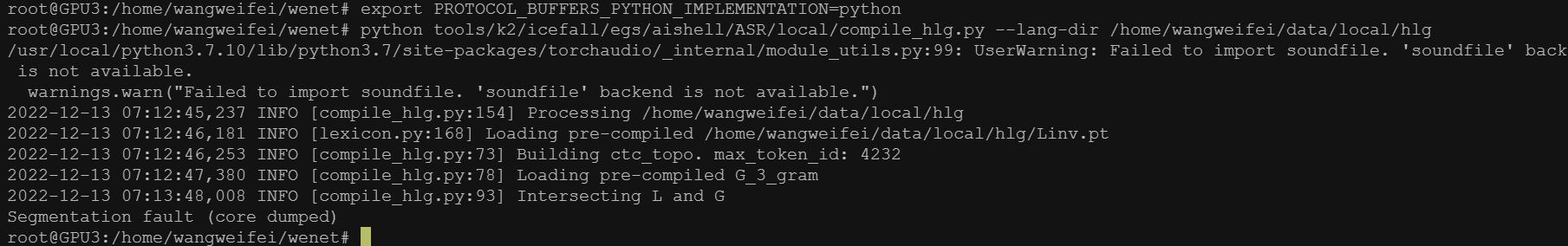

I now have lm.arpa, lexicon.txt, and a word list. Which script should I use to generate HLG: icefall/egs/librispeech/ASR/prepare.sh?

Is there any way to get timestamps that include not only the start time of each word but also its duration?

I created this PR to post my current results for smaller models (i.e., models with fewer parameters). I'd say the parameters used to construct the models were chosen arbitrarily...

**Note:** Not finished yet. Preview here: https://pkufool.github.io/docs/icefall/ I separated the recipes into two directories: streaming ASR and non-streaming ASR.

I want to use LM-GRAM from https://github.com/k2-fsa/icefall/blob/b293db4baf1606cfe95066cf28ffde56173a7ddb/icefall/ngram_lm.py#L27 but don't know how to get the backoff-id when building the LG graph. L: subword. G: n-gram model. Built with https://github.com/k2-fsa/icefall/blob/master/egs/librispeech/ASR/local/prepare_lang_bpe.py and https://github.com/k2-fsa/icefall/blob/master/egs/librispeech/ASR/local/compile_lg.py

This PR supports transformer LM training in icefall. Initial testing: an RNNLM and a transformer LM were trained on LibriSpeech 960h text, and their best perplexities are shown below. | LM...
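For readers comparing the perplexities mentioned above, here is a minimal sketch of the standard definition: perplexity is the exponential of the average negative log-likelihood per token. The function name `perplexity` and the numbers are hypothetical and for illustration only; this is not taken from the PR and is not icefall's own evaluation code.

```python
import math


def perplexity(total_nll: float, num_tokens: int) -> float:
    """Return exp(total_nll / num_tokens).

    total_nll is the summed negative log-likelihood in nats
    (natural log) over num_tokens scored tokens.
    """
    if num_tokens <= 0:
        raise ValueError("num_tokens must be positive")
    return math.exp(total_nll / num_tokens)


# Hypothetical values: 1000 tokens, each scored with probability 1/50.
example_ppl = perplexity(total_nll=1000 * math.log(50.0), num_tokens=1000)
print(f"perplexity = {example_ppl:.2f}")
```

Lower perplexity means the LM assigns higher average probability to the held-out text. Values are only comparable across models when the tokenization and the test set are the same.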

I am trying to understand the requirements on the RNNLM for LODR rescoring. I am using something along the lines of the LibriSpeech pruned_transducer_stateless3 recipe, with https://github.com/k2-fsa/icefall/tree/master/egs/ptb/LM as the prototype for the LM...

Add ConvRNN-T Encoder. ConvRNN-T: Convolutional Augmented Recurrent Neural Network Transducers for Streaming Speech Recognition https://arxiv.org/pdf/2209.14868.pdf Model size: 44M. The best WER on LibriSpeech 960h within 20 epochs is: epoch-20 avg-4...