k0sctl


Worker node fails to initialize

I use LXD to create the cluster.

I launched a worker and a controller, both running Ubuntu 20.04.4 LTS, and copied my SSH key to them.

My k0sctl.yaml file:

```yaml
apiVersion: k0sctl.k0sproject.io/v1beta1
kind: Cluster
metadata:
  name: k0s-cluster
spec:
  hosts:
  - ssh:
      address: 10.6.27.14
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa
    role: controller
  - ssh:
      address: 10.6.27.119
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa
    role: worker
  k0s:
    version: 1.23.5+k0s.0
```
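As far as I can tell the config is structurally fine. As a quick, hypothetical sanity check (not part of k0sctl), the same host list can be written as plain Python data and checked for the obvious mistakes: an unknown role, a missing address, no controller, or a duplicated address. The role names are the ones k0sctl accepts (`controller`, `worker`, `controller+worker`, `single`); `check_hosts` is an illustrative helper, not a k0sctl API.

```python
# Hypothetical sanity check mirroring the k0sctl.yaml above as Python data,
# so no YAML parser is needed. Not part of k0sctl itself.
VALID_ROLES = {"controller", "worker", "controller+worker", "single"}
CONTROLLER_ROLES = {"controller", "controller+worker", "single"}

hosts = [
    {"ssh": {"address": "10.6.27.14", "user": "root", "port": 22,
             "keyPath": "/root/.ssh/id_rsa"}, "role": "controller"},
    {"ssh": {"address": "10.6.27.119", "user": "root", "port": 22,
             "keyPath": "/root/.ssh/id_rsa"}, "role": "worker"},
]


def check_hosts(hosts):
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    for i, host in enumerate(hosts):
        role = host.get("role")
        if role not in VALID_ROLES:
            problems.append(f"host {i}: unknown role {role!r}")
        if not host.get("ssh", {}).get("address"):
            problems.append(f"host {i}: missing ssh.address")
    # At least one host must run the control plane.
    if not any(h.get("role") in CONTROLLER_ROLES for h in hosts):
        problems.append("no controller host defined")
    # Two hosts with the same address usually means a copy-paste error.
    addresses = [h.get("ssh", {}).get("address") for h in hosts]
    if len(addresses) != len(set(addresses)):
        problems.append("duplicate ssh.address")
    return problems


print(check_hosts(hosts))  # []
```

An empty result points away from the config file and toward the hosts themselves.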

I ran `k0sctl apply --config k0sctl.yaml`:


```
k0sctl v0.12.6 Copyright 2021, k0sctl authors.
Anonymized telemetry of usage will be sent to the authors.
By continuing to use k0sctl you agree to these terms:
https://k0sproject.io/licenses/eula
INFO ==> Running phase: Connect to hosts
INFO [ssh] 10.6.27.14:22: connected
INFO [ssh] 10.6.27.119:22: connected
INFO ==> Running phase: Detect host operating systems
INFO [ssh] 10.6.27.14:22: is running Ubuntu 20.04.4 LTS
INFO [ssh] 10.6.27.119:22: is running Ubuntu 20.04.4 LTS
INFO ==> Running phase: Prepare hosts
INFO ==> Running phase: Gather host facts
INFO [ssh] 10.6.27.119:22: using worker as hostname
INFO [ssh] 10.6.27.14:22: using controller as hostname
INFO [ssh] 10.6.27.119:22: discovered eth0 as private interface
INFO [ssh] 10.6.27.14:22: discovered eth0 as private interface
INFO ==> Running phase: Validate hosts
INFO ==> Running phase: Gather k0s facts
INFO ==> Running phase: Validate facts
INFO ==> Running phase: Download k0s on hosts
INFO [ssh] 10.6.27.14:22: downloading k0s 1.23.5+k0s.0
INFO [ssh] 10.6.27.119:22: downloading k0s 1.23.5+k0s.0
INFO ==> Running phase: Configure k0s
WARN [ssh] 10.6.27.14:22: generating default configuration
INFO [ssh] 10.6.27.14:22: validating configuration
INFO [ssh] 10.6.27.14:22: configuration was changed
INFO ==> Running phase: Initialize the k0s cluster
INFO [ssh] 10.6.27.14:22: installing k0s controller
INFO [ssh] 10.6.27.14:22: waiting for the k0s service to start
INFO [ssh] 10.6.27.14:22: waiting for kubernetes api to respond
INFO ==> Running phase: Install workers
INFO [ssh] 10.6.27.119:22: validating api connection to https://10.6.27.14:6443
INFO [ssh] 10.6.27.14:22: generating token
INFO [ssh] 10.6.27.119:22: writing join token
INFO [ssh] 10.6.27.119:22: installing k0s worker
INFO [ssh] 10.6.27.119:22: starting service
INFO [ssh] 10.6.27.119:22: waiting for node to become ready
INFO * Running clean-up for phase: Initialize the k0s cluster
INFO * Running clean-up for phase: Install workers
ERRO apply failed - log file saved to /var/cache/k0sctl/k0sctl.log
FATA failed on 1 hosts:
- [ssh] 10.6.27.119:22: [ssh] 10.6.27.14:22: node worker status not reported as ready
```

Log file: https://gist.github.com/murka/383d4de9e7f1e5ea8353fd8eb68fdd0a
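For context, the `FATA` line means the Kubernetes Node object named `worker` (the hostname k0sctl detected) never reported a `Ready` condition with status `True` while k0sctl was waiting. You can inspect that object yourself on the controller with `k0s kubectl get node worker -o json`. Below is a minimal sketch of the Ready-condition check in Python, illustrating what the message refers to; it is not k0sctl's own code, and `node_is_ready` is a hypothetical helper.

```python
import json


def node_is_ready(node_json: str) -> bool:
    """Return True if a Node object's Ready condition has status "True".

    `node_json` is the output of e.g. `k0s kubectl get node worker -o json`.
    """
    node = json.loads(node_json)
    for cond in node.get("status", {}).get("conditions", []):
        if cond.get("type") == "Ready":
            # Status is the string "True", "False" or "Unknown".
            return cond.get("status") == "True"
    # No Ready condition at all: the kubelet has not reported status yet.
    return False


# Made-up sample of a node whose kubelet is not ready.
sample = json.dumps({
    "kind": "Node",
    "status": {"conditions": [
        {"type": "Ready", "status": "False", "reason": "KubeletNotReady"},
    ]},
})
print(node_is_ready(sample))  # False
```

If the node does not show up in `k0s kubectl get nodes` at all, the kubelet on the worker never registered, which points at the worker's own logs rather than the controller.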

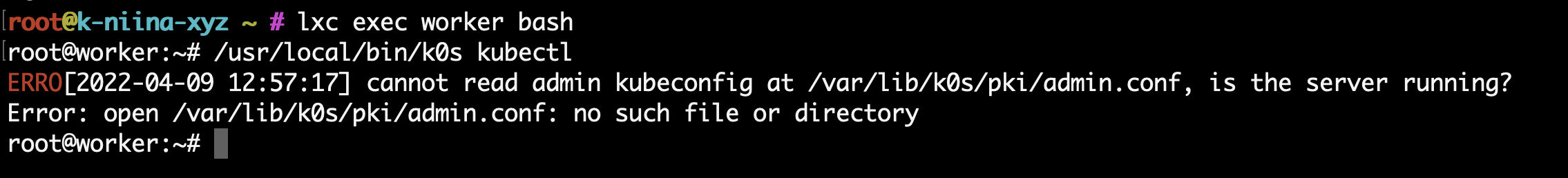

The worker instance has no admin.conf file.

My guess is this is a networking issue between the VMs.
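One way to test the networking theory is to try plain TCP connections from the worker to the controller. The log shows the worker already validated its connection to `https://10.6.27.14:6443`, so the other ports are the more interesting ones. The port list below is an assumption taken from the k0s networking documentation (6443 kube-apiserver, 8132 konnectivity, 9443 k0s join API); check it against the docs for your k0s version. `check_ports` is a hypothetical helper meant to be run on the worker.

```python
import socket

# Controller ports a k0s worker connects to (assumed from the k0s
# networking docs; verify for your k0s version).
CONTROLLER_PORTS = {
    6443: "kube-apiserver",
    8132: "konnectivity",
    9443: "k0s API (join)",
}


def check_ports(host, ports, timeout=3.0):
    """Attempt a TCP connect to each port and return {port: reachable}."""
    results = {}
    for port in ports:
        try:
            # create_connection raises OSError (including timeouts) on failure.
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            results[port] = False
    return results
```

On the worker, `check_ports("10.6.27.14", CONTROLLER_PORTS)` should return `True` for every port; a `False` for 8132 or 9443 would support the networking theory. A successful TCP connect only proves the port is open, not that TLS or the service behind it is healthy.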

> The worker instance has no admin.conf file

It's not supposed to; admin.conf only exists on controllers.

@murka, you need to dig into the logs on the worker to see why things do not come up properly. My guess is that kubelet is unhappy, which is a common cause of this failure in container-based setups.
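A minimal sketch of that log dive, assuming the worker runs as the `k0sworker` systemd unit (the unit that `k0s install worker` creates). It is roughly the Python equivalent of `journalctl -u k0sworker --no-pager | grep -i kubelet | grep -iE 'error|failed|fatal'`; the keyword list is an arbitrary starting point, not an exhaustive set of kubelet failure messages. `worker_journal` and `kubelet_problems` are hypothetical helpers, to be run as root on the worker.

```python
import subprocess

# Arbitrary keywords that usually mark a problem line; extend as needed.
KEYWORDS = ("error", "failed", "fatal")


def worker_journal(unit="k0sworker", count=500):
    """Return the last `count` journal lines of the given systemd unit."""
    out = subprocess.run(
        ["journalctl", "-u", unit, "--no-pager", "-n", str(count)],
        capture_output=True, text=True, check=True,
    )
    return out.stdout.splitlines()


def kubelet_problems(lines):
    """Yield lines that mention kubelet and contain a problem keyword."""
    for line in lines:
        low = line.lower()
        if "kubelet" in low and any(k in low for k in KEYWORDS):
            yield line
```

Usage on the worker: `for line in kubelet_problems(worker_journal()): print(line)`. The first few matching lines after the service starts are usually the most informative.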