kaggle_ndsb2

Dropout placement

Hey, thanks for the nice code and blog post!

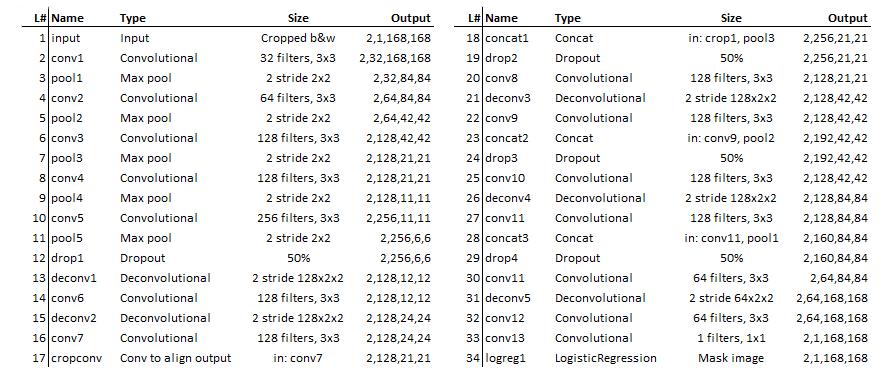

I don't know if it's important, though it confused me a bit. In the blog post the first dropout layer is placed after all downsampling layers, i.e. after pool5, but in the code it is placed after pool4. I think putting it after pool5, as in the post, would be the more consistent approach.

```python
...
pool4 = convolution_module(net, kernel_size, pad_size, filter_count=filter_count * 4, down_pool=True)
net = pool4
net = mx.sym.Dropout(net)  # first dropout: after pool4 here, after pool5 in the blog post
pool5 = convolution_module(net, kernel_size, pad_size, filter_count=filter_count * 8, down_pool=True)
net = pool5
net = convolution_module(net, kernel_size, pad_size, filter_count=filter_count * 4, up_pool=True)
...
```
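To make the two placements concrete, here is a toy sketch that only lists the order of the downsampling stages and the first dropout layer. It assumes the encoder has five pooling stages named `pool1` to `pool5`, as the snippet suggests; the function `layer_order`, its parameters and the `up1` decoder label are hypothetical, and no MXNet symbols are built.

```python
# Hypothetical toy sketch: stage names pool1..pool5 mirror the snippet above;
# the function, its parameters and the "up1" label are illustrative only.


def layer_order(num_pools=5, dropout_after=5):
    """List encoder stages, inserting one dropout right after pool<dropout_after>."""
    layers = []
    for i in range(1, num_pools + 1):
        layers.append(f"pool{i}")
        if i == dropout_after:
            layers.append("dropout")
    layers.append("up1")  # first upsampling stage of the decoder
    return layers


print(layer_order(dropout_after=4))
# ['pool1', 'pool2', 'pool3', 'pool4', 'dropout', 'pool5', 'up1']  (placement in the code)
print(layer_order(dropout_after=5))
# ['pool1', 'pool2', 'pool3', 'pool4', 'pool5', 'dropout', 'up1']  (placement in the blog post)
```

With `dropout_after=5` the dropout sits at the bottleneck, after every downsampling step, which is the placement the blog post describes.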

Hello, thanks. First of all, as far as I understand, dropout in FCNs is still under heavy debate. Placing it after pool4 or pool5 made no big difference, although I agree pool5 makes more sense; I suspect it was a typo. Dropout helped a little, but since there are so many paths to the output, I don't know if it's really useful. I've even read that spatial dropout works better: http://luna16.grand-challenge.org/serve/public_html/pdfs/ZNET_NDET.pdf/
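For readers unfamiliar with the distinction, the sketch below contrasts regular (element-wise) dropout with spatial (channel-wise) dropout on a toy feature map. It is a plain-Python illustration, not MXNet's `mx.sym.Dropout` and not the method from the linked paper; the nested-list layout `fmap[channel][row][col]`, the drop probability `p=0.5` and both function names are assumptions made for the example.

```python
import random

# Toy feature map stored as nested lists: fmap[channel][row][col].
# Both functions use "inverted" dropout: kept values are scaled by 1 / (1 - p)
# during training so the expected activation is unchanged.


def dropout(fmap, p, rng):
    """Regular dropout: zero each activation independently with probability p."""
    scale = 1.0 / (1.0 - p)
    return [[[0.0 if rng.random() < p else x * scale for x in row]
             for row in channel]
            for channel in fmap]


def spatial_dropout(fmap, p, rng):
    """Spatial dropout: zero each whole channel with probability p."""
    scale = 1.0 / (1.0 - p)
    out = []
    for channel in fmap:
        keep = rng.random() >= p  # one random draw per channel, not per pixel
        out.append([[x * scale if keep else 0.0 for x in row] for row in channel])
    return out


rng = random.Random(0)
fmap = [[[1.0] * 4 for _ in range(4)] for _ in range(8)]  # 8 channels of 4x4 ones
regular = dropout(fmap, 0.5, rng)
spatial = spatial_dropout(fmap, 0.5, rng)

# Surviving (non-zero) pixels per channel: spatial dropout only ever gives 0 or 16.
print([sum(x != 0.0 for row in ch for x in row) for ch in regular])
print([sum(x != 0.0 for row in ch for x in row) for ch in spatial])
```

With spatial dropout every channel is either fully zeroed or fully kept. The usual motivation is that neighbouring activations in a convolutional feature map are strongly correlated, so zeroing isolated pixels removes little information.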

By the way, good luck with the nerve challenge. :) I'm very curious what you came up with. Just between us: if you use logistic regression (and maybe even Dice), small batch sizes (2 or 4) gave me a better loss. I don't know if it really helps the end result, though; in this challenge there were so many other factors that I could not really notice the difference.
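For reference, here is a minimal sketch of the two losses mentioned, assuming "logistic regression" means a per-pixel sigmoid output trained with binary cross-entropy, and that Dice is used in its common "soft" form on predicted probabilities. The function names, the clamping `eps` and the `smooth` term are assumptions for illustration, not taken from the repository.

```python
import math

# Predictions and targets are flattened masks: probs[i] is the predicted
# probability that pixel i is foreground, targets[i] is 0 or 1.


def binary_cross_entropy(probs, targets, eps=1e-7):
    """Mean per-pixel logistic (binary cross-entropy) loss."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probs)


def soft_dice_loss(probs, targets, smooth=1.0):
    """1 - soft Dice coefficient, computed over all pixels at once."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


target = [1, 1, 0, 0]
print(binary_cross_entropy([0.9, 0.8, 0.2, 0.1], target))
print(soft_dice_loss([0.9, 0.8, 0.2, 0.1], target))
```

Note the structural difference: cross-entropy is an average of independent per-pixel terms, while Dice is a ratio over the whole mask (or whole batch, if pixels are pooled across images), so it is not a per-pixel average.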

Ha-ha, thanks for the wishes and advice! I think my solution is pretty basic, and with your experience you could easily achieve better results :)

I'm using Dice loss. I didn't notice much improvement from dropout (at least the regular version of it). I tried smaller batch sizes but ran into convergence issues at the beginning of training; maybe I should try a smaller learning rate...
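One way to act on the "smaller learning rate" idea is sketched below, combining two common heuristics that were not tested in this thread: scaling the learning rate linearly with the batch size, and a linear warm-up over the first steps to ease early convergence problems. The function name, the 500-step warm-up and the baseline of lr 1e-3 at batch size 16 are all hypothetical values chosen for illustration.

```python
# Two common heuristics (not tested in this thread): scale the learning rate
# linearly with the batch size, and ramp it up linearly during a short warm-up.


def learning_rate(step, base_lr, base_batch_size, batch_size, warmup_steps=500):
    """Learning rate for a given training step (0-based)."""
    # Linear scaling rule: keep base_lr / base_batch_size constant.
    target_lr = base_lr * batch_size / base_batch_size
    # Linear warm-up: grow from target_lr / warmup_steps up to target_lr.
    if step < warmup_steps:
        return target_lr * (step + 1) / warmup_steps
    return target_lr


# Hypothetical baseline: lr 1e-3 tuned for batch size 16, now training with batch size 4.
for step in (0, 249, 499, 10_000):
    print(step, learning_rate(step, base_lr=1e-3, base_batch_size=16, batch_size=4))
```

Going from batch size 16 to 4 in this example cuts the target learning rate by a factor of four, to 2.5e-4; whether that fixes the convergence issues here is untested.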

I also noticed that the first dropout layer is after pool4 instead of pool5. I'm even thinking of removing all the dropout layers. Would that make the results worse?

Dropout helped a little, but not much, if I remember correctly.

@juliandewit Thanks for the reply. I'm giving the nerve challenge a try.