loky

AttributeError: 'NoneType' object has no attribute 'fileno'

Tried switching our code from concurrent.futures to loky and can't get past the error below.

Even if I do what this says:

https://stackoverflow.com/questions/45126368/nonetype-object-has-no-attribute-fileno

it doesn't help.

2018-11-01 13:56:28,766 A task has failed to un-serialize. Please ensure that the arguments of the function are all picklable.

2018-11-01 13:56:28,769 loky.process_executor._RemoteTraceback:

'''

Traceback (most recent call last):

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/process_executor.py", line 383, in _process_worker

call_item = call_queue.get(block=True, timeout=timeout)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/multiprocessing/queues.py", line 113, in get

return _ForkingPickler.loads(res)

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 41, in <module>

import datatable as dt

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/__init__.py", line 8, in <module>

from .dt_append import rbind, cbind

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/dt_append.py", line 8, in <module>

from datatable.utils.misc import plural_form as plural

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/utils/misc.py", line 8, in <module>

from .typechecks import TImportError

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/utils/typechecks.py", line 14, in <module>

from datatable.utils.terminal import term

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/utils/terminal.py", line 62, in <module>

term = MyTerminal()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/utils/terminal.py", line 20, in __init__

super().__init__()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/blessed/terminal.py", line 171, in __init__

self._keyboard_fd = sys.__stdin__.fileno()

AttributeError: 'NoneType' object has no attribute 'fileno'

'''

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 2001, in call_subprocess_onetask

kwargs=kwargs, out=ret_list, justcount=False, proctitle=proctitle)

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 1513, in submit_tryget

self.initpool(proctitle=proctitle, proctitle_sub=proctitle_sub)

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 1147, in initpool

self.submit_dummy(None, dummy_function, (), {}, proctitle=proctitle, proctitle_sub=proctitle_sub)

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 1408, in submit_dummy

result.result()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/concurrent/futures/_base.py", line 432, in result

return self.__get_result()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/concurrent/futures/_base.py", line 384, in __get_result

raise self._exception

loky.process_executor.BrokenProcessPool: A task has failed to un-serialize. Please ensure that the arguments of the function are all picklable.

2018-11-01 13:56:28,769 Failed call_subprocesss_onetask again for func=<function get_have_lightgbm_subprocess at 0x7fc495a98840> after exception=A task has failed to un-serialize. Please ensure that the arguments of the function are all picklable.

_________________________________________________________________________________________________________ ERROR collecting tests/test_system_alt/test_gpu_lock_check.py __________________________________________________________________________________________________________

loky.process_executor._RemoteTraceback:

'''

Traceback (most recent call last):

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/process_executor.py", line 383, in _process_worker

call_item = call_queue.get(block=True, timeout=timeout)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/multiprocessing/queues.py", line 113, in get

return _ForkingPickler.loads(res)

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 41, in <module>

import datatable as dt

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/__init__.py", line 8, in <module>

from .dt_append import rbind, cbind

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/dt_append.py", line 8, in <module>

from datatable.utils.misc import plural_form as plural

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/utils/misc.py", line 8, in <module>

from .typechecks import TImportError

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/utils/typechecks.py", line 14, in <module>

from datatable.utils.terminal import term

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/utils/terminal.py", line 62, in <module>

term = MyTerminal()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/datatable/utils/terminal.py", line 20, in __init__

super().__init__()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/blessed/terminal.py", line 171, in __init__

self._keyboard_fd = sys.__stdin__.fileno()

AttributeError: 'NoneType' object has no attribute 'fileno'

A simple attempt to repro:

import datatable as dt

import os

from time import sleep

from loky import get_reusable_executor

def say_hello(k):

    pid = os.getpid()

    print("Hello from {} with arg {}".format(pid, k))

    sleep(.01)

    return pid

# Create an executor with 4 worker processes, that will

# automatically shutdown after idling for 2s

executor = get_reusable_executor(max_workers=4, timeout=2)

res = executor.submit(say_hello, 1)

print("Got results:", res.result())

results = executor.map(say_hello, range(50))

n_workers = len(set(results))

print("Number of used processes:", n_workers)

assert n_workers == 4

Doesn't fail. So it's unclear what is going on.

This is the only change I made to my code:

use_loky = True

if use_loky:

    from concurrent.futures import ThreadPoolExecutor as pool_thread

    #from loky import get_reusable_executor as pool_fork

    from loky import ProcessPoolExecutor as pool_fork

    from loky import TimeoutError as pool_timeout

    from loky import BrokenProcessPool as pool_broken

    from concurrent.futures import as_completed as pool_as_completed

    sys.__stdin__ = sys.stdin

    sys.__stdout__ = sys.stdout

else:

    from concurrent.futures import ThreadPoolExecutor as pool_thread

    from concurrent.futures import ProcessPoolExecutor as pool_fork

    from concurrent.futures import TimeoutError as pool_timeout

    from concurrent.futures.process import BrokenProcessPool as pool_broken

    from concurrent.futures import as_completed as pool_as_completed

I can reproduce this with loky and the following script:

from loky import get_reusable_executor

def import_dt(k):

    import datatable as dt

# Create an executor with 2 worker processes

executor = get_reusable_executor(max_workers=2)

executor.submit(import_dt, 1).result()

It was not failing in your previous example because the function say_hello did not use the module datatable, so it was not imported in the worker.

The issue here seems to be linked to the MyTerminal object, instantiated when importing datatable, which expects stdin to implement a fileno method. However, when the new process is spawned, we set stdin to None. It should be fixed on datatable master (see here).
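
The failure described above does not need loky or datatable to trigger: any import-time call to `sys.__stdin__.fileno()` raises once stdin is `None`. Below is a minimal sketch of a defensive guard. The helper name `fileno_or_none` is hypothetical (it is not part of blessed or datatable), and this is not necessarily how datatable addressed the problem.

```python
import io
import sys


def fileno_or_none(stream):
    """Return the file descriptor of `stream`, or None if it has no usable one.

    `stream` may be None, which is what sys.__stdin__ can be in a worker
    process that was started without a standard input.
    """
    if stream is None:
        return None
    try:
        return stream.fileno()
    except (AttributeError, ValueError, OSError):
        # Closed files raise ValueError; in-memory streams raise
        # io.UnsupportedOperation, which subclasses OSError and ValueError.
        return None


# Typical call site: tolerate a missing or non-file stdin.
keyboard_fd = fileno_or_none(sys.__stdin__)

print(fileno_or_none(None))           # None
print(fileno_or_none(io.StringIO()))  # None
```

With a guard like this, code that only needs the descriptor for optional terminal features can skip them when `keyboard_fd` is `None` instead of failing at import time.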

Can I ask why you do something different than concurrent.futures in this regard? It seems to break compatibility. Thanks!

Now that datatable has fixed that blessed use of stdin/stdout, I still get:

Exception in thread QueueManagerThread:

Traceback (most recent call last):

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/threading.py", line 916, in _bootstrap_inner

self.run()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/threading.py", line 864, in run

self._target(*self._args, **self._kwargs)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/process_executor.py", line 635, in _queue_management_worker

thread_wakeup.clear()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/process_executor.py", line 157, in clear

while self._reader.poll():

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/multiprocessing/connection.py", line 255, in poll

self._check_closed()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/multiprocessing/connection.py", line 136, in _check_closed

raise OSError("handle is closed")

OSError: handle is closed

Also get:

Exception in thread QueueManagerThread:

Traceback (most recent call last):

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/threading.py", line 916, in _bootstrap_inner

self.run()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/threading.py", line 864, in run

self._target(*self._args, **self._kwargs)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/process_executor.py", line 635, in _queue_management_worker

thread_wakeup.clear()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/process_executor.py", line 158, in clear

self._reader.recv_bytes()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/multiprocessing/connection.py", line 216, in recv_bytes

buf = self._recv_bytes(maxlength)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/multiprocessing/connection.py", line 407, in _recv_bytes

buf = self._recv(4)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/multiprocessing/connection.py", line 379, in _recv

chunk = read(handle, remaining)

OSError: [Errno 9] Bad file descriptor

The discrepancy with concurrent.futures is because we do not start the processes with fork by default. Starting the processes with fork breaks the POSIX standard and can lead to unexpected behavior, in particular with third-party libraries such as openmp.
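
To see the same kind of discrepancy with the standard library alone, one option is to run a concurrent.futures pool with a start method other than fork. The sketch below assumes Python 3.7 or later (the `mp_context` argument does not exist in 3.6) and uses the standard "spawn" method, not loky's own context; `non_fork_start_methods` and `run_without_fork` are hypothetical helper names.

```python
import multiprocessing as mp
from concurrent.futures import ProcessPoolExecutor


def non_fork_start_methods():
    """List the start methods available on this platform, excluding plain fork."""
    return [m for m in mp.get_all_start_methods() if m != "fork"]


def run_without_fork(fn, arg, method="spawn"):
    """Run fn(arg) in a single worker started with `method` instead of fork."""
    context = mp.get_context(method)
    with ProcessPoolExecutor(max_workers=1, mp_context=context) as executor:
        return executor.submit(fn, arg).result()
```

Because "spawn" re-imports the main module in the child, a call such as `run_without_fork(abs, -3)` should be made under an `if __name__ == "__main__":` guard. Code that works under fork but fails here is likely depending on state inherited from the parent process.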

As for your OSError, this is really weird. These are standard multiprocessing objects, so they should work. Could you share the code that leads to these exceptions? Also, do you know when in your code this error happens? Is it during the initialization of the Executor or during the shutdown?

After a while, I was able to narrow it down to this:

self.p.shutdown(wait=self.shutdown_wait)

I was calling this with self.p assigned to your pool, just like I would with concurrent.futures. However, for some reason this fails in the way shown above for your pool.

The other thing I notice is using your pool leads to very poor performance if I use:

from loky import ProcessPoolExecutor as pool_fork

If I just make that change above, and run a test that does the fork etc. over and over 100 times, your pool takes about 10X longer per fork. Is this expected?

But if I use your reusable version it seems ok:

from loky import get_reusable_executor as pool_fork

But this scares me. Why is the loky version of ProcessPoolExecutor so extremely slow?

Also, if I try to use your reusable pool class for my normal full code, then I get yet another problem:

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 1410, in submit_dummy

result.result()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/concurrent/futures/_base.py", line 432, in result

return self.__get_result()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/concurrent/futures/_base.py", line 384, in __get_result

raise self._exception

loky.process_executor.TerminatedWorkerError: A worker process managed by the executor was unexpectedly terminated. This could be caused by a segmentation fault while calling the function or by an excessive memory usage causing the Operating System to kill the worker. The exit codes of the workers are {SIGTERM(-15)}

This is just for submitting a dummy do-nothing function so the pool workers get initialized and I know their pids. concurrent.futures has no issues.

Your ProcessPoolExecutor doesn't have this failure, but as I said that one is way too slow.

Ok, I narrowed that down to me sending a SIGTERM and then SIGKILL once the task is done after the shutdown in order to ensure the workers really terminate.

So this all seems related to the reusable pool not being able to be fully shut down. How do I do that?

10358 jon 20 0 96656 22308 7748 R 14.9 0.1 0:00.45 /home/jon/.pyenv/versions/3.6.4/bin/python -m loky.backend.popen_loky_posix --process-name LokyProcess-3 --pipe 17 --semaphore 9

The other thing you seem to do is mess with proctitles and command lines. This makes loky break compatibility with anything that relies upon proctitles or command lines. I use setproctitle package to change the process title/command line and I rely upon that heavily.

I get yet another problem when trying to again use the full code I have. I get the below for numerous tests.

Yet some other problem that never occurs with concurrent.futures.

[gw13] FAILED tests/test_models/test_stacking.py::test_rulefit_regression

___________________________________________________________________________________________________________________________________________________________________ test_rulefit_regression ____________________________________________________________________________________________________________________________________________________________________

[gw13] linux -- Python 3.6.4 /home/jon/.pyenv/versions/3.6.4/bin/python

loky.process_executor._RemoteTraceback:

"""

loky.process_executor._RemoteTraceback:

"""

Traceback (most recent call last):

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/backend/queues.py", line 150, in _feed

obj_ = dumps(obj, reducers=reducers)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/backend/reduction.py", line 230, in dumps

dump(obj, buf, reducers=reducers, protocol=protocol)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/backend/reduction.py", line 223, in dump

_LokyPickler(file, reducers=reducers, protocol=protocol).dump(obj)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/cloudpickle/cloudpickle.py", line 284, in dump

return Pickler.dump(self, obj)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 409, in dump

self.save(obj)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 521, in save

self.save_reduce(obj=obj, *rv)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 634, in save_reduce

save(state)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 476, in save

f(self, obj) # Call unbound method with explicit self

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 821, in save_dict

self._batch_setitems(obj.items())

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 847, in _batch_setitems

save(v)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 476, in save

f(self, obj) # Call unbound method with explicit self

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 736, in save_tuple

save(element)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 476, in save

f(self, obj) # Call unbound method with explicit self

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/cloudpickle/cloudpickle.py", line 703, in save_instancemethod

self.save_reduce(types.MethodType, (obj.__func__, obj.__self__), obj=obj)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 610, in save_reduce

save(args)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 476, in save

f(self, obj) # Call unbound method with explicit self

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 736, in save_tuple

save(element)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 476, in save

f(self, obj) # Call unbound method with explicit self

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/cloudpickle/cloudpickle.py", line 419, in save_function

self.save_function_tuple(obj)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/cloudpickle/cloudpickle.py", line 579, in save_function_tuple

save(state)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 476, in save

f(self, obj) # Call unbound method with explicit self

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 821, in save_dict

self._batch_setitems(obj.items())

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 847, in _batch_setitems

save(v)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 476, in save

f(self, obj) # Call unbound method with explicit self

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 821, in save_dict

self._batch_setitems(obj.items())

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 847, in _batch_setitems

save(v)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/pickle.py", line 496, in save

rv = reduce(self.proto)

TypeError: 'NoneType' object is not callable

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/process_executor.py", line 410, in _process_worker

r = call_item.fn(*call_item.args, **call_item.kwargs)

File "/home/jon/h2oai/tests/test_models/test_stacking.py", line 915, in run_subprocess

model.fit(X=train_X.copy(), y=train_y)

File "/home/jon/h2oai/h2oaicore/models.py", line 6549, in fit

early_stopping_limit=early_stopping_limit, verbose=verbose, **kwargs)

File "/home/jon/h2oai/h2oaicore/models.py", line 2556, in fit

kwargs=mykwargs, out=res)

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 1528, in submit_tryget

overloadcore_factor=self.overloadcore_factor)

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 1217, in try_get_internal

res = future[wfut].result(timeout=sleeptouse)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/concurrent/futures/_base.py", line 425, in result

return self.__get_result()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/concurrent/futures/_base.py", line 384, in __get_result

raise self._exception

_pickle.PicklingError: Could not pickle the task to send it to the workers.

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/home/jon/h2oai/tests/test_models/test_stacking.py", line 325, in test_rulefit_regression

num_classes=1, random_state=1234), "Pressure9am", 1, test_name=kwargs.pop('test_name', None))

File "/home/jon/h2oai/tests/test_models/test_stacking.py", line 884, in run

call_subprocess_onetask(run_subprocess, (model, target, num_classes, test_name), {})

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 2007, in call_subprocess_onetask

p.finish()

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 1585, in finish

timeout=timeouttouse)

File "/home/jon/h2oai/h2oaicore/systemutils.py", line 1217, in try_get_internal

res = future[wfut].result(timeout=sleeptouse)

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/concurrent/futures/_base.py", line 432, in result

return self.__get_result()

File "/home/jon/.pyenv/versions/3.6.4/lib/python3.6/concurrent/futures/_base.py", line 384, in __get_result

raise self._exception

_pickle.PicklingError: Could not pickle the task to send it to the workers.

Even the reusable pool is very slow compared to concurrent.futures for many tests that work.

I also get these kinds of errors:

platform linux -- Python 3.6.4

pytest==3.5.1

py==1.6.0

pluggy==0.6.0

rootdir: /home/jon/h2oai

inifile: pytest.ini

plugins: xdist-1.22.2, tldr-0.1.5, timeout-1.2.1, repeat-0.7.0, instafail-0.4.0, forked-0.2, cov-2.5.1

cachedir: .pytest_cache

pydev debugger: process 31859 is connecting

/home/jon/.pyenv/versions/3.6.4/lib/python3.6/site-packages/loky/process_executor.py:698: UserWarning: A worker stopped while some jobs were given to the executor. This can be caused by a too short worker timeout or by a memory leak.

"timeout or by a memory leak.", UserWarning

Error in atexit._run_exitfuncs:

Traceback (most recent call last):

File "/opt/pycharm-community-2017.2.3/helpers/pydev/pydevd.py", line 1307, in stoptrace

get_frame(), also_add_to_passed_frame=True, overwrite_prev_trace=True, dispatch_func=lambda *args:None)

File "/opt/pycharm-community-2017.2.3/helpers/pydev/pydevd.py", line 1038, in exiting

sys.stdout.flush()

ValueError: I/O operation on closed file.

pydev debugger: process 32119 is connecting

Error in atexit._run_exitfuncs:

Traceback (most recent call last):

File "/opt/pycharm-community-2017.2.3/helpers/pydev/pydevd.py", line 1307, in stoptrace

get_frame(), also_add_to_passed_frame=True, overwrite_prev_trace=True, dispatch_func=lambda *args:None)

File "/opt/pycharm-community-2017.2.3/helpers/pydev/pydevd.py", line 1038, in exiting

sys.stdout.flush()

ValueError: I/O operation on closed file.

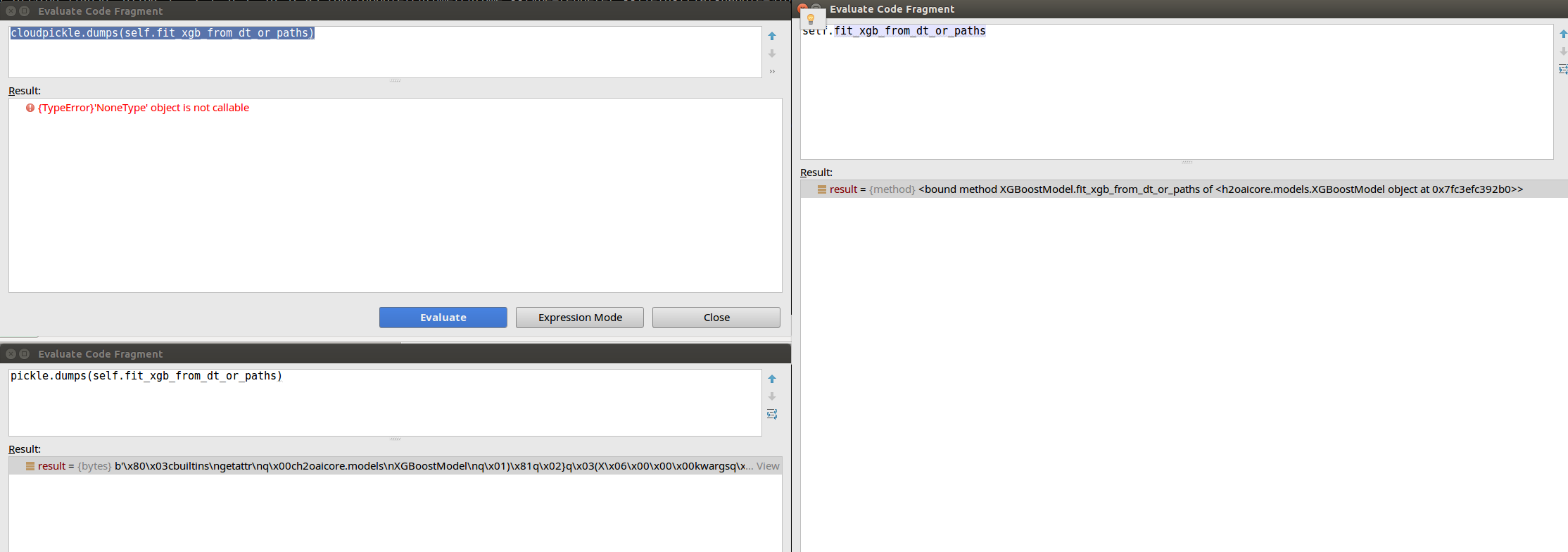

With the pickling problem, it seems cloudpickle is unable to pickle methods while pickle can. This is despite cloudpickle claiming improvements to pickle.

Pausing in my code before the failure, I see:

So maybe your choice to use cloudpickle was not a good one?

Overall, so far, still these issues:

1. normal shutdown call causes loky to fail

2. loky is much slower, especially its version of ProcessPoolExecutor

3. "ValueError: I/O operation on closed file" errors

4. pickling failures by cloudpickle

5. unexpected proctitle/command line modifications

#1 may not be a deal breaker, but #2-#5 definitely are.

First of all, what is your goal in using loky? It is unclear, and you seem to be confused about the purpose of loky.

The goal of loky is to have a cross-platform, cross-version implementation of the ProcessPoolExecutor that works in most cases without needing to tweak anything. We made some design choices that can lead to decreased performance in some cases, but it depends on your needs.

Normal shutdown call causes loky to fail

In all our tests, normal shutdown works properly. So it is probably not a normal shutdown... Could you provide a minimal reproducing example (MCVE) so we can investigate this failure?

loky is much slower, especially its version of ProcessPoolExecutor

Yes, indeed. The implementation of ProcessPoolExecutor in concurrent.futures starts its processes with fork, which can break the POSIX convention and lead to bad interactions with third-party libraries (such as openmp). The default in loky is to start processes with the loky context, which is typically slower. To mitigate this issue, we provide get_reusable_executor, which avoids restarting the processes over and over. So it is expected to be slower if you use ProcessPoolExecutor, as you are restarting processes every time, and starting processes can be up to 100x slower with our implementation than with fork. Note that you should get similar performance with ProcessPoolExecutor by setting the context='fork' argument, but it is not advised as it can lead to frozen processes with third-party libraries.

Also, the reusable executor might be slower because of the serialization. As we rely on cloudpickle, it could be slower than pickle. It depends on your use case and I cannot be more specific without a proper MCVE.
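
The startup cost discussed above can be measured by timing the same tasks once with a fresh pool per task and once with a single shared pool. This is a rough sketch, not a rigorous benchmark; `time_fresh_pools`, `time_shared_pool`, and the `make_executor` parameter are hypothetical names, and the demo uses a thread pool only so that it runs anywhere.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def time_fresh_pools(make_executor, n_rounds):
    """Create and shut down a new executor for every task (the slow pattern)."""
    start = time.perf_counter()
    for i in range(n_rounds):
        with make_executor() as executor:
            executor.submit(abs, -i).result()
    return time.perf_counter() - start


def time_shared_pool(make_executor, n_rounds):
    """Create one executor and reuse it for every task."""
    start = time.perf_counter()
    with make_executor() as executor:
        for i in range(n_rounds):
            executor.submit(abs, -i).result()
    return time.perf_counter() - start


def make_thread_pool():
    # Stand-in factory; swap in a process pool factory to compare start methods.
    return ThreadPoolExecutor(max_workers=1)


fresh = time_fresh_pools(make_thread_pool, 20)
shared = time_shared_pool(make_thread_pool, 20)
print("fresh pools: {:.4f}s, shared pool: {:.4f}s".format(fresh, shared))
```

Passing a factory such as `lambda: ProcessPoolExecutor(max_workers=1)`, using the class from either concurrent.futures or loky, should show how much of the per-task time is process startup rather than the task itself.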

"ValueError: I/O operation on closed file" errors

This is caused by a bad interaction with PyCharm, which seems to try to get some traces from the workers. It seems to work with concurrent.futures, but only with fork. I am not sure we can do anything about it, as it seems to be caused by an uncaught error in PyCharm at the exit of the workers.

pickling failures by cloudpickle.

You can use set_loky_pickler('pickle') to use pickle in loky if you don't need the functionality of cloudpickle, such as pickling interactively defined functions, and you find that pickle can serialize more objects. Also, you could open an issue on the cloudpickle repo for this use case. But I suspect that, once again, you don't see this error with concurrent.futures because it relies on fork, and that using pickle will also break in this case. This is probably due to the fact that you use xgboost, which has some internal C objects that are not serializable.
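
One way to narrow down which part of an object is not serializable is to try pickling each of its instance attributes separately with the standard pickle module. A minimal sketch follows; `unpicklable_attributes` is a hypothetical helper, it only inspects attributes stored in the instance `__dict__`, and it says nothing about how cloudpickle would treat the same values.

```python
import pickle


def unpicklable_attributes(obj, protocol=pickle.HIGHEST_PROTOCOL):
    """Return {attribute name: exception} for attributes that pickle rejects."""
    failures = {}
    for name, value in vars(obj).items():
        try:
            pickle.dumps(value, protocol=protocol)
        except Exception as exc:  # pickle raises several exception types
            failures[name] = exc
    return failures
```

Calling this on the model passed to the executor gives a short list of attribute names to put in a minimal reproducing example.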

Unexpected Proctitle/command line modifications

The new processes are started with a different command line, so we cannot change it without messing with OS-specific implementation details. Keeping the same proctitle is only a side effect of the fork implementation and is not guaranteed, for instance on Windows. As our goal is to be cross-platform, we do not change the way the processes are named.

Overall, you seem to rely heavily on implementation details of fork that are not guaranteed in loky. The goal of loky is to move away from fork, since it causes several critical errors with scientific computing libraries and is not cross-platform.

Also, if you want more specific answers, please provide an MCVE. The errors you report just include the traceback and are often linked to some dependencies, so they are hard to analyze without an actual reproducing script.

No, the xgboost stuff is just one example, and I already showed that it is fully picklable in the image above.

I am not relying upon fork, just the behavior of concurrent.futures. You give the impression that loky only improves upon it, but I see you have broken compatibility for various reasons or have bugs that require more testing. I could help you debug, but I don't have much time, and the payoff may be low if it never really works properly.

A more reliable concurrent.futures, with proper handling of threaded apps that rely upon OpenMP, is definitely what I want. XGBoost, lightgbm, and datatable all use OpenMP. So no, I am not confused.

So maybe your choice to use cloudpickle was not a good one?

Please report a minimal example on the cloudpickle issue tracker so that we can fix it (if it's picklable using the pickle implementation from the standard library).