RuntimeError: CUDA error: invalid configuration argument

Hi, thanks for sharing your code. When I tested scene scene0000_01 using Scannet0000_01.ckpt, I got a CUDA error. Can you help me? Thanks.

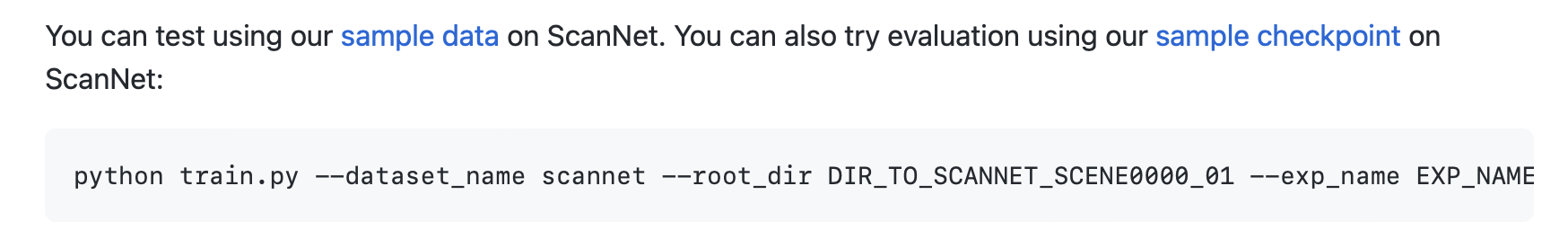

python train.py --dataset_name scannet --root_dir /data/scannet/scans/scene0000_01 --exp_name try_pretrain_scannnet0000_01 --val_only --ckpt_path ./Scannet0000_01.ckpt

File "/home/NeRFusion/models/nerfusion.py", line 161, in sample_uniform_and_occupied_cells cells += [(torch.cat([indices1, indices2]), torch.cat([coords1, coords2]))] RuntimeError: CUDA error: invalid configuration argument CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect. For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

Thanks for this feedback. Can you provide more information? Are you using the provided data and checkpoint? How many computing devices are you using? Also could you try with CUDA_LAUNCH_BLOCKING=1 as suggested in the error message and post the logs here?
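For anyone unsure how to apply that suggestion, here is a minimal sketch of one way to rerun a command with synchronous CUDA kernel launches, so the traceback points at the call that actually failed. The helper names `blocking_env` and `run_blocking` are made up for illustration and are not part of NeRFusion.

```python
import os
import subprocess
import sys


def blocking_env(base_env=None):
    """Return a copy of the environment with CUDA_LAUNCH_BLOCKING=1 set."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_LAUNCH_BLOCKING"] = "1"
    return env


def run_blocking(cmd):
    """Run cmd (a list of arguments) with synchronous CUDA launches.

    Returns the exit code of the child process.
    """
    return subprocess.run(cmd, env=blocking_env()).returncode


if __name__ == "__main__" and len(sys.argv) > 1:
    # Everything after the script name is treated as the command to run.
    sys.exit(run_blocking(sys.argv[1:]))
```

Saved under a hypothetical name such as `run_blocking.py`, it could be used as `python run_blocking.py python train.py --dataset_name scannet ...`. In a shell, prefixing the original command with `CUDA_LAUNCH_BLOCKING=1` has the same effect.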

I have the exact same issue. Yes, I used your instructions here.

So I used your data and your checkpoint exactly as provided. I only use one GPU, a Tesla V100-PCIE-32GB.

Here's my env info

`PyTorch version: 1.12.1 Is debug build: False CUDA used to build PyTorch: 10.2 ROCM used to build PyTorch: N/A

OS: CentOS Linux 7 (Core) (x86_64) GCC version: (wliu25-vector2) 7.5.0 Clang version: Could not collect CMake version: version 3.25.1 Libc version: glibc-2.17

Python version: 3.8.15 (default, Nov 24 2022, 15:19:38) [GCC 11.2.0] (64-bit runtime) Python platform: Linux-3.10.0-1127.18.2.el7.x86_64-x86_64-with-glibc2.17 Is CUDA available: True CUDA runtime version: 10.2.89 GPU models and configuration: GPU 0: Tesla V100-PCIE-32GB GPU 1: Tesla V100-PCIE-32GB GPU 2: Tesla V100-PCIE-32GB GPU 3: Tesla V100-PCIE-32GB GPU 4: Tesla V100-PCIE-32GB GPU 5: Tesla V100-PCIE-32GB GPU 6: Tesla V100-PCIE-32GB GPU 7: Tesla V100-PCIE-32GB

Nvidia driver version: 440.95.01 cuDNN version: Could not collect HIP runtime version: N/A MIOpen runtime version: N/A Is XNNPACK available: True

Versions of relevant libraries: [pip3] numpy==1.23.5 [pip3] pytorch-lightning==1.7.7 [pip3] torch==1.12.1 [pip3] torch-scatter==2.1.0 [pip3] torchaudio==0.12.1 [pip3] torchmetrics==0.11.0 [pip3] torchsparse==1.4.0 [pip3] torchvision==0.13.1 [conda] blas 1.0 mkl [conda] cudatoolkit 10.2.89 hfd86e86_1 [conda] ffmpeg 4.3 hf484d3e_0 pytorch [conda] mkl 2021.4.0 h06a4308_640 [conda] mkl-service 2.4.0 py38h7f8727e_0 [conda] mkl_fft 1.3.1 py38hd3c417c_0 [conda] mkl_random 1.2.2 py38h51133e4_0 [conda] numpy 1.23.5 py38h14f4228_0 [conda] numpy-base 1.23.5 py38h31eccc5_0 [conda] pytorch 1.12.1 py3.8_cuda10.2_cudnn7.6.5_0 pytorch [conda] pytorch-lightning 1.7.7 pypi_0 pypi [conda] pytorch-mutex 1.0 cuda pytorch [conda] pytorch-scatter 2.1.0 py38_torch_1.12.0_cu102 pyg [conda] torchaudio 0.12.1 py38_cu102 pytorch [conda] torchmetrics 0.11.0 pypi_0 pypi [conda] torchsparse 1.4.0 pypi_0 pypi [conda] torchvision 0.13.1 py38_cu102 pytorch`

Adding CUDA_LAUNCH_BLOCKING=1 made no significant difference.

I guess it might be related to:

- the CUDA version (I'm on 10.2),

- the GPU's compute capability (mine is below 7.5).

Here's a related issue: https://github.com/pytorch/pytorch/issues/48573
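To make it easier to compare setups, here is a small sketch that reports the CUDA version PyTorch was built with and the compute capability of each visible GPU. The function names are illustrative, and the 7.5 threshold is only the guess from this thread, not a confirmed requirement of the code.

```python
def describe_gpu():
    """Return (cuda_build_version, [(name, (major, minor)), ...]).

    Returns None if PyTorch is not installed or no CUDA device is visible.
    """
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    devices = [
        (torch.cuda.get_device_name(i), torch.cuda.get_device_capability(i))
        for i in range(torch.cuda.device_count())
    ]
    return torch.version.cuda, devices


def below_capability(capability, threshold=(7, 5)):
    """True if a (major, minor) capability is lower than the threshold.

    The default threshold is an assumption taken from this discussion.
    """
    return tuple(capability) < tuple(threshold)


if __name__ == "__main__":
    info = describe_gpu()
    if info is None:
        print("PyTorch with a visible CUDA device was not found.")
    else:
        cuda_version, devices = info
        print(f"CUDA used to build PyTorch: {cuda_version}")
        for name, cap in devices:
            note = " (below 7.5)" if below_capability(cap) else ""
            print(f"{name}: compute capability {cap[0]}.{cap[1]}{note}")
```

A Tesla V100 reports compute capability 7.0, so it falls below the 7.5 threshold used here.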

Hi, I'm wondering whether there's any update on my problem? I would appreciate it a lot if you could help me.

I got the same result. You could check the issue at https://github.com/kwea123/ngp_pl/issues/95, since the author builds on the ngp_pl code; https://github.com/jetd1/NeRFusion/issues/8#issue-1409552026 also shows similar results with a size of 0. Maybe scene0000_01.ckpt has something wrong with it... And G.ckpt cannot be loaded...
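One way to test the "size 0" suspicion is to list any tensors in the checkpoint that have no elements. The sketch below assumes the checkpoint follows the usual PyTorch Lightning layout, with weights under a `state_dict` key; the helper names are hypothetical, and an empty tensor would not by itself prove the checkpoint is the cause.

```python
import sys


def find_empty_entries(shapes):
    """Return the sorted names whose shape contains a zero-length dimension."""
    return sorted(name for name, shape in shapes.items() if 0 in tuple(shape))


def checkpoint_shapes(path):
    """Load a checkpoint on the CPU and map each tensor name to its shape."""
    import torch  # imported lazily so find_empty_entries works without PyTorch

    ckpt = torch.load(path, map_location="cpu")
    # Assumption: Lightning-style checkpoint; fall back to a bare state dict.
    state = ckpt.get("state_dict", ckpt)
    return {k: tuple(v.shape) for k, v in state.items() if hasattr(v, "shape")}


if __name__ == "__main__" and len(sys.argv) > 1:
    shapes = checkpoint_shapes(sys.argv[1])
    empty = find_empty_entries(shapes)
    print(f"{len(shapes)} tensors, {len(empty)} with zero elements")
    for name in empty:
        print(f"  {name}: shape {shapes[name]}")
```

For example, `python check_ckpt.py ./Scannet0000_01.ckpt` (the script name is arbitrary) prints every zero-element tensor together with its shape.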

I use cu113+torch12.0.1 and installed the dependencies, but an error occurs when importing vren: `vren.cpython-38-x86_64-linux-gnu.so: undefined symbol: _ZN2at4_ops5zeros4callEN3c108ArrayRefINS2_6SymIntEEENS2_8optionalINS2_10ScalarTypeEEENS6_INS2_6LayoutEEENS6_INS2_6DeviceEEENS6_IbEE`. Can anyone tell me how to resolve this?

@jetd1 I have the exact same issue. Could you re-upload a working pre-trained checkpoint?