STRG

About your dataset

Hi, I'm a beginner and could not get the Kinetics dataset, so I can only read your code. I have some questions:

(1) In videodataset.py, the class videodataset returns a clip and a target during training. I notice that the length of the clip equals the length of frame_indices, which is 10, but in your paper you select 32 frames as input. Could you tell me where the 32 frames are selected?

(2) In strg.py, I tested another input size, 1332224224, with batch size 1 and depth 32, but the batch size of the output of the extractor and reducer is 2. Does that mean I must process all my input with batch_size = 4? Could I use other input sizes?

These are basic problems, but they have been bothering me for several days. I would be very grateful if you could help me. Thanks.

Hi @IVparagigm,

1-1) Could you tell me which script you ran? On my side, the length of frame_indices is 32.

1-2) Also, I am not the author of the paper.

- I cannot understand the size of your input. Maybe there is a formatting mismatch. Is it 1 * 3 * 32 * 224 * 224?

The batch_size is preserved.

1) In Videodataset.py, class Videodataset, _make_dataset():

    frame_indices = list(range(segment[0], segment[1]))
    sample = {
        'video': video_path,
        'segment': segment,
        'frame_indices': frame_indices,
        'video_id': video_ids[i],
        'label': label_id
    }

And I got the annotation like this:

    "---QUuC4vJs": {
        "annotations": {
            "label": "testifying",
            "segment": [84.0, 94.0]
        },

So I assumed the length of frame_indices is 10. I'm sorry, I can't get the dataset, so I am just reading your code to infer the size of the data.

2) Yes, this is my first time using GitHub, so something went wrong with my comment formatting. This is my test code:

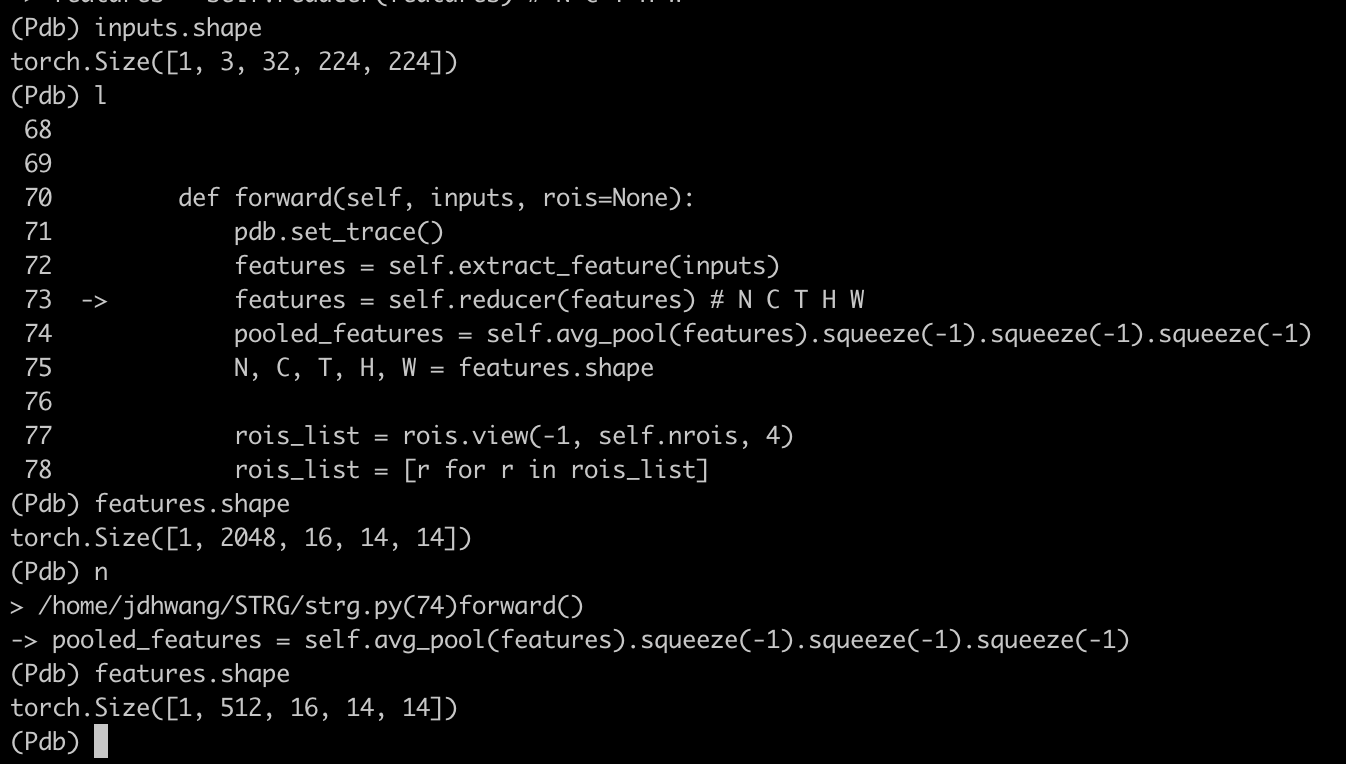

    rois = torch.rand((1, 32, 10, 4))
    inputs = torch.rand((1, 3, 32, 224, 224))
    strg = STRG(model)
    out = strg(inputs, rois)

When I run this, I get a feature of size 2 * 512 * 7 * 7, but I expected an output of size 32 * 512 * 7 * 7 because the depth of the input is 32 (I sent 32 frames into the network). Could you tell me what I am doing wrong?

Thank you very much for any help.

@IVparagigm

- If I remember correctly, that one is from https://github.com/kenshohara/3D-ResNets-PyTorch/blob/master/datasets/videodataset.py and it is for dense sampling. The 32 frames are sampled in a later stage.

- What is `model` in your code? Is it `resnet_strg`?
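To illustrate the dense-sampling point above, here is a minimal sketch of how a temporal transform can turn any list of frame indices into a fixed 32-frame clip. The function name `temporal_random_crop` and its loop-padding behaviour are assumptions made for illustration. This is not the actual transform class from either repository, which may differ.

```python
import random


def temporal_random_crop(frame_indices, size=32, rng=random):
    """Hypothetical transform: pick `size` consecutive indices.

    If the input list is shorter than `size`, the clip is padded by
    looping back to its first frames until it is long enough.
    """
    # Choose a random start so that a full window fits when possible.
    start = rng.randint(0, max(0, len(frame_indices) - size))
    clip = frame_indices[start:start + size]  # slicing makes a new list

    # Loop padding for short inputs: repeat frames from the beginning.
    i = 0
    while len(clip) < size:
        clip.append(clip[i])
        i += 1
    return clip


dense = list(range(1, 301))  # every frame of a hypothetical 300-frame video
short = list(range(84, 94))  # a 10-index list, as inferred in the question

print(len(temporal_random_crop(dense)))  # 32 consecutive indices
print(len(temporal_random_crop(short)))  # 32 indices after loop padding
```

Under this assumption, the list built in the dataset class only describes which frames are available, and the clip length is decided by the transform applied afterwards.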

1) Yes, what I mentioned is in this file.

    frame_indices = self.data[index]['frame_indices']
    if self.temporal_transform is not None:
        frame_indices = self.temporal_transform(frame_indices)
    clip = self.__loading(path, frame_indices)

So self.temporal_transform turns frame_indices into a list of length 32, right?

2) I have tried both resnet_strg and resnet.

In resnet_strg, after self.reducer I get an output of size 16 * 512 * 14 * 14. What I want is an output of size 32 * 512 * 7 * 7. Is that achievable?
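One possible reading of the shapes reported above is that the leading 2 or 16 is the remaining temporal depth rather than the batch size. The sketch below traces depth and spatial size through a 3D ResNet-style backbone using the standard convolution output-size formula. The stage layouts (kernel, stride, padding) are assumptions typical of 3D ResNet-18/34 and have not been checked against the STRG code.

```python
def conv_out(n, kernel, stride, padding):
    """Output length of a convolution or pooling layer along one axis."""
    return (n + 2 * padding - kernel) // stride + 1


def trace(depth, size, stages):
    """Follow temporal depth and spatial size through a list of stages."""
    for name, (kt, st, pt), (ks, ss, ps) in stages:
        depth = conv_out(depth, kt, st, pt)
        size = conv_out(size, ks, ss, ps)
        print(f"{name:8s} depth={depth:2d} size={size}x{size}")
    return depth, size


# ASSUMED layout: (name, temporal (k, s, p), spatial (k, s, p)).
full_backbone = [
    ("conv1",   (7, 1, 3), (7, 2, 3)),
    ("maxpool", (3, 2, 1), (3, 2, 1)),
    ("layer1",  (3, 1, 1), (3, 1, 1)),
    ("layer2",  (3, 2, 1), (3, 2, 1)),
    ("layer3",  (3, 2, 1), (3, 2, 1)),
    ("layer4",  (3, 2, 1), (3, 2, 1)),
]

# ASSUMED variant: no temporal stride in the residual stages, cut after layer3.
no_temporal_stride = [
    ("conv1",   (7, 1, 3), (7, 2, 3)),
    ("maxpool", (3, 2, 1), (3, 2, 1)),
    ("layer1",  (3, 1, 1), (3, 1, 1)),
    ("layer2",  (3, 1, 1), (3, 2, 1)),
    ("layer3",  (3, 1, 1), (3, 2, 1)),
]

full_result = trace(32, 224, full_backbone)          # ends at depth 2, 7x7
variant_result = trace(32, 224, no_temporal_stride)  # ends at depth 16, 14x14
```

With these assumed strides, a 32-frame 224 x 224 input ends at depth 2 with 7 x 7 maps, and the variant ends at depth 16 with 14 x 14 maps, which is consistent with the sizes reported in the thread. Keeping all 32 frames at 7 x 7 would require removing every temporal stride, including the one in the stem pooling, which changes the network.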