haproxy-ingress

Reload seems to sporadically reset running connections

Description of the problem

Please note that this is the same environment as in #869, though a different problem.

Clients sporadically get TCP connections reset. This happens in a large cluster with ~4800 ingresses. There is a high volume of new incoming connections and also a high volume of long-lived connections (hours). There are sometimes bursts of ingress events, e.g. many new / deleted / changed ingresses, and then the frequency of the resets increases.

We have captured TCP dumps at various points and thereby found out that it is the haproxy-ingress pod that terminates the connection. As an example, we have a connection opened at 13:59:43 UTC and terminated at 14:00:04 UTC. 100.97.13.19 is the IP of the haproxy-ingress pod and 100.96.227.246 that of the server. We see this:

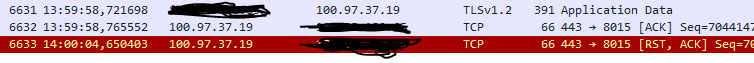

There is regular traffic up until 13:59:58, then nothing for six seconds, and then a FIN/ACK is sent to the server. The server does not expect this and replies with a RST/ACK. The FIN/ACK is not coming from the client (where we have also captured dumps). The client just gets the last ACK and then the RST/ACK (client IP redacted):

This does not really match with any of our timeout settings (see details below) and also the connection is very new.

In the logs of the haproxy-ingress pod we see a few updates, ingress events, and then a reload of the embedded haproxy around the time of the last regular packets:

I0202 13:59:43.324320 6 controller.go:317] starting haproxy update id=29569

I0202 13:59:43.324456 6 instance.go:310] old and new configurations match

I0202 13:59:43.324485 6 controller.go:326] finish haproxy update id=29569: parse_ingress=0.063531ms write_maps=0.008127ms total=0.071658ms

...

I0202 13:59:49.324423 6 controller.go:317] starting haproxy update id=29572

I0202 13:59:49.338207 6 instance.go:307] haproxy updated without needing to reload. Commands sent: 3

I0202 13:59:49.338271 6 controller.go:326] finish haproxy update id=29572: parse_ingress=0.206048ms write_maps=0.099833ms write_config=13.445251ms total=13.751132ms

I0202 13:59:49.619040 6 event.go:282] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"default", Name:"f614705f-51e0-4978-8a35-7642d80a5632-0", UID:"b7f2afb4-d9e0-4e80-934b-95dd6059595b", APIVersion:"networking.k8s.io/v1", ResourceVersion:"2061866396", FieldPath:""}): type: 'Normal' reason: 'CREATE' Ingress default/f614705f-51e0-4978-8a35-7642d80a5632-0

...

I0202 13:59:55.589288 6 event.go:282] Event(v1.ObjectReference{Kind:"Ingress", Namespace:"default", Name:"9453efbd-b5f3-4a4f-b883-fa582a451f36", UID:"7ca24a16-6dc5-4d19-8ab0-defed1c27afc", APIVersion:"networking.k8s.io/v1", ResourceVersion:"2061866871", FieldPath:""}): type: 'Normal' reason: 'DELETE' Ingress default/9453efbd-b5f3-4a4f-b883-fa582a451f36

...

I0202 13:59:58.263516 6 controller.go:334] starting haproxy reload id=633

W0202 13:59:58.732242 6 instance.go:501] output from haproxy:

I0202 13:59:58.732511 6 instance.go:337] haproxy successfully reloaded (embedded)

I0202 13:59:58.732564 6 controller.go:338] finish haproxy reload id=633: reload_haproxy=468.960356ms total=468.960356ms

Note that the last regular packet from haproxy to server is recorded at 13:59:58.718081 and the reload is logged in the period of 13:59:58.263516 and 13:59:58.732564.
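To make the timing argument concrete, here is a minimal sketch that checks whether the last regular packet falls inside the logged reload window. The three timestamps are copied from the capture and the controller log above; the helper names `parse_time` and `in_window` are just illustrative.

```python
from datetime import datetime


def parse_time(value: str) -> datetime:
    """Parse an HH:MM:SS.ffffff timestamp as printed in the controller log."""
    return datetime.strptime(value, "%H:%M:%S.%f")


def in_window(event: str, start: str, end: str) -> bool:
    """Return True if event lies between start and end (inclusive)."""
    return parse_time(start) <= parse_time(event) <= parse_time(end)


# Values taken from the log lines and the packet capture in this report.
reload_start = "13:59:58.263516"  # "starting haproxy reload id=633"
reload_end = "13:59:58.732564"    # "finish haproxy reload id=633"
last_packet = "13:59:58.718081"   # last regular packet from haproxy to server

packet_during_reload = in_window(last_packet, reload_start, reload_end)
ms_before_reload_end = (
    parse_time(reload_end) - parse_time(last_packet)
).total_seconds() * 1000

print(packet_during_reload, round(ms_before_reload_end, 3))
```

So the last regular packet was sent while the reload was still in progress, roughly 14 ms before the controller logged the reload as finished.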

Because of the timing, we suspect that something goes wrong during the reload and a haproxy process gets terminated though it should not (yet).

The pod is under considerable load, i.e. ~36 CPUs in use (nbthread is 63), ~40GiB memory, ~280 internal processes running. The number of processes also changes, suggesting that some get terminated.

Expected behavior

Connections should be kept alive. The embedded haproxy should not terminate them.

Steps to reproduce the problem

We are working on reproducing it in a small environment, but cannot yet confirm. This is what we are doing:

- Have a number of backend servers, some ingresses, one haproxy-ingress pod

- Have a loop constantly doing ingress changes to trigger reloads

- Have a set of clients constantly opening connections and keeping them open, doing simple data transmission
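For the client side of the reproduction, something along these lines could be used. This is only a sketch under assumptions: it assumes a plain TCP backend that echoes whatever it receives (our real traffic is TLS passthrough), and `hold_connection` plus its parameters are hypothetical names, not part of any existing tool.

```python
import socket
import time


def hold_connection(host, port, duration_s, interval_s=1.0, payload=b"ping\n"):
    """Open one TCP connection, exchange a small payload every interval_s
    seconds for duration_s seconds, and report how the connection ended.

    Returns "ok" if the connection survived, "reset" if the peer reset it,
    "closed" if the peer closed it cleanly, or "timeout" if no reply arrived.
    Assumes the backend echoes the payload back.
    """
    with socket.create_connection((host, port), timeout=5) as sock:
        deadline = time.monotonic() + duration_s
        while time.monotonic() < deadline:
            try:
                sock.sendall(payload)
                data = sock.recv(4096)
            except (ConnectionResetError, BrokenPipeError):
                return "reset"
            except socket.timeout:
                return "timeout"
            if not data:
                return "closed"
            time.sleep(interval_s)
    return "ok"
```

Running many of these in parallel against the ingress address while the ingress-change loop is active, and logging the time of every non-"ok" result, would give timestamps that can be compared against the reload lines in the controller log.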

Environment information

HAProxy Ingress version: v0.13.4

Command-line options:

- --default-backend-service=$(POD_NAMESPACE)/ingress-default-backend

- --default-ssl-certificate=$(POD_NAMESPACE)/ingress-default-backend-tls

- --configmap=$(POD_NAMESPACE)/haproxy-configmap-nlb

- --reload-strategy=reusesocket

- --alsologtostderr

- --reload-interval=60s

- --wait-before-update=10s

- --backend-shards=50

From the configmap:

config-frontend: |
  maxconn 30000
  option clitcpka
  option contstats
config-global: |
  pp2-never-send-local
health-check-interval: 10s
https-log-format: '%H\ %ci:%cp\ [%t]\ %ft\ %b/%s/%bi:%bp\ %Th/%Tw/%Tc/%Td/%Tt\ %B\
  %ts\ %ac/%fc/%bc/%sc/%rc\ %sq/%bq'
max-connections: "30100"
nbthread: "63"
slots-min-free: "0"
timeout-client: 50s
timeout-client-fin: 50s
timeout-tunnel: 24h

Global options:

global

daemon

unix-bind mode 0600

nbthread 63

cpu-map auto:1/1-63 0-62

stats socket /var/run/haproxy/admin.sock level admin expose-fd listeners mode 600

maxconn 30100

tune.ssl.default-dh-param 2048

ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384

ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256

ssl-default-bind-options no-sslv3 no-tlsv10 no-tlsv11 no-tls-tickets

ssl-default-server-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384

ssl-default-server-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256

pp2-never-send-local

defaults

log global

maxconn 30100

option redispatch

option http-server-close

option http-keep-alive

timeout client 50s

timeout client-fin 50s

timeout connect 5s

timeout http-keep-alive 1m

timeout http-request 5s

timeout queue 5s

timeout server 50s

timeout server-fin 50s

timeout tunnel 24h

Ingress objects (parts redacted / changed):

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    ingress.kubernetes.io/config-backend: |
      acl network_allowed src 0.0.0.0/0
      tcp-request content reject if !network_allowed
    ingress.kubernetes.io/maxconn-server: "1000"
    ingress.kubernetes.io/proxy-protocol: v2
    ingress.kubernetes.io/ssl-passthrough: "true"
    kubernetes.io/ingress.class: haproxy
  .....
  name: some-uid
  namespace: default
  .....
spec:
  rules:
  - host: some-uid-********.com
    http:
      paths:
      - backend:
          service:
            name: some-uid
            port:
              number: 1234
        path: /
        pathType: ImplementationSpecific
status:
  loadBalancer:
    ingress:
    - {}

Resulting backend config:

backend default_**********************

mode tcp

balance roundrobin

option srvtcpka

acl network_allowed src 0.0.0.0/0

tcp-request content reject if !network_allowed

server srv001 100.96.227.246:1234 weight 1 maxconn 1000 send-proxy-v2 check inter 10s

Hi, I'm in the process of building an environment to stress test the controller and the proxy, and your scenario and configuration details are perfect to guide the first tests. A few questions below:

- Would you say the ingress snippet above is similar to the majority of the ingresses in the environment? I'll try to configure my test env to be as similar to yours as possible;

- Is there any chance to update your haproxy version, either by updating the controller or by trying the external deployment? I remember having seen some of the old instances crashing just after the restart in the past. You can see this via the kernel log, dmesg, etc., or maybe in the console of the VM, depending on your distro. A haproxy crash would explain the connection resets you're seeing, and maybe it's already fixed in newer versions.

Thanks much for the feedback! If I can supply you with more information that would help you for the stress tests, let me know.

> Would you say the ingress snippet above is similar to the majority of the ingresses in the environment? I'll try to configure my test env to be as similar to yours as possible;

Yes, basically all are of this kind and there are ~4500 of them. There are also ~200 ingresses for routing HTTPS and they will have a section with:

tls:
- hosts:
  - some-uid-********.com
  secretName: some-secret-tunnel-cert

but traffic for those is negligible.

> Is there any chance to update your haproxy version, either by updating the controller or by trying the external deployment? I remember having seen some of the old instances crashing just after the restart in the past. You can see this via the kernel log, dmesg, etc., or maybe in the console of the VM, depending on your distro. A haproxy crash would explain the connection resets you're seeing, and maybe it's already fixed in newer versions.

In the affected cluster, we are running haproxy-ingress v0.13.4 (embedded haproxy 2.3.14). So at best we could go to controller v0.13.6 (embedded haproxy 2.3.17). The external deployment would mean a major refactoring for us unfortunately. I was hoping to find some form of shorter term mitigation. Looking at the changelog for 2.3 I am not spotting something that reads like a fixed crash bug in versions 2.3.15 - 2.3.17. Also I could not find an indication of a crash in the syslogs I have been looking at, but maybe there are more log sources I should have been looking at. I will keep digging.

Do you think that the reload strategy could have an impact? We are using reusesocket and switching to native is still under discussion at our end. It does have the known caveat of the small time window of blocked connections during reload, which is why we are still refraining from it. I just wonder if the socket handover might be a root cause for the connection reset we are seeing. But this is just speculation on my end, if you have more insights I'd appreciate it.

Thanks for the update. Regarding the proxy version, you also have the option to build a new image yourself, but migrating to v0.13.6 should be safe enough. Regarding possible errors, some proxy instances might also be hit by the OOM killer, since you reported that you have lots of reloads and old instances remain alive due to long-lived connections, but I think you'd easily find that, either via syslog or by monitoring the host's memory usage. Regarding the reload strategy, I think problems copying the listener sockets would lead to messages like those described in #696, but that's just speculation on my side and maybe there are more side effects that I'm not aware of. Depending on the SLA you have and how resilient your clients are, changing the reload strategy to native might be a reasonable temporary option, just to figure out whether you continue to have the same kind of issues. Maybe in a month or so I'll have some numbers and can also improve something on the controller side, but in the meantime I think it's worth writing up a question and sending it to the haproxy mailing list. Of course controller logs won't be helpful there, but they can be translated to the moment the proxy was reloaded.
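As a rough aid for the OOM check mentioned above, a small scan over kernel log lines might look like the sketch below. It assumes the kernel reports OOM kills with a line containing `Killed process <pid> (<name>)`; the exact wording varies between kernel versions, so treat the pattern as an assumption to verify against your own logs. `find_oom_kills` is a hypothetical helper name.

```python
import re

# Assumed shape of a kernel OOM-killer line, for example:
#   "Out of memory: Killed process 1234 (haproxy) total-vm:..."
# Verify against your kernel's actual messages before relying on it.
OOM_KILL = re.compile(r"Killed process (\d+) \(([^)]+)\)")


def find_oom_kills(log_lines, process_name="haproxy"):
    """Return the PIDs of processes named process_name that, according to
    the given kernel log lines, were terminated by the OOM killer."""
    pids = []
    for line in log_lines:
        match = OOM_KILL.search(line)
        if match and match.group(2) == process_name:
            pids.append(int(match.group(1)))
    return pids
```

The input can be any iterable of lines, e.g. the output of `dmesg` split by line or an open syslog file. An empty result only means no matching line was found in that source, not that no instance was ever killed.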

It has been a while, but I would still like to provide a short update on this ticket. From my point of view, setting a reload-interval resolved it. The resets happened in situations when there were burst events of many ingress changes which then triggered many reloads (in the order of several per second). Limiting the frequency of reloads made this problem go away.
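To illustrate why limiting the reload frequency helps during event bursts, here is a simplified model. It is not the controller's actual implementation: it just assumes that at most one reload may run per interval and that each reload picks up every change that arrived before it. `plan_reloads` and its parameters are hypothetical names.

```python
def plan_reloads(event_times, interval_s):
    """Given the times (in seconds) at which ingress changes arrive, return
    the times at which reloads would run if reloads are limited to one per
    interval_s seconds. Each reload covers all changes that arrived up to
    the moment it runs.
    """
    reloads = []
    next_allowed = float("-inf")
    for t in sorted(event_times):
        if reloads and t <= reloads[-1]:
            continue  # this change is already covered by a planned reload
        run_at = max(t, next_allowed)  # wait until the interval has passed
        reloads.append(run_at)
        next_allowed = run_at + interval_s
    return reloads


# A burst of five changes per second for two seconds.
burst = [i * 0.2 for i in range(10)]
print(len(plan_reloads(burst, interval_s=0)))   # one reload per change
print(len(plan_reloads(burst, interval_s=60)))  # burst coalesced
```

In this model, the burst of ten changes causes ten reloads without a limit but only two with a 60-second interval, which matches the observation that the resets correlated with several reloads per second.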