react-native-tensorflow-lite

Quantized Int Model

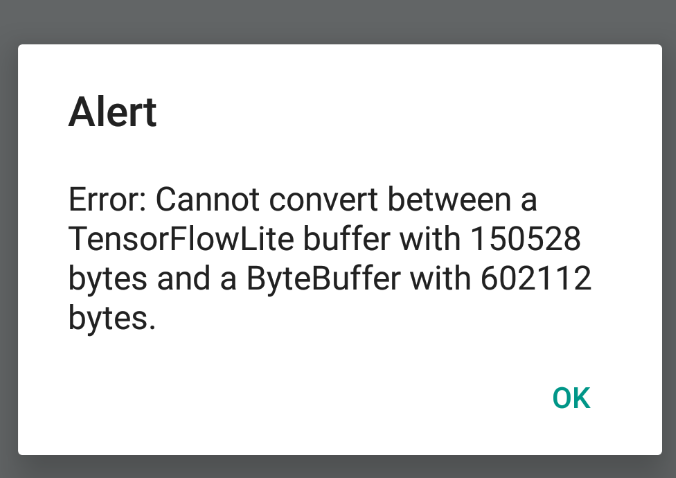

It looks like the quantized int model is one quarter the size of the float model.

I tried removing the `*4` multiplier from the ByteBuffer size, but then I get a new error that just says `Error: null`.

Help appreciated. I can post the code, too, if you'd like.
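For anyone following along, here is a minimal sketch of why the `*4` matters. A float model reads 4 bytes per channel value, while a quantized (uint8) model reads 1 byte, so both the buffer size and the way pixels are written have to change together. The class and method names (`InputBufferSketch`, `allocateInput`, `putPixel`) are hypothetical and not taken from this library, and the `/ 255.0f` normalization for the float path is an assumption that depends on how the model was trained.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper, not part of react-native-tensorflow-lite.
final class InputBufferSketch {
    static final int BYTES_PER_FLOAT = 4; // float32 model: 4 bytes per channel value
    static final int BYTES_PER_UINT8 = 1; // quantized model: 1 byte per channel value

    // Allocate an input buffer sized for either a float or a quantized model.
    static ByteBuffer allocateInput(int width, int height, int channels, boolean quantized) {
        int bytesPerChannel = quantized ? BYTES_PER_UINT8 : BYTES_PER_FLOAT;
        ByteBuffer buffer = ByteBuffer.allocateDirect(width * height * channels * bytesPerChannel);
        buffer.order(ByteOrder.nativeOrder());
        return buffer;
    }

    // Write one ARGB pixel as three channel values (R, G, B).
    static void putPixel(ByteBuffer buffer, int argb, boolean quantized) {
        int r = (argb >> 16) & 0xFF;
        int g = (argb >> 8) & 0xFF;
        int b = argb & 0xFF;
        if (quantized) {
            // Quantized path: raw 0..255 values, one byte each.
            buffer.put((byte) r);
            buffer.put((byte) g);
            buffer.put((byte) b);
        } else {
            // Float path: scaling to 0..1 is an assumption; some models expect another range.
            buffer.putFloat(r / 255.0f);
            buffer.putFloat(g / 255.0f);
            buffer.putFloat(b / 255.0f);
        }
    }
}
```

If only the allocation is quartered while the pixels are still written with `putFloat`, the buffer overflows after a quarter of the image. A quantized model also typically returns `byte` outputs rather than `float` outputs, so the output array type may need to change as well. Either could be behind an unhelpful error, though I can't confirm that is what is happening here.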

Here is the quartered ByteBuffer error, for posterity.

Actually, I haven't committed the latest version that supports quantized models yet. I'll commit it later today or tomorrow because I'm busy right now.

Sounds good! Thanks for your work!

It's been a while and this issue still hasn't been fixed. Please fix it so that we can use quantized models in our apps.