danfojs

Trying to load a big (800 MB) CSV file fails

Describe the bug

I get this error after 6 minutes of trying to load an 800 MB CSV file into a DataFrame with danfojs in VS Code:

```
<--- Last few GCs --->

[6838:0x158008000] 353868 ms: Scavenge (reduce) 3928.7 (4097.6) -> 3928.7 (4097.6) MB, 7.6 / 0.0 ms (average mu = 0.397, current mu = 0.448) allocation failure
[6838:0x158008000] 354091 ms: Scavenge (reduce) 3929.4 (4097.7) -> 3929.1 (4098.0) MB, 20.6 / 0.0 ms (average mu = 0.397, current mu = 0.448) allocation failure
[6838:0x158008000] 354199 ms: Scavenge (reduce) 3948.8 (4117.7) -> 3948.7 (4117.9) MB, 38.3 / 0.0 ms (average mu = 0.397, current mu = 0.448) allocation failure

<--- JS stacktrace --->

FATAL ERROR: Ineffective mark-compacts near heap limit Allocation failed - JavaScript heap out of memory

41: 0x106ded088
```

To Reproduce

Code:

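```javascript
const dfd = require('danfojs-node');

let dataframe;

const full = 'basic_full.csv';       // the ~800 MB file that triggers the crash
const changes = 'basic_changes.csv'; // not used in this snippet

// Load the whole CSV into a DataFrame in one go
dfd.readCSV(full)
  .then(df => {
    dataframe = df;
  })
  .catch(err => {
    console.log(err);
  });
```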
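While the in-memory load fails, one possible workaround (not a danfojs feature, just plain Node.js) is to avoid materializing the whole file and instead stream it line by line, keeping only the aggregates you need. The sketch below uses only the built-in `fs` and `readline` modules. The helper name `sumNumericColumns` is hypothetical, and it assumes a simple CSV: a header row, comma separators, and no quoted fields containing commas.

```javascript
const fs = require('fs');
const readline = require('readline');

// Hypothetical helper: stream a CSV line by line and keep only running
// per-column sums, so memory use does not grow with the number of rows.
// Assumes: header row first, ',' separator, no quoted/escaped fields.
async function sumNumericColumns(path) {
  const rl = readline.createInterface({
    input: fs.createReadStream(path, { encoding: 'utf8' }),
    crlfDelay: Infinity, // treat \r\n as a single line break
  });

  let header = null;
  const sums = {};
  let rows = 0;

  for await (const line of rl) {
    if (line.length === 0) continue; // skip blank lines
    const fields = line.split(',');
    if (header === null) {
      header = fields; // first non-empty line is the header
      for (const name of header) sums[name] = 0;
      continue;
    }
    rows += 1;
    header.forEach((name, i) => {
      const value = Number(fields[i]);
      if (!Number.isNaN(value)) sums[name] += value; // skip non-numeric cells
    });
  }
  return { rows, sums };
}
```

Usage would look like `sumNumericColumns('basic_full.csv').then(console.log)`. Only one line is held in memory at a time, so this trades the convenience of a full DataFrame for a bounded footprint.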

Expected behavior

The file should load into a DataFrame reasonably quickly on this machine.

Screenshots

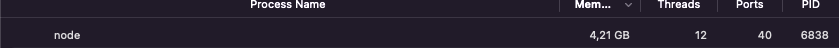

Desktop

MacBook Air M1 with 16 GB of memory

Additional context

In Activity Monitor I see the Node.js runtime taking up more and more memory (up to 7 GB) before giving the error. It seems odd that loading an 800 MB CSV would take that much memory.

I tried increasing the memory allocation for Node.js: `export NODE_OPTIONS="--max_old_space_size=8096"`
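One thing worth checking is whether the raised limit actually reaches the Node.js process that runs the script (for example, an `export` in one terminal does not affect a script launched from elsewhere). A minimal sketch using the built-in `v8` module and `process.memoryUsage()` prints the heap ceiling in effect and the current heap usage; the helper names are hypothetical. Note that `heap_size_limit` is typically somewhat larger than the `--max-old-space-size` value because it also covers the young generation.

```javascript
const v8 = require('v8');

const MB = 1024 * 1024;

// Heap ceiling V8 is actually enforcing for this process, in MB.
// If the NODE_OPTIONS flag was picked up, this should be roughly the
// requested size rather than the default.
function heapLimitMB() {
  return Math.round(v8.getHeapStatistics().heap_size_limit / MB);
}

// JS heap currently in use, in MB; handy to log periodically during a big load.
function heapUsedMB() {
  return Math.round(process.memoryUsage().heapUsed / MB);
}

console.log(`heap limit: ${heapLimitMB()} MB, heap used: ${heapUsedMB()} MB`);
console.log(`NODE_OPTIONS=${process.env.NODE_OPTIONS ?? '(unset)'}`);
```

The GC lines in the log above show the heap hitting about 4,100 MB, so comparing that figure with the printed limit can show whether the 8 GB setting was in effect for the failing run.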

bruh, same

@riekusr @renatocfrancisco we plan to create a second backend using Apache Arrow instead of TensorFlow. I believe this should resolve the memory issue.
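Apache Arrow stores each column as a contiguous, typed buffer rather than as many small JavaScript values. The sketch below does not use the `apache-arrow` package; it only illustrates that columnar idea with built-in `Float64Array`s, where a numeric column costs a fixed 8 bytes per value. The helper `toColumns` and the sample data are hypothetical.

```javascript
// Illustration of columnar storage only; this is NOT the apache-arrow API.
// Hypothetical helper: turn parsed rows (arrays of strings) into one
// Float64Array per column. Non-numeric cells become NaN; empty cells
// become 0 because Number('') === 0.
function toColumns(header, rows) {
  const columns = {};
  header.forEach((name, j) => {
    const col = new Float64Array(rows.length); // 8 bytes per value, no per-cell objects
    for (let i = 0; i < rows.length; i++) {
      col[i] = Number(rows[i][j]);
    }
    columns[name] = col;
  });
  return columns;
}

const header = ['price', 'qty'];
const rows = [['1.5', '2'], ['3', '4'], ['x', '6']];
const cols = toColumns(header, rows);

// Each column occupies exactly 8 * rowCount bytes of buffer memory.
console.log(cols.price.byteLength); // 24
```

The design trade-off is that a typed column can hold only one numeric type, which is part of why Arrow defines a schema with a type per column.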