jamestch

> You need to install `git lfs` to download large models from hf. I have installed **git lfs**; the model files shown above were downloaded from huggingface with the command **git...

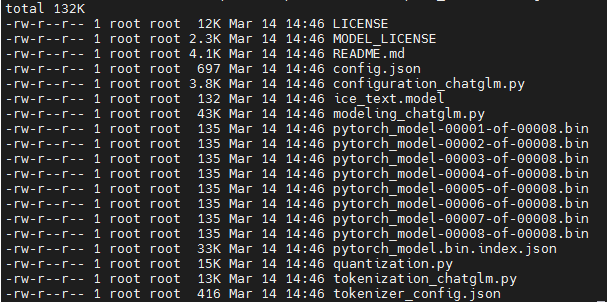

> I have the same error. I have downloaded the model files from huggingface; the model files are as follows...

I changed the ZeRO-3 configuration file to `deepspeed_zero3_config.json`, and that seems to have solved the problem:

`CUDA_VISIBLE_DEVICES=6,7 deepspeed pretrain.py --deepspeed --deepspeed_config models/deepspeed_zero3_config.json --pretrained_model_path models/llama-13b.bin --dataset_path dataset.pt --spm_model_path /path_to_llama/tokenizer.model --config_path models/llama/13b_config.json --output_model_path models/output_model.llama_13.bin --world_size 2 --data_processor lm --batch_size 2 --enable_zero3`
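For anyone unsure what a ZeRO-3 configuration roughly contains, here is a minimal sketch that builds one in Python and prints it as JSON. It is not the contents of the repository's `models/deepspeed_zero3_config.json`, which may differ. The helper name `build_zero3_config`, the micro batch size of 2 (chosen to mirror `--batch_size 2` in the command above), and the fp16 setting are all assumptions for illustration. The keys themselves are standard DeepSpeed config options.

```python
import json


def build_zero3_config(micro_batch_size: int = 2) -> dict:
    """Return a minimal DeepSpeed config dict with ZeRO stage 3 enabled.

    Hypothetical helper for illustration; not part of the training repo.
    """
    return {
        # Assumption: mirrors --batch_size 2 from the command in this thread.
        "train_micro_batch_size_per_gpu": micro_batch_size,
        # Assumption: half-precision training; disable if training in fp32.
        "fp16": {"enabled": True},
        "zero_optimization": {
            # Stage 3 partitions parameters, gradients, and optimizer state.
            "stage": 3,
            # Gather the sharded 16-bit weights when saving the model.
            "stage3_gather_16bit_weights_on_model_save": True,
        },
    }


if __name__ == "__main__":
    # Print the config so it can be compared against the file in use.
    print(json.dumps(build_zero3_config(), indent=2))
```

Comparing this output with the file passed to `--deepspeed_config` is a quick way to check that `"stage": 3` is actually set when `--enable_zero3` is used.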

I have the same question.