jaeger-kubernetes

Editing configmap.yml and elasticsearch.yml in order to change password

Update elasticsearch default password.

Problem

I want to set up a Jaeger installation with persistent storage, using Elasticsearch as the backend, on my Kubernetes cluster on Google Cloud Platform.

I am using the jaeger-kubernetes templates, starting with the Elasticsearch production setup.

I downloaded the configmap.yml file and changed the value of its password field, then modified the elasticsearch.yml file so that it uses the same new password.

My customized .yml files now look like this:

- configmap.yml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: jaeger-configuration
      labels:
        app: jaeger
        jaeger-infra: configuration
    data:
      span-storage-type: elasticsearch
      collector: |
        es:
          server-urls: http://elasticsearch:9200
          username: elastic
          password: **my-password-value**
        collector:
          zipkin:
            http-port: 9411
      query: |
        es:
          server-urls: http://elasticsearch:9200
          username: elastic
          password: **my-password-value**
      agent: |
        collector:
          host-port: "jaeger-collector:14267"

- elasticsearch.yml

    apiVersion: v1
    kind: List
    items:
    - apiVersion: apps/v1beta1
      kind: StatefulSet
      metadata:
        name: elasticsearch
        labels:
          app: jaeger
          jaeger-infra: elasticsearch-statefulset
      spec:
        serviceName: elasticsearch
        replicas: 1
        template:
          metadata:
            labels:
              app: jaeger-elasticsearch
              jaeger-infra: elasticsearch-replica
          spec:
            containers:
              - name: elasticsearch
                image: docker.elastic.co/elasticsearch/elasticsearch:5.6.0
                imagePullPolicy: Always
                command:
                  - bin/elasticsearch
                args:
                  - "-Ehttp.host=0.0.0.0"
                  - "-Etransport.host=127.0.0.1"
                volumeMounts:
                  - name: data
                    mountPath: /data
                readinessProbe:
                  exec:
                    command:
                    - curl
                    - --fail
                    - --silent
                    - --output
                    - /dev/null
                    - --user
                    - elastic:**my-password-value**
                    - localhost:9200
                  initialDelaySeconds: 5
                  periodSeconds: 5
                  timeoutSeconds: 4
            volumes:
              - name: data
                emptyDir: {}
    - apiVersion: v1
      kind: Service
      metadata:
        name: elasticsearch
        labels:
          app: jaeger
          jaeger-infra: elasticsearch-service
      spec:
        clusterIP: None
        selector:
          app: jaeger-elasticsearch
        ports:
        - port: 9200
          name: elasticsearch
        - port: 9300
          name: transport

- Then I created the Kubernetes configuration (the ConfigMap) with the new password value on my GKE cluster, from my machine, via the kubectl command:

    ~/w/j/AddPersistVolumToPods ❯❯❯ kubectl create -f configmap.yml
    configmap/jaeger-configuration created
    ~/w/j/AddPersistVolumToPods ❯❯❯

- I also created the Elasticsearch service, backed by a StatefulSet pod (again with the new password value), on my GKE cluster, from my machine, via the kubectl command:

    ~/w/j/AddPersistVolumToPods ❯❯❯ kubectl create -f elasticsearch.yml
    statefulset.apps/elasticsearch created
    service/elasticsearch created
    ~/w/j/AddPersistVolumToPods ❯❯❯

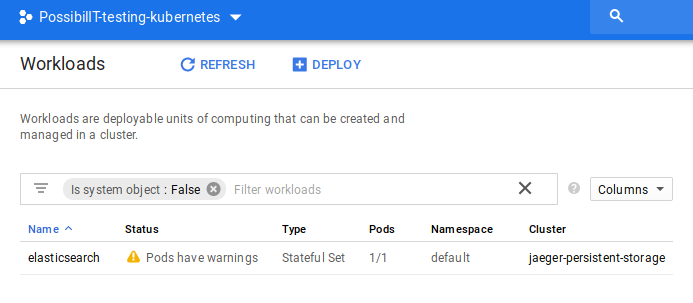

I can see that I have the elasticsearch service created on my GKE cluster

    ~/w/j/A/production-elasticsearch ❯❯❯ kubectl get services
    NAME            TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)             AGE
    elasticsearch   ClusterIP   None          <none>        9200/TCP,9300/TCP   41m
    kubernetes      ClusterIP   10.39.240.1   <none>        443/TCP             1h
    ~/w/j/A/production-elasticsearch ❯❯❯

And I have the elasticsearch-0 pod, which runs the Docker container of the Elasticsearch service:

    ~/w/j/A/production-elasticsearch ❯❯❯ kubectl get pod elasticsearch-0
    NAME              READY   STATUS    RESTARTS   AGE
    elasticsearch-0   0/1     Running   0          25m
    ~/w/j/A/production-elasticsearch ❯❯❯

But when I inspect the pod on GKE, I see that it has some warnings and is not healthy ...

The pod description shows this warning:

    Warning Unhealthy 2m6s (x296 over 26m) kubelet, gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk Readiness probe failed:

Here is the full output of the describe command:

    ~/w/j/A/production-elasticsearch ❯❯❯ kubectl describe pod elasticsearch-0
    Name:           elasticsearch-0
    Namespace:      default
    Node:           gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk/10.164.0.2
    Start Time:     Tue, 08 Jan 2019 13:57:52 +0100
    Labels:         app=jaeger-elasticsearch
                    controller-revision-hash=elasticsearch-c684bb745
                    jaeger-infra=elasticsearch-replica
                    statefulset.kubernetes.io/pod-name=elasticsearch-0
    Annotations:    kubernetes.io/limit-ranger: LimitRanger plugin set: cpu request for container elasticsearch
    Status:         Running
    IP:             10.36.2.7
    Controlled By:  StatefulSet/elasticsearch
    Containers:
      elasticsearch:
        Container ID:  docker://54d935f3e07ead105464a003745b80446865eb2417da593857d21c56610f704b
        Image:         docker.elastic.co/elasticsearch/elasticsearch:5.6.0
        Image ID:      docker-pullable://docker.elastic.co/elasticsearch/elasticsearch@sha256:f95e7d4256197a9bb866b166d9ad37963dc7c5764d6ae6400e551f4987a659d7
        Port:          <none>
        Host Port:     <none>
        Command:
          bin/elasticsearch
        Args:
          -Ehttp.host=0.0.0.0
          -Etransport.host=127.0.0.1
        State:          Running
          Started:      Tue, 08 Jan 2019 13:58:14 +0100
        Ready:          False
        Restart Count:  0
        Requests:
          cpu:        100m
        Readiness:    exec [curl --fail --silent --output /dev/null --user elastic:L4I^1xxhcu#S localhost:9200] delay=5s timeout=4s period=5s #success=1 #failure=3
        Environment:  <none>
        Mounts:
          /data from data (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-vkxnj (ro)
    Conditions:
      Type           Status
      Initialized    True
      Ready          False
      PodScheduled   True
    Volumes:
      data:
        Type:    EmptyDir (a temporary directory that shares a pod's lifetime)
        Medium:
      default-token-vkxnj:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-vkxnj
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason                 Age                   From                                                          Message
      ----     ------                 ----                  ----                                                          -------
      Normal   Scheduled              27m                   default-scheduler                                             Successfully assigned elasticsearch-0 to gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk
      Normal   SuccessfulMountVolume  27m                   kubelet, gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk  MountVolume.SetUp succeeded for volume "data"
      Normal   SuccessfulMountVolume  27m                   kubelet, gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk  MountVolume.SetUp succeeded for volume "default-token-vkxnj"
      Normal   Pulling                27m                   kubelet, gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk  pulling image "docker.elastic.co/elasticsearch/elasticsearch:5.6.0"
      Normal   Pulled                 26m                   kubelet, gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk  Successfully pulled image "docker.elastic.co/elasticsearch/elasticsearch:5.6.0"
      Normal   Created                26m                   kubelet, gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk  Created container
      Normal   Started                26m                   kubelet, gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk  Started container
      Warning  Unhealthy              2m6s (x296 over 26m)  kubelet, gke-jaeger-persistent-st-default-pool-d72f7fde-ggrk  Readiness probe failed:
    ~/w/j/A/production-elasticsearch ❯❯❯

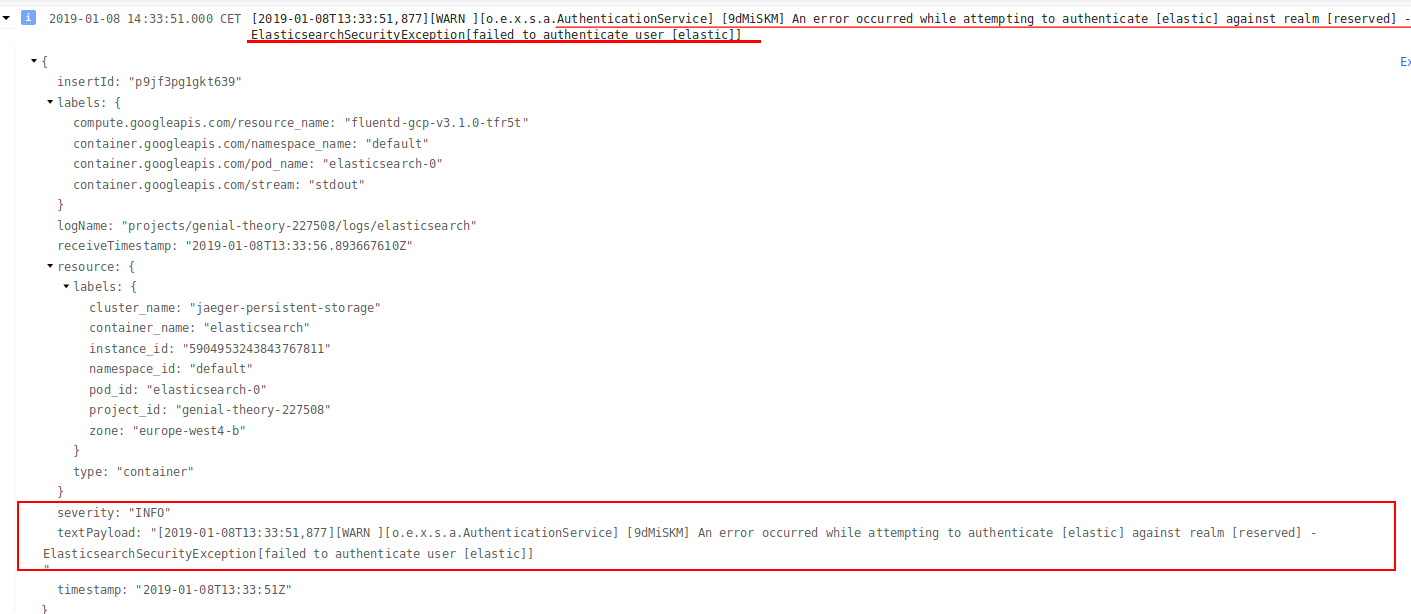

I go to the container log section on GCP and I get the following:

And in the audit log section I can see something like this:

    resourceName: "core/v1/namespaces/default/pods/elasticsearch-0"
    response: {
      @type: "core.k8s.io/v1.Status"
      apiVersion: "v1"
      code: 500
      details: {…}
      kind: "Status"
      message: "The POST operation against Pod could not be completed at this time, please try again."
      metadata: {…}
      reason: "ServerTimeout"
      status: "Failure"
    }
    serviceName: "k8s.io"
    status: {
      code: 13
      message: "The POST operation against Pod could not be completed at this time, please try again."
    }
    }

If I deploy the original files and then change the password via GKE on GCP, I get this error:

Pod "elasticsearch-0" is invalid: spec: Forbidden: pod updates may not change fields other than `spec.containers[*].image`, `spec.initContainers[*].image`, `spec.activeDeadlineSeconds` or `spec.tolerations` (only additions to existing tolerations)

After I have created a pod, is it not possible to update it or make changes to it?

With kubectl apply -f ....., I suppose?

How can I change the Elasticsearch password?

If I want to configure a persistent volume claim for this pod, can I do that before running the kubectl create -f command, so that my volume and mountPath are created in the container and on GKE?

> After I have created a pod, is it not possible to update it or make changes to it? With kubectl apply -f ....., I suppose?

It depends on the changes; you will have to restart pods in some situations.
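To make that concrete for this thread: the pod is owned by the StatefulSet, so a change goes into the StatefulSet's template (for example through the edited elasticsearch.yml), not into the running pod, which is why the direct pod edit was rejected. Whether the pod is then replaced automatically depends on the StatefulSet's update strategy. A small sketch follows; `apply_and_report` is a made-up helper name, and the default name `elasticsearch` is taken from the manifest in the question.

```shell
# Sketch only: apply an edited manifest, then report whether the StatefulSet
# will replace its pods by itself. "apply_and_report" is a hypothetical helper.
apply_and_report() {
  manifest="${1:?path to manifest required}"
  statefulset="${2:-elasticsearch}"

  # Push the changed template to the cluster.
  kubectl apply -f "$manifest" || return 1

  # Read the update strategy of the StatefulSet.
  strategy="$(kubectl get statefulset "$statefulset" \
    -o jsonpath='{.spec.updateStrategy.type}')"

  if [ "$strategy" = "RollingUpdate" ]; then
    echo "strategy=$strategy: pods will be replaced automatically"
  else
    echo "strategy=$strategy: delete the pods by hand to pick up the new template"
  fi
}

# Example call (not executed here):
#   apply_and_report elasticsearch.yml elasticsearch
```

Because the objects in this thread were created with `kubectl create`, `kubectl apply` will probably print a warning about a missing last-applied-configuration annotation; `kubectl replace -f` is an alternative.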

> How can I change the Elasticsearch password?

The authentication is managed by X-Pack. This document https://www.elastic.co/guide/en/elasticsearch/reference/current/security-api-change-password.html describes how to change the password.
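For the Elasticsearch 5.6.0 image used in this thread, the linked API could be called roughly as below. This is a sketch, not a tested recipe: the function names `es_http_status` and `change_elastic_password` are made up for illustration, the `_xpack/security/user/elastic/_password` path is the 5.x form of the endpoint as far as I know (newer releases use a different prefix), and `changeme` in the example calls is assumed to be the image's default password. Running curl through `kubectl exec` relies on curl being present in the container, which the readiness probe already assumes.

```shell
# Sketch only: both helper names are hypothetical.

# Print the HTTP status Elasticsearch returns for the given credentials
# (for example 200 when accepted, 401 when rejected).
es_http_status() {
  pod="${1:?pod name required}"
  credentials="${2:?user:password required}"
  kubectl exec "$pod" -- curl --silent --output /dev/null \
    --write-out '%{http_code}\n' --user "$credentials" localhost:9200
}

# Change the password of the built-in "elastic" user through the X-Pack
# security API. Assumption: the new password contains no characters that
# need JSON escaping (such as double quotes or backslashes).
change_elastic_password() {
  pod="${1:?pod name required}"
  old_password="${2:?current password required}"
  new_password="${3:?new password required}"
  kubectl exec "$pod" -- curl --fail --silent \
    --user "elastic:${old_password}" \
    -X PUT -H 'Content-Type: application/json' \
    "http://localhost:9200/_xpack/security/user/elastic/_password" \
    -d "{\"password\": \"${new_password}\"}"
}

# Example calls (not executed here):
#   es_http_status elasticsearch-0 'elastic:changeme'
#   change_elastic_password elasticsearch-0 'changeme' 'my-new-password'
```

Editing the password in the ConfigMap and in the readiness probe only changes what the clients send; it does not change the password stored in Elasticsearch. If the first helper reports 401 for the new password, that would explain the empty "Readiness probe failed:" message, since the probe's curl runs with `--fail --silent --output /dev/null` and so exits non-zero without printing anything. Note also that the pod in this thread has no persistent data volume, so a password changed this way is presumably lost when the pod is recreated.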

> If I want to configure a persistent volume claim for this pod, can I do that before running the kubectl create -f command, so that my volume and mountPath are created in the container and on GKE?

Just follow the standard k8s docs and it should work on GCE.
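As a rough illustration of what the standard docs describe, a claim can be created before the StatefulSet and then referenced from it. The claim name `elasticsearch-data`, the `10Gi` size and the function name `create_es_data_claim` are placeholders, and the cluster's default StorageClass is assumed to provision the disk.

```shell
# Sketch only: create a PersistentVolumeClaim before the StatefulSet exists.
# "create_es_data_claim", the claim name and the size are hypothetical.
create_es_data_claim() {
  claim_name="${1:-elasticsearch-data}"
  size="${2:-10Gi}"

  # Feed the manifest to kubectl on stdin; the unquoted EOF lets the shell
  # substitute the two variables above.
  kubectl apply -f - <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${claim_name}
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
}

# Example call (not executed here):
#   create_es_data_claim elasticsearch-data 10Gi
```

In elasticsearch.yml, the `data` volume would then use `persistentVolumeClaim` with `claimName: elasticsearch-data` in place of `emptyDir: {}`, before running `kubectl create -f`. With more than one replica, `volumeClaimTemplates` in the StatefulSet spec is the usual alternative. It is also worth checking that the mountPath matches the directory Elasticsearch actually writes to; as far as I know the official image defaults to /usr/share/elasticsearch/data rather than /data.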

> It depends on the changes, you will have to restart pods in some situations.

@pavolloffay What is the best strategy to restart the pod?

I have been reading some posts and blogs about this, but it is still not clear to me how to restart pods, because many sites say it depends on how the pods were created, and when I delete a pod via kubectl, GKE immediately creates another pod with similar characteristics.

Some even recommend going into the specific container(s) inside the pod and restarting or killing the container process, as a restart strategy: https://stackoverflow.com/a/47799862/2773461

> What is the best strategy to restart the pod?

Just kill the pod and let the Kubernetes controller start a new one. You might lose any inflight data, like spans that were received but not persisted.

Ideally, we would implement a file watcher in Jaeger itself, to restart the internal process without the need to kill the pod, but I don't think we have it in place already.
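In command form, killing the pod and checking on the replacement that the controller starts might look like this. `restart_pod` is a hypothetical helper; the label selector in the example is taken from the pod labels shown earlier in this thread.

```shell
# Sketch only: delete a pod and list the pods its controller starts in its
# place. "restart_pod" is a hypothetical helper name.
restart_pod() {
  pod="${1:?pod name required}"
  selector="${2:?label selector required}"

  # The owning controller (here a StatefulSet) notices the missing pod and
  # creates a new one, which is the behaviour described above.
  kubectl delete pod "$pod" || return 1

  # List by label rather than by name, so the command does not fail if the
  # replacement pod has not been created yet.
  kubectl get pods -l "$selector"
}

# Example call (not executed here):
#   restart_pod elasticsearch-0 app=jaeger-elasticsearch
```

The same pattern would apply to the Jaeger pods after a ConfigMap change, with their own pod names and labels.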

@jpkrohling I will keep that in mind for when I need it.