vscode-dvc

vscode-dvc copied to clipboard

vscode-dvc copied to clipboard

exp --queue and --run-all can hang and cause future exp show runs to hang

The original Issue has since been solved, but there's still a related problem which this Issue now tracks. See this comment for info on the still open issue.

Original issue:

I had mentioned this when working on plots, but it seems this is more of a general problem that occurs on master as well.

After running one experiment, live updating experiments ~stops working~ delays for at least 5 and up to 15 checkpoints before dumping all of them at once, sometimes crashing VSCode in the process. You can see the output changes as well, showing nothing until the experiments finishes and pushing all the checkpoints at once. It almost looks like it could be a dvc issue, but I don't know how it would be considering it's related to the vscode session.

https://user-images.githubusercontent.com/9111807/134056404-8ef0d288-4184-446d-8d8c-c21f7678245b.mp4

This issue also happens when running dvc exp show on the CLI outisde of VSCode, with slightly different symptoms on the table itself where updates don't happen until a bit after the command is finished

https://user-images.githubusercontent.com/9111807/134057377-608bd480-3600-4cde-9ed9-7d88dbe8f12d.mp4

I've also check combinations of running from both CLI and VSCode, in both CLI -> VSCode and VSCode -> CLI. Table symptoms reflect the command run second.

So, this is DVC issue?

To me it looks like an issue with restarting from existing checkpoints, what happens if you increase the number of epochs to 15 or some number like that? I think that the 3 epochs happen so quickly that it seems like "nothing is sent until the experiment finishes". What happens if you change the params so that you are forcing another experiment to start?

Here is a demo from another repo:

https://user-images.githubusercontent.com/37993418/134087833-ed91d7fc-18e2-4dc3-b4e4-cb6f601fc0da.mov

We still have issues where commands are running into each other so things can be slow to update but live updates are not broken.

Let me try to replicate with higher epochs and non-continuation, I remember the same thing happening when I did so when trying plots, but it's always good to confirm.

With a few tries and a bit of luck, I finally got a demo of a 15 epoch run on two experiments that didn't crash.

https://user-images.githubusercontent.com/9111807/134100716-aa39ea7a-e61c-4f73-9282-ee67f4a9863f.mp4

On a few attempts, I saw what @mattseddon hypothesized with a delayed update before live update resumed, but even when that happened the delay was between 5 and 10 epochs, and occasionally VSCode crashed at the time where other successful attempts dumped those epochs and resumed. They all share the same commonality of the first run working totally fine, but subsequent runs having issues.

@shcheklein It's really hard to pin down whether or not this actually is a DVC issue. At least for now, I'd lean towards no since symptoms can only be seen within VSCode.

@rogermparent I cannot reproduce:

https://user-images.githubusercontent.com/37993418/134101351-be39868d-13b0-42bb-b543-5cf4f4d217b0.mov

https://user-images.githubusercontent.com/37993418/134101373-803e32ca-b78c-47c6-9e48-93460f5dd3ef.mov

When was the last time that you upgraded your .env (source .env/bin/activate && pip install -r requirements.txt -U)?

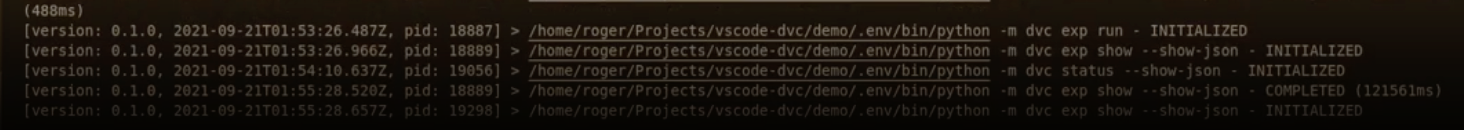

@rogermparent wait a minute, HOW is exp show taking 121s to run?

When was the last time you garbage collected experiments... even then WAT! How long does that action take in the CLI? The problem is whatever is causing exp show to run for an eternity. Takes ~1.2s on my machine (and my machine is garbage).

When was the last time that you upgraded your

.env(source .env/bin/activate && pip install -r requirements.txt -U)?

I ran a git clean -fxd and reinstalled all dependencies before attempting that second recording, and just verified with those commands that my .env is up-to-date. It's worth noting that extension dev host + dvc reaches 13/16gb of my RAM, but doesn't seem to cross the threshold.

wait a minute, HOW is

exp showtaking121sto run?When was the last time you garbage collected experiments... even then WAT! How long does that action take in the CLI?

Great catch! It looks like exp show is starting alongside exp run, and idling until some point in the middle of exp run without ever failing like it does on your examples. Maybe I spoke too soon, and this could be a Linux-specific DVC issue related to commands running into each other- specifically run and show?

I ran GC quite a few times in the process, running it after that example (and a couple experiments later) only cleaned 4 experiments.

relates to https://github.com/iterative/dvc/issues/5739

I've been able to recreate this issue on Codespaces, leading me to believe it's an issue related to running on Linux.

https://user-images.githubusercontent.com/9111807/134733165-655bc0db-9cee-4e3d-ac66-9397053eb645.mp4

Bad news that it's happening, good news that it should be much easier for everyone to replicate this way!

As discussed in the meeting, I tried to replicate the bug using only the CLI and not VSCode. I couldn't successfully do so. Here's what I did:

- run

dvc status --show-json,dvc diff --show-json, anddvc exp show --show-jsonin a loop withwatch -n0(re-run as soon as the last update ends) in three separate terminals to simulate what we assumed would be causing lock issues with dvc dvc exp runin a fourth terminal- Observe the

dvc exp showterminal for delays in changes

Recording:

https://user-images.githubusercontent.com/9111807/138955175-928c7005-5d72-44d9-a3b0-a7dd0299a9d2.mp4

I couldn't see any delays like we're experiencing in VSCode, only a couple seconds at most. Due to this, maybe we can hypothesize the issue has something to do with VSCode being a single-threaded Node application that's executing and reading the output of the dvc calls? We've run into issues before with Node's process execution behaving differently on different platforms, so that could explain the platform-specific nature of this Issue.

I am now seeing a similar issue where exp show hung for a long time, here are the steps that I went through

- queue 4 experiments

- run all experiments

- after first experiment completed exp show began to hang

- after killing the session

exp showbegan running in a loop - my repo is now in this weird state where

exp showis now "touching"vscode-dvc/.git/refs/exps/93/3131de80b564742afb0795f683af6b955b327b/exp-a593b&vscode-dvc/.git/refs/exps/exec/EXEC_CHECKPOINTevery time it runs (even after runningdvc gc -T) this is causing an infinite loop ofexp showcommands

still investigating

@efiop I now have a minimal reproducible example for this scenario. I have been able to recreate with two terminal sessions and without VS Code doing anything in the background.

Here are the steps:

- In our

vscode-dvc/demorepo setEPOCHS = 36intrain.pyand queue 4 experiments:

dvc exp run --queue -S lr=0.001

dvc exp run --queue -S lr=0.005

dvc exp run --queue -S lr=0.0001

dvc exp run --queue -S lr=0.0005

dvc exp run --run-all- Wait for the first experiment to finish.

- At the start of the second run

dvc exp show --show-jsonwill hang and keep the.dvc/tmp/lock - As far as I can see

dvc exp show --show-jsonwill never return dvc exp run --run-allgets to the final step but after finishing the final experiment I left it running for 30+ minutes before I gave up and killed it, it was burning a lot of CPU and running 4 threads.

After reproducing for the second time I killed the --run-all process earlier and calls to dvc exp show then hung indefinitely. The exp ref tree looked like this:

├── 93

│ └── 3131de80b564742afb0795f683af6b955b327b

│ ├── exp-47c59

│ └── exp-d028d

├── exec

│ └── EXEC_CHECKPOINT

└── stash

and .dvc/tmp/exps looked like this:

├── run

│ ├── 84d95dd90354e1113745e031c91b1b472d097040

│ │ └── 84d95dd90354e1113745e031c91b1b472d097040.run

│ ├── abd5144a227994494fc6bd8e08a7ac3eda20f686

│ │ └── abd5144a227994494fc6bd8e08a7ac3eda20f686.run

│ ├── c55ad5478175c4a4cc972720c99a0fce02e0467e

│ │ └── c55ad5478175c4a4cc972720c99a0fce02e0467e.run

│ ├── d27f02d47c5b0530cbe515fd9abc3d0cf2347df9

│ │ └── d27f02d47c5b0530cbe515fd9abc3d0cf2347df9.run

│ └── e4d733134154805708e61661df783a58ab89c5bf

│ └── e4d733134154805708e61661df783a58ab89c5bf.run

├── tmp2t9f3aqn

│ ├── ...whole repo

├── tmp3jm778wy

│ ├── ...whole repo

├── tmppxj8hjr9

│ ├── ...whole repo

├── tmptwluxju2

│ ├── ...whole repo

├── tmpux4x5eii

│ ├── ...whole repo

├── tmpv_sqh75p

│ ├── ...whole repo

After running dvc exp gc -T dvc exp show began working as expected again.

I tried to reproduce the issue by queueing the following in example-dvc-experiments but the process completed as expected after 44m 36s:

dvc exp run --queue -S params.yaml:train.epochs=20 -S params.yaml:model.conv_units=16

dvc exp run --queue -S params.yaml:train.epochs=20 -S params.yaml:model.conv_units=16

dvc exp run --queue -S params.yaml:train.epochs=20 -S params.yaml:model.conv_units=16

dvc exp run --queue -S params.yaml:train.epochs=20 -S params.yaml:model.conv_units=16

This leads me to believe this has something to do with checkpoints being present in the table.

Note: At some point in the early stages of fighting against this bug I got the repo in a state where dvc exp show --show-json was triggering the file watcher that causes dvc exp show --show-json to run by touching .git/refs/exps/exec/EXEC_CHECKPOINT and another ref and my computer nearly melted.

I tried to recreate with dvc-checkpoints-mnist.

I set the epochs to 36 and queued 4 experiments twice. On the 7th experiment run, the experiment finished and returned ERROR: too many open files, please visit <https://error.dvc.org/many-files> to see how to handle this problem. Trying to run dvc exp run --run-all gives the same error every time now.

I am now seeing a similar issue where

exp showhung for a long time, here are the steps that I went through

- my repo is now in this weird state where

exp showis now "touching"vscode-dvc/.git/refs/exps/93/3131de80b564742afb0795f683af6b955b327b/exp-a593b&vscode-dvc/.git/refs/exps/exec/EXEC_CHECKPOINTevery time it runs (even after runningdvc gc -T) this is causing an infinite loop ofexp showcommands

Note: At some point in the early stages of fighting against this bug I got the repo in a state where dvc exp show --show-json was triggering the file watcher that causes dvc exp show --show-json to run by touching .git/refs/exps/exec/EXEC_CHECKPOINT and another ref and my computer nearly melted.

So exp show will touch git refs in this scenario, because it works by git fetching refs from each of the temporary workspaces (where your 4 experiments are running) into the main git workspace.

What (directories/files) does the file watcher currently trigger exp show on? Is it possible to exclude paths from being watched? It seems like we may need to have a discussion on what exactly needs to be monitored (for the repo to be considered "changed").

@pmrowla we can (should) definitely discuss that. Any ideas on why exp show is hanging?

Some more info from running queued experiments.

Queueing a set of 4 experiments with 20 epochs that don't collide with the existing experiment in the workspace (iterative/dvc#7126) led to the following behaviour:

dvc exp show --show-json hung twice during --run-all. Once at the beginning of the 3rd experiment and once at the beginning of the 4th. With the total following run times -

dvc exp show --show-json - COMPLETED (236861ms)

dvc exp show --show-json - COMPLETED (307770ms)

For reference exp show usually takes ~2 seconds in this repo.

Before the 1st hang, the following appeared in the log:

dvc exp show --show-json - FAILED with code 255 (6525ms)

ERROR: unexpected error - b'2f5b6e9b70e2adeb46ca9c9bc58215384ad04d8e'

& Not long before the 2nd hang, this message appeared in the log:

dvc exp show --show-json - FAILED with code 255 (5362ms)

ERROR: unexpected error - b'b84b556bcf58bf75dddafdcdd43b87774e11fa7e'

After all the experiments seemed to have been completed the workspace was in this state:

https://user-images.githubusercontent.com/37993418/146692288-18aa33ce-6581-4ae3-997f-e19adae11086.mov

The yellow point on the LHS of the plot (two points in the table) represents the 3rd experiment run. After a further 10 minutes of waiting the "broken" state appeared to resolve and all of the remaining refs made their way into the workspace:

It should also be noted that experiments 1 and 2 only showed data for the first 18 epochs up until not long before I started recording the screen.

https://user-images.githubusercontent.com/37993418/146692686-fa889b81-7238-46d5-884b-7d2d0d78d718.mov

The total run time for the 4 experiments was -> dvc exp run --run-all - COMPLETED (1774709ms).

From reading https://github.com/iterative/dvc/issues/7126#issuecomment-992157559 I can see that --queue/--run-all are mostly experimental and not a long term solution to this problem. I think we will need to make a call as to whether or not we are going to include queueing/running all experiments within the initial release as the behaviour can be very confusing. We can discuss this in the various meetings this week.

@pmrowla we can (should) definitely discuss that. Any ideas on why

exp showis hanging?

I'm not sure what makes it hang yet, but it wasn't really designed to be spammed in this way (especially if there's an infinite loop of more exp show calls being made).

For one reason or another, this issue no longer occurs for me. I haven't been consistently checking so it may have been resolved a bit ago.

https://user-images.githubusercontent.com/9111807/158500893-b8d07217-532a-4e8a-bd97-33f89b1b94f6.mp4

This issue could likely be closed, though I'm not sure if @mattseddon is still experiencing his similar issue.

@rogermparent see https://github.com/iterative/vscode-dvc/issues/828#issuecomment-996437072 I could only recreate the issue using the queue. We might want to update the title of the issue but we should leave it open for now.

We might want to update the title of the issue but we should leave it open for now.

I've updated the title and OP to point to your comment.

Could be mitigated by https://github.com/iterative/dvc/pull/7574 combined with https://github.com/iterative/vscode-dvc/pull/1598

@pmrowla @karajan1001 @mattseddon is it resolved now? it not, does it still hang or it's not about something else?

I hadn't noticed any hanging caused by exp --queue recently. For the exp run --run-all the fetching progress might cause exp show to freeze a few seconds, at the result collections progress.

Seems to be fixed. We can reopen if it is still an issue.