iri

Epic: Update Milestone Solidification

Description

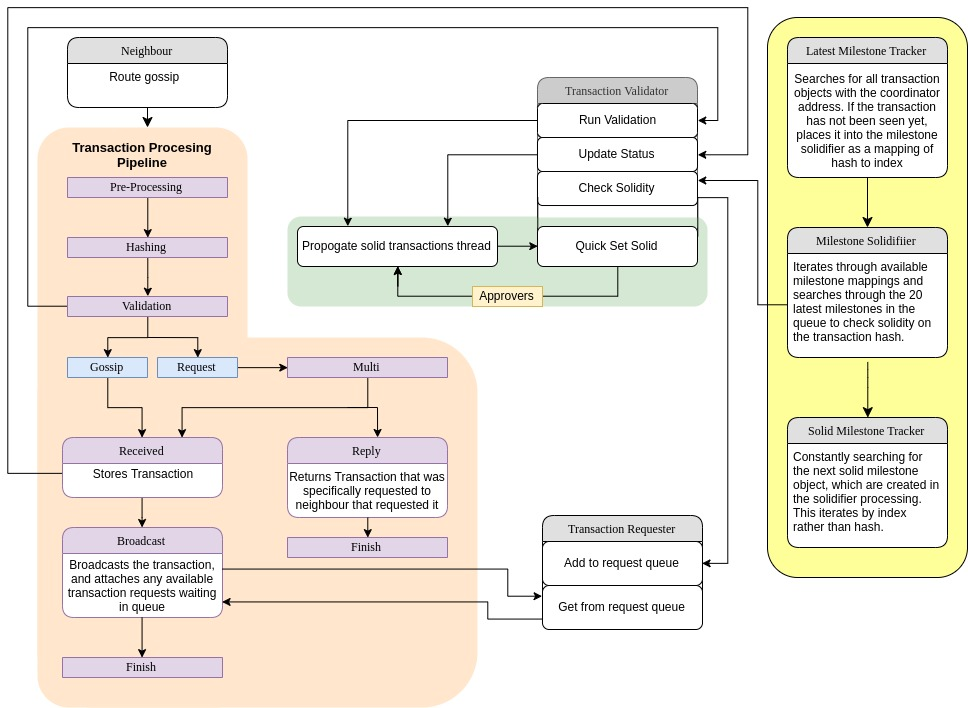

The current milestone solidification logic relies on a three-step process that is rather disjointed. Milestones are found in the LatestMilestoneTracker (LMT) through an address search and are then run through milestone/bundle validity checks before being placed into the MilestoneSolidifier (MS). There they go through a separate process of transaction requesting and solidity checking to fetch missing referenced transactions for milestone candidates. When a milestone is solidified through this process, a milestone object is created in the DB. Lastly, the LatestSolidMilestoneTracker (LSMT) searches sequentially for the next milestone object by index and updates the node's solidity index by index.

This strikes me as an unnecessarily disconnected process. With the new TransactionProcessingPipeline (TPP) that followed the last network rewrite, we can utilise the processing stages to handle milestones and milestone reference requests more directly. That would streamline milestone solidification and process milestones in a more connected order that carries the hash references straight through to the LSMT checks.

Currently the LMT checks rely on transaction addresses and hash references to pass to the MS, whereas the LSMT relies on the milestone index, which is only created when the MS successfully solidifies the transaction. The MS does not rely on the index while solidifying and may produce the occasional false INVALID or INCOMPLETE, which then stops a specific milestone from solidifying correctly.

Motivation

To process milestone objects more efficiently and improve solidification and request logic.

Requirements

- [ ] New transaction requesting logic using the TPP

- [ ] New milestone stages and payload processing through the TPP

- [ ] Rewrite/Consolidate milestone solidification pipeline with better milestone hash/index mapping

Open Questions (optional)

Am I planning to do it myself with a PR?

Yes

Relates to #1448

I would like to outline my proposal for changes to milestone processing. With the TPP in place and the new solidification process coming into play, we now have a good opportunity to consolidate behaviour into a less disjointed flow. The solidification of milestones can be handled from within the TPP rather than as an external set of threads which each run independently of one another.

Currently, the processing flow looks something like this:

With the addition of the solidification stage it's starting to become more streamlined as follows:

But in both of these cases, milestones are still handled as a separate entity and process, and within this disconnected process, we recreate numerous objects over and over to analyse and determine the milestone state of the tangle/node. The new flow I would like to propose is as follows:

In this flow, transactions that enter the received stage will have their address checked to see if it matches that of the coordinator. If it does, the node will then check whether the potential milestone has already been seen, and if not, it will run a check to pull the index of the milestone. If the milestone index is within the range the node is looking for (higher than the latest snapshot index), the transaction will be placed into the MilestoneStage, where it will undergo milestone validation. If the milestone is registered as valid, it will then be placed into the new MilestoneSolidifier (might want to rename it), which will act similarly to the TransactionRequester as a temporary holding space for milestone mappings. In the same stage, a check for the latest solid milestone will be conducted, and the oldest milestone in the mapping will be pulled from the MilestoneSolidifier and passed forward to the SolidifyStage. In this way milestones will be processed within the same pipeline as all other transactions, and we reduce the three-thread process to a single stage handling all three tasks.
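To make the proposed routing easier to discuss, here is a minimal sketch of the received-stage checks and the holding space. It is not IRI code: `MilestoneFlowSketch`, `Route`, `routeReceived`, `holdValidMilestone` and `nextForSolidifyStage` are all hypothetical names, transactions are reduced to plain strings and ints, and milestone validation itself is left out. It also assumes that a milestone stays in the mapping until the latest solid milestone index has caught up with it, which the proposal does not spell out.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Optional;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical sketch of the proposed flow; none of these names exist in IRI.
final class MilestoneFlowSketch {

    enum Route { MILESTONE_STAGE, REGULAR_PIPELINE }

    private final String coordinatorAddress;
    private final int latestSnapshotIndex;
    // Hashes of potential milestones that were already seen.
    private final Set<String> seenMilestoneHashes = new HashSet<>();
    // Temporary holding space: milestone index -> transaction hash, oldest first.
    private final TreeMap<Integer, String> pending = new TreeMap<>();

    MilestoneFlowSketch(String coordinatorAddress, int latestSnapshotIndex) {
        this.coordinatorAddress = coordinatorAddress;
        this.latestSnapshotIndex = latestSnapshotIndex;
    }

    // Received stage: address check, then seen check, then index range check.
    // In a real node the index would only be decoded after the first two checks.
    Route routeReceived(String txHash, String address, int milestoneIndex) {
        if (!coordinatorAddress.equals(address)) {
            return Route.REGULAR_PIPELINE;
        }
        if (!seenMilestoneHashes.add(txHash)) {
            return Route.REGULAR_PIPELINE; // already seen
        }
        if (milestoneIndex <= latestSnapshotIndex) {
            return Route.REGULAR_PIPELINE; // at or below the latest snapshot
        }
        return Route.MILESTONE_STAGE;
    }

    // Milestone stage: called once a milestone has passed validation.
    void holdValidMilestone(int index, String txHash) {
        pending.put(index, txHash);
    }

    // Drops milestones that are already solid, then returns the oldest one left
    // so it can be passed forward to the SolidifyStage.
    Optional<Map.Entry<Integer, String>> nextForSolidifyStage(int latestSolidMilestoneIndex) {
        pending.headMap(latestSolidMilestoneIndex, true).clear();
        return Optional.ofNullable(pending.firstEntry());
    }
}
```

Keying the mapping by index keeps the hash and index together from the moment a milestone is validated, which is the hash/index mapping the requirements ask for.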

Beyond the milestone side I would also like to investigate the potential of migrating the transaction requesting process away from the BroadcastStage and into its own stage so that we aren't dependent on broadcasting for transaction requesting, which in my opinion is a little hacky in its own way.

How do you handle out-of-order milestones? What do you do if a milestone was received and stored, and then the node was shut off, so the milestone is in the DB but never went through milestone processing?

> Beyond the milestone side I would also like to investigate the potential of migrating the transaction requesting process away from the BroadcastStage and into its own stage so that we aren't dependent on broadcasting for transaction requesting, which in my opinion is a little hacky in its own way.

This can be resolved by implementing the STING protocol that the Hornet people use. Since we need to make the nodes compatible, it should be the solution...

Updated diagram describing transaction flow

In this new flow the TransactionSolidifier queue will be populated from the MilestoneSolidifier rather than from the solidify stage. The solidify stage will instead verify the solidity of a transaction that has passed through the Received stage, and forward the transaction to broadcasting if it is solid. If the transaction is not solid, it will fetch a solid tip and forward that instead. This is to keep up the broadcast rate, as transaction requesting is still dependent upon broadcasts in the current protocol. This will change a bit when STING is implemented, and we should no longer need to forward tips for synchronisation due to the delineation between gossip transactions and request transactions.
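The forwarding rule for the solidify stage can be sketched as follows. This is only an illustration under assumptions: `SolidifyStageSketch` and `selectForBroadcast` are hypothetical names, the solidity check and the tip lookup are passed in as plain functions rather than real IRI services, and the case where no solid tip is available (modelled here as an empty result) is not covered by the proposal.

```java
import java.util.Optional;
import java.util.function.Predicate;
import java.util.function.Supplier;

// Hypothetical sketch; not the actual IRI SolidifyStage.
final class SolidifyStageSketch {

    // Returns the hash to hand to the broadcast stage: the received transaction
    // if it is solid, otherwise a solid tip, so the broadcast rate is kept up.
    static Optional<String> selectForBroadcast(String receivedHash,
                                               Predicate<String> isSolid,
                                               Supplier<String> solidTip) {
        if (isSolid.test(receivedHash)) {
            return Optional.of(receivedHash);
        }
        // Assumption: the supplier returns null when no solid tip is known.
        return Optional.ofNullable(solidTip.get());
    }
}
```

Once STING separates gossip from requests, the fallback branch is the part that should become unnecessary.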

Another thing to consider doing is to have milestone transactions fail to solidify in the event that a milestone object is not created for the transaction. This will allow the milestone transaction to be re-processed if re-received over time during a sync.
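One possible shape for that guard is sketched below. `MilestoneSolidityGuardSketch` and `maySolidify` are hypothetical names, and the set of hashes stands in for whatever lookup would tell the node that a milestone object exists in the DB.

```java
import java.util.Set;

// Hypothetical sketch; not IRI code.
final class MilestoneSolidityGuardSketch {

    // A milestone transaction is only allowed to become solid once a milestone
    // object exists for it, so it can be re-processed when it is re-received.
    static boolean maySolidify(String txHash,
                               boolean referencesAreSolid,
                               boolean isMilestoneTx,
                               Set<String> hashesWithMilestoneObject) {
        if (!referencesAreSolid) {
            return false;
        }
        return !isMilestoneTx || hashesWithMilestoneObject.contains(txHash);
    }
}
```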