Correct timestep for img2img initial noise addition

There seems to be difference between huggingface's diffusers and compvis's implementation for img2img as the wrong timestep is used for the additive noise for image-2-image.

Summary from the relevant PR raised by the HuggingFace team:

t+1is used as the timestep to add noise to the original image, but[0, ..., t]is used afterwards for the denoising process. We should however also usetwhen adding the noise to the original image. This can be quite easily verified by doing the following. Run a img2img with a small number of update steps and a very low strength because then differences between t and t+1 become quite clear.

From

torch.tensor([t_enc]).to(self.model.device)

to

torch.tensor([t_enc - 1]).to(self.model.device)

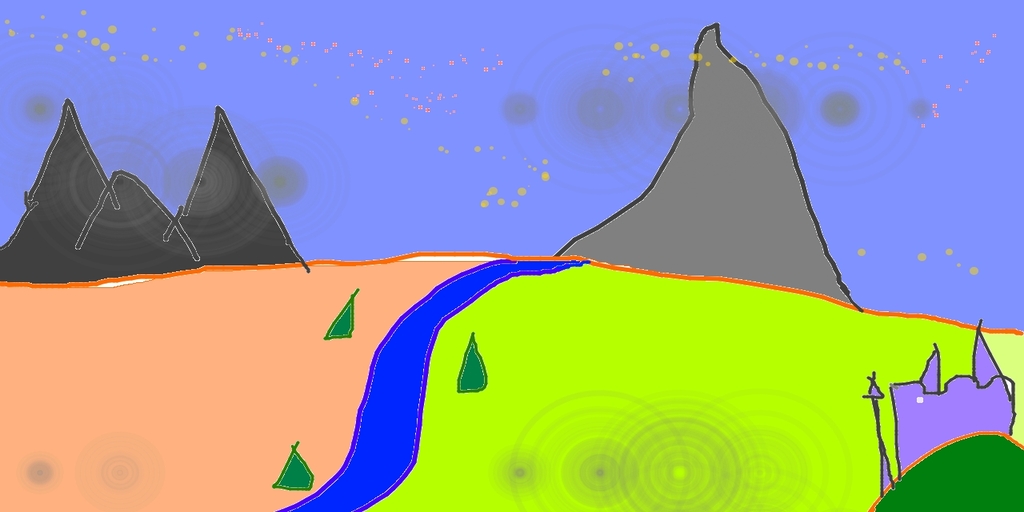

For example, using the following init_img and configuration:

prompt: A fantasy landscape, trending on artstationstrength: 0.1seed: 42sampler_name: DDIMsteps: 10

The existing code will generate an image with the following noise:

The new implementation will produce an image with lesser noise:

This PR affects only the img2img script. I realized that text2img2img and inpainting share the same code base but I am not sure if the same implementation should be applied as well.

Thanks so much for finding this problem. I will test it out the proposed solution later today.