[bug]: configuration script with --no-interactive does not download SD models, could use more improvements

Is there an existing issue for this?

- [X] I have searched the existing issues

OS

Linux

GPU

cuda

VRAM

16

What happened?

1. non-interactive configuration

The main issue is that a fully non-interactive configuration is not possible at the moment: at some point the CLI still requires user input, which makes it hard or impossible to configure an installation end-to-end unattended.

    $ scripts/configure_invokeai.py --no-interactive
    ...
    # (... only supporting models are downloaded, because the HF token was not provided)

    $ scripts/invoke.py
    # The script goes back into the interactive configuration mode, prompting to select the models to download.

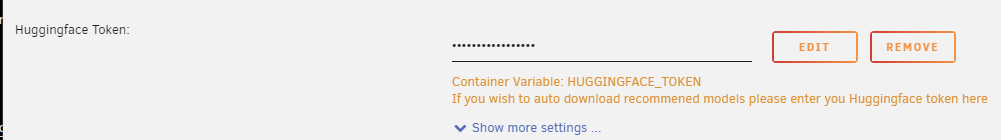

Suggestion: the script should recognize the HUGGINGFACE_TOKEN environment variable, or accept the token as a CLI argument. This will allow the configuration to complete fully unattended.
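A minimal sketch of that suggestion, assuming an `argparse`-based CLI. The `--hf-token` flag and the `parse_args`/`resolve_hf_token` helpers are hypothetical (not existing options of `configure_invokeai.py`), and `HUGGINGFACE_TOKEN` is the variable name proposed above. An explicit flag takes precedence over the environment, and an empty or whitespace-only value is treated as "no token".

```python
import argparse
import os
from typing import Optional, Sequence


def parse_args(argv: Optional[Sequence[str]] = None) -> argparse.Namespace:
    # Stand-in parser for illustration; only the options relevant here
    parser = argparse.ArgumentParser(description="InvokeAI configuration (sketch)")
    # Hypothetical flag so automation can pass the token on the command line
    parser.add_argument("--hf-token", dest="hf_token", default=None,
                        help="Hugging Face access token (falls back to $HUGGINGFACE_TOKEN)")
    parser.add_argument("--yes", dest="yes_to_all", action="store_true",
                        help="accept all defaults without prompting")
    return parser.parse_args(argv)


def resolve_hf_token(opt: argparse.Namespace) -> Optional[str]:
    """Return the token from --hf-token, else from the environment, else None."""
    token = opt.hf_token or os.environ.get("HUGGINGFACE_TOKEN", "")
    # An empty container variable counts as "no token"
    token = token.strip()
    return token or None
```

With this, `HUGGINGFACE_TOKEN=... scripts/configure_invokeai.py --yes` could complete unattended, and a missing token becomes a signal to skip the gated downloads rather than to prompt.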

2. Malformed prompt for output directory

(Trivial fix) When prompted for the output directory, the prompt string is missing its `f` prefix, so the `{default}` placeholder is printed literally:

    ** INITIALIZING INVOKEAI RUNTIME DIRECTORY **

    Select the default directory for image outputs [{default}]: /workspace/invokeai/outputs

should be:

    ** INITIALIZING INVOKEAI RUNTIME DIRECTORY **

    Select the default directory for image outputs [ /workspace/invokeai/outputs ]: /workspace/invokeai/outputs
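For illustration, the difference is only the `f` prefix. The variable name `default` comes from the placeholder in the prompt; `build_output_prompt` is a hypothetical helper used here just to make the fix testable.

```python
def build_output_prompt(default: str) -> str:
    # The f prefix makes Python substitute the value of `default`
    return f"Select the default directory for image outputs [{default}]: "


# Without the prefix, the braces are printed verbatim
broken = "Select the default directory for image outputs [{default}]: "
fixed = build_output_prompt("/workspace/invokeai/outputs")
print(broken)
print(fixed)
```

In the script itself this would be used as something like `input(build_output_prompt(default)) or default`, so that pressing Enter keeps the default.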

3. Config file location may cause it to be lost

The configuration file is saved in `~/.invokeai`. This breaks containerized setups that mount persistent storage outside the user's home directory, because the container user's home directory is lost when the container is recreated. The config file should be saved in the persistent directory together with the other configs. Or perhaps the user should be prompted for the location (but that creates a catch-22 and is probably over-engineering at this point).

Contact Details

discord: brodsky

@lstein I'd be keen to tackle all of these if the team desires

@ebr Please feel free to check out the latest version of the development branch and go to town with improvements to the configuration script. For what it's worth, the latest version of the script has a `--yes` option that accepts all the defaults and operates in a truly non-interactive fashion, including downloading the recommended models. The other issues you raise have not been addressed, and I would very much welcome a pull request from you.

> Suggestion: the script should recognize the HUGGINGFACE_TOKEN environment variable, or accept the token as a CLI argument. This will allow the configuration to complete fully unattended.

Having an environment variable for this would be helpful. It would also be nice if, when using `--yes` with an empty token string, the script skipped the Hugging Face models and just downloaded the supporting models, instead of exiting.

E.g. in Unraid, users creating the container can choose whether or not to enter a token.

Maybe something like this? I changed this section in `configure_invokeai.py` (my hacky little attempt at it; I know nothing about Python, this was just me playing around):


    import os
    import sys

    # recommended_datasets(), download_weight_datasets(), update_config_file(),
    # user_wants_to_download_weights(), select_datasets(), yes_or_no() and
    # authenticate() are defined elsewhere in configure_invokeai.py
    def download_weights(opt):
        # --yes: never prompt; read the token from the environment instead
        if opt.yes_to_all:
            models = recommended_datasets()
            # .get() returns None instead of raising KeyError when the variable is unset
            access_token = os.environ.get('HUGGINGFACE_TOKEN')
            if models and access_token:
                successfully_downloaded = download_weight_datasets(models, access_token)
                update_config_file(successfully_downloaded, opt)
            else:
                # Skip the SD weights rather than falling through to the interactive prompts below
                print('** Cannot download models because no Hugging Face access token could be found. Skipping...')
            return

        choice = user_wants_to_download_weights()
        if choice == 'recommended':
            models = recommended_datasets()
        elif choice == 'customized':
            models = select_datasets(choice)
            if models is None and yes_or_no('Quit?', default_yes=False):
                sys.exit(0)
        else:  # 'skip'
            return

        print('** LICENSE AGREEMENT FOR WEIGHT FILES **')
        access_token = authenticate()
        print('\n** DOWNLOADING WEIGHTS **')
        successfully_downloaded = download_weight_datasets(models, access_token)
        update_config_file(successfully_downloaded, opt)

> The configuration file is saved in `~/.invokeai`. This breaks in containerized setups, when running with persistent storage mounted outside of the user's home directory, because the home directory of the container user is lost. The config file should be saved in the persistent directory together with other configs. Or perhaps the user should be prompted for the location (but that creates a catch-22 and is probably over-engineering at this point).

Is there any reason this file can't live in the InvokeAI root directory by default, or be looked up there when `--root` is used?

E.g. on my Unraid dev Docker container I am using `--root="/userfiles/"`; it would be nice for the `.invokeai` file to live there.
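A sketch of the lookup order being proposed, assuming the file keeps the name `.invokeai`: prefer a copy inside the `--root` directory, keep honouring an existing `~/.invokeai` for older installs, and otherwise create the file under the root. `find_init_file` is a hypothetical helper and this precedence is an assumption for illustration, not the script's current behaviour.

```python
from pathlib import Path
from typing import Optional

INIT_FILE_NAME = ".invokeai"


def find_init_file(root: Optional[str] = None, home: Optional[Path] = None) -> Path:
    """Return the config file to read/write, preferring the runtime root."""
    home = home if home is not None else Path.home()
    legacy = home / INIT_FILE_NAME
    if root is None:
        # No --root given: behave as today
        return legacy
    candidate = Path(root).expanduser() / INIT_FILE_NAME
    # Prefer the copy stored next to models/outputs on persistent storage
    if candidate.exists():
        return candidate
    # Existing home-directory file from an older install
    if legacy.exists():
        return legacy
    # Fresh install: create it under the root so it survives container recreation
    return candidate
```

With `--root="/userfiles/"`, a fresh container would then read and write `/userfiles/.invokeai`.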

@ebr, just confirming that you'll be addressing the issues that @mickr777 is bringing up?

An additional thought I had is a "models directory autoscan" function, which scans an existing models directory and automatically adds any `.ckpt` files it recognizes to `models.yaml`. This addresses the case of a user who already has many model files downloaded and needs to reconstruct their `models.yaml` file.
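A rough sketch of such an autoscan, assuming the known models can be reduced to a mapping from checkpoint filename to model name (in practice this would presumably come from `INITIAL_MODELS.yaml`, which is not parsed here). The `KNOWN_CHECKPOINTS` entries, the `autoscan` helper, and the `weights` key in the generated stanzas are illustrative assumptions, not the real `models.yaml` schema.

```python
from pathlib import Path
from typing import Dict

# Hypothetical example entries; a real scan would derive these from INITIAL_MODELS.yaml
KNOWN_CHECKPOINTS: Dict[str, str] = {
    "v1-5-pruned-emaonly.ckpt": "stable-diffusion-1.5",
    "sd-v1-4.ckpt": "stable-diffusion-1.4",
}


def autoscan(models_dir: Path, known: Dict[str, str] = KNOWN_CHECKPOINTS) -> Dict[str, dict]:
    """Return models.yaml-style stanzas for every recognised .ckpt under models_dir."""
    found: Dict[str, dict] = {}
    # Walk subdirectories too; sorting makes the result deterministic
    for ckpt in sorted(models_dir.rglob("*.ckpt")):
        name = known.get(ckpt.name)
        if name is None:
            # Unknown checkpoint: leave it for the user to add manually
            continue
        # Keep the first match if the same file appears in several places
        found.setdefault(name, {"weights": str(ckpt)})
    return found
```

The result could then be merged into, or written out as, `models.yaml` depending on how the questions below are answered.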

I will work on this today, yes. I agree with @mickr777's suggestions, was thinking along the same exact lines. :)

And :100: to the autoscan idea, I can certainly do that too. For clarity:

- should `INITIAL_MODELS.yaml` serve as the ground truth for what it knows about?
- do we want to update an existing `models.yaml` file, or only generate one if none exists?
- if we do update an existing one, should we remove any model references to non-existent (e.g. deleted) `.ckpt` files? On one hand, this prevents a `FileNotFoundError` and an immediate drop into config mode (which is a blocker when running the web UI). On the other, it would modify the user's potentially customized config without warning. Maybe they deleted the `.ckpt` by accident...

Would appreciate your input - thx
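For the last question, one possible middle ground (a sketch, not a decision) is to partition the entries instead of deleting anything: the web UI starts with the models whose files exist, and the missing ones are reported. The entry layout, a `weights` key holding a checkpoint path, is an assumption, and `split_missing` is a hypothetical helper.

```python
from pathlib import Path
from typing import Dict, Tuple


def split_missing(models: Dict[str, dict]) -> Tuple[Dict[str, dict], Dict[str, dict]]:
    """Partition models.yaml-style entries by whether their weights file exists."""
    present: Dict[str, dict] = {}
    missing: Dict[str, dict] = {}
    for name, entry in models.items():
        weights = entry.get("weights")
        # Entries without a `weights` path are kept as present
        if weights is None or Path(weights).expanduser().exists():
            present[name] = entry
        else:
            missing[name] = entry
    return present, missing
```

The loader could skip and warn about everything in `missing` at startup while leaving `models.yaml` itself untouched, so an accidentally deleted `.ckpt` can simply be restored.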