DiCE


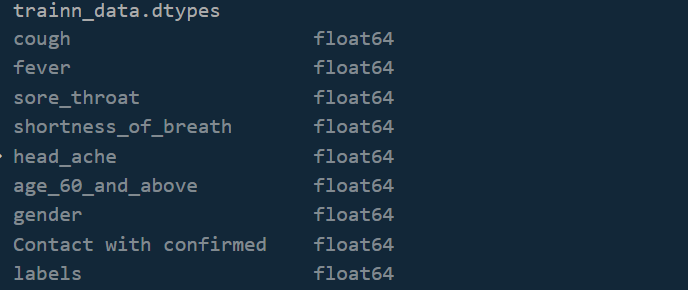

`DataFrame.dtypes for data must be int, float or bool` error even if the data is float

The code was running fine last week, but today, when I run the same code, I run into this error:

even though the data is of float type.

I can't seem to fix this error.

Does anyone know how to solve it?

@ShadiKhoury If all your features are continuous, why don't you specify them in the `dicedata` initialization?

Can you try passing all your features as a Python list to `continuous_features` in the `dicedata` initialization?
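A minimal sketch of that suggestion, assuming the training frame still contains the outcome column (called `labels` in the code later in this thread): collect every column except the outcome into a plain Python list and pass it as `continuous_features`. The helper name `all_feature_names` and the toy data are hypothetical; the `dice_ml.Data` call is shown only as a comment so the snippet runs with pandas alone.

```python
import pandas as pd


def all_feature_names(df: pd.DataFrame, outcome_name: str) -> list:
    """Return every column name except the outcome, as a plain Python list."""
    return [col for col in df.columns if col != outcome_name]


# Toy frame (hypothetical): binary features stored as floats, plus the outcome.
train = pd.DataFrame(
    {"f1": [0.0, 1.0, 1.0], "f2": [1.0, 0.0, 1.0], "labels": [0, 1, 1]}
)
features = all_feature_names(train, "labels")
print(features)  # ['f1', 'f2']

# With dice_ml installed, the list would then be passed as:
# dicedata = dice_ml.Data(dataframe=train,
#                         continuous_features=features,
#                         outcome_name="labels")
```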

Regards,

@ShadiKhoury Does the above suggestion help you make progress on generating counterfactuals? If so, maybe we can close this issue.

Regards,

My features are all binary, stored as floats (either 0.0 or 1.0). Even if I convert them all to int, it still gives the same error. And as I stated before, this issue was not present when running DiCE on the same dataset; it's a new bug.
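Converting the input to `int` is unlikely to change anything, under the following assumption about DiCE internals: features *not* listed in `continuous_features` are treated as categorical and their values handled as strings, so the frame that eventually reaches the model's `predict_proba` has non-numeric columns, which LightGBM rejects with exactly this message. The sketch below does not call DiCE or LightGBM; it only mirrors the "int, float or bool" rule from the error with a hypothetical helper, `non_lgbm_dtypes`, and simulates the assumed string conversion with pandas.

```python
import pandas as pd
from pandas.api.types import is_bool_dtype, is_float_dtype, is_integer_dtype


def non_lgbm_dtypes(df: pd.DataFrame) -> list:
    """Columns whose dtype is not int, float or bool (the rule quoted in the error)."""
    return [
        col
        for col in df.columns
        if not (is_integer_dtype(df[col]) or is_float_dtype(df[col]) or is_bool_dtype(df[col]))
    ]


# Binary features stored as 0.0 / 1.0 floats pass the check.
query = pd.DataFrame({"f1": [0.0], "f2": [1.0]})
print(non_lgbm_dtypes(query))  # []

# Casting to int also passes: the input dtype was never the problem.
print(non_lgbm_dtypes(query.astype(int)))  # []

# Assumed DiCE behaviour for categorical features: values become strings,
# giving non-numeric (object/string) columns that fail the check.
print(non_lgbm_dtypes(query.astype(str)))  # ['f1', 'f2']
```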

@ShadiKhoury Can you share a sample dataset and notebook? We're not sure we can help triage this issue without a local repro.

Regards,

```python
def interpretation(trian_data, test_data, trian_labels, test_labels, model,
                   feature_imprtance_type="dice_local_cf"):
    # imports
    import json

    import lightgbm as lgb
    import matplotlib.pyplot as plt
    import pandas as pd
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler

    # DiCE imports
    import dice_ml

    pd.options.display.max_columns = 999
    plt.style.use('ggplot')

    #########
    # importing from CSV to pandas (the arguments are file paths)
    trian_data = pd.read_csv(trian_data)
    test_data = pd.read_csv(test_data)
    trian_labels = pd.read_csv(trian_labels)
    test_labels = pd.read_csv(test_labels)

    col_names = trian_data.columns
    # loop to change each column to float type
    for col in col_names:
        trian_data[col] = trian_data[col].astype('float')
        test_data[col] = test_data[col].astype('float')

    # selecting rows with no NaN values (labels are the first CSV column)
    new_train = trian_data
    new_train["Result"] = trian_labels.iloc[:, 0]
    new_test = test_data
    new_test["Result"] = test_labels.iloc[:, 0]
    trian_data_nonull = new_train.dropna()
    test_data_nonull = new_test.dropna()
    # reset label indices too, so they stay aligned with the reset feature frames
    trian_label_nonull = trian_data_nonull["Result"].reset_index(drop=True)
    test_label_nonull = test_data_nonull["Result"].reset_index(drop=True)
    trian_data_nonull = trian_data_nonull.drop(columns="Result").reset_index(drop=True)
    test_data_nonull = test_data_nonull.drop(columns="Result").reset_index(drop=True)

    # Scaling using the StandardScaler (fit on the training data only)
    sc_1 = StandardScaler()
    X_1 = pd.DataFrame(sc_1.fit_transform(trian_data_nonull), columns=col_names)
    X_train, X_val, y_train, y_val = train_test_split(
        X_1, trian_label_nonull, test_size=0.25, random_state=0)  # 0.25 x 0.8 = 0.2
    test_scale_data = pd.DataFrame(sc_1.transform(test_data_nonull), columns=col_names)

    if model == "lgbm":
        # Building the model
        lgbm_clf = lgb.LGBMClassifier(
            num_leaves=20,
            min_data_in_leaf=4,
            feature_fraction=0.2,
            bagging_fraction=0.8,
            bagging_freq=5,
            learning_rate=0.05,
            verbose=1,
            num_boost_round=603,
            early_stopping_rounds=5,
            metric="auc",
            objective='binary',
        )
        # Fitting the model
        lgbm_clf.fit(
            X_train,
            y_train,
            eval_set=[(X_val, y_val)],
            eval_metric="auc",
        )
        preds = lgbm_clf.predict_proba(test_scale_data, num_iteration=100)
        predict_model = lgbm_clf

    # DiCE local feature importance with counterfactuals
    if feature_imprtance_type == "dice_local_cf":
        trainn_data = trian_data_nonull.copy()
        trainn_data["labels"] = trian_label_nonull
        # continuous_features=[] makes DiCE treat every feature as categorical
        dicedata = dice_ml.Data(dataframe=trainn_data, continuous_features=[], outcome_name="labels")
        # Using sklearn backend
        m = dice_ml.Model(model=predict_model, backend="sklearn", model_type='classifier')
        # Using method="random" for generating CFs
        exp_dice = dice_ml.Dice(dicedata, m, method="random")
        query_instance = test_data_nonull[4:5]
        e1 = exp_dice.generate_counterfactuals(query_instance, total_CFs=10,
                                               desired_class="opposite",
                                               verbose=False,
                                               features_to_vary="all")
        # Local feature importance scores with the counterfactuals list
        imp = exp_dice.local_feature_importance(query_instance, cf_examples_list=e1.cf_examples_list)
        # Convert the {feature: score} dict to a list of (feature, score) pairs
        data_imp = list(imp.local_importance[0].items())
        importance_df_dice_local_cf = pd.DataFrame(sorted(data_imp), columns=['name', 'importance'])
        importance_df_dice_local_cf = importance_df_dice_local_cf.sort_values('importance', ascending=False)
        # Plotting
        importance_df_dice_local_cf.plot.barh(y="importance", x="name", color="#FF6103")
        plt.gca().invert_yaxis()
        plt.tight_layout()
        plt.savefig("dice_local_cf_importance.pdf")
        # plt.show()
        # js_dice_lc_cf = importance_df_dice_local_cf.to_json(orient="values")
        # parsed_3 = json.loads(js_dice_lc_cf)
        # JSON
        importance_dict_dicecflo = importance_df_dice_local_cf.set_index('name').T.to_dict('records')[0]
        with open('Dice_local_cf_Feature_Importance.json', 'w') as outfile:
            json.dump(importance_dict_dicecflo, outfile)
        return importance_dict_dicecflo
```

Was this resolved?

@ShadiKhoury Can you set `continuous_features` to all your training feature names in the line `dicedata = dice_ml.Data(dataframe=trainn_data, continuous_features=[], outcome_name="labels")` and give it another try?

When I set `continuous_features` to all the features in my dataset, it worked like before 👍. For now that's okay, but doesn't this defeat the purpose of this input, since my features aren't continuous at all?

Maybe a better name would be `numeric_features` rather than `continuous_features`. Regardless, putting numeric features in `continuous_features` should fix this for anyone facing the issue.
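If a training frame mixes numeric columns with genuinely text-valued categorical ones, one way to apply this workaround without listing names by hand is to let pandas pick the numeric columns. A minimal sketch using `DataFrame.select_dtypes`; the helper name `numeric_feature_names` and the toy columns are hypothetical, and any text columns are simply left out of the list so DiCE still treats them as categorical.

```python
import pandas as pd


def numeric_feature_names(df: pd.DataFrame, outcome_name: str) -> list:
    """Numeric (int, float) and bool columns other than the outcome, as a list."""
    # "number" does not cover bool, so bool is included explicitly.
    numeric = df.select_dtypes(include=["number", "bool"]).columns
    return [col for col in numeric if col != outcome_name]


train = pd.DataFrame(
    {
        "smoker": [0.0, 1.0, 0.0],  # binary, stored as float
        "age": [34, 51, 27],        # integer
        "city": ["A", "B", "A"],    # text, stays categorical
        "labels": [0, 1, 0],        # outcome column
    }
)
continuous = numeric_feature_names(train, "labels")
print(continuous)  # ['smoker', 'age']
```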