vitest

feat: benchmark

fix #917

Adds a one-level benchmark interface to support benchmark tests.

- Add the benchmark library as a dependency.

- Add a benchmark task runner.

Deploy Preview for vitest-dev ready!

Built without sensitive environment variables

| Name | Link |
|---|---|
| Latest commit | facaf8928e4e02cb4f046d9f9d20e5f812d6d9b7 |
| Latest deploy log | https://app.netlify.com/sites/vitest-dev/deploys/629f5bc82c6ad70007dd3853 |
| Deploy Preview | https://deploy-preview-1029--vitest-dev.netlify.app |

Let's discuss the design in #917 first.

About benchmark.js: if we really end up using it, I could try to contact the author and see whether our team could be granted access to help maintain it.

It seems we need to put benchmarks in separate files (xxxx.bench.js) and add a new command to run them 👏

I think we can now use tinybench by @Uzlopak?

Yes, it seems to have the same API as benchmark.js.

Published version 1.0.2 of tinybench.

😁

Created a PR against github-action-benchmark. Does the color have some effect on the stdout and parsing by benchmark.js?

https://github.com/benchmark-action/github-action-benchmark/pull/126

I realized that the async functionality is not working well.

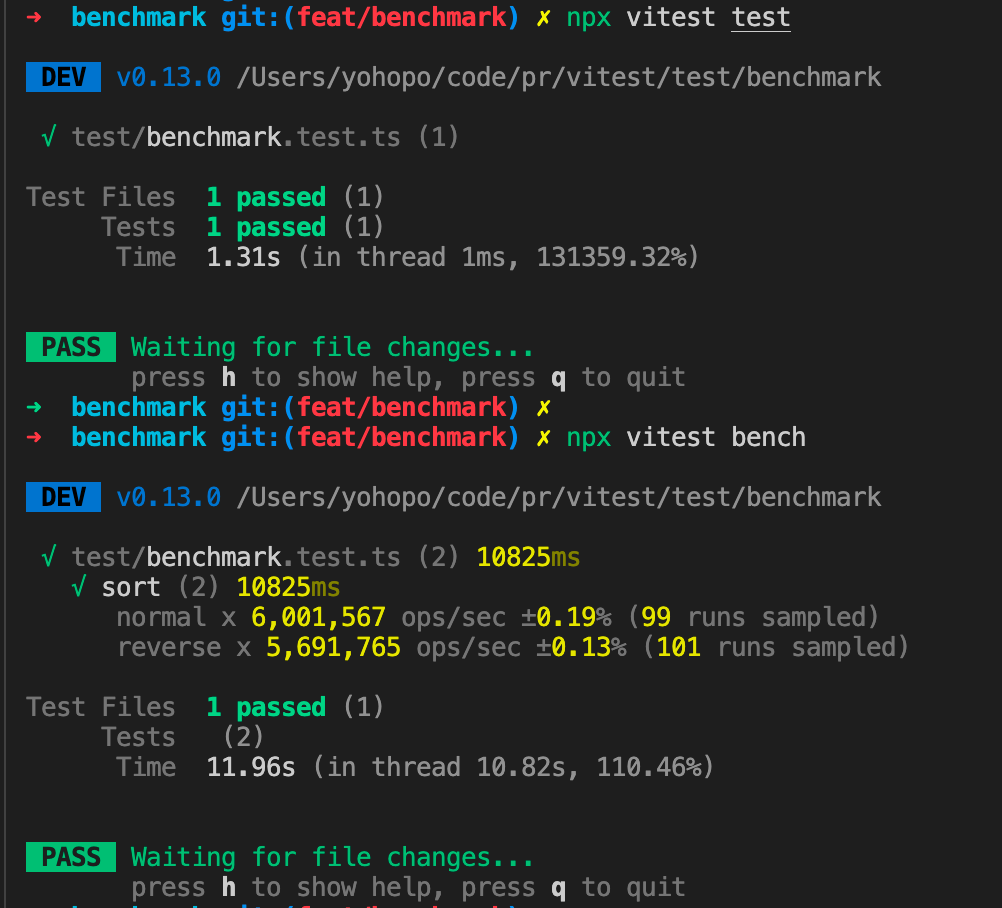

Hmm, looking at the screenshot, average time per run is not mentioned, there is no breakdown by percentile, and which test was fastest is not mentioned (this can be important if you're comparing 4+ things). Can that be added?

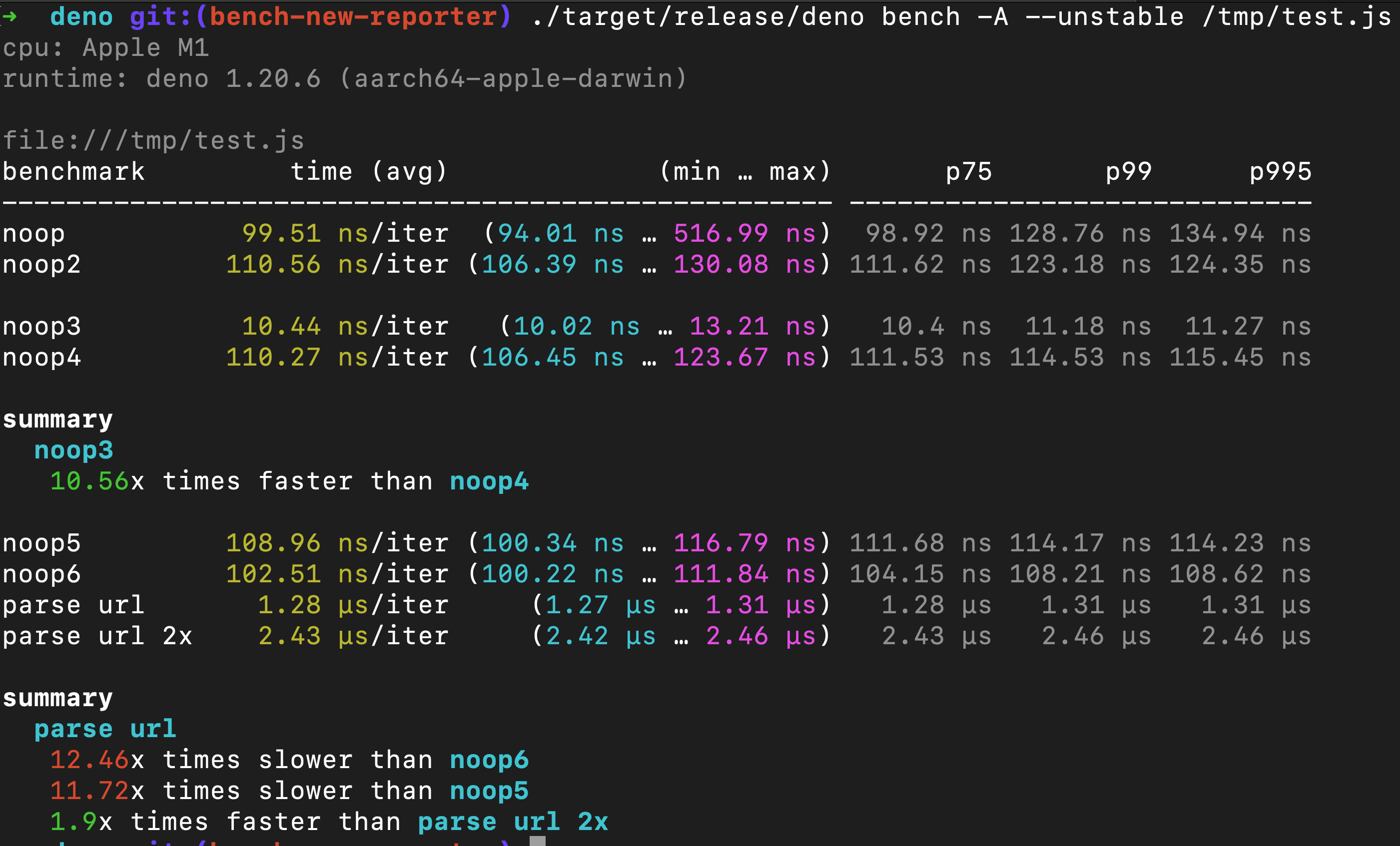

I strongly suggest taking a look at the mitata NPM package before this PR gets merged. This is how it looks integrated into Deno (it's what Deno uses for the deno bench command):

I guess we should log an experimental warning when people use this command, stating that the API might change in the future.

@mangs hi, why do we need a breakdown by percentile? The log currently shows the speed in ops/sec; does it also need to show the average time per run? The log is just the plain output of the benchmark.js library.

@Uzlopak https://github.com/evanwashere/mitata/blob/master/src/lib.mjs#L12-L21 It seems to sort the samples and report sample[75p], sample[99p], sample[99.5p], and sample[99.9p]; min and max are sample[0] and sample[-1].

Hi @poyoho. The percentiles show outliers which can be important when tracking down performance issues which can get masked by averages. This is a very contrived example, but if you have 11 runs showing an average of 5 seconds, you can get that result with five items being zero, five 10, and one 5; percentiles would make that very clear. I think the function you found would do the trick and would be great! Thank you for considering my idea. As a big fan of what this project is doing, I don't mean to be a bother, I just want this feature to be as good as it can be. 😀
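To make the point above concrete, here is a minimal sketch that summarizes the contrived 11-run example next to a perfectly steady series. It assumes a simple nearest-rank percentile over sorted samples; `percentile` and `summarize` are hypothetical helper names for illustration, not the code used by mitata, tinybench, or benchmark.js.

```typescript
// Nearest-rank percentile over an ascending-sorted sample array.
// (Assumption: nearest-rank is used here only for illustration.)
function percentile(sorted: number[], p: number): number {
  if (sorted.length === 0) throw new Error("no samples");
  const rank = Math.ceil((p / 100) * sorted.length);
  const index = Math.min(sorted.length - 1, Math.max(0, rank - 1));
  return sorted[index];
}

// Hypothetical summary helper: mean plus a few order statistics.
function summarize(samples: number[]) {
  const sorted = [...samples].sort((a, b) => a - b);
  const mean = sorted.reduce((sum, x) => sum + x, 0) / sorted.length;
  return {
    mean,
    min: sorted[0],
    p50: percentile(sorted, 50),
    p75: percentile(sorted, 75),
    p99: percentile(sorted, 99),
    max: sorted[sorted.length - 1],
  };
}

// The contrived case from the comment: five 0 s runs, one 5 s run, five 10 s runs.
const bimodal = [0, 0, 0, 0, 0, 5, 10, 10, 10, 10, 10];
// A steady series with the same average.
const steady = Array.from({ length: 11 }, () => 5);

const bimodalStats = summarize(bimodal);
const steadyStats = summarize(steady);
console.log(bimodalStats);
console.log(steadyStats);
```

Both series report a mean of 5, but min, p75, and max differ (0, 10, 10 versus 5, 5, 5), which is the kind of spread an average alone hides.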

Sorry, I noticed that the rebase brought in a lot of @jest packages. Is this intentional?

@sheremet-va after I run pnpm i, it updates pnpm-lock.yaml. I don't know why, but it seems to remove a lot of @jest packages?

I think the log already contains a lot of data, so the detailed data should be reported another way. I'd like to write the pXX (percentile) data to a file. 😁

Thank you for the link to the mitata repo. I analyzed the code and, to be honest, I think it is much cleaner than tinybench/benchmark.js can ever be. Maybe we should seriously consider switching to mitata. Since it is integrated into Deno, I also assume its development will have much more traction than tinybench's ever will.

@evanwashere

Maybe you want to add your thoughts to this feature?

In your case, mitata's lib has a very simple API for measuring a function, leaving the rest to you:

```js
import { sync, async } from 'mitata/src/lib.mjs';

const time = 500; // in ms

const noop_stats = sync(time, () => {});
const async_noop_stats = await async(time, async () => {});

console.log(noop_stats, async_noop_stats);
```

But you are free to reuse the rest of mitata's infrastructure for formatting and reporting.

@Uzlopak so can I switch from tinybench to mitata? To be honest, I like tinybench more because it can already share the running events, though I think that would be easy to do in mitata too. 😁

Do we have news on this PR? @Aslemammad you said, you wanted to take a look?

I'll take a look. I'm trying to implement a typesafe tinybench with a modern API (based on the current one), and I'll see if I can make something out of it!

@poyoho could you please let me know what you like about other testing libs over tinybench? I'd like to add those features in the new huge refactor.

@Aslemammad sure! I think tinybench could export some runtime hooks, so we can make the log look nicer and show more runtime info. mitata seems to just run the function and show the benchmark info.

tinybench can calculate many benchmark results based on its exposed hooks. I think we could even build something like mitata's output in vitest. It looks very easy 😁

@poyoho That's great, let's have a chat about it then. Here's my Discord: Aslemammad#6670

@poyoho The latest version of tinybench is 2.x.x and the 1.x.x is for the previous version which was based on benchmark.js.

I know. Rebase first 😁

@Aslemammad 🎉🎉🎉

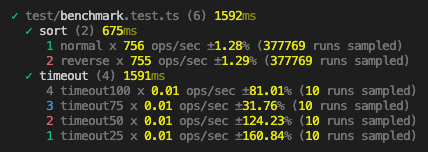

@poyoho Happy to see this result! Thank you so much for the work. How about changing the benchmark function name to bench (in benchmark.test.ts)? That'd be an easier name in my opinion.

benchmark -> bench

Just some thoughts for improvements:

Consider running each benchmark in a separate process. So maybe it makes sense to recommend one benchmark per .bench.js/ts file.

Also maybe consider adding a "benchmark normalizer". E.g. first run a benchmark that calculates Fibonacci numbers and use its ops/s as 100%. That way, benchmarks run on different machines become more comparable.
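A rough sketch of the normalizer idea, under stated assumptions: `fib`, `measureOpsPerSec`, and `normalize` are hypothetical names, the timing loop is a naive stand-in for a real benchmark library, and `fib(30)` is an arbitrary choice of reference workload.

```typescript
// Iterative Fibonacci used as the (hypothetical) reference workload.
function fib(n: number): number {
  let a = 0;
  let b = 1;
  for (let i = 0; i < n; i++) {
    [a, b] = [b, a + b];
  }
  return a;
}

// Run `fn` repeatedly for roughly `durationMs` and return operations per second.
// Naive loop for illustration; a real runner would add warmup and statistics.
function measureOpsPerSec(fn: () => unknown, durationMs = 100): number {
  const start = performance.now();
  let runs = 0;
  let elapsed = 0;
  do {
    fn();
    runs++;
    elapsed = performance.now() - start;
  } while (elapsed < durationMs);
  return (runs * 1000) / elapsed;
}

// Express a result as a percentage of the reference workload's ops/s.
function normalize(opsPerSec: number, referenceOpsPerSec: number): number {
  return (opsPerSec / referenceOpsPerSec) * 100;
}

// Measure the reference first, then report a candidate relative to it.
const reference = measureOpsPerSec(() => fib(30));
const candidate = measureOpsPerSec(() => [3, 1, 2].sort((x, y) => x - y));
console.log(`candidate: ${normalize(candidate, reference).toFixed(1)}% of reference`);
```

The percentage is only as stable as the reference measurement itself, so a real implementation would probably want to run the reference in the same process and under the same conditions as the benchmarks it normalizes.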