flow

flow copied to clipboard

flow copied to clipboard

Sometimes client(s) enter a UI Resync loop; Resync every 6s

Description of the bug

Since a few weeks we notice abnormal traffic to our web application, that is usually caused by a client that is resynchronizing the complete website in 6s intervals.

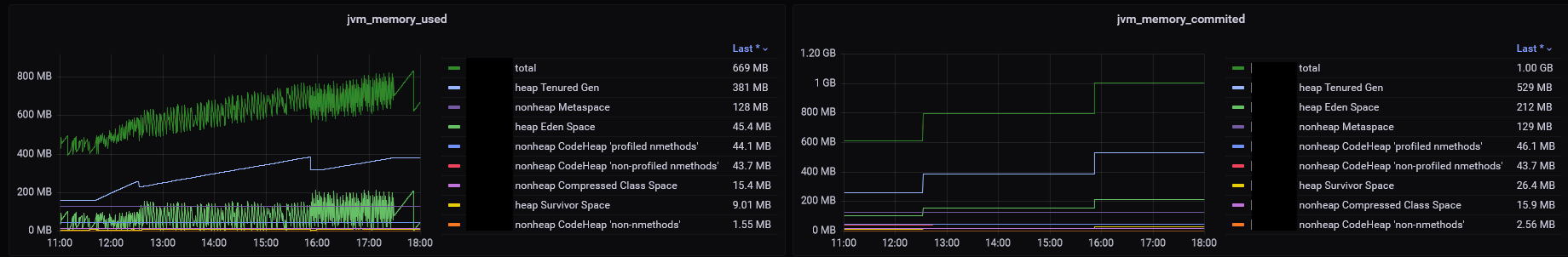

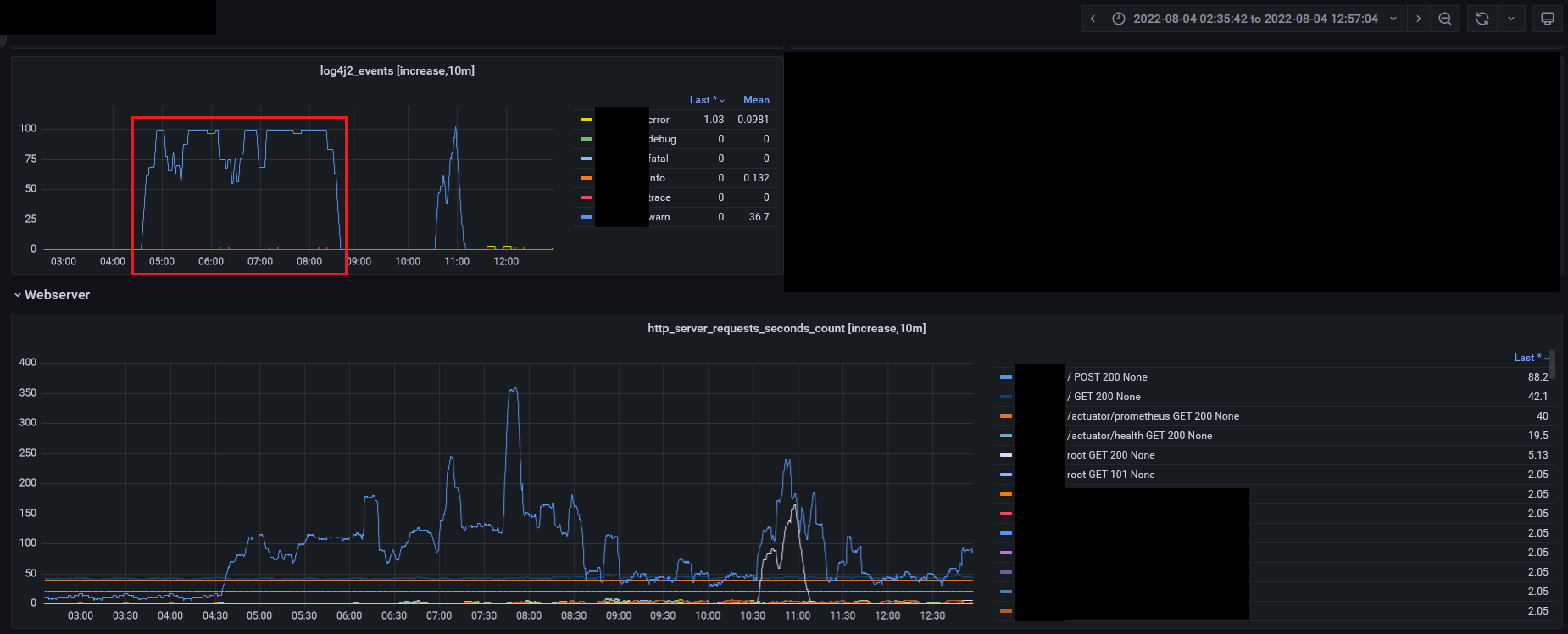

Our monitoring noticed that here:

First occurrence

Last occurrence (today)

Some key aspects of the problem:

- There are exactly 100requests in 10min → a request every 6s

- We use a PUSH connection (Automatic -> Should use Websockets), maybe the problem is somehow related to that.

- Multiple or a single client have that problem, however they usually fix themself after a few hours likely when the user closes his browser, when the session is closed or the page is reloaded

- The traffic also causes abnormal GC/RAM activity.

- The following entries are found in the log hundreds of times:

Jun 21 11:43:47 ip-10-1-0-60 6fca0fd80ea3[700]: 2022-06-21 11:43:47 WARN [http-nio-8080-exec-12] [nication.ServerRpcHandler] Resynchronizing UI by client's request. A network message was lost before reaching the client and the client is reloading the full UI state. This typically happens because of a bad network connection with packet loss or because of some part of the network infrastructure (load bala...

Jun 21 11:43:53 ip-10-1-0-60 6fca0fd80ea3[700]: 2022-06-21 11:43:53 WARN [http-nio-8080-exec-19] [nication.ServerRpcHandler] Resynchronizing UI by client's request. A network message was lost before reaching the client and the client is reloading the full UI state. This typically happens because of a bad network connection with packet loss or because of some part of the network infrastructure (load bala...

Jun 21 11:43:59 ip-10-1-0-60 6fca0fd80ea3[700]: 2022-06-21 11:43:59 WARN [http-nio-8080-exec-21] [nication.ServerRpcHandler] Resynchronizing UI by client's request. A network message was lost before reaching the client and the client is reloading the full UI state. This typically happens because of a bad network connection with packet loss or because of some part of the network infrastructure (load bala...

Jun 21 11:44:05 ip-10-1-0-60 6fca0fd80ea3[700]: 2022-06-21 11:44:05 WARN [http-nio-8080-exec-21] [nication.ServerRpcHandler] Resynchronizing UI by client's request. A network message was lost before reaching the client and the client is reloading the full UI state. This typically happens because of a bad network connection with packet loss or because of some part of the network infrastructure (load bala..

- This is also seen hundreds of times in the nginx-logs and looks like this

1.2.3.4 - - [27/Jun/2022:11:28:: +0200] "POST /?v-r=uidl&v-uiId=0 HTTP/1.1" 200 74265 "https://xxxdomainxxx/home/2022-06-27" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36" "-"

1.2.3.4 - - [27/Jun/2022:11:28:: +0200] "POST /?v-r=uidl&v-uiId=0 HTTP/1.1" 200 74265 "https://xxxdomainxxx/home/2022-06-27" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36" "-"

...

2.3.4.5 - - [06/Jul/2022:05:52:33 +0200] "POST /?v-r=uidl&v-uiId=1 HTTP/1.1" 200 100484 "https://xxxdomainxxx/home/2022-07-05" "Mozilla/5.0 (X11; Linux x86_64; rv:102.0) Gecko/20100101 Firefox/102.0" "-"

The affected application is a Spring Boot 2.7.1 + Vaadin 23.1.2 app

with the following relevant web-config

application.yml

...

server:

servlet:

session:

cookie:

http-only: true

secure: true

...

which is running behind an nginx proxy

nginx config

/etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

# ...

access_log /var/log/nginx/access.log main;

sendfile on;

server_tokens off;

keepalive_timeout 65;

#gzip on;

include /etc/nginx/conf.d/*.conf;

include /etc/nginx/sites-enabled/*;

}

/etc/nginx/sites-available/xxxdomainxxx

upstream xxx {

server 127.0.0.1:8081;

}

server {

if ($server_protocol = HTTP/1.0) { return 444; }

server_name xxxdomainxxx;

listen 80;

if ($host = xxxdomainxxx) {

return 301 https://$host$request_uri;

}

return 404;

}

server {

if ($server_protocol = HTTP/1.0) { return 444; }

server_name xxxdomainxxx;

listen 443 ssl;

ssl_certificate /etc/letsencrypt/live/xxxdomainxxx/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/xxxdomainxxx/privkey.pem;

include /etc/letsencrypt/options-ssl-nginx.conf;

ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;

proxy_buffers 16 64k;

proxy_buffer_size 128k;

proxy_connect_timeout 7d;

proxy_send_timeout 7d;

proxy_read_timeout 7d;

add_header Strict-Transport-Security "max-age=63072000; includeSubdomains";

location / {

proxy_pass http://xxx/;

proxy_next_upstream error timeout invalid_header http_500 http_502 http_503 http_504;

proxy_redirect off;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "Upgrade";

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto https;

}

location /.well-known {

root /usr/share/nginx;

}

}

both are running on an AWS machine.

Today I also manged to aquire some client side logs from someone who had their browser open through the night:

console log

InstallTrigger sollte nicht mehr verwendet werden und wird in Zukunft entfernt. constants.js:50:14

Die Verbindung zu wss://xxxdomainxxx/vaadinServlet/?v-r=push&v-uiId=1&v-pushId=61709ea3-9efd-45c8-83df-2b93fc548569&X-Atmosphere-tracking-id=0&X-Atmosphere-Framework=3.1.2-javascript&X-Atmosphere-Transport=websocket&X-Atmosphere-TrackMessageSize=true&Content-Type=application/json;%20charset=UTF-8&X-atmo-protocol=true wurde unterbrochen, während die Seite geladen wurde. vaadinPush-min.js:1:12680

// ↑ This means that the browser lost the wss-Connection somehow. However as it occurred sometime in the night there is no timestamp.

...

Wed Jul 06 2022 07:57:57 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 07:57:57 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 07:57:57 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 07:57:57 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 07:57:57 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 07:57:57 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

... Same thing a few hundred times more

... (Enabled more logging options, like XHR)

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:34 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

XHRPOSThttps://xxxdomainxxx/?v-r=uidl&v-uiId=1

[HTTP/1.1 200 194ms]

Wed Jul 06 2022 08:04:39 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:39 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:40 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:40 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:40 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:40 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:40 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:40 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:40 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: websocket.onmessage vaadinPush-min.js:1:41460

Wed Jul 06 2022 08:04:40 GMT+0200 (Mitteleuropäische Sommerzeit) Atmosphere: Firing onMessage 2 vaadinPush-min.js:1:41460

XHRPOSThttps://xxxdomainxxx/?v-r=uidl&v-uiId=1

[HTTP/1.1 200 171ms]

... (the same thing a few hundred times)

Network analysis HAR-file: xxxREMOVEDxxx_Archive [22-07-06 08-05-26].zip

Expected behavior

No abnormal resyncs.

Either resync and be fine with it or abort after X retries and reload the complete page. If the websocket connection failed create a new one or reload the complete page.

Minimal reproducible example

I was not able to reproduce the bug myself locally :/

Versions

- Vaadin / Flow version: 23.1.2

- Java version: OpenJDK 64-Bit Server VM Temurin-17.0.3+7 (build 17.0.3+7, mixed mode)

- OS version: Server: Alpine Linux

- Browser version (if applicable): happens on all browsers

- Application Server (if applicable): none/Spring Boot 2.7.1

- IDE (if applicable): -

I can see from the HAR that the server responses have the syncId each time 6 greater than the client request had.

This puts the resync message to a waiting queue on the client and the client remains We are waiting for an earlier message

The clientId seems to be sent each time as expected. So it would seem that there is missing something that would reset the syncId so that it would start matching. @Legioth would you have a better insight how the syncId could get this much out of sync?

From what I can see in the code, the server-side sync id value is incremented once every time ServerRpcHandler::handleRpc is run for a given UI instance. Since this is consistently happening exactly 6 times per request originated by the client, I would guess that this is a "deterministic" issue rather than something related to threading.

It's not a good guess, but my best guess is that there's something that causes the load balancer to dispatch the same request 6 times to the same server. If you have access to request logs both for nginx and the application server, they you could maybe check if this seems to be the case?

Thank you for having a look at this.

It's not a good guess, but my best guess is that there's something that causes the load balancer to dispatch the same request 6 times to the same server.

I'm not sure what you mean with "load balancer", but we have no server side load balancing setup. Requests go straight to the nginx (one instance) and from there to the app (also one instance).

If you have access to request logs both for nginx and the application server, they you could maybe check if this seems to be the case?

We aren't logging the traffic contents due to privacy reasons, the amount of data and because they are (partial) encrypted with HTTPS. I only have metadata available.

I also enabled closeIdleSessions in the meantime but the problem still occurs.

Here an example from last week:

Right, I was thinking if nginx when I wrote "load balancer".

You wouldn't need to log the request contents to verify my hypothesis about request multiplication between nginx and the application itself, but only the regular access logs like the one that you originally referred to as "nginx-logs". What I'm suggesting is to compare such logs from nginx with the corresponding logs from the app to see if you've got roughly the same amount of requests on both sides.

Full logs of last weeks incident (seen in my comment above), anonymized:

All times in MESZ (UTC+2).

@AB-xdev did you perhaps upgraded to the latest Vaadin version? It contains a couple of fixes that prevents some of the causes of the resynchronization issue

I close the issue as there have been no updates for a while and several fixes for related issues have been released. @AB-xdev please comment here or open a new ticket if the problem persists.