fast-bert


XLNet training is extremely slow on RTX 2060 [Is it updating every layer?]

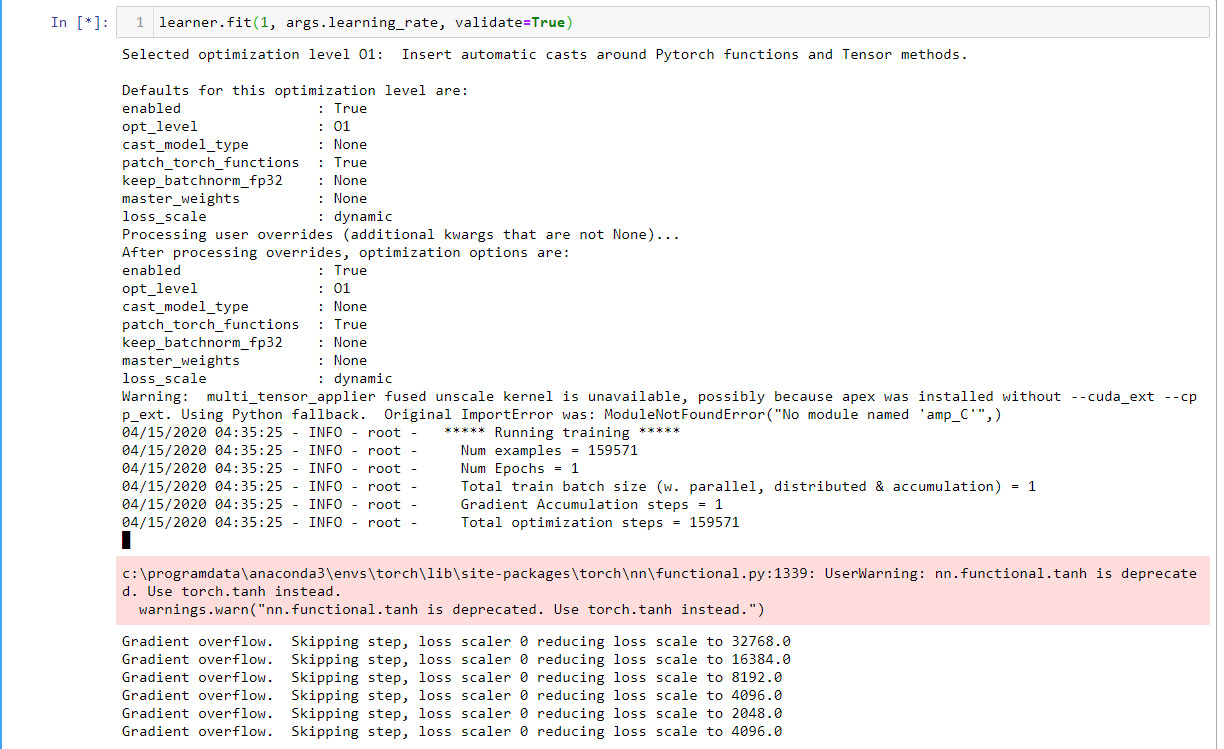

I am trying to train XLNet on a multilabel text classification dataset using an RTX 2060, but training is extremely slow. I only wanted to test a single epoch, and I am training with a batch size of 1 because the GPU has only 6 GB of memory.

It has been running for more than 9 hours and still has not finished.

Here are my model details and the log output:

04/15/2020 04:34:59 - INFO - transformers.configuration_utils - loading configuration file https://s3.amazonaws.com/models.huggingface.co/bert/xlnet-base-cased-config.json from cache at C:\Users\Zabir\.cache\torch\transformers\c9cc6e53904f7f3679a31ec4af244f4419e25ebc8e71ebf8c558a31cbcf07fc8.8df552e150a401a37ae808caf2a2c86fb6fedaa1f6963d1f21fbf3d0085c9e74

04/15/2020 04:34:59 - INFO - transformers.configuration_utils - Model config XLNetConfig {

"_num_labels": 6,

"architectures": [

"XLNetLMHeadModel"

],

"attn_type": "bi",

"bad_words_ids": null,

"bi_data": false,

"bos_token_id": 1,

"clamp_len": -1,

"d_head": 64,

"d_inner": 3072,

"d_model": 768,

"decoder_start_token_id": null,

"do_sample": false,

"dropout": 0.1,

"early_stopping": false,

"end_n_top": 5,

"eos_token_id": 2,

"ff_activation": "gelu",

"finetuning_task": null,

"id2label": {

"0": "LABEL_0",

"1": "LABEL_1",

"2": "LABEL_2",

"3": "LABEL_3",

"4": "LABEL_4",

"5": "LABEL_5"

},

"initializer_range": 0.02,

"is_decoder": false,

"is_encoder_decoder": false,

"label2id": {

"LABEL_0": 0,

"LABEL_1": 1,

"LABEL_2": 2,

"LABEL_3": 3,

"LABEL_4": 4,

"LABEL_5": 5

},

"layer_norm_eps": 1e-12,

"length_penalty": 1.0,

"max_length": 20,

"mem_len": null,

"min_length": 0,

"model_type": "xlnet",

"n_head": 12,

"n_layer": 12,

"no_repeat_ngram_size": 0,

"num_beams": 1,

"num_return_sequences": 1,

"output_attentions": false,

"output_hidden_states": false,

"output_past": true,

"pad_token_id": 5,

"prefix": null,

"pruned_heads": {},

"repetition_penalty": 1.0,

"reuse_len": null,

"same_length": false,

"start_n_top": 5,

"summary_activation": "tanh",

"summary_last_dropout": 0.1,

"summary_type": "last",

"summary_use_proj": true,

"task_specific_params": null,

"temperature": 1.0,

"top_k": 50,

"top_p": 1.0,

"torchscript": false,

"untie_r": true,

"use_bfloat16": false,

"vocab_size": 32000

}

xlnet-base-cased

<class 'str'>

04/15/2020 04:35:01 - INFO - transformers.modeling_utils - loading weights file https://s3.amazonaws.com/models.huggingface.co/bert/xlnet-base-cased-pytorch_model.bin from cache at C:\Users\Zabir\.cache\torch\transformers\24197ba0ce5dbfe23924431610704c88e2c0371afa49149360e4c823219ab474.7eac4fe898a021204e63c88c00ea68c60443c57f94b4bc3c02adbde6465745ac

04/15/2020 04:35:03 - INFO - transformers.modeling_utils - Weights of XLNetForMultiLabelSequenceClassification not initialized from pretrained model: ['sequence_summary.summary.weight', 'sequence_summary.summary.bias', 'logits_proj.weight', 'logits_proj.bias']

04/15/2020 04:35:03 - INFO - transformers.modeling_utils - Weights from pretrained model not used in XLNetForMultiLabelSequenceClassification: ['lm_loss.weight', 'lm_loss.bias']

epochs

1

args.num_train_epochs

Is it supposed to be this slow, or did I misconfigure something? I suspect the whole model is being trained rather than just the added layer. Is that correct? If so, how can I train only the last layer?
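For reference, one common way to train only the head in PyTorch is to set `requires_grad = False` on every parameter outside it. The sketch below is a minimal illustration, not fast-bert's own API: `freeze_all_but` and `ToyModel` are hypothetical names, and the backbone parameter name in the toy list is only illustrative. The head prefixes (`sequence_summary.`, `logits_proj.`) come from the "not initialized from pretrained model" line in the log above. The helper only relies on `named_parameters()`, so it should also work on a real `torch.nn.Module`; the toy stand-in is there so the snippet runs without torch installed.

```python
from types import SimpleNamespace

# Prefixes of the newly initialised head parameters, taken from the log above.
HEAD_PREFIXES = ("sequence_summary.", "logits_proj.")


def freeze_all_but(model, trainable_prefixes=HEAD_PREFIXES):
    """Leave only parameters whose names start with a listed prefix trainable.

    Hypothetical helper: works on any object exposing named_parameters(),
    such as a torch.nn.Module. Returns the names left trainable.
    """
    prefixes = tuple(trainable_prefixes)
    trainable = []
    for name, param in model.named_parameters():
        # True for head parameters, False for everything else.
        param.requires_grad = name.startswith(prefixes)
        if param.requires_grad:
            trainable.append(name)
    return trainable


class ToyModel:
    """Stand-in for a real model so the sketch runs without torch."""

    def __init__(self, names):
        self._params = {n: SimpleNamespace(requires_grad=True) for n in names}

    def named_parameters(self):
        return list(self._params.items())


toy = ToyModel([
    "transformer.layer.0.ff.layer_1.weight",  # illustrative backbone name
    "sequence_summary.summary.weight",
    "logits_proj.weight",
    "logits_proj.bias",
])
kept = freeze_all_but(toy)
print(kept)
```

With a real model this would be called before training starts, on whatever object holds the underlying `XLNetForMultiLabelSequenceClassification` module (assumed here, not confirmed, to be reachable from the learner). Freezing the backbone removes most of the backward-pass and optimizer work, but the forward pass still runs through all 12 layers, so it will not by itself make a batch size of 1 fast.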