SinGAN

RuntimeError: `dtype` or `out`

Hello! When I ran this program, I ran into some problems.

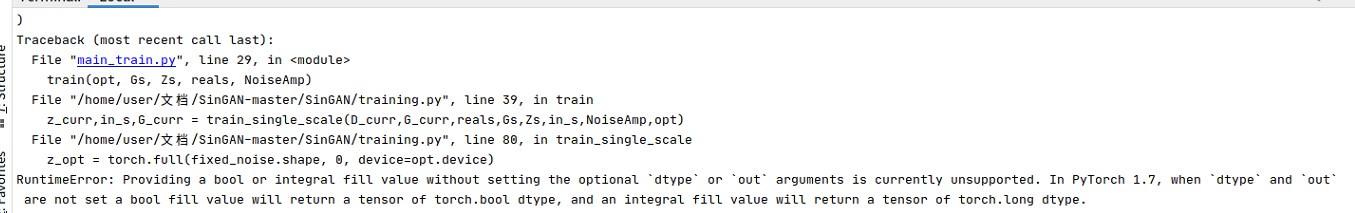

```
Traceback (most recent call last):
  File "main_train.py", line 29, in …
…`dtype` or `out` arguments is currently unsupported. In PyTorch 1.7, when `dtype` and `out` are not set a bool fill value will return a tensor of torch.bool dtype, and an integral fill value will return a tensor of torch.long dtype.
```

If you have time, could you look into this problem for me? Thank you!

I haven't checked it yet, but there's a pull request with an update for PyTorch 1.6. It might help with this.

In `training.py`, set `dtype=int` in every call to `torch.full()`. That worked for me.
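The first error comes from calling `torch.full()` with an integral fill value and no `dtype`. The sketch below shows the ambiguous call and two explicit alternatives to `dtype=int`. The shape is a made-up placeholder, and which calls in SinGAN's `training.py` are affected is not confirmed here. Note that Python's `int` maps to `torch.int64`, so `dtype=int` produces an integer tensor; a float dtype or a float fill value keeps the tensor floating point.

```python
import torch

# Placeholder shape for illustration only; not taken from SinGAN.
shape = (1, 3, 32, 32)

# Ambiguous call: integral fill value, no dtype. PyTorch 1.6 raises the
# RuntimeError above; PyTorch 1.7+ returns a torch.long tensor instead.
# z = torch.full(shape, 0)

# Option 1: explicit float dtype, same result across versions.
z_float = torch.full(shape, 0, dtype=torch.float32)

# Option 2: a float fill value, which yields the default float dtype.
z_float_alt = torch.full(shape, 0.0)

# The fix suggested above: Python's int maps to torch.int64,
# so this produces an integer tensor rather than a float one.
z_int = torch.full(shape, 0, dtype=int)

print(z_float.dtype, z_float_alt.dtype, z_int.dtype)
```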

Thank you for the update! I'll check this on my side and update the code.

OK, thank you very much!

`dtype=int` didn't work for me. It leads to a new error:

```
/content/content/My Drive/SinGAN/SinGAN/training.py in train(opt, Gs, Zs, reals, NoiseAmp)
     37     D_curr.load_state_dict(torch.load('%s/%d/netD.pth' % (opt.out_,scale_num-1)))
     38 
---> 39     z_curr,in_s,G_curr = train_single_scale(D_curr,G_curr,reals,Gs,Zs,in_s,NoiseAmp,opt)
     40 
     41     G_curr = functions.reset_grads(G_curr,False)

/content/content/My Drive/SinGAN/SinGAN/training.py in train_single_scale(netD, netG, reals, Gs, Zs, in_s, NoiseAmp, opt, centers)
    176     #D_fake_map = output.detach()
    177     errG = -output.mean()
--> 178     errG.backward(retain_graph=True)
    179     if alpha!=0:
    180         loss = nn.MSELoss()

/usr/local/lib/python3.6/dist-packages/torch/tensor.py in backward(self, gradient, retain_graph, create_graph)
    183         products. Defaults to False.
    184     """
--> 185     torch.autograd.backward(self, gradient, retain_graph, create_graph)
    186 
    187 def register_hook(self, hook):

/usr/local/lib/python3.6/dist-packages/torch/autograd/__init__.py in backward(tensors, grad_tensors, retain_graph, create_graph, grad_variables)
    125 Variable._execution_engine.run_backward(
    126     tensors, grad_tensors, retain_graph, create_graph,
--> 127     allow_unreachable=True) # allow_unreachable flag
    128 
    129 

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.FloatTensor [3, 32, 3, 3]] is at version 2; expected version 1 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).
```
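This second error is a different problem: autograd found that a tensor it saved for the backward pass (here a `[3, 32, 3, 3]` tensor) was changed in place after the graph was built. The sketch below is a toy example, not taken from SinGAN, of one common way this happens: calling `backward(retain_graph=True)` repeatedly on a graph that was built once, while `optimizer.step()` updates the weights in place between calls. The network, the `run` helper, and all sizes are hypothetical. Rebuilding the forward pass inside the loop avoids the error in this toy; whether the same change is correct for SinGAN's training loop is not verified here.

```python
import torch
import torch.nn as nn


def run(recompute_fake: bool) -> str:
    """Hypothetical toy loop: several generator updates, optionally reusing one graph."""
    torch.manual_seed(0)
    g = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 3))
    opt_g = torch.optim.SGD(g.parameters(), lr=0.1)
    z = torch.randn(2, 4)

    fake = g(z)  # graph built once, before the update loop
    try:
        for _ in range(3):
            if recompute_fake:
                fake = g(z)  # rebuild the graph with the current weights
            opt_g.zero_grad()
            err = -fake.mean()
            # Reusing the old graph here needs weights saved during the
            # first forward pass; after a step they are no longer current.
            err.backward(retain_graph=True)
            opt_g.step()  # in-place weight update bumps the version counters
    except RuntimeError as exc:
        return str(exc)
    return "ok"


print(run(recompute_fake=False)[:60])
print(run(recompute_fake=True))
```

Running with `torch.autograd.set_detect_anomaly(True)`, as the hint in the error suggests, points at the forward operation whose saved tensor was modified.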

Hello, this error is not caused by setting `dtype=int`; it is due to a version mismatch. As @tamarott has already suggested in another issue, "please use torch==1.4.0 torchvision==0.5.0". Hope it works.
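After installing the pinned versions (for example with `pip install torch==1.4.0 torchvision==0.5.0`; a notebook runtime typically has to be restarted if `torch` was already imported), a quick check before training can confirm which version is actually loaded. `matches_pin` is a hypothetical helper, not part of SinGAN or PyTorch; it ignores local build suffixes such as `+cu101`.

```python
import torch

# Version suggested in this thread.
PINNED_TORCH = "1.4.0"


def matches_pin(installed: str, pinned: str) -> bool:
    """Compare versions, ignoring a local build suffix such as '+cu101'."""
    return installed.split("+", 1)[0] == pinned


if not matches_pin(torch.__version__, PINNED_TORCH):
    print(f"Found torch {torch.__version__}; this thread suggests torch=={PINNED_TORCH}")
```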