bark


CUDA out of memory, running on RTX 3050ti, how to fix?

Exception has occurred: OutOfMemoryError

CUDA out of memory. Tried to allocate 16.00 MiB (GPU 0; 4.00 GiB total capacity; 3.46 GiB already allocated; 0 bytes free; 3.47 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

File "C:\Users\smast\OneDrive\Desktop\Code Projects\Johnny Five\audio test.py", line 8, in
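The hint at the end of the error message can be checked with a little arithmetic. The sketch below is not part of bark or PyTorch: `parse_oom` is a hypothetical helper that pulls the capacity, allocated, and reserved figures out of the message quoted above and computes how much memory is reserved but not allocated.

```python
import re

# Conversion factors to GiB for the units that appear in the message.
_TO_GIB = {"MiB": 1 / 1024, "GiB": 1.0}

def parse_oom(message):
    """Hypothetical helper: extract the headline figures (in GiB) from a
    PyTorch CUDA OOM message of the form quoted in this thread."""
    pattern = r"([\d.]+) (MiB|GiB) (total capacity|already allocated|reserved in total)"
    return {label: float(value) * _TO_GIB[unit]
            for value, unit, label in re.findall(pattern, message)}

message = (
    "CUDA out of memory. Tried to allocate 16.00 MiB (GPU 0; 4.00 GiB total "
    "capacity; 3.46 GiB already allocated; 0 bytes free; 3.47 GiB reserved "
    "in total by PyTorch)"
)
stats = parse_oom(message)
# Reserved-but-unallocated memory is what fragmentation tuning could recover.
slack = stats["reserved in total"] - stats["already allocated"]
print(f"slack: {slack:.2f} GiB of {stats['total capacity']:.2f} GiB")
```

Here the slack is about 0.01 GiB, so reserved memory is not much larger than allocated memory. That suggests the card is simply full rather than fragmented, in which case max_split_size_mb on its own may not help much.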

got the same problem !

Same problem on a 3060 Ti with 8 GB VRAM while loading the model on my local install. It works fine for me on Colab, though, for some reason.

Try using the small models: set the environment variable SUNO_USE_SMALL_MODELS="True".

Like this?

import os
os.environ["SUNO_USE_SMALL_MODELS"] = "True"

Yup that should do it

Still got the same error: torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 12.00 MiB (GPU 0; 8.00 GiB total capacity; 7.30 GiB already allocated; 0 bytes free; 7.33 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

This is the code I am using:

from bark import SAMPLE_RATE, generate_audio, preload_models
import os

os.environ["SUNO_USE_SMALL_MODELS"] = "True"

preload_models()

Any fix for this?

Yup that should do it

doesn't work for me, same error

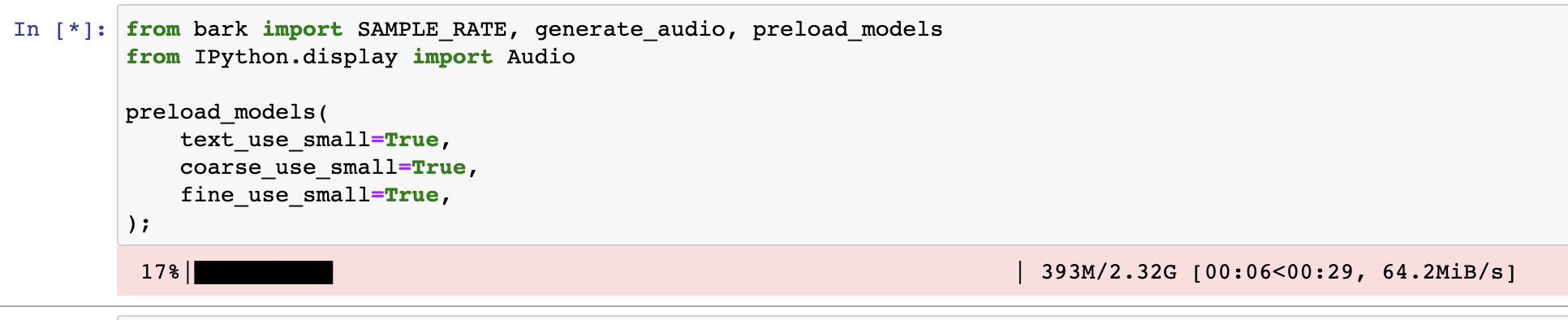

I made a new update where you can control model size and location (GPU/CPU) directly on load, for example:

preload_models(
    text_use_small=True,
    coarse_use_small=True,
    fine_use_gpu=False,
    fine_use_small=True,
)

Has anyone tried this? How did it go?

from bark import SAMPLE_RATE, generate_audio, preload_models
from IPython.display import Audio

#import gc
#gc.collect()
#torch.cuda.empty_cache()

# download and load all models
#preload_models()
preload_models(
    text_use_small=True,
    coarse_use_small=True,
    fine_use_gpu=True,
    fine_use_small=True,
)

# generate audio from text
text_prompt = """
Hello, my name is Suno. And, uh — and I like pizza. [laughs]
But I also have other interests such as playing tic tac toe.
"""
audio_array = generate_audio(text_prompt)

# play text in notebook
Audio(audio_array, rate=SAMPLE_RATE)

I ran the above and got the error below:

OutOfMemoryError: CUDA out of memory. Tried to allocate 44.00 MiB (GPU 0; 3.82 GiB total capacity; 2.40 GiB already allocated; 30.75 MiB free; 2.54 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

I then set:

export PYTORCH_CUDA_ALLOC_CONF=garbage_collection_threshold:0.6,max_split_size_mb:21

After that, the code ran successfully when I started it again with ipython main.py.

Note that this was with fine_use_gpu=True.
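For anyone not launching from a shell (for example on Windows or in a notebook), the same allocator setting can probably be applied from Python. This is a minimal sketch, assuming the variable is set before torch (and therefore bark) is imported so the CUDA allocator picks it up; `alloc_conf` is a hypothetical helper, and the values are simply the ones from the post above.

```python
import os

def alloc_conf(**options):
    """Hypothetical helper: join options into the comma-separated
    key:value string that PYTORCH_CUDA_ALLOC_CONF expects."""
    return ",".join(f"{key}:{value}" for key, value in options.items())

# Values copied from the post above; they are not tuned recommendations.
conf = alloc_conf(garbage_collection_threshold=0.6, max_split_size_mb=21)
os.environ["PYTORCH_CUDA_ALLOC_CONF"] = conf
print(conf)

# Import torch / bark only after this point so the allocator sees the setting.
```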

from bark import SAMPLE_RATE, generate_audio, preload_models
from IPython.display import Audio

# download and load all models
preload_models(
    text_use_small=True,
    coarse_use_small=True,
    fine_use_gpu=False,
    fine_use_small=True,
)

# generate audio from text
text_prompt = """
Hello, my name is Suno. And, uh — and I like pizza. [laughs]
But I also have other interests such as playing tic tac toe.
"""
audio_array = generate_audio(text_prompt)

# play text in notebook
Audio(audio_array, rate=SAMPLE_RATE)

It appears the script does not clear the cache. The first time, I was able to get the script running. When I ran the exact same script again, I got the following:

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 3.82 GiB total capacity; 2.75 GiB already allocated; 20.00 MiB free; 2.88 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

No changes to the code or env. If I were to guess, it appears the memory is not being cleared on CUDA. Script is very cool though! Thanks for providing the source on this!
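One way to test that guess is to release PyTorch's cached blocks explicitly and look at what is still held. This is only a sketch: `release_cuda_cache` is a hypothetical helper built on gc.collect() and torch.cuda.empty_cache() (the calls commented out in the earlier snippet), and it is not confirmed to resolve the behaviour described here.

```python
import gc

def release_cuda_cache():
    """Hypothetical helper: drop unreachable Python objects, then ask
    PyTorch to return its cached, unused GPU blocks to the driver.
    Returns (allocated_mib, reserved_mib), or None without torch/CUDA."""
    gc.collect()
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    torch.cuda.empty_cache()
    mib = 1024 ** 2
    return (torch.cuda.memory_allocated() / mib,
            torch.cuda.memory_reserved() / mib)

usage = release_cuda_cache()
print(usage)
```

This only affects the current Python process. If an earlier session (for example a still-running ipython kernel) is holding the memory, it is released only when that process exits; nvidia-smi shows which processes are using the GPU.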

tried on my 4g gpu, oh man, it worked! thanks

I had this problem on my GTX 970. I tried adding this code to preload the models before generating audio:

preload_models(text_use_gpu=False, text_use_small=True, coarse_use_gpu=False, coarse_use_small=True, fine_use_gpu=False, fine_use_small=True, codec_use_gpu=False)

But it still gave me a CUDA out of memory error, even though I said to only use the CPU. Turns out, the generate_audio function doesn't respect the values you pass to preload_models... unless the models are the same size as generate_audio defaults to.

You need to set the environment variable SET SUNO_USE_SMALL_MODELS=True before you import bark. I ended up setting it in the batch file I use to start my program. Then it will use small models AND it will respect the use_gpu values you pass into preload_models.

You should be able to load some of the small models on the GPU, even if you can't load all of them on GPU, but I'm tired so I'll have to test that tomorrow.
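The ordering described above can also be done inside the script instead of a batch file. A minimal sketch, assuming bark is installed: `load_small_models_on_cpu` is a hypothetical wrapper, and the keyword arguments are the ones from the preload_models call quoted in this post.

```python
import os

# Set this before bark is imported; per the thread, setting it afterwards
# has no effect on which model sizes generate_audio uses.
os.environ["SUNO_USE_SMALL_MODELS"] = "True"

def load_small_models_on_cpu():
    """Hypothetical wrapper: import bark late (after the env var is set)
    and keep every model off the GPU."""
    from bark import preload_models
    preload_models(
        text_use_gpu=False, text_use_small=True,
        coarse_use_gpu=False, coarse_use_small=True,
        fine_use_gpu=False, fine_use_small=True,
        codec_use_gpu=False,
    )
```

Call load_small_models_on_cpu() once at startup, then import and use generate_audio as usual. Flipping individual *_use_gpu flags back to True to put some models on the GPU is untested here, as the post notes.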

You need to set the environment variable SET SUNO_USE_SMALL_MODELS=True before you import bark. I ended up setting it in the batch file I use to start my program. Then it will use small models AND it will respect the use_gpu values you pass into preload_models.

Seconding this. Without setting the environment variable before importing bark, the smaller models will not download unless you change the preload_models() arguments instead.

hmmm, definitely downloads the small version for me...

They do when calling preload_models with those options. I was referring to when only using the env var.