llama_index


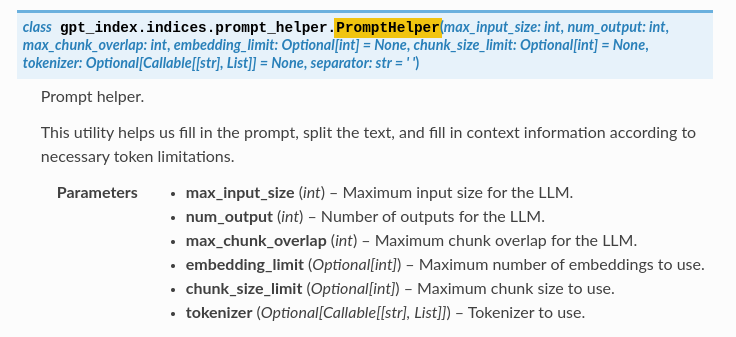

Doc: PromptHelper - need more info on parameters

It would be great to have more information about the parameters of PromptHelper:

- Definition of each parameter, its impact, and what it is used for

- Specify the unit (number of characters? etc.)

- Min / max values?

Eventually, we could also link to external documentation on LLM theory.

Agreed, we should definitely make the documentation clearer. Will take a todo here.

Hello, have you figured out the purpose of these parameters? I want to control the number of tokens used by the query each time. Which parameters are mainly used? @ezawadzki

@mingxin-yang I guess number of chars but not sure...

[Edit @mingxin-yang]: token = chunk of text (Source)

The documentation has been updated with more info on token parameters. All parameters related to chunk sizes are measured in tokens, not characters. Furthermore, the prompt helper isn't really user-facing anymore. Instead, you can use the service context directly.
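For anyone still unsure what "measured in tokens" means in practice, here is a rough sketch of the arithmetic. It is not the library's implementation: the function and parameter names (`count_tokens`, `remaining_budget`, `split_into_chunks`, `max_input_tokens`, `reserved_output_tokens`) are hypothetical, and the whitespace "tokenizer" is a crude stand-in for a real model tokenizer, which will give different counts.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Crude stand-in tokenizer (assumption): one token per whitespace-separated word."""
    return len(text.split())


def remaining_budget(
    max_input_tokens: int,
    reserved_output_tokens: int,
    prompt_template_tokens: int,
) -> int:
    """Tokens left for retrieved context after reserving room for the
    model's answer and the fixed prompt text. All values are in tokens."""
    return max(0, max_input_tokens - reserved_output_tokens - prompt_template_tokens)


def split_into_chunks(text: str, chunk_size: int, overlap: int = 0) -> List[str]:
    """Split text into chunks of at most `chunk_size` tokens, where consecutive
    chunks share `overlap` tokens. Sizes are token counts, not characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    sample = "one two three four five six seven"
    print(len(sample), "characters vs", count_tokens(sample), "tokens")
    print(split_into_chunks(sample, chunk_size=3, overlap=1))
    print(remaining_budget(4096, 256, 40), "tokens left for context")
```

The point is only that a chunk size of 3 here means three tokens, whatever their length in characters, and that the tokens spent per query are bounded by the model's input limit minus whatever is reserved for the output and the prompt text.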

Check the docs out! https://gpt-index.readthedocs.io/en/latest/core_modules/supporting_modules/service_context.html#configuring-the-service-context