redpanda

Scale test for recovery from S3

Create a scale test that exercises cluster recovery from S3 with larger amounts of data and more partitions.

Note: This is based on #5667, so ignore the duplicate commits here until that PR is merged.

@ajfabbri Looks like there's a merge conflict.

Force-push:

- Latest test code and merge conflict fixes.

- Remove the scale test suite; work toward getting the test into nightlies for now.

- Parallelize file checksum computation (it was ~30% of the test runtime).
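One way to parallelize per-file checksumming is a thread pool, sketched below. This is an illustration under stated assumptions, not the PR's code: the helper names `file_checksum` and `checksum_files` are hypothetical, and MD5 is assumed as the digest. Threads are a reasonable fit because CPython's `hashlib` releases the GIL while hashing large buffers, so disk reads and hashing can overlap.

```python
import hashlib
import os
from concurrent.futures import ThreadPoolExecutor


def file_checksum(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, reading it in fixed-size chunks."""
    h = hashlib.md5()  # assumption: MD5; swap for whatever digest the test uses
    with open(path, "rb") as f:
        # iter() with a sentinel keeps reading until f.read() returns b"" (EOF)
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def checksum_files(paths, max_workers=None) -> dict:
    """Checksum many files concurrently; returns {path: hex digest}."""
    paths = list(paths)  # materialize so the paths can be zipped with results
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so zip pairs each path with its digest
        return dict(zip(paths, pool.map(file_checksum, paths)))
```

Only the fan-out changes compared with a sequential loop; each file is still hashed in a single pass, so results match `file_checksum` called one file at a time.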

This should be ready to merge now.

Force-push: Rebase on latest dev, and tune the scale down for nightly runs (at least until we have another schedule with a longer period).

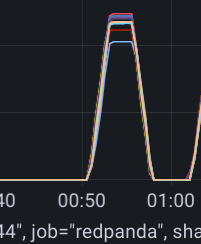

Now that segment checksumming has been parallelized (somewhat), the longest contributor to test runtime appears to be waiting for all the segment s3 objects' metadata to be updated. In the upload bandwidth graph below, you can see the current test takes a bit less than 10 minutes to upload all the segments:

However, if you watch the test's debug log while it runs, you can see that the sum of the S3 object lengths doesn't reach the expected total bytes until several minutes after that.
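The check described above, comparing the summed S3 object lengths against the expected byte total, might look roughly like the sketch below. `ObjectMeta`, `s3_bytes_uploaded`, and `s3_upload_caught_up` are hypothetical names, and selecting segment objects by a `.log` key suffix is an assumption; a real test would populate the entries from an S3 listing. Any single call is only a snapshot, which is why the test has to keep re-listing until the metadata catches up.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ObjectMeta:
    """Minimal stand-in for one S3 listing entry (key plus reported length)."""
    key: str
    content_length: int


def s3_bytes_uploaded(objects: Iterable[ObjectMeta], suffix: str = ".log") -> int:
    """Sum the reported lengths of objects whose key ends with `suffix`.

    Assumption: segment objects are the ones ending in `suffix`; manifests
    and other metadata objects are excluded from the total.
    """
    return sum(o.content_length for o in objects if o.key.endswith(suffix))


def s3_upload_caught_up(objects: Iterable[ObjectMeta], expected_bytes: int,
                        suffix: str = ".log") -> bool:
    """True once the listed segment sizes cover all the bytes produced."""
    return s3_bytes_uploaded(objects, suffix) >= expected_bytes
```

Usage: with segment entries of 100 and 50 bytes plus a 7-byte manifest, the uploaded total is 150, so the check passes for an expected 150 bytes and fails for 151.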

:shrug: Failed in CI. Looking into it.

Force-push: Rebase on latest dev, and remove the backoff-time tweak that worked well for the scale tests but caused failures in the unit tests. The `_wait_for_data_in_s3()` loop requires 6 consecutive successful measurements, and a bigger backoff makes that less likely within the given timeout.
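To see why a larger backoff hurts, consider a loop that needs N consecutive successes: it spends at least (N - 1) × backoff seconds between the first and last successful check. The sketch below is a hypothetical `wait_for_consecutive` helper, not the actual `_wait_for_data_in_s3()` implementation; the injectable `sleep` and `clock` parameters exist only so the timing can be tested deterministically. With an illustrative 10 s timeout and 6 required successes, a 1 s backoff fits 6 checks, but a 2 s backoff fits only 5 (at t = 0, 2, 4, 6, 8), so the wait times out even if every measurement succeeds.

```python
import time
from typing import Callable


def wait_for_consecutive(check: Callable[[], bool], required: int,
                         timeout_s: float, backoff_s: float,
                         sleep: Callable[[float], None] = time.sleep,
                         clock: Callable[[], float] = time.monotonic) -> bool:
    """Return True once `check` succeeds `required` times in a row.

    Any failed check resets the streak. Returns False if the deadline
    passes before the streak is long enough.
    """
    deadline = clock() + timeout_s
    streak = 0
    while clock() < deadline:
        if check():
            streak += 1
            if streak >= required:
                return True
        else:
            streak = 0  # a single bad measurement restarts the count
        sleep(backoff_s)
    return False
```

The design trades speed for stability: requiring a streak guards against a momentarily correct reading, but the timeout must leave room for `required` checks at the chosen backoff.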

/ci-repeat 5

Force-push: Keep the timeout as is for the topic recovery tests, while still allowing the scale test to pass with a larger timeout.
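One way to keep the existing timeout for the topic recovery tests while letting the scale test wait longer is a class-level default that only the subclass overrides. The sketch below is hypothetical: the class names, the `s3_wait_timeout_s` attribute, and both numeric values are placeholders, not taken from the PR.

```python
class RecoveryTestBase:
    """Hypothetical base class: regular topic recovery tests keep this timeout."""

    s3_wait_timeout_s: float = 120.0  # placeholder value, not from the PR

    def s3_wait_timeout(self) -> float:
        # Wait loops read the timeout through self, so subclasses can override it
        return self.s3_wait_timeout_s


class ScaleRecoveryTest(RecoveryTestBase):
    """Hypothetical scale test: inherits everything except the timeout."""

    s3_wait_timeout_s = 1200.0  # placeholder value, not from the PR
```

Because the default lives on the base class, the existing tests are unaffected; only the subclass that opts in gets the longer wait.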

/ci-repeat 5

Some unrelated failures showed up in the 5x CI run. All release builds passed, but 3 of 5 debug builds hit failures unrelated to this change:

Force-push: Rebase on latest dev and fix conflicts. Address two nits from @abhijat.

Force-push: Address a nit (an empty Python method; we should add a check for this to our linter). The CI failure is in the k8s operator tests (not related).

LGTM. One question: as I understand it, the test uses the same size-based logic as the old recovery test to validate results, but it also uses the verifiable consumer. Is that correct? If so, maybe we should get rid of the size-based validation and keep only the verifiable consumer validation.

Thank you @Lazin. I plan on doing some more refactoring of the test in the future (to avoid subclassing the main recovery test directly), and I think this is a good idea.

The k8s operator test is failing again.

Force-push: Rebase on latest dev in hopes of resolving the k8s CI test issue.