mypy


Unable to specify a default value for a generic parameter

Simplified Example:

from typing import TypeVar

_T = TypeVar('_T')

def foo(a: _T = 42) -> _T:  # E: Incompatible types in assignment (expression has type "int", variable has type "_T")
    return a

A real-world example is closer to:

from typing import Callable, TypeVar

_T = TypeVar('_T')

def noop_parser(x: str) -> str:
    return x

def foo(value: str, parser: Callable[[str], _T] = noop_parser) -> _T:
    return parser(value)

I think the problem here is that in general, e.g. if there are other parameters also using _T in their type, the default value won't work. Since this is the only parameter, you could say that this is overly restrictive, and that when no argument is passed, the return type should just be determined by the default value (in your simplified example, you'd want it to return int). But I'm not so keen on doing that, since it breaks as soon as another generic parameter is used.
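To make that concern concrete, here is a minimal sketch (the function name `pair` is hypothetical) in which the incompatible default is silenced with `# type: ignore`. When `_T` is solved from the other argument, the default no longer matches, so the runtime result disagrees with what a checker would infer:

```python
from typing import List, TypeVar

_T = TypeVar("_T")


def pair(first: _T, second: _T = 42) -> List[_T]:  # type: ignore
    # A checker solves _T from the arguments actually passed, ignoring the default.
    return [first, second]


words = pair("a")  # a checker would infer List[str] here
print(words)  # ['a', 42]: the second element is an int, not a str
```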

To make this work, you can use @overload, e.g.

from typing import TypeVar, overload

_T = TypeVar('_T')

@overload
def foo() -> int: ...
@overload
def foo(a: _T) -> _T: ...
def foo(a=42):  # Implementation, assuming this isn't a stub
    return a

That's what we do in a few places in typeshed too (without the implementation though), e.g. check out Mapping.get() in typing.pyi.
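A minimal sketch of that pattern for a lookup function, loosely modeled on how typeshed annotates `Mapping.get()` (the function `lookup` is hypothetical, and typeshed's actual overloads differ in detail):

```python
from typing import Mapping, Optional, TypeVar, Union, overload

_T = TypeVar("_T")


@overload
def lookup(table: Mapping[str, int], key: str) -> Optional[int]: ...
@overload
def lookup(table: Mapping[str, int], key: str, default: _T) -> Union[int, _T]: ...
def lookup(table, key, default=None):
    # The implementation is not checked against the overloads above.
    return table.get(key, default)


scores = {"a": 1}
lookup(scores, "a")         # a checker should see Optional[int]
lookup(scores, "b", "n/a")  # a checker should see Union[int, str]
```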

I'm running into a similar issue when trying to iron out a bug in the configparser stubs. Reducing RawConfigParser to the relevant parts gives:

_section = Mapping[str, str]

class RawConfigParser:
    def __init__(self, dict_type: Mapping[str, str] = ...) -> None: ...
    def defaults(self) -> _section: ...

This is incorrect at the moment, because dict_type should (as the name indicates) be a type. Furthermore, defaults() returns an instance of dict_type, so this seems like a good application of generics. The following works as expected when a dict_type is passed (i.e. reveal_type(RawConfigParser(dict_type=dict).defaults()) gives builtins.dict*[Any, Any]):

_T1 = TypeVar('_T1', bound=Mapping)

class RawConfigParser(Generic[_T1]):
    def __init__(self, dict_type: Type[_T1] = ...) -> None: ...
    def defaults(self) -> _T1: ...

but when you don't pass a dict_type, mypy can't infer what type it should be using and asks for a type annotation where it is being used. This is less than ideal for the default instantiation of the class (which will be what the overwhelming majority of people are using). So I tried adding the appropriate default to the definition:

_T1 = TypeVar('_T1', bound=Mapping)

class RawConfigParser(_parser, Generic[_T1]):
    def __init__(self, dict_type: Type[_T1] = OrderedDict) -> None: ...
    def defaults(self) -> _T1: ...

but then I hit the error that OP was seeing:

stdlib/3/configparser.pyi:51: error: Incompatible default for argument "dict_type" (default has type Type[OrderedDict[Any, Any]], argument has type Type[_T1])

This feels like something that isn't currently supported, but I'm not sure if I'm missing a way that I could do this...

@OddBloke

This default is, strictly speaking, not safe. This would require a lower bound for _T1 in order to be safe.

@ilevkivskyi I don't follow, I'm afraid; could you expand on that a little, please?

Consider this code:

class UserMapping(Mapping):
    ...

RawConfigParser[UserMapping]()

In this case OrderedDict will be "assigned" to dict_type, which will be Type[UserMapping], which is not a supertype of Type[OrderedDict]. In order for the default value to be safe it is necessary to have some guarantee that _T1 will be always a supertype of OrderedDict (this is called a lower bound), but this is not supported, only upper bounds are allowed for type variables.
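The unsafety shows up at runtime, too. In this minimal sketch, `Parser` is a hypothetical stand-in for RawConfigParser and the incompatible default is silenced with `# type: ignore`; explicitly parameterizing the class with UserMapping still yields an OrderedDict from `defaults()`:

```python
from collections import OrderedDict
from typing import Generic, Iterator, Mapping, Type, TypeVar

_T1 = TypeVar("_T1", bound=Mapping)


class UserMapping(Mapping):
    """A minimal read-only mapping (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._data: dict = {}

    def __getitem__(self, key):
        return self._data[key]

    def __iter__(self) -> Iterator:
        return iter(self._data)

    def __len__(self) -> int:
        return len(self._data)


class Parser(Generic[_T1]):
    # The default is silenced here; this is exactly the unsafe case.
    def __init__(self, dict_type: Type[_T1] = OrderedDict) -> None:  # type: ignore
        self._defaults = dict_type()

    def defaults(self) -> _T1:
        return self._defaults


p = Parser[UserMapping]()
print(type(p.defaults()).__name__)  # OrderedDict, although _T1 is UserMapping
```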

@ilevkivskyi Thanks, that makes sense. Do you think what I'm trying to do is unrepresentable as things stand?

I didn't think enough about this, but my general rule is not to be "obsessed" with precise types, sometimes Any is OK. If a certain problem appears repeatedly, then maybe we need to improve something (also now we have plugin system). In this particular case my naive guess would be to play with overloads, as Guido suggested (note that __init__ can also be overloaded).
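A minimal sketch of the overloaded `__init__` idea, assuming mypy's support for annotating `self` in an `__init__` overload to pin the class's type parameter (typeshed uses this trick, e.g. for `dict`). `Parser` is again a hypothetical stand-in for RawConfigParser, and the inferred types in the comments are what a checker should report, not something verified here:

```python
from collections import OrderedDict
from typing import Generic, Mapping, Type, TypeVar, overload

_T1 = TypeVar("_T1", bound=Mapping)


class Parser(Generic[_T1]):  # hypothetical stand-in for RawConfigParser
    @overload
    def __init__(self: "Parser[OrderedDict]") -> None: ...
    @overload
    def __init__(self, dict_type: Type[_T1]) -> None: ...
    def __init__(self, dict_type: type = OrderedDict) -> None:
        # The implementation uses a broad type, so the default is accepted.
        self._defaults = dict_type()

    def defaults(self) -> _T1:
        return self._defaults


Parser().defaults()      # a checker should infer OrderedDict[Any, Any]
Parser(dict).defaults()  # a checker should infer dict[Any, Any]
```

The price is that the implementation signature is no longer generic, so its body is checked only against the broad `type`.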

Allowing default values for generic parameters might also enable using type constraints to ensure that generics are only used in specified ways. This is a bit esoteric, but here is an example of how you might use the same implementation for a dict and a set while requiring that consumers of the dict type always provide a value to insert() while consumers of the set type never do.

from enum import Enum
from typing import Generic, TypeVar, cast

K = TypeVar('K')
V = TypeVar('V')

class Never(Enum):
    """An uninhabited type"""

class Only(Enum):
    """A type with one member (kind of like NoneType)"""
    ONE = 1

NOT_PASSED = Only(1)

class MyGenericMap(Generic[K, V]):
    def insert(self, key, value=cast(Never, NOT_PASSED)):
        # type: (K, V) -> None
        ...
        if value is NOT_PASSED:
            ...

class MySet(MyGenericMap[K, Never]):
    pass

class MyDict(MyGenericMap[K, V]):
    pass

my_set = MySet[str]()
my_set.insert('hi')  # this is fine, default value matches concrete type
my_set.insert('hi', 'hello')  # type error, because 'hello' is not of type Never

my_dict = MyDict[str, int]()
my_dict.insert('hi')  # type error, because the value type is int, not Never
my_dict.insert('hi', 22)  # this is fine, because the default value is not used

Huh, this just came up in our code.

This request has already come up five times, so I am raising the priority to high.

I think my preferred solution for this would be to support lower bounds for type variables. It is not too hard to implement (but still a large addition), and at the same time it may provide more expressiveness in other situations.

One more point about the overload workaround: the implementation of the overloaded function must not use a generic annotation for that parameter, so its body is not type-checked, which is not acceptable for more complex functions whose bodies should be checked. Overloading checks only the binding between input and output types. The most precise solution for the body seems to be to move it into a new function with the same types, but without a default.

from typing import TypeVar, overload

_T = TypeVar('_T')

@overload
def foo() -> int: ...
@overload
def foo(a: _T) -> _T: ...
def foo(a=42):  # unchecked implementation
    return foo_internal(a)

def foo_internal(a: _T) -> _T:
    # a checked complicated body moved here
    return a

Almost everything can be checked this way, even in more complicated cases, but the number of necessary overload declarations grows exponentially, as 2 ** number_of_generic_vars_with_defaults.
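For example, a hypothetical function `combine` with two generic parameters that both have defaults already needs 2 ** 2 = 4 overloads, plus the checked helper for the body. A minimal sketch:

```python
from typing import Tuple, TypeVar, overload

_A = TypeVar("_A")
_B = TypeVar("_B")


# One overload per subset of omitted defaulted parameters: 2 ** 2 = 4.
@overload
def combine() -> Tuple[int, str]: ...
@overload
def combine(a: _A) -> Tuple[_A, str]: ...
@overload
def combine(*, b: _B) -> Tuple[int, _B]: ...
@overload
def combine(a: _A, b: _B) -> Tuple[_A, _B]: ...
def combine(a=0, b=""):  # unchecked implementation
    return _combine_checked(a, b)


def _combine_checked(a: _A, b: _B) -> Tuple[_A, _B]:
    # The real body lives here, where it is type-checked.
    return (a, b)
```

A third defaulted parameter would double the count to 8.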

EDIT

Another solution is to use a broad static type for that parameter and immediately assign it in the body to a variable annotated with the original exact generic type. This is the preferable solution for an overloaded class constructor.

An example is a database cursor with a generic row type:

_T = TypeVar('_T', list, dict)

class Cursor(Generic[_T]):
    @overload
    def __init__(self, connection: Connection) -> None: ...
    @overload
    def __init__(self, connection: Connection, row_type: Type[_T]) -> None: ...
    def __init__(self, connection: Connection, row_type: Type = list) -> None:
        self.row_type: Type[_T] = row_type  # this annotation is important
        ...

    def fetchone(self) -> Optional[_T]: ...
    def fetchall(self) -> List[_T]: ...  # more methods depend on _T type

cursor = Cursor(connection, dict)  # cursor.execute(...)
reveal_type(cursor.fetchone())  # dict

I think the problem here is that in general, e.g. if there are other parameters also using _T in their type, the default value won't work.

Wait. Why is that a problem? If the default value doesn’t work for some calls, that should be a type error at the call site.

from typing import List, TypeVar

_T = TypeVar('_T')

def foo(a: List[_T] = [42], b: List[_T] = [42]) -> List[_T]:
    return a + b

foo()  # This should work.
foo(a=[17])  # This should work.
foo(b=[17])  # This should work.
foo(a=["foo"])  # This should be a type error (_T cannot be both str and int).
foo(b=["foo"])  # This should be a type error (_T cannot be both int and str).
foo(a=["foo"], b=["foo"])  # This should work.

This default is, strictly speaking, not safe. This would require a lower bound for _T1 in order to be safe.

Only if we retain the requirement that the default value always works, which is not what’s being requested here. The desired result is:

_T1 = TypeVar('_T1', bound=Mapping)

class RawConfigParser(_parser, Generic[_T1]):
    def __init__(self, dict_type: Type[_T1] = OrderedDict) -> None: ...
    def defaults(self) -> _T1: ...

class UserMapping(Mapping):
    ...

RawConfigParser[OrderedDict]()  # This should work.
RawConfigParser[UserMapping]()  # This should be a type error (_T1 cannot be both UserMapping and OrderedDict).
RawConfigParser[UserMapping](UserMapping)  # This should work.

I ran into this trying to write:

from typing import TypeVar, Union

_T = TypeVar('_T')

def str2int(s: str, default: _T = None) -> Union[int, _T]:  # error: Incompatible default for argument "default" (default has type "None", argument has type "_T")
    try:
        return int(s)
    except ValueError:
        return default

(after figuring out that I also need mypy's --no-implicit-optional flag). @overload is not ideal, because then the body of the function is no longer type-checked:

@overload
def str2int(s: str) -> Optional[int]: ...
@overload
def str2int(s: str, default: _T) -> Union[int, _T]: ...
def str2int(s, default=None):
    ...  # the body is no longer type-checked

I think this raises the bar for type-checking newbies.
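One way to keep the body checked, offered here as a sketch rather than an official recommendation, is to give the implementation a signature that is broader than the overloads but still generic, e.g. `Optional[_T]`. mypy then checks the body against that signature, while callers see only the overloads:

```python
from typing import Optional, TypeVar, Union, overload

_T = TypeVar("_T")


@overload
def str2int(s: str) -> Optional[int]: ...
@overload
def str2int(s: str, default: _T) -> Union[int, _T]: ...
def str2int(s: str, default: Optional[_T] = None) -> Union[int, _T, None]:
    # The body is type-checked against this broader implementation signature.
    try:
        return int(s)
    except ValueError:
        return default
```

This avoids the separate helper function, though it still needs one overload per combination of omitted defaults.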

This comes up a lot (and will likely come up even more if we ever get around to making --no-implicit-optional the default), so I'd like to spew some thoughts in the hope that it leads to something.

I believe what Anders is proposing is:

- When checking a definition ignore the default value, but keep it around for later

- Run mypy's existing type inference at call sites (crucially, continuing to ignore the default value)

- Check that the default value's type is a subtype of whatever we solved for T (assuming x: T = ..., but doing the appropriate thing for x: Optional[T] = ... and so on)

This is different from what I believe Ivan is proposing in that it would cause foo to behave unlike bar in the following:

def foo(a: T = 42, b: T = 42) -> List[T]: ...
def bar(a: T, b: T) -> List[T]: ...

reveal_type(foo("asdf"))  # type error for Anders, because default value 42 is not a str
reveal_type(bar("asdf", 42))  # reveals List[object]

Or if you're the kind of person who hates joins that end with object, maybe you'll find this more intuitive:

class X: ...
class Y(X): ...

def foo(a: T = X(), b: T = X()) -> List[T]: ...
def bar(a: T, b: T) -> List[T]: ...

reveal_type(foo(Y()))  # type error for Anders, because default value X() is not a Y
reveal_type(bar(Y(), X()))  # reveals List[X]

I think typevar lower bounds would result in behaviour generally more consistent with what mypy does elsewhere. Since Anders' proposal is less permissive than type var lower bounds, it's also more likely to be disruptive. But the differences here might be somewhat moot, since I reckon the implementations would have a good bit in common: for starters, both will have to modify parsing and CallableType to keep track of default values. Type var lower bounds would need to do some subtraction to associate a CallableType.variable in cases like Optional[T] (or worse, List[T]), but Anders' would have to do something similar in applytype. Typevar lower bounds would probably have to touch more code, though.

A fun side note about both solutions: making default values matter to type checking might mean we'd sometimes want to use them in stubs. Although with lower bounds, we could add a lower bound attribute to typing.TypeVar which stubs could use (instead of inferring it from default values)...

I believe what Anders is proposing is:

- When checking a definition ignore the default value, but keep it around for later

- Run mypy's existing type inference at call sites (crucially, continuing to ignore the default value)

No, at the call site we know whether the default value is being used, so we don’t need to ignore it; we can allow it to participate in type inference, if it is being used.

To put it another way, we can treat

def foo(a: T = X(), b: T = X()) -> List[T]: ...

foo(Y())

as syntactic sugar for

a_default = X()

b_default = X()

def foo(a: T, b: T) -> List[T]: ...

foo(Y(), b_default)

Thanks for clarifying! Just to make sure I get things straight, this means the foo(a="foo") and foo(b="foo") examples in your original comment wouldn't in fact be a type error, since T = object is a totally valid inference.

In which case I'm not sure I see a difference in semantics between lower bounded typevars and what you're proposing; it's more just a difference in implementation (in one case, the lower bound is something we automatically add to the TypeVarDef, in the other the lower bound is an additional constraint we infer from the CallableType).

Yes, strictly speaking, you’re right about _T = object in the first example. I’ve edited it to use invariant List[_T] types to avoid that possibility. The second example stands as written because the generic type parameter is explicitly provided.

Lower bounded typevars do not solve the same problem; they don’t allow you to provide defaults unless the defaults would be valid at all call sites, even call sites where the defaults are not used. In the first example, I want foo(a=["foo"], b=["foo"]) to be a List[str], not a List[object], even though 42 is not a str. In the second example, I want RawConfigParser[UserMapping](UserMapping) to be allowed even though UserMapping isn’t a subclass of OrderedDict.

Seems like the current workaround is to wrap the parameter in an optional and handle the default in code, or to use overloads.

Optional is probably better due to its terseness compared to the overloads.

Is there any update on this in general? These kinds of things do make it harder to get larger adoption.

@efagerberg Optional is not a workaround at all, neither for the caller side nor for the callee side.

from typing import Optional, TypeVar

_T = TypeVar("_T")

def foo(a: Optional[_T] = None) -> _T:
    if a is None:
        return 42  # error: Incompatible return value type (got "int", expected "_T")
    return a

b = foo()  # error: Need type annotation for 'b'
c: str = foo()  # false negative!
c.lower()  # AttributeError: 'int' object has no attribute 'lower'

Overloads sort of help, but they are not checked on the callee side, and have the exponential explosion issue explained above.

@hynekcer I cannot get the suggested workaround for class generic types running unless the TypeVar is limited to concrete types. Otherwise, as soon as the actual default parameter is used (i.e. drop the dict from construction), mypy will complain Need type annotation for "cursor" and reveal an Optional[Any] rather than Optional[list]. When I just change the order of types in the TypeVar, it breaks and I get the wrong type revealed.

I must say, among the many small annoyances this is by far the biggest typing issue I have encountered so far. I need to either compromise on actual non-typing functionality, i.e. no default arguments or a vastly different interface, or completely lose the generic typing for the class. You can't just slap a type: ignore on some small hidden statement, and there seems to be no viable workaround.

Consider the following:

from typing import TypeVar, Generic, overload, Type, Optional, List, TypedDict, Any, cast

T = TypeVar('T', str, int)

class BaseUpdater(Generic[T]):
    @overload
    def __init__(self) -> None: ...
    @overload
    def __init__(self, rowType: Type[T]) -> None: ...
    def __init__(self, rowType: Type = str) -> None:
        self.rowType: Type[T] = rowType  # this annotation is important
        pass

    def fun(self) -> T:
        return cast(T, self.rowType('42'))

class Updater(BaseUpdater[T]):
    pass

reveal_type(Updater(int).fun())  # int
reveal_type(Updater(str).fun())  # str

# depends on order str|int or int|str in T= ..... line !!! WHY ?!
# "Type=str" or "Type=int" is irrelevant in constructor (!)
reveal_type(Updater().fun())

Using T = TypeVar('T', bound=str|int) makes this scheme stop working: the last reveal_type shows Revealed type is "<nothing>".

Coming up on the fifth anniversary of this issue. Shouldn't this either be addressed or classified as "won't fix"? This limitation makes it overly complicated to annotate what should be a fairly basic case, so it would be good to know if it will ever change.

Edit: my mistake, I didn't reread the issue since I started following it years ago!

@markedwards Default values were recently drafted in PEP 696 slated for Python 3.12, and have been added to typing-extensions already.

Either this issue could be renamed to track its implementation or you could create one (here's an example issue I created for PEP 681 and the MyPy team was kind enough to update it as work progresses; you could copy that template).

The MyPy team has been doing amazing work with Python 3.11 issues. I am just guessing, but I imagine they will start on the Python 3.12 issues after the 3.11 issues.

@NeilGirdhar, fair enough. So then the simplified example in the original issue would become:

from typing_extensions import TypeVar

_T = TypeVar('_T', default=int)

def foo(a: _T = 42) -> _T:
    return a

Not confirmable yet: https://mypy-play.net/?mypy=master&python=3.12&flags=strict&gist=d083d1bbf2f8f9426f876866947834a0

@markedwards Looks fine to me (although this is a pointless use of defaults). Yes, as far as I know, no one implements PEP 696 yet.

as far as I know, no one implements PEP 696 yet.

Pyright has implemented PEP 696.

Pyright has implemented PEP 696.

Wow! Thanks for letting me know 😄

PEP 696 is different from this issue.

PEP 696 is about providing a type-level default for a type variable used in a generic type—example from the PEP:

T = TypeVar("T", default=int)

@dataclass
class Box(Generic[T]):
    value: T | None = None

This issue is about providing a value-level default for a function parameter whose type is generic—example from the description:

_T = TypeVar('_T')

def foo(a: _T = 42) -> _T:
    return a

To @andersk's point, I also don't see how PEP 696 makes any difference here. The TypeVar default does not address the core issue, which is that the default value might conflict with the type variable.

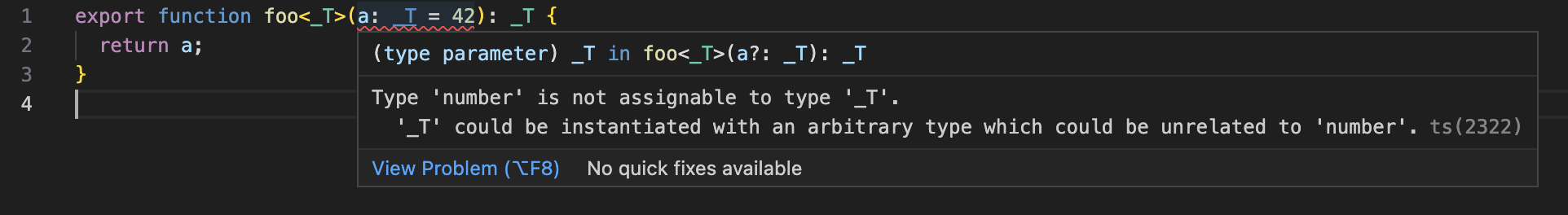

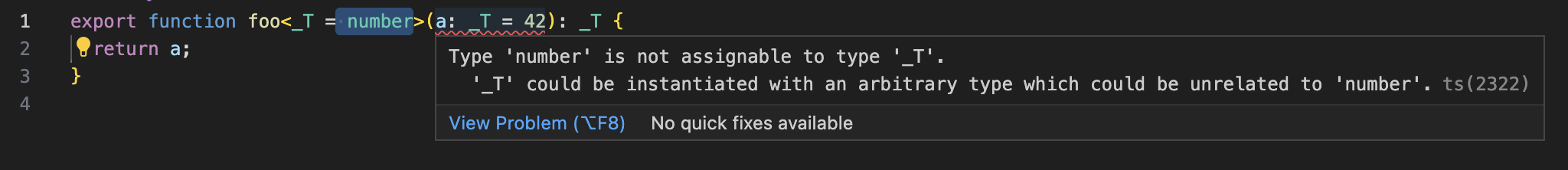

For what it's worth, TypeScript also does not support this pattern:

And adding a type variable default in TypeScript does not "fix" the issue:

That's not to say that Python typing is for any reason bound by what TypeScript can do, just that this limitation is not a peculiarity of Python or mypy.

It seems to me that a final decision should be made here and this should be documented so developers don't need to find this thread to sort it out.